Developer Blogs

Forum Discussion

Game Developer News

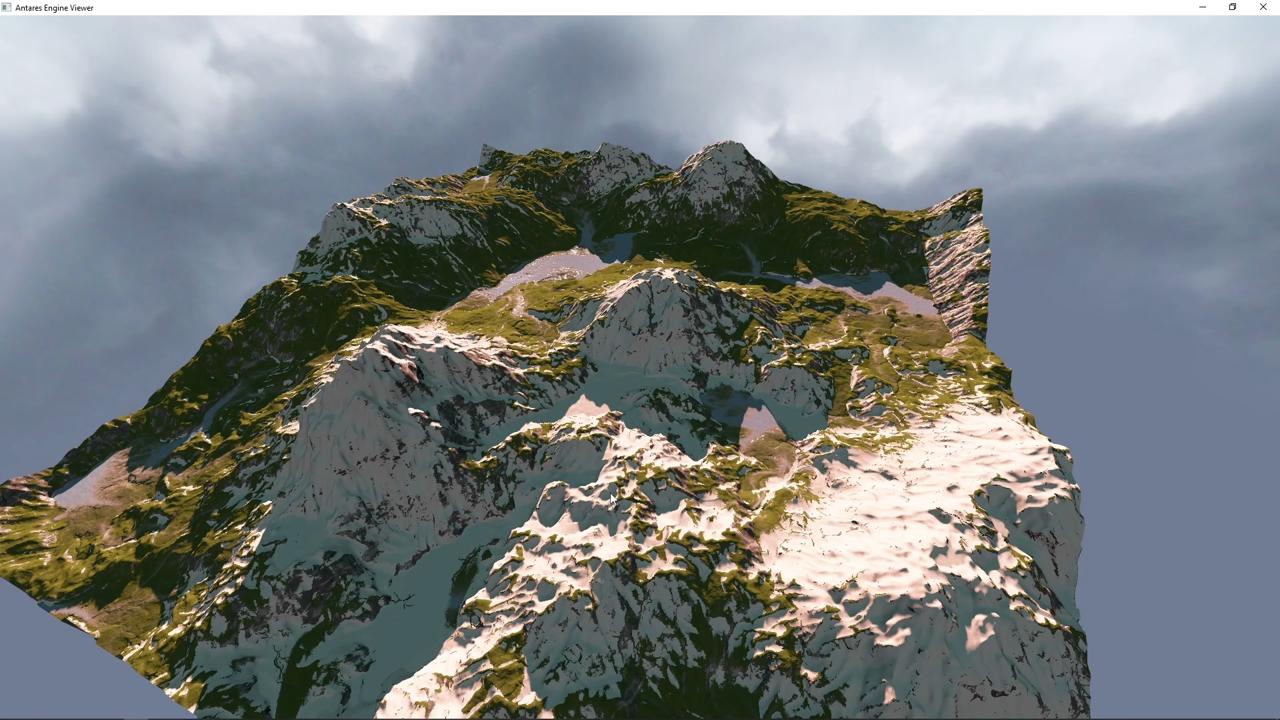

NeoAxis Game Engine 2024.1 Released

April 18, 2024 03:01 PM

Khronos Releases OpenXR 1.1 to Further Streamline Cross-Platform XR Development

April 15, 2024 01:47 PM

zephyr3d: Rendering engine for web browsers that supports WebGL and WebGPU.

March 21, 2024 10:16 AM

Latest GameDev Projects

Informal tutorial/blog series specifically around what I've learned over the years working with unr…

Explore and discover the mysteries aboard an interstellar freighter in Omega Warp, a 2D sci-fi acti…

Popular Blogs

Beginnings of the Wayward Programmer

12 entries

HunterGaming

28 entries

1000 Monkeys

5 entries

Experimental graphics technology

7 entries

Journal of mattdev

7 entries