I'm using the algorithm described in "A Simple and Practical Approach to SSAO".

I think the issue stems from how I calculate the view-space normals and positions. Is the view-space position supposed to be normalized?
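To make the normalization question concrete, here is a small CPU-side C++ sketch of the rescale that is commented out in `BuildPosNormMapPS` below, together with the radius computation from `OcclusionMap_PS`. It assumes `linstep` is a user-defined helper equal to `saturate((x - a) / (b - a))` (HLSL has no built-in `linstep`). The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Assumed definition of the linstep helper used in the commented-out line
// (not an HLSL intrinsic): maps x from [a, b] to [0, 1] and clamps.
float linstep(float a, float b, float x) {
    assert(b != a);
    float t = (x - a) / (b - a);
    return std::min(1.0f, std::max(0.0f, t));
}

// Mirrors "float rad = gSSAOSampleRadius / p.z;": the result is a
// texture-coordinate offset, so it depends on the units stored in p.z.
float sampleRadius(float ssaoSampleRadius, float viewZ) {
    assert(viewZ > 0.0f); // points in front of a D3D (left-handed) camera have z > 0
    return ssaoSampleRadius / viewZ;
}
```

With hypothetical near/far planes of 1 and 1000 and a pixel at view-space depth 10, `sampleRadius(1.0f, 10.0f)` is 0.1, but `sampleRadius(1.0f, linstep(1.0f, 1000.0f, 10.0f))` is about 111, far outside the [0, 1] texture-coordinate range, and a pixel exactly on the near plane would divide by zero. The radius therefore only makes sense relative to the units actually stored in the position buffer.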

Here is the code for storing view space normals and positions to two D3DFMT_A32B32G32R32F render targets:

Position and Normal Map Generation:

```hlsl
struct PosNormMap_PSIn
{
    float4 PosWVP : POSITION0; // Homogeneous (clip-space) position
    float4 PosWV  : TEXCOORD0; // View-space position
    float4 NormWV : TEXCOORD1; // View-space normal
};

struct PosNormMap_PSOut // Two 128-bit (D3DFMT_A32B32G32R32F) render targets
{
    float4 Pos  : COLOR0; // View-space position
    float4 Norm : COLOR1; // View-space normal
};

// Vertex Shader
PosNormMap_PSIn BuildPosNormMapVS(float3 Position : POSITION,
                                  float3 Normal   : NORMAL0)
{
    PosNormMap_PSIn Output;

    Output.PosWVP = mul(float4(Position, 1.0f), gWVP);
    Output.PosWV  = mul(float4(Position, 1.0f), gWV);
    // w = 0 drops the translation; only correct if gWV has no non-uniform scale
    Output.NormWV = mul(float4(Normal, 0.0f), gWV);

    return Output;
}

// Remap from [-1,1] into [0,1]. Not really necessary for floating-point textures, but required for integer textures
float4 NormalToTexture(float4 norm)
{
    return norm * 0.5f + 0.5f;
}

// Pixel Shader
PosNormMap_PSOut BuildPosNormMapPS(PosNormMap_PSIn Input)
{
    PosNormMap_PSOut Output;

    Output.Pos = Input.PosWV;
    //Output.Pos.xy = Output.Pos.xy / Output.Pos.w;
    //Output.Pos.z = linstep(gCameraNearFar.x, gCameraNearFar.y, Output.Pos.z); // Rescale from 0 to 1

    // Interpolated normals are generally no longer unit length, so renormalize, then remap into [0,1]
    Output.Norm = NormalToTexture(normalize(Input.NormWV));

    return Output;
}

technique BuildPosNormMap_Tech
{
    pass P0
    {
        vertexShader = compile vs_3_0 BuildPosNormMapVS();
        pixelShader  = compile ps_3_0 BuildPosNormMapPS();
    }
}
```
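The vertex shader uses the `w` component to distinguish points from directions. With HLSL's row-vector `mul(v, M)`, a position with w = 1 picks up the translation row of `gWV`, a normal with w = 0 does not, and `NormalToTexture` is undone by the `* 2.0f - 1.0f` in `getNormal` of the occlusion pass. The C++ sketch below reproduces that math on the CPU; `Vec4`, `Mat4`, `mulRow`, `encode` and `decode` are hypothetical names, not D3D or D3DX APIs.

```cpp
#include <cassert>

struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[4][4]; }; // m[row][col]

// Row vector times matrix, as HLSL mul(float4, float4x4) does:
// out[c] = sum over r of v[r] * M[r][c], so row 3 holds the translation.
Vec4 mulRow(const Vec4& v, const Mat4& M) {
    const float in[4] = { v.x, v.y, v.z, v.w };
    float out[4] = { 0.0f, 0.0f, 0.0f, 0.0f };
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += in[r] * M.m[r][c];
    return { out[0], out[1], out[2], out[3] };
}

// Per-component equivalent of NormalToTexture: [-1, 1] -> [0, 1].
float encode(float n) { return n * 0.5f + 0.5f; }

// Per-component equivalent of the "* 2.0f - 1.0f" in getNormal: [0, 1] -> [-1, 1].
float decode(float t) {
    assert(t >= 0.0f && t <= 1.0f); // encoded unit-normal components stay in [0, 1]
    return t * 2.0f - 1.0f;
}
```

For a pure translation by (5, 0, 10), `mulRow({1, 2, 3, 1}, T)` gives (6, 2, 13, 1) while `mulRow({0, 0, -1, 0}, T)` leaves the normal at (0, 0, -1, 0). The position, by contrast, is written without any remap, so its components are raw view-space distances rather than values in [0, 1].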

Here is the code for populating the occlusion buffer (D3DFMT_R32F):

```hlsl
uniform extern float2 gVectorNoiseSize;  // default: 64x64
uniform extern float2 gScreenSize;
uniform extern float  gSSAOSampleRadius; // default: 0.5-->2.0
uniform extern float  gSSAOBias;         // default: 0.05
uniform extern float  gSSAOIntensity;    // default: 3.0
uniform extern float  gSSAOScale;        // default: 1.0-->2.0

sampler PPSampPosition = sampler_state
{
    Texture = <gPPPosition>;
    MipFilter = POINT;
    MinFilter = POINT;
    MagFilter = POINT;
    MaxAnisotropy = 1;
    AddressU = CLAMP;
    AddressV = CLAMP;
};

sampler PPSampNormal = sampler_state
{
    Texture = <gPPNormal>;
    MipFilter = POINT;
    MinFilter = POINT;
    MagFilter = POINT;
    MaxAnisotropy = 1;
    AddressU = CLAMP;
    AddressV = CLAMP;
};

sampler PPSampVectorNoise = sampler_state
{
    Texture = <gPPVectorNoise>;
    MipFilter = LINEAR;
    MinFilter = LINEAR;
    MagFilter = LINEAR;
    MaxAnisotropy = 1;
    AddressU = WRAP;
    AddressV = WRAP;
};

// Vertex Shader
void OcclusionMap_VS(float3 pos0 : POSITION,
                     float2 tex0 : TEXCOORD0,
                     out float4 oPos0 : POSITION0,
                     out float2 oTex0 : TEXCOORD1)
{
    // Pass texture and position coords through to the PS (the quad is already in clip space)
    oTex0 = tex0;
    oPos0 = float4(pos0, 1.0f);
}

float3 getPosition(in float2 tex0)
{
    return tex2D(PPSampPosition, tex0).xyz;
}

float3 getNormal(in float2 tex0)
{
    // Undo the [0,1] remap applied by NormalToTexture
    return normalize(tex2D(PPSampNormal, tex0).xyz * 2.0f - 1.0f);
    //return tex2D(PPSampNormal, tex0).xyz * 2.0f - 1.0f;
}

float2 getRandom(in float2 tex0)
{
    // Scale UVs so the noise texture repeats every gVectorNoiseSize pixels (relies on WRAP addressing)
    return normalize(tex2D(PPSampVectorNoise, gScreenSize * tex0 / gVectorNoiseSize).xy * 2.0f - 1.0f);
}

float doAmbientOcclusion(in float2 tcoord, in float2 offset, in float3 p, in float3 cnorm)
{
    float3 diff = getPosition(tcoord + offset) - p;
    const float3 v = normalize(diff);
    const float d = length(diff) * gSSAOScale;
    return max(0.0, dot(cnorm, v) - gSSAOBias) * (1.0 / (1.0 + d)) * gSSAOIntensity;
}

// Pixel Shader
float4 OcclusionMap_PS(float2 tex0 : TEXCOORD1) : COLOR
{
    const float2 vec[4] = { float2(1,0), float2(-1,0), float2(0,1), float2(0,-1) };

    float3 p = getPosition(tex0);
    float3 n = getNormal(tex0);
    float2 rand = getRandom(tex0);

    float ao = 0.0f;
    float rad = gSSAOSampleRadius / p.z; // Sample radius in texture coordinates, shrinking with view-space depth

    // SSAO calculation
    const int iterations = 4;
    for (int j = 0; j < iterations; ++j)
    {
        float2 coord1 = reflect(vec[j], rand) * rad;
        // coord1 rotated by 45 degrees (0.707 ~= cos 45 = sin 45)
        // float2 coord2 = float2(coord1.x*0.707 - coord1.y*0.707, coord1.x*0.707 + coord1.y*0.707);
        float2 coord2 = float2(coord1.x - coord1.y, coord1.x + coord1.y) * 0.707f;

        ao += doAmbientOcclusion(tex0, coord1 * 0.25, p, n);
        ao += doAmbientOcclusion(tex0, coord2 * 0.5,  p, n);
        ao += doAmbientOcclusion(tex0, coord1 * 0.75, p, n);
        ao += doAmbientOcclusion(tex0, coord2,        p, n);
    }
    ao /= (float)iterations * 4.0; // Average over all 16 samples
    // END

    return float4(ao, 1.0f, 1.0f, 1.0f);
}

// Technique
technique OcclusionMap_Tech
{
    pass P0
    {
        // Specify the vertex and pixel shader associated with this pass.
        vertexShader = compile vs_3_0 OcclusionMap_VS();
        pixelShader  = compile ps_3_0 OcclusionMap_PS();
    }
}
```
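Because the occlusion math is plain arithmetic, it can be mirrored on the CPU to check individual pixels. The C++ sketch below follows `doAmbientOcclusion` and the sampling loop in `OcclusionMap_PS`; it is a hedged reference, not the article's code. `SSAOParams`, `PositionFetch`, `aoTerm` and `occlusion` are hypothetical names, texture reads are replaced by a callback, and it assumes D3D's left-handed view space, where visible points have z > 0. It also makes one aspect of the normalization question visible: `diff`, `d` and `rad` all come straight from the stored positions, so the falloff `1 / (1 + d)` and the sample radius are both expressed in whatever units the position buffer holds.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <functional>

struct V2 { float x, y; };
struct V3 { float x, y, z; };

// Stand-ins for the gSSAO* uniforms.
struct SSAOParams { float radius, bias, intensity, scale; };

// Hypothetical replacement for getPosition(): returns the view-space
// position stored at a texture coordinate.
using PositionFetch = std::function<V3(V2)>;

static float dot3(V3 a, V3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// HLSL reflect(i, n) = i - 2 * dot(n, i) * n, with n assumed unit length.
static V2 reflect2(V2 i, V2 n) {
    float d = 2.0f * (i.x * n.x + i.y * n.y);
    return { i.x - d * n.x, i.y - d * n.y };
}

// Same formula as doAmbientOcclusion().
float aoTerm(const PositionFetch& getPos, V2 tc, V2 offset, V3 p, V3 n, const SSAOParams& s) {
    V3 q = getPos({ tc.x + offset.x, tc.y + offset.y });
    V3 diff = { q.x - p.x, q.y - p.y, q.z - p.z };
    float len = std::sqrt(dot3(diff, diff));
    if (len == 0.0f) return 0.0f; // sample hit the same position; the shader has no such guard
    V3 v = { diff.x / len, diff.y / len, diff.z / len };
    float d = len * s.scale;
    return std::fmax(0.0f, dot3(n, v) - s.bias) * (1.0f / (1.0f + d)) * s.intensity;
}

// Same 4 directions x 4 taps as OcclusionMap_PS, averaged over 16 samples.
float occlusion(const PositionFetch& getPos, V2 tc, V3 p, V3 n, V2 rand, const SSAOParams& s) {
    const std::array<V2, 4> dirs = {{ {1, 0}, {-1, 0}, {0, 1}, {0, -1} }};
    assert(p.z > 0.0f);
    const float rad = s.radius / p.z;
    float ao = 0.0f;
    for (const V2& dir : dirs) {
        V2 r = reflect2(dir, rand);
        V2 c1 = { r.x * rad, r.y * rad };
        V2 c2 = { (c1.x - c1.y) * 0.707f, (c1.x + c1.y) * 0.707f }; // c1 rotated 45 degrees
        ao += aoTerm(getPos, tc, { c1.x * 0.25f, c1.y * 0.25f }, p, n, s);
        ao += aoTerm(getPos, tc, { c2.x * 0.5f, c2.y * 0.5f }, p, n, s);
        ao += aoTerm(getPos, tc, { c1.x * 0.75f, c1.y * 0.75f }, p, n, s);
        ao += aoTerm(getPos, tc, c2, p, n, s);
    }
    return ao / static_cast<float>(dirs.size() * 4);
}
```

For example, a camera-facing plane (`getPos` returning `{u, v, 5}`, normal `{0, 0, -1}`) gives 0, because every `diff` is perpendicular to the normal. A constant occluder one unit in front of the pixel gives `(1 - 0.05) * 1/(1 + 1) * 3 = 1.425` per tap with the default bias and intensity and a scale of 1, so the averaged result can exceed 1.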

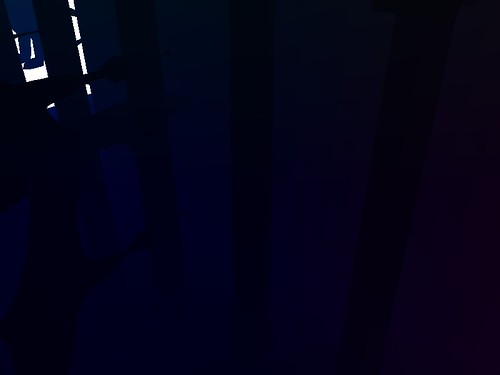

The normal buffer output looks OK, but the view-space position buffer output looks off. I think each object is close to the camera's near plane, so Z << 1, and that's why everything looks dark.
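How dark the position buffer looks depends on how its raw floats are displayed. Assuming it is drawn into an ordinary 8-bit back buffer, each channel is clamped to [0, 1] and quantized, so view-space depths well below 1 appear nearly black, negative x/y (left of or below the view axis) appear black, and anything above 1 saturates. The C++ sketch below models that conversion; `toDisplay` and `depthForDisplay` are hypothetical debug helpers, and dividing by the far-plane distance is just one possible remap for viewing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Models writing one float channel to an 8-bit UNORM target:
// clamp to [0, 1], then round to one of 256 levels.
std::uint8_t toDisplay(float channel) {
    float c = std::min(1.0f, std::max(0.0f, channel));
    return static_cast<std::uint8_t>(c * 255.0f + 0.5f);
}

// Debug-only remap of view-space depth into [0, 1] by the far-plane distance.
float depthForDisplay(float viewZ, float farZ) {
    assert(farZ > 0.0f);
    return viewZ / farZ;
}
```

For instance, a depth of 0.05 lands on level 13 of 255, close to black, while a depth of 10 would show as full white unless remapped (`depthForDisplay(10.0f, 1000.0f)` is 0.01).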

Also, what filters should be used for the position and normal buffers? When calculating the occlusion buffer I render a full-screen quad the same size as the position and normal buffers, so texels map 1:1 to pixels. I'm guessing POINT filtering with CLAMP addressing is correct?
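For a 1:1 pass with POINT filtering, a fetch is only unambiguous if each pixel center lands on a texel center. In Direct3D 9, pixel centers sit at integer screen coordinates while texel i has its center at (i + 0.5) / size, and `OcclusionMap_VS` passes the quad through without a half-pixel shift. The C++ sketch below works through that arithmetic; the helper names are hypothetical, and it assumes the quad's texcoords span [0, 1] across the render target.

```cpp
#include <cassert>
#include <cmath>

struct UV { float u, v; };

// Texcoord interpolated at the center of pixel (px, py) when the quad's
// texcoords span [0, 1]: px / width, which is the corner between two texels.
UV quadTexcoordAtPixel(int px, int py, int width, int height) {
    assert(width > 0 && height > 0);
    return { static_cast<float>(px) / width, static_cast<float>(py) / height };
}

// Half-texel correction (equivalent to shifting the quad by half a pixel):
// afterwards pixel (px, py) samples the center of texel (px, py).
UV halfTexelCorrect(UV uv, int width, int height) {
    return { uv.u + 0.5f / width, uv.v + 0.5f / height };
}

// Distance, in texels, from a texcoord to the nearest texel center.
// 0 means a POINT-filtered fetch is unambiguous; 0.5 means it sits on an edge.
float offCenter(float coord, int size) {
    float t = coord * size - 0.5f;
    return std::fabs(t - std::round(t));
}
```

With an 800 x 600 target, pixel (37, 11) interpolates to a texel corner without the correction and to the texel center with it. POINT filtering with CLAMP addressing is what the `PPSampPosition` and `PPSampNormal` samplers above already use.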

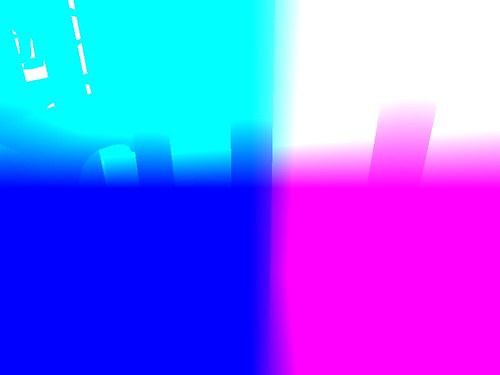

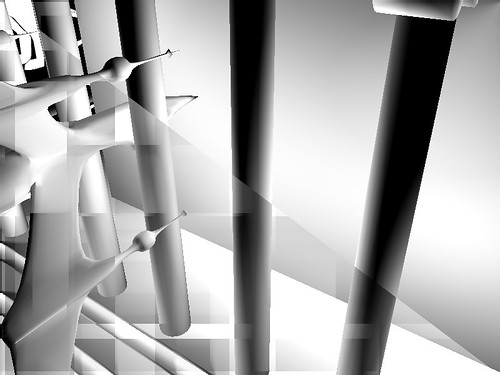

Viewspace Position Buffer (62.5% of original size):

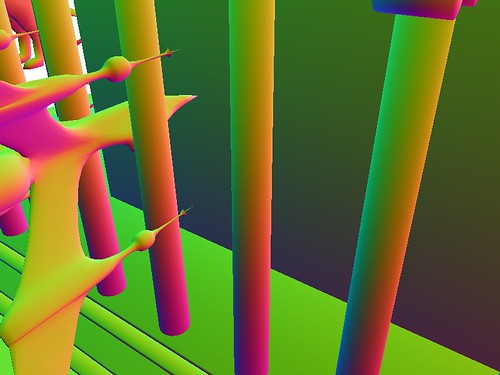

Viewspace Normal Buffer (62.5% of original size):

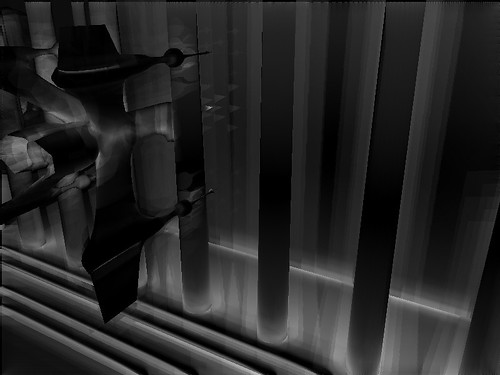

Occlusion Buffer (62.5% of original size):

Thanks in advance for the help!