I'm trying to implement it without using a normal buffer. In theory this should work well for the toon-shaded game I'm making, but I've run into a problem.


When I render a point light (as a sphere) in the lighting pass, I sample the depth map and compare it against the sphere's depth.

Any pixel where the scene is closer to the camera than the light gets clipped. Sadly, this only works for one side of the point light.
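To see why the test only limits one side, here is a minimal CPU-side sketch in Python that mirrors the shader's comparison along a single view ray. It assumes the depth map uses the same `1 - viewZ` encoding as the vertex shader, XNA's right-handed view space (visible points have negative z), and that only the sphere's front faces are rasterised because the camera is outside the light volume. The helper names and the sphere placement are hypothetical.

```python
def encode_depth(view_z: float) -> float:
    """Same encoding as the vertex shader: 1 - viewZ (view-space w is 1).

    Assumes a right-handed view space looking down -Z, so visible points have
    negative z and larger encoded values are farther from the camera.
    """
    return 1.0 - view_z


def passes_front_test(scene_depth: float, sphere_depth: float) -> bool:
    """Mirror of clip(depth < input.Depth ? -1 : 1): True if the light pixel is kept."""
    return not (scene_depth < sphere_depth)


# Hypothetical view ray through a light sphere of radius 2 centred at viewZ = -10,
# so the rasterised front face is at viewZ = -8.
sphere_front = encode_depth(-8.0)

for name, view_z in [("near cube", -5.0), ("inside volume", -10.0), ("far cube", -40.0)]:
    lit = passes_front_test(encode_depth(view_z), sphere_front)
    print(f"{name:14s} lit: {lit}")
```

The far cube passes the test exactly like a point inside the sphere: comparing against the front face alone puts no limit on how far behind the sphere the light reaches.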

BTW: I'm using XNA, but that's irrelevant to this problem...


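```hlsl
VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
{
    VertexShaderOutput output;

    float4 worldPosition = mul(input.Position, World);
    float4 viewPosition = mul(worldPosition, View);
    output.Position = mul(viewPosition, Projection);

    // View-space w is 1 for an affine view matrix, so this is simply 1 - viewZ.
    // It must match the encoding used when the Depth map was written.
    output.Depth = 1 - (viewPosition.z / viewPosition.w);

    return output;
}

float4 PixelShaderFunction(VertexShaderOutput input, float2 screenSpace : VPOS) : COLOR0
{
    // In D3D9, VPOS holds integer pixel coordinates; add 0.5 to sample the texel
    // centre, then divide by the render-target size (hard-coded to 1280x720).
    float2 texCoord = (screenSpace + 0.5) / float2(1280, 720);

    // tex2D returns a float4; the scene depth is in the first channel.
    float depth = tex2D(Depth, texCoord).r;

    // Discard the light pixel where the scene is closer to the camera than the sphere.
    clip(depth < input.Depth ? -1 : 1);

    return LightColor;
}
```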
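The pixel shader turns VPOS into a texture coordinate by dividing by the render-target size. Under Direct3D 9 (which XNA targets), VPOS holds integer pixel coordinates while texel centres lie half a texel in, so a half-pixel offset is usually added before the division. A small Python sketch of that mapping, assuming the hard-coded 1280x720 render target; `vpos_to_texcoord` is a hypothetical helper, not part of XNA.

```python
def vpos_to_texcoord(px: int, py: int, width: int = 1280, height: int = 720):
    """Map integer D3D9-style pixel coordinates to the UV of that texel's centre."""
    return ((px + 0.5) / width, (py + 0.5) / height)


# Without the offset, pixel 0 would map to UV 0.0, the texel's edge rather than its centre.
print(vpos_to_texcoord(0, 0))
print(vpos_to_texcoord(1279, 719))
```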


When I render with this code, the clip function works as expected, but I don't know how to stop the sphere from lighting parts of the scene that are behind it, beyond its reach.

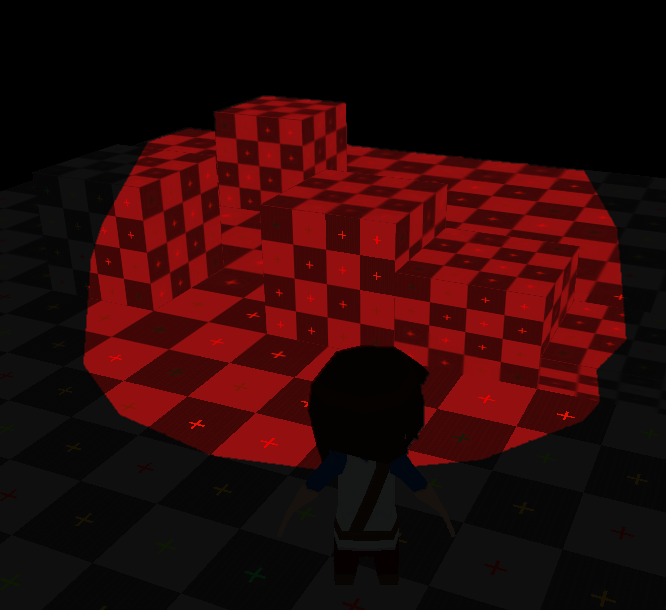

Here's a pic showing that the depth behind the sphere isn't being taken into account:

The farthest cube shouldn't be receiving any light, and the plane shouldn't be shaded that mush in the rear.

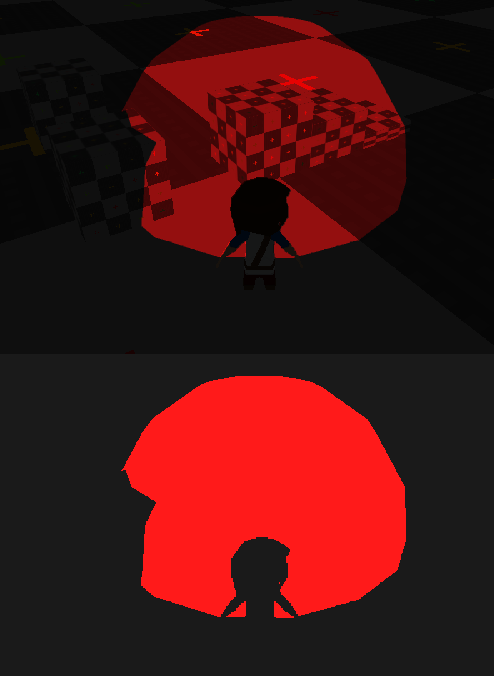

Here's another picture showing the problem a bit more visibly. Top is the final image, bottom is the light buffer:

I think I'm just missing the last step for determining the influence the light has, but I just can't figure it out!
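One common way to supply that missing step (a swapped-in technique, not something from the code above) is to stop relying on the sphere's depth alone: reconstruct the view-space position of the scene point from the depth map and compare its distance to the light centre with the light's radius. The Python sketch below shows the math for a single pixel. It assumes the depth map stores the same `1 - viewZ` value as the vertex shader, a symmetric perspective projection such as XNA's `Matrix.CreatePerspectiveFieldOfView`, and a linear falloff; all function names, the 45° field of view and the light placement are hypothetical.

```python
import math


def decode_view_z(stored_depth: float) -> float:
    """Invert the shader's encoding (depth = 1 - viewZ); assumes the depth map uses it too."""
    return 1.0 - stored_depth


def reconstruct_view_position(ndc_x, ndc_y, stored_depth, tan_half_fov_y, aspect):
    """View-space position of the scene point behind a pixel.

    Assumes a symmetric perspective projection looking down -Z, so view-space
    x and y grow linearly with the distance -viewZ from the camera.
    """
    view_z = decode_view_z(stored_depth)
    distance = -view_z
    return (
        ndc_x * tan_half_fov_y * aspect * distance,
        ndc_y * tan_half_fov_y * distance,
        view_z,
    )


def light_influence(scene_pos, light_pos, radius):
    """0 outside the light's radius (the pixel could be clipped), linear falloff inside."""
    d = math.dist(scene_pos, light_pos)
    if d >= radius:
        return 0.0
    return 1.0 - d / radius


# Hypothetical setup: light of radius 2 at viewZ = -10, straight ahead of the camera.
light = (0.0, 0.0, -10.0)
tan_half = math.tan(math.radians(45.0) / 2)
aspect = 1280 / 720

far_cube = reconstruct_view_position(0.0, 0.0, 41.0, tan_half, aspect)  # viewZ = -40
inside = reconstruct_view_position(0.0, 0.0, 11.5, tan_half, aspect)    # viewZ = -10.5
print(light_influence(far_cube, light, 2.0))  # far cube, well outside the radius
print(light_influence(inside, light, 2.0))    # half a unit from the light centre
```

In the pixel shader, the same distance could drive `clip()` for pixels outside the radius or scale `LightColor` by the falloff, so a surface far behind the sphere receives no light even though it passes the front-face depth test.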

Any help would be greatly appreciated.