Previously I had been using 3 render-targets for my light pre-pass implementation and 1 more render-target for shadows.

My G-Buffer / Render-targets = ~7.25 MB

- Depth-map: R32 1024 x 576

- Light-map: ARGB32 1024 x 576

- Output : ARGB32 1024 x 576

- Shadows: R16 512 x 512
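As a sanity check on the budget, here is a minimal C++ sketch that adds up the targets listed above. It assumes 4 bytes per pixel for R32 and ARGB32, 2 bytes per pixel for R16, and no driver padding or alignment; `RenderTarget`, `sizeInBytes`, `totalMiB` and the fifth "Normals" entry are names made up for illustration. Under those assumptions the four existing targets come to 7.25 MB, and a dedicated full-resolution normal target would bring the total to 9.5 MB.

```cpp
#include <cassert>
#include <cstddef>

// One render target: dimensions plus bytes per pixel
// (assumed: R32 and ARGB32 use 4 bytes, R16 uses 2).
struct RenderTarget {
    const char* name;
    std::size_t width;
    std::size_t height;
    std::size_t bytesPerPixel;
};

// Size of a single target in bytes, ignoring any padding or alignment.
constexpr std::size_t sizeInBytes(const RenderTarget& rt) {
    return rt.width * rt.height * rt.bytesPerPixel;
}

// Sum of the first `count` targets, converted to MB (1 MB = 1024 * 1024 bytes).
inline double totalMiB(const RenderTarget* targets, std::size_t count) {
    assert(targets != nullptr || count == 0);
    std::size_t bytes = 0;
    for (std::size_t i = 0; i < count; ++i) {
        bytes += sizeInBytes(targets[i]);
    }
    return static_cast<double>(bytes) / (1024.0 * 1024.0);
}

// The four targets from the list, plus a hypothetical fifth one for normals.
const RenderTarget kTargets[] = {
    {"Depth-map", 1024, 576, 4},
    {"Light-map", 1024, 576, 4},
    {"Output",    1024, 576, 4},
    {"Shadows",    512,  512, 2},
    {"Normals",   1024, 576, 4},  // hypothetical extra target, not in my current setup
};
```

Calling `totalMiB(kTargets, 4)` covers the current setup, and `totalMiB(kTargets, 5)` shows the cost of the extra target.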

What I was doing looked something like this:

- Draw scene depth to the depth-map

- Draw the shadow buffer to its render target

- Draw/Calculate AO and shadows using ONLY the depth-map, to the Light-map

- Blur the light-map using the output render-target as a temporary buffer

- Draw scene lighting using depth

- Draw the scene to the Output buffer using the light-map

- Ping-pong between the light-map and output for post-process effects

Now this looked FINE, but I wasn't satisfied. I had completely ignored normals and as a result was stuck with only simple point-lights.

So I got to thinking about how I could squeeze in a normal-buffer. The most obvious idea of just making a new render-target would leave me squeezed at ~9.5 MB, which is NOT OK!

So after much pondering I finally came up with a way to do it in the render-targets I already had!

I can use the SAME G-BUFFER!

What I'm doing now, and what I'm stuck on:

- Draw scene depth to the depth-map and scene Normals to the output render-target

- Draw the shadow buffer to its render target

- Draw/Calculate AO and shadows using ONLY the depth-map, to the Light-map

- Blur the light-map using the Shadow render-target as a temporary buffer (Both AO and Shadows are only a representation of lightness / darkness and can easily be represented as just 1 byte / pixel)

- Draw scene lighting using depth and Normals!

- Draw the scene to the Output buffer using the light-map

- Ping-pong between the light-map and output for post-process effects
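To check that the reuse plan above holds together, here is a rough C++ sketch that models each pass as a set of targets read and a set of targets written. The read/write sets are only my reading of the steps: I assume the blur is a two-pass (horizontal, then vertical) blur, that the AO/shadow pass samples the shadow buffer as well as depth, and that lights are blended into the light-map. `Pass`, `framePasses`, `noReadWriteOverlap` and `survivesUntil` are hypothetical names, and the final ping-pong step is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Bit flags for the four existing render targets.
enum Target : std::uint8_t { Depth = 1, LightMap = 2, Output = 4, Shadow = 8 };

struct Pass {
    const char* name;
    std::uint8_t reads;   // targets sampled as textures
    std::uint8_t writes;  // targets bound as render output
};

// The frame as described in the list above (read/write sets are assumptions).
inline std::vector<Pass> framePasses() {
    return {
        {"depth + normals",   0,              Depth | Output},  // normals parked in Output
        {"shadow buffer",     0,              Shadow},
        {"AO + shadows",      Depth | Shadow, LightMap},
        {"blur (horizontal)", LightMap,       Shadow},          // Shadow reused as scratch
        {"blur (vertical)",   Shadow,         LightMap},
        {"lighting",          Depth | Output, LightMap},        // needs depth and normals
        {"scene",             LightMap,       Output},          // normals overwritten here
    };
}

// True when no pass samples a target it is also rendering into.
inline bool noReadWriteOverlap(const std::vector<Pass>& passes) {
    for (const Pass& p : passes) {
        if ((p.reads & p.writes) != 0) return false;
    }
    return true;
}

// True when `target`, written by pass `producer`, is not overwritten
// by any pass strictly between `producer` and `consumer`.
inline bool survivesUntil(const std::vector<Pass>& passes, std::size_t producer,
                          std::size_t consumer, std::uint8_t target) {
    assert(producer < consumer && consumer < passes.size());
    for (std::size_t i = producer + 1; i < consumer; ++i) {
        if ((passes[i].writes & target) != 0) return false;
    }
    return true;
}
```

With this model, `survivesUntil(passes, 0, 5, Output)` asks whether the normals written in the first pass are still intact when the lighting pass (index 5) samples them.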

Now theoretically this should work, and it does... sort of.

I can go all the way through the render-cycle, and when I go to draw lights at step 5, I have both a depth and a normal buffer!

Unfortunately, when I try to draw lights taking into account the normal-buffer, the lights are calculated incorrectly.

After much pondering, searching and tweaking, I have yet to get over this little obstacle.

Previously I had only been using point-lights, calculating the attenuation from a world position reconstructed from depth, and that worked fine.

If I remember correctly, to take normals into account for an omni-directional light I just have to multiply my current output by the dot product of the lightVector and the surface normal, right?
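For reference, this is the diffuse term I have in mind, written as a small C++ sketch rather than shader code. It is the standard Lambert (N dot L) factor clamped to [0, 1], and it only makes sense when the surface position, the normal and the light position are all expressed in the same space. `Vec3` and the helper functions are stand-ins defined here, not part of my engine.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

inline Vec3 normalize(Vec3 v) {
    const double len = std::sqrt(dot(v, v));
    assert(len > 0.0);  // a zero-length vector has no direction
    return {v.x / len, v.y / len, v.z / len};
}

// Diffuse factor for a point light: the cosine of the angle between the
// surface normal and the direction from the surface to the light,
// clamped to [0, 1] like HLSL's saturate().
inline double lambert(Vec3 surfacePos, Vec3 surfaceNormal, Vec3 lightPos) {
    const Vec3 toLight = normalize(sub(lightPos, surfacePos));
    const double nDotL = dot(normalize(surfaceNormal), toLight);
    return std::min(1.0, std::max(0.0, nDotL));
}
```

A light directly along the normal gives 1, a light at a grazing angle gives 0, and a light behind the surface is clamped to 0.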

So here's the pixel shader for the point light:

[source lang="cpp"]float4 PixelShaderFunction(VertexShaderOutput input) : COLOR0
{
    float2 screenSpace = GetScreenCoord(input.ScreenSpace);

    // Depth of this light-volume fragment and the scene depth stored under it
    float lightDepth = input.Depth.x / input.Depth.y;
    float sceneDepth = tex2D(Depth, screenSpace).r;

    // Discard when the scene lies behind the light volume or no depth was written
    clip(sceneDepth > lightDepth || sceneDepth == 0 ? -1:1);

    // Rebuild the position from screen coordinates and depth,
    // transform it by iViewProjection and divide by w
    float4 position;
    position.x = screenSpace.x * 2 - 1;
    position.y = (1 - screenSpace.y) * 2 - 1;
    position.z = sceneDepth;
    position.w = 1.0f;
    position = mul(position, iViewProjection);
    position.xyz /= position.w;

    // Surface World Position is calculated correctly
    // Light position is in world space
    float Distance = distance(LightPosition, position);

    // Discard pixels outside the light's radius
    clip( Distance > LightRadius ? -1:1);

    // Unpack the normal from [0, 1] back to [-1, 1]
    float3 normal = (2.0f * tex2D(Normal, screenSpace).xyz) - 1.0f;
    float3 lightVector = normalize(LightPosition - position);

    // N dot L, scaled by a linear distance falloff and the light color
    float lighting = saturate(dot(normal, lightVector));
    return lighting * (1.1f - Distance / LightRadius) * LightColor;
}[/source]
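The position reconstruction at the top of that shader can be mirrored on the CPU to check the math in isolation. The sketch below is only an illustration under stated assumptions: a left-handed, D3D-style perspective projection with depth in [0, 1], an identity view matrix, and row vectors multiplied on the left as in HLSL's `mul(vector, matrix)`. `Vec4`, `Mat4`, `perspective`, `inversePerspective`, `project` and `reconstruct` are hypothetical helpers, and the projection parameters are arbitrary. One difference from the shader: it divides only `xyz` and leaves `w` as it was, while the sketch returns `w = 1`.

```cpp
#include <cassert>
#include <cmath>

struct Vec4 { double x, y, z, w; };
struct Mat4 { double m[4][4]; };

// Row vector times matrix, matching HLSL's mul(vector, matrix).
inline Vec4 mul(const Vec4& v, const Mat4& M) {
    return {
        v.x * M.m[0][0] + v.y * M.m[1][0] + v.z * M.m[2][0] + v.w * M.m[3][0],
        v.x * M.m[0][1] + v.y * M.m[1][1] + v.z * M.m[2][1] + v.w * M.m[3][1],
        v.x * M.m[0][2] + v.y * M.m[1][2] + v.z * M.m[2][2] + v.w * M.m[3][2],
        v.x * M.m[0][3] + v.y * M.m[1][3] + v.z * M.m[2][3] + v.w * M.m[3][3],
    };
}

// Left-handed perspective projection, depth mapped to [0, 1] (assumed layout).
inline Mat4 perspective(double xScale, double yScale, double zn, double zf) {
    assert(zf > zn && zn > 0.0);
    const double a = zf / (zf - zn);
    const double b = -zn * zf / (zf - zn);
    return {{{xScale, 0, 0, 0}, {0, yScale, 0, 0}, {0, 0, a, 1}, {0, 0, b, 0}}};
}

// Closed-form inverse of the matrix built by perspective().
inline Mat4 inversePerspective(double xScale, double yScale, double zn, double zf) {
    assert(zf > zn && zn > 0.0);
    const double a = zf / (zf - zn);
    const double b = -zn * zf / (zf - zn);
    return {{{1 / xScale, 0, 0, 0}, {0, 1 / yScale, 0, 0}, {0, 0, 0, 1 / b}, {0, 0, 1, -a / b}}};
}

// Forward path: position -> screen UV (y pointing down) and stored depth z / w.
inline void project(const Vec4& p, const Mat4& viewProjection,
                    double& u, double& v, double& depth) {
    const Vec4 clip = mul(p, viewProjection);
    u = (clip.x / clip.w) * 0.5 + 0.5;
    v = 1.0 - ((clip.y / clip.w) * 0.5 + 0.5);
    depth = clip.z / clip.w;
}

// Reverse path, same steps as the shader: UV + depth -> NDC -> inverse
// view-projection -> divide by w.
inline Vec4 reconstruct(double u, double v, double depth, const Mat4& inverseViewProjection) {
    Vec4 p{u * 2.0 - 1.0, (1.0 - v) * 2.0 - 1.0, depth, 1.0};
    p = mul(p, inverseViewProjection);
    return {p.x / p.w, p.y / p.w, p.z / p.w, 1.0};
}
```

Projecting a point with `project` and feeding the result to `reconstruct` should give the original point back, up to floating-point error.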

Here is the little bit of shader code that generates the Depth and Normal buffers:

[source lang="cpp"]PreVertexShaderOutput PreVertexShaderFunction(PreVertexShaderInput input)
{
    PreVertexShaderOutput output;

    float4 worldPosition = mul(input.Position, World);
    float4 viewPosition = mul(worldPosition, View);
    output.Position = mul(viewPosition, Projection);

    // Pass clip-space z and w so the pixel shader can compute z / w
    output.Depth.xy = output.Position.zw;

    // Normal transformed by the World matrix
    output.Normal = mul(input.Normal, World);

    return output;
}

PrePixelOutput PrePixelShaderFunction(PreVertexShaderOutput input)
{
    PrePixelOutput output;

    // Pack the normalized normal from [-1, 1] into [0, 1] for storage
    output.Normal.xyz = (normalize(input.Normal).xyz * 0.5f) + 0.5f;
    output.Normal.a = 1;

    // Store post-projection depth (z / w)
    output.Depth = input.Depth.x / input.Depth.y;

    return output;
}[/source]
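The pack/unpack pair used for the normals (`n * 0.5 + 0.5` when writing, `2 * x - 1` when reading) can be checked with a small C++ sketch. It assumes each component lands in an 8-bit channel of the ARGB32 target and is rounded to the nearest value on write; the exact rounding the hardware applies is an assumption, and `encodeComponent` / `decodeComponent` are illustrative names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Pack one normal component from [-1, 1] into an 8-bit channel:
// n * 0.5 + 0.5 maps to [0, 1], then scale to [0, 255] and round
// (round-to-nearest is assumed here).
inline std::uint8_t encodeComponent(double n) {
    assert(n >= -1.0 && n <= 1.0);
    return static_cast<std::uint8_t>(std::lround((n * 0.5 + 0.5) * 255.0));
}

// Unpack: the sampler returns stored / 255 in [0, 1], then 2 * x - 1.
inline double decodeComponent(std::uint8_t stored) {
    return 2.0 * (static_cast<double>(stored) / 255.0) - 1.0;
}
```

Under these assumptions the round trip is accurate to about 1/255 per component, and a component of exactly 0 does not survive unchanged (it comes back as roughly 0.004). The decoded vector is therefore generally not exactly unit length, so renormalizing after unpacking is a common precaution.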

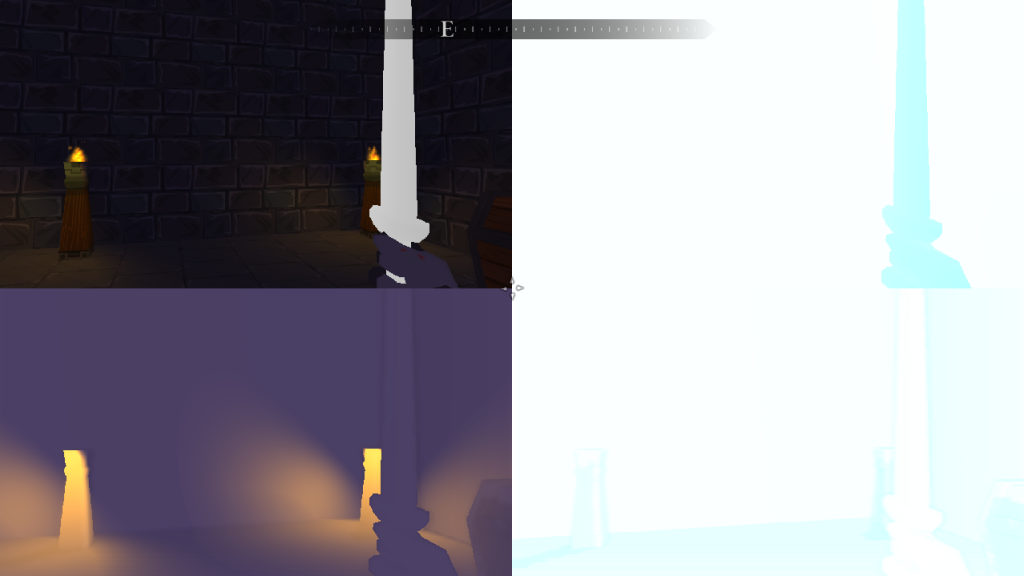

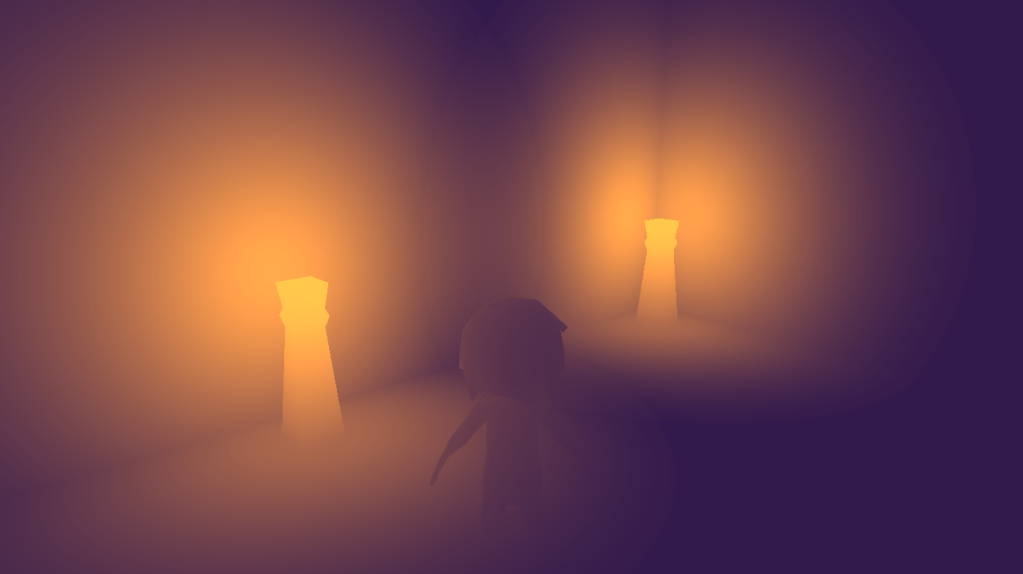

Now, a couple of pictures would be nice to illustrate what I'm seeing.

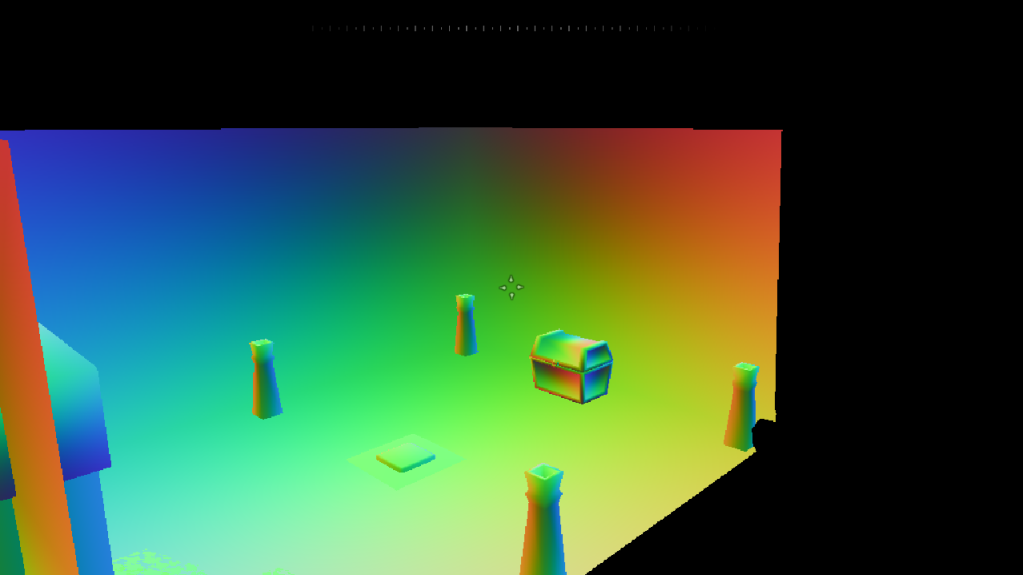

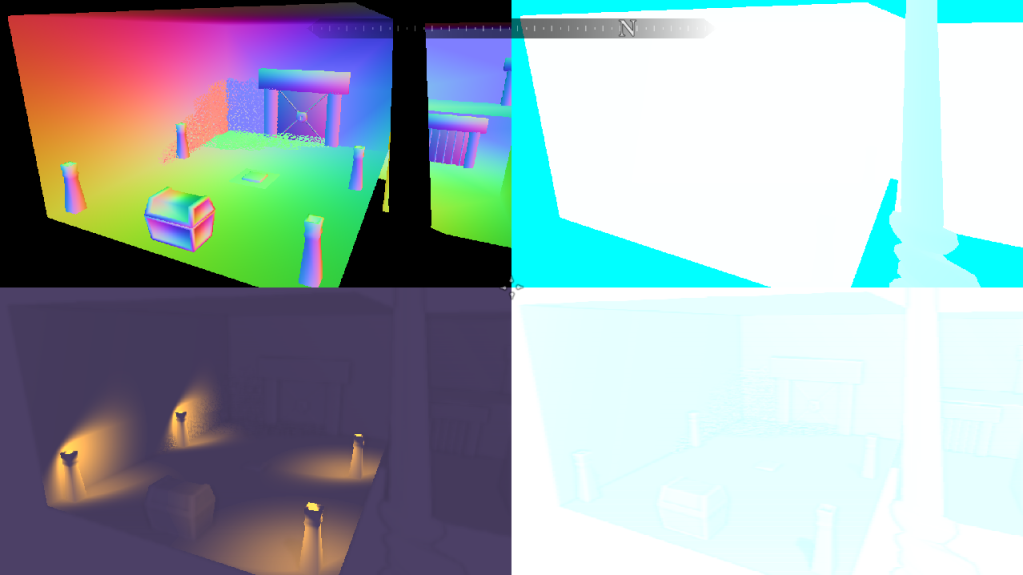

- I overrode the final scene drawing to get the normal buffer to show up for this pic, so I am getting some sort of normal value.

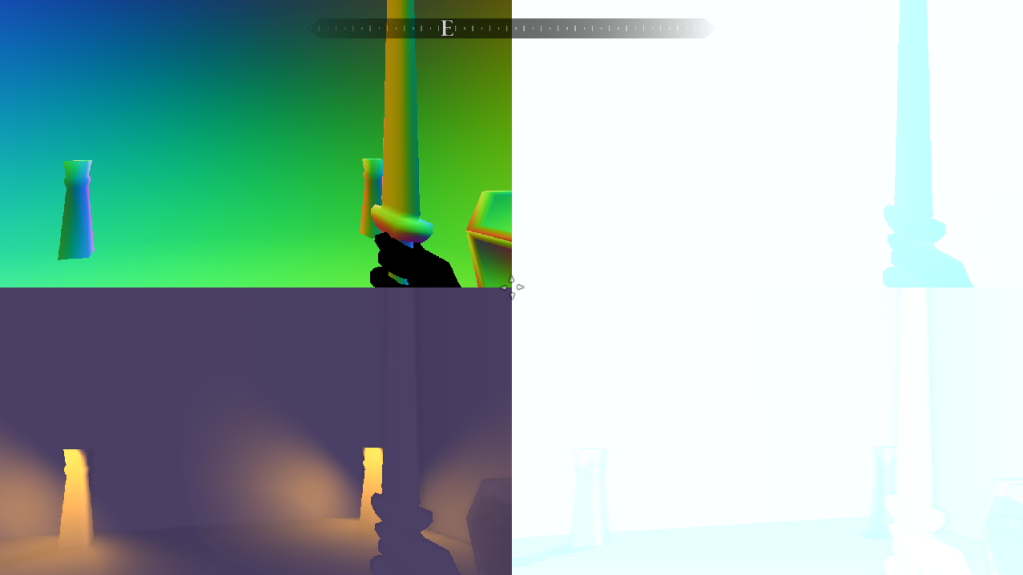

- And here's what it looks like with the incorrect lighting: the lights are all squeezed and distorted.

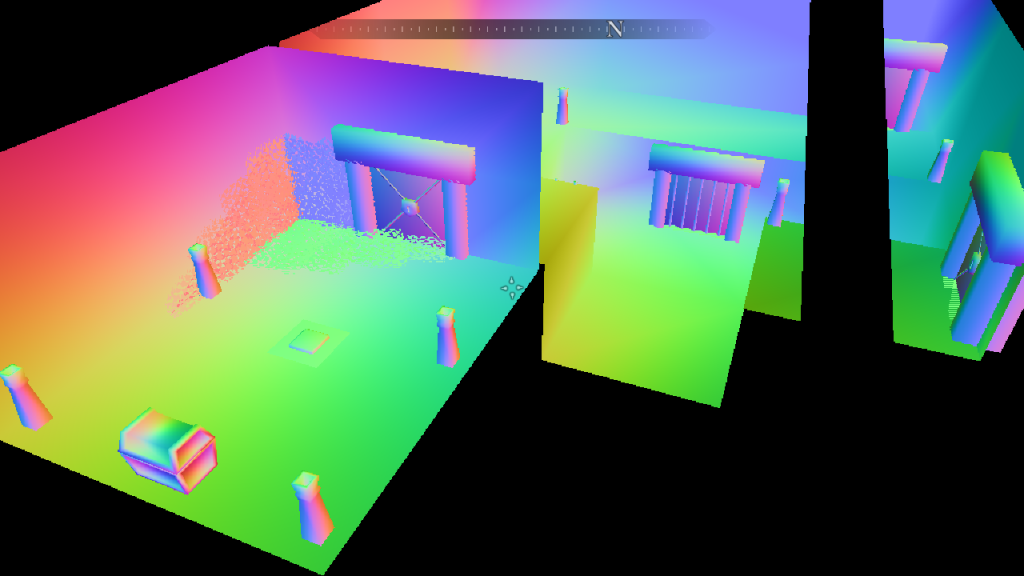

- Here's what it should/used to look like in the light-buffer

So even after typing ALL this up, I still haven't been able to figure this out...

Is it the normals? Is it the light? I don't know...

Any help would be greatly appreciated.

-Thanks in advance