The game itself will be in HD and, as you can guess, the problem is that the Kinect's depth map has a low resolution, so it wouldn't look good at that size. So I decided to use hq4x to smooth the Kinect's depth map, since this filter works very well on binary images.

Here are my steps:

- My depth map resolution is only 320x240 (that is sufficient for gesture tracking; a higher resolution may cause performance problems).

- Segment the user's body only (this is very easy using the user mask from OpenNI).

- I am using a texture buffer and, for speed, its dimensions should be powers of two, so it is 512x512 (I don't want to crop my depth map, so 512 was chosen as the nearest power of two above 320).

- Then the 320x240 depth image is downsampled to 80x60, so that after hq4x is applied the resolution is back to 320x240 and it fits in the 512x512 texture buffer.

- Apply hq4x upsampling to the 80x60 image.
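The size bookkeeping in the steps above can be sketched roughly as follows. This is only an illustration in pure Python: all function names are hypothetical, the user mask is a synthetic rectangle rather than real OpenNI output, and a plain nearest-neighbour enlargement stands in for hq4x, which is not implemented here.

```python
# Sketch of the resolution pipeline: 320x240 -> 80x60 -> 320x240 -> 512x512.
# All helper names are hypothetical; nothing here calls OpenNI or a real hq4x.

def next_pow2(n):
    """Smallest power of two that is >= n."""
    p = 1
    while p < n:
        p *= 2
    return p

def downsample_mask(mask, factor):
    """Shrink a binary mask; a block becomes 1 if at least half of it is set.
    Assumes both dimensions are divisible by `factor`."""
    h, w = len(mask), len(mask[0])
    out = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            total = sum(mask[y + dy][x + dx]
                        for dy in range(factor) for dx in range(factor))
            row.append(1 if 2 * total >= factor * factor else 0)
        out.append(row)
    return out

def upscale_nearest(mask, factor):
    """Placeholder for hq4x: nearest-neighbour enlargement, no smoothing."""
    out = []
    for row in mask:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

def pad_to_texture(mask, size):
    """Copy the mask into the top-left corner of a size x size buffer."""
    buf = [[0] * size for _ in range(size)]
    for y, row in enumerate(mask):
        buf[y][:len(row)] = row
    return buf

# Synthetic 320x240 user mask: a filled rectangle standing in for the body.
W, H = 320, 240
user_mask = [[1 if 100 <= x < 220 and 40 <= y < 200 else 0 for x in range(W)]
             for y in range(H)]

small = downsample_mask(user_mask, 4)   # 80x60
big = upscale_nearest(small, 4)         # back to 320x240
side = next_pow2(max(W, H))             # 512
texture = pad_to_texture(big, side)     # 512x512, mask in the top-left corner
```

With a real hq4x in place of `upscale_nearest`, only that one call would change; the sizes before and after stay the same.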

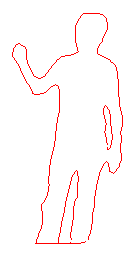

Here's my input:

And this is my output:

As you can see, hq4x does pretty well and the result is very good, but there's an issue: the resulting image still has sharp curvature along its edges.

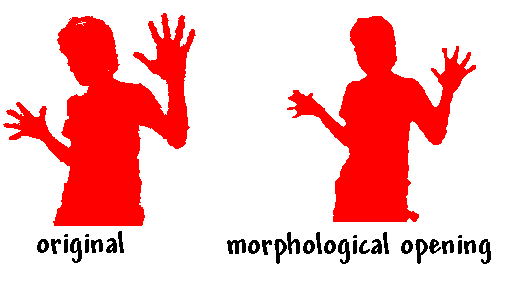

What I want looks like this:

Currently I am stuck on this problem and I'm still thinking about how to improve the result. I do have an idea to apply morphological erosion (or dilation) first, but I need to do more research.
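For reference, a minimal sketch of what binary erosion and dilation with a 3x3 square structuring element could look like on the user mask. The function names are hypothetical, and whether this step actually removes the sharp edges left by hq4x is untested; the sketch only shows the operations themselves. An opening (erosion followed by dilation) removes small protrusions, as in the toy example.

```python
# Binary morphology on a list-of-lists mask with a 3x3 square element.
# Hypothetical helper names; pixels outside the image are simply ignored.

def _neighbours(mask, y, x):
    """Values of the in-bounds pixels in the 3x3 window centred on (y, x)."""
    h, w = len(mask), len(mask[0])
    return [mask[ny][nx]
            for ny in range(y - 1, y + 2)
            for nx in range(x - 1, x + 2)
            if 0 <= ny < h and 0 <= nx < w]

def erode(mask):
    """A pixel stays 1 only if every pixel in its 3x3 window is 1."""
    return [[1 if all(_neighbours(mask, y, x)) else 0
             for x in range(len(mask[0]))]
            for y in range(len(mask))]

def dilate(mask):
    """A pixel becomes 1 if any pixel in its 3x3 window is 1."""
    return [[1 if any(_neighbours(mask, y, x)) else 0
             for x in range(len(mask[0]))]
            for y in range(len(mask))]

def opening(mask):
    """Erosion followed by dilation: removes thin spikes and specks."""
    return dilate(erode(mask))

# Toy example: a 3x3 square with a one-pixel spike on its right side.
square = [[1 if 2 <= y <= 4 and 2 <= x <= 4 else 0 for x in range(7)]
          for y in range(7)]
spiky = [row[:] for row in square]
spiky[3][5] = 1

opened = opening(spiky)  # the spike is gone, the square is kept
```

A square element tends to preserve square corners, so a larger or rounder element might be needed for smoother outlines; that is a guess rather than something verified here.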

Perhaps someone here has another idea.

Thanks!