Hi All,

I am a seasoned DX developer and, having scoured the web for decent deferred shader approaches, I am trying to adapt samples from the web to the forsaken tongue.

Deferred Rendering Tutorials I have seen:

- http://ogldev.atspace.co.uk/www/tutorial35/tutorial35.html - Uses Extra Depth Texture & Inefficient Stencil

- http://www.catalinzima.com/xna/tutorials/deferred-rendering-in-xna/creating-the-g-buffer/ - Uses Extra Depth Texture

- http://www.openglsuperbible.com/example-code/ (Deferred Rendering Demo) - Uses Extra Depth Texture

- http://mynameismjp.wordpress.com/2010/09/05/position-from-depth-3/ - Only snippets. I had limited success with the frag_depth "vertex / w" approach (with perspective screen-space distortions), and it fell flat otherwise.

- https://github.com/Circular-Studios/Dash - Looks great, but I was unable to inspect it because the dev tools debugger did not play nicely with D.

- http://www.humus.name/index.php?page=3D&&start=0 - No excess position buffers, no stencil buffers, advanced depth inspection to filter lights efficiently. It's beautiful!

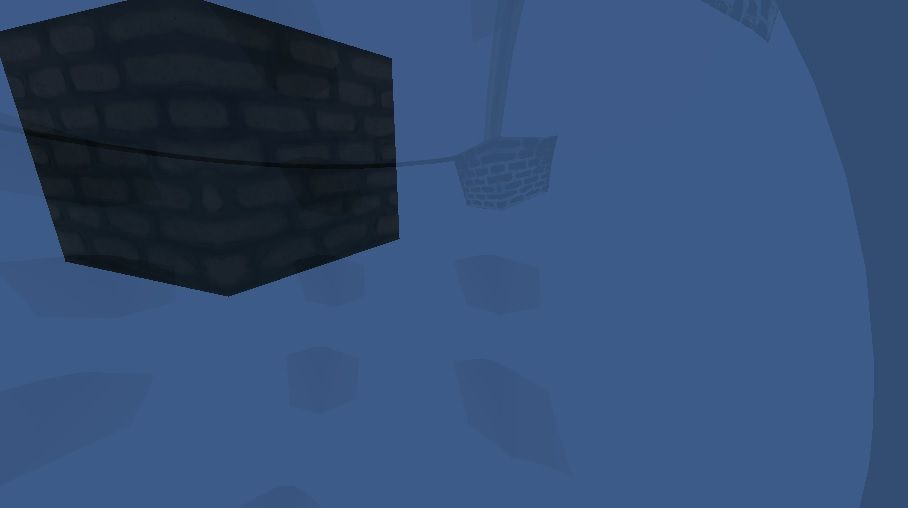

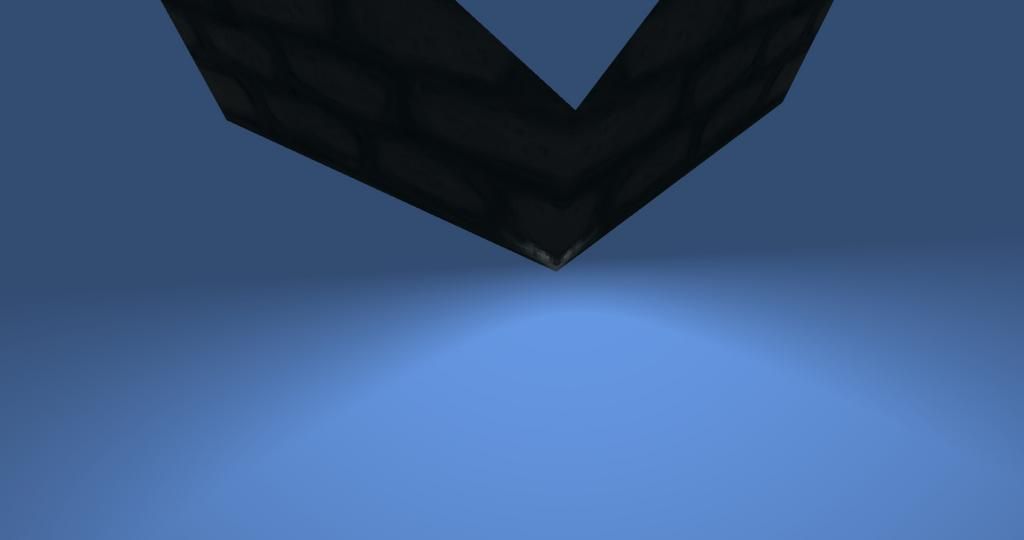

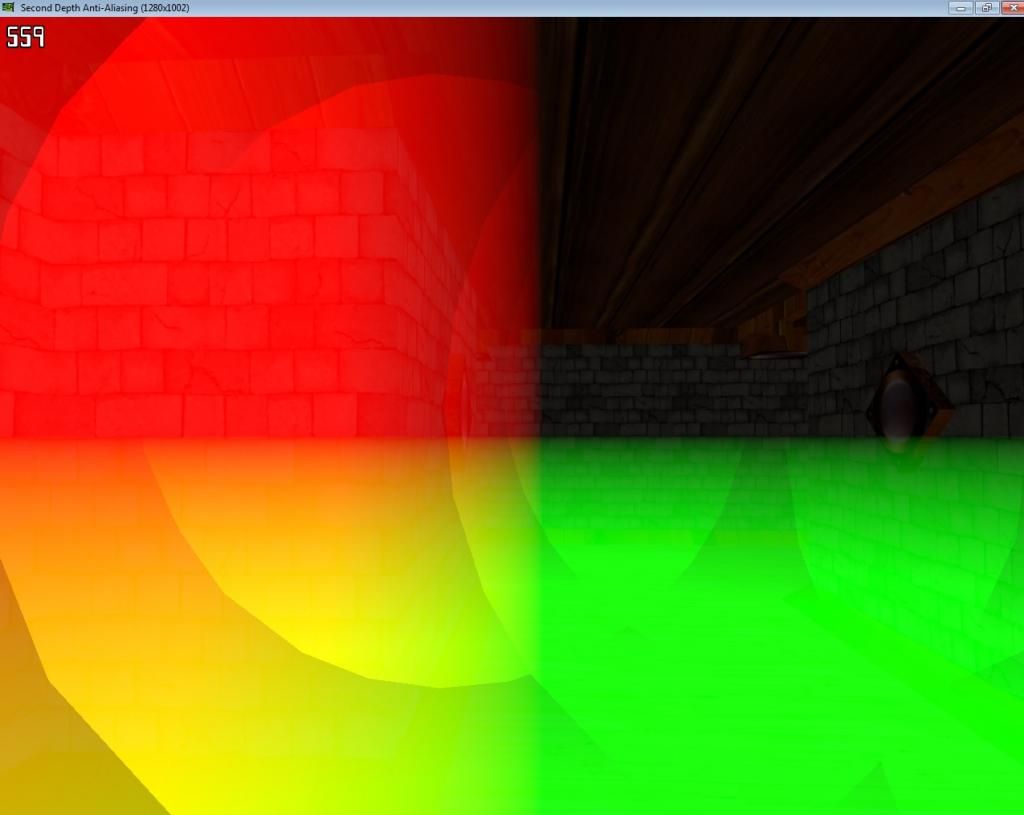

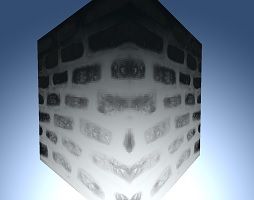

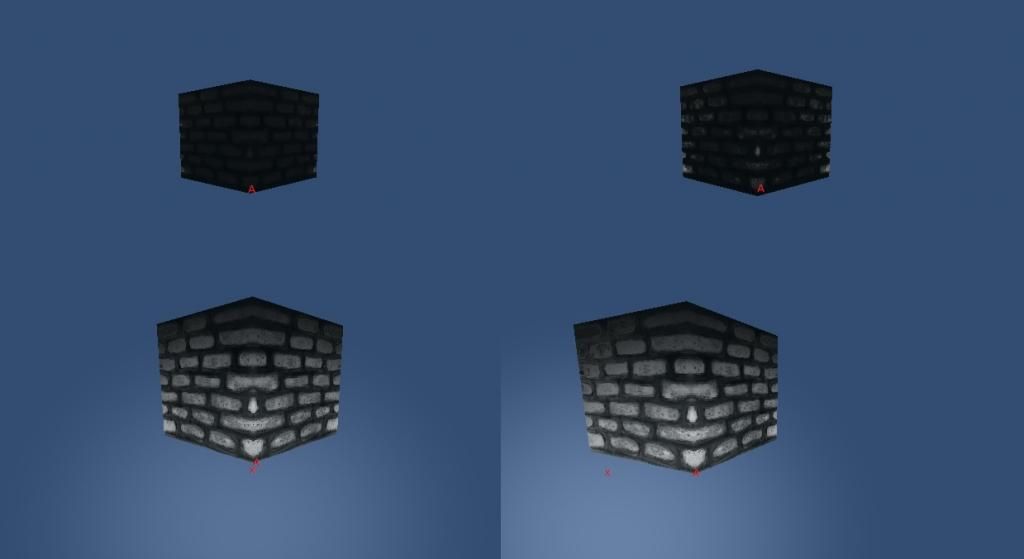

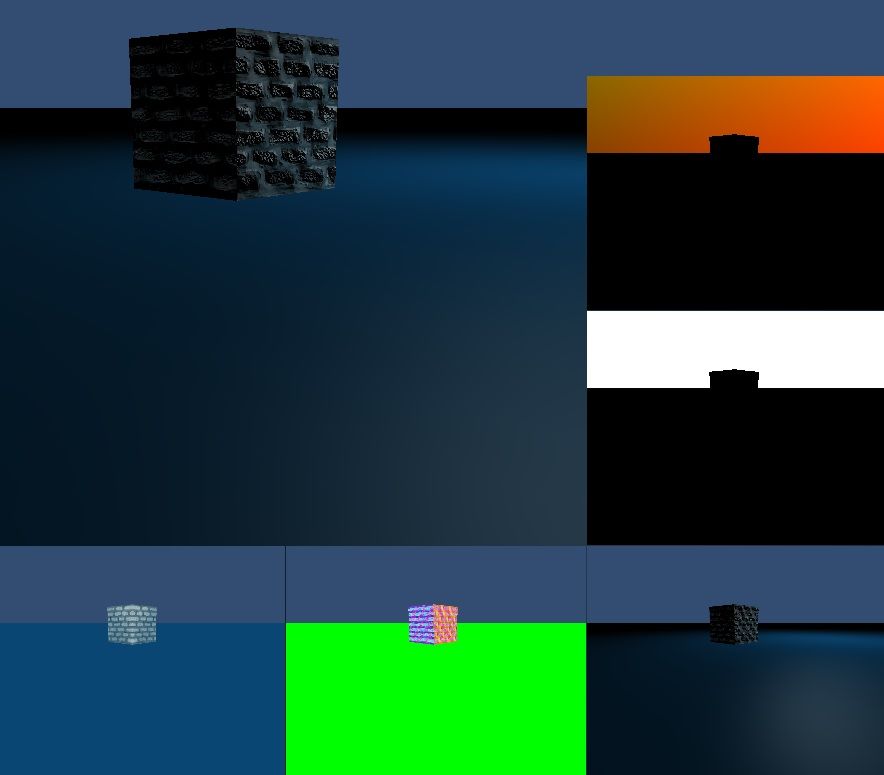

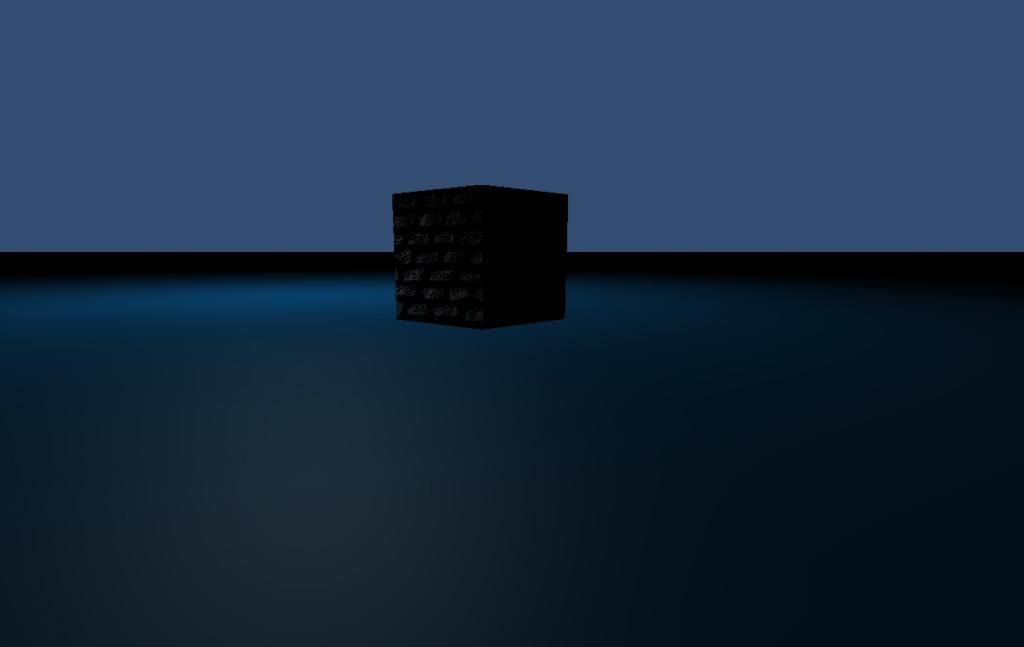

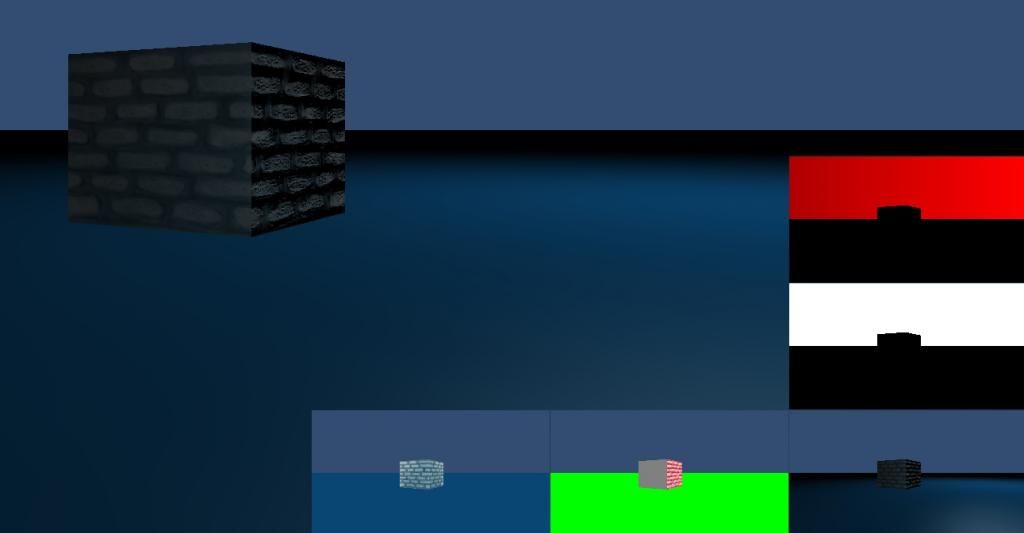

In my attempt to port the last sample (based on the Second-Depth Anti-Alias sample), I get world space wrapped inside the light. Clearly the primary fault is that the inverse view projection is invalid (the screenshots show the worst artifacts I could generate while rotating around a cube).

I intentionally use a very well-rounded light sphere to make the distortions clear.

Provided I can get the basics, like a world-space position, I can write beautiful shaders. I am struggling to get this point of reference, and without it I feel about 2cm tall.

Because I want this to be available for everyone (I hate the lack of GL sample code!) here is what I have so far (apologies that there is no sample app):

GeomVertShader:

#version 330

in vec3 inPos;

in vec2 inUV;

in vec3 inNormal;

in vec3 inBinormal;

in vec3 inTangent;

uniform mat4 wvpMatrix;

out vec4 mWVPPosition;

out vec3 pNormal;

out vec3 pBinormal;

out vec3 pTangent;

out vec2 texCoord;

void main(void) {

mWVPPosition = wvpMatrix * vec4(inPos, 1.0f);

gl_Position = mWVPPosition;

pNormal = inNormal;

pBinormal = inBinormal;

pTangent = inTangent;

texCoord = inUV;

}

GeomFragShader:

#version 330

in vec4 mWVPPosition;

in vec3 pNormal;

in vec3 pBinormal;

in vec3 pTangent;

in vec2 texCoord;

uniform mat4 wvpMatrix;

uniform sampler2D diffuseTexture;

uniform sampler2D normalTexture;

uniform sampler2D heightTexture;

uniform sampler2D specularTexture;

layout (location = 0) out vec4 colourOut;

layout (location = 1) out vec4 normalOut;

void main(void) {

vec3 bump = 2 * texture(normalTexture, texCoord).xyz -1;

vec3 normal = pTangent * bump.x + pBinormal * bump.y + pNormal * bump.z;

normal = normalize(normal);

colourOut = texture( diffuseTexture, texCoord );

// specular intensity

vec3 specularSample = texture( specularTexture, texCoord ).xyz;

colourOut.w = ( specularSample.x + specularSample.y + specularSample.z ) / 3;

normalOut.xyz = normal;

normalOut.w = 1;

}

PointLightVertShader:

#version 330

in vec3 inPos;

in vec2 inUV;

uniform mat4 wvpMatrix;

uniform mat4 ivpMatrix;

uniform vec2 zBounds;

uniform vec3 camPos;

uniform float invRadius;

uniform vec3 lightPos;

uniform vec3 lightColour;

uniform float lightRadius;

uniform float lightFalloff;

out vec4 mWVPPosition;

void main(void) {

vec3 position = inPos;

position *= lightRadius;

position += lightPos;

mWVPPosition = wvpMatrix * vec4(position, 1.0f);

gl_Position = mWVPPosition;

}

PointLightFragShader:

#version 330

in vec4 mWVPPosition;

uniform mat4 wvpMatrix;

uniform mat4 ivpMatrix;

uniform vec2 zBounds;

uniform vec3 camPos;

uniform float invRadius;

uniform vec3 lightPos;

uniform vec3 lightColour;

uniform float lightRadius;

uniform float lightFalloff;

uniform sampler2D diffuseTexture;

uniform sampler2D normalTexture;

uniform sampler2D depthTexture;

layout (location = 0) out vec4 colourOut;

void main(void) {

vec2 UV = mWVPPosition.xy;

float depth = texture(diffuseTexture, UV).x;

vec3 addedLight = vec3(0,0,0);

//if (depth >= zBounds.x && depth <= zBounds.y)

{

vec4 diffuseTex = texture(diffuseTexture, UV);

vec4 normal = texture(normalTexture, UV);

// Screen-space position

vec4 cPos = vec4(UV, depth, 1);

// World-space position

vec4 wPos = ivpMatrix * cPos;

vec3 pos = wPos.xyz / wPos.w;

// Lighting vectors

vec3 lVec = (lightPos - pos) * invRadius;

vec3 lightVec = normalize(lVec);

vec3 viewVec = normalize(camPos - pos);

// Attenuation that falls off to zero at light radius

float atten = clamp(1.0f - dot(lVec, lVec), 0.0, 1.0);

atten *= atten;

// Lighting

float colDiffuse = clamp(dot(lightVec, normal.xyz), 0, 1);

float specular_intensity = diffuseTex.w * 0.4f;

float specular = specular_intensity * pow(clamp(dot(reflect(-viewVec, normal.xyz), lightVec), 0.0, 1.0), 10.0f);

addedLight = atten * (colDiffuse * diffuseTex.xyz + specular);

}

colourOut = vec4(addedLight.xyz, 1);

}

Note that for the moment I am totally ignoring the optimisation of "if (depth >= zBounds.x && depth <= zBounds.y)" because I want to crack the basic reconstruction before experimenting with this.

Shader Binding:

Matrix4f inverseViewProjection = new Matrix4f();

Vector3f camPos = cameraController.getActiveCameraPos();

GL20.glUniform3f(shader.getLocCamPos(), camPos.x, camPos.y, camPos.z);

inverseViewProjection = cameraController.getActiveVPMatrixInverse();

//inverseViewProjection = inverseViewProjection.translate(new Vector3f(-1f, 1f, 0));

//inverseViewProjection = inverseViewProjection.scale(new Vector3f(2, -2, 1));

inverseViewProjection = inverseViewProjection.scale(new Vector3f(1f/engineParams.getDisplayWidth(), 1f/engineParams.getDisplayHeight(), 1));

GL20.glUniformMatrix4(shader.getLocmIVPMatrix(), false, OpenGLHelper.getMatrix4ScratchBuffer(inverseViewProjection));

float nearTest = 0, farTest = 0;

Matrix4f projection = new Matrix4f(cameraController.getCoreCameraProjection());

GL20.glUniformMatrix4(shader.getLocmWVP(), false, OpenGLHelper.getMatrix4ScratchBuffer(cameraController.getActiveViewProjectionMatrix()));

Vector2f zw = new Vector2f(projection.m22, projection.m23);

//Vector4f testLightViewSpace = new Vector4f(lightPos.getX(), lightPos.getY(), lightPos.getZ(), 1);

//testLightViewSpace = OpenGLHelper.columnVectorMultiplyMatrixVector((Matrix4f)cameraController.getActiveCameraView(), testLightViewSpace);

// Compute z-bounds

Vector4f lPos = OpenGLHelper.columnVectorMultiplyMatrixVector(cameraController.getActiveCameraView(), new Vector4f(lightPos.x, lightPos.y, lightPos.z, 1.0f));

float z1 = lPos.z + lightRadius;

//if (z1 > NEAR_DEPTH)

{

float z0 = Math.max(lPos.z - lightRadius, NEAR_DEPTH);

nearTest = (zw.x + zw.y / z0);

farTest = (zw.x + zw.y / z1);

if (nearTest > 1) {

nearTest = 1;

} else if (nearTest < 0) {

nearTest = 0;

}

if (farTest > 1) {

farTest = 1;

} else if (farTest < 0) {

farTest = 0;

}

GL20.glUniform3f(shader.getLocLightPos(), lightPos.getX(), lightPos.getY(), lightPos.getZ());

GL20.glUniform3f(shader.getLocLightColour(), lightColour.getX(), lightColour.getY(), lightColour.getZ());

GL20.glUniform1f(shader.getLocLightRadius(), lightRadius);

GL20.glUniform1f(shader.getLocInvRadius(), 1f/lightRadius);

GL20.glUniform1f(shader.getLocLightFalloff(), lightFalloff);

GL20.glUniform2f(shader.getLocZBounds(), nearTest, farTest);

}
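
For reference while I debug the z-bounds, here is a minimal sketch of how view-space z maps to the value stored in the depth texture for the OpenGL-style projection built by createProjection. It assumes view-space z is negative in front of the camera and the default glDepthRange(0, 1). The names DepthBoundsSketch, windowDepth and lightDepthBounds are hypothetical and not part of the engine code above; treat this as an illustration of the mapping rather than a drop-in fix.

```java
// Hypothetical helper, not part of the engine code above.
final class DepthBoundsSketch {

    /**
     * Maps a view-space z (negative in front of the camera, OpenGL convention)
     * to the [0, 1] depth-buffer value, assuming glDepthRange(0, 1).
     */
    static float windowDepth(float zView, float znear, float zfar) {
        float a = -(zfar + znear) / (zfar - znear);     // scales view z into clip z
        float b = -2f * zfar * znear / (zfar - znear);  // constant added to clip z
        float zClip = a * zView + b;
        float wClip = -zView;                           // from the -1 term of the projection
        float zNdc = zClip / wClip;                     // [-1, 1] inside the frustum
        return 0.5f * zNdc + 0.5f;                      // [0, 1] as stored in the depth texture
    }

    static float clamp(float v, float lo, float hi) {
        return Math.max(lo, Math.min(hi, v));
    }

    /** Returns {nearDepth, farDepth} for a light sphere centred at view-space z. */
    static float[] lightDepthBounds(float lightZView, float radius, float znear, float zfar) {
        // Clamp both ends to the frustum so the divide by -z stays well defined.
        float zNearest = clamp(lightZView + radius, -zfar, -znear);
        float zFarthest = clamp(lightZView - radius, -zfar, -znear);
        return new float[] {
            clamp(windowDepth(zNearest, znear, zfar), 0f, 1f),
            clamp(windowDepth(zFarthest, znear, zfar), 0f, 1f)
        };
    }

    public static void main(String[] args) {
        float[] bounds = lightDepthBounds(-10f, 2f, 0.1f, 100f);
        System.out.println("near = " + bounds[0] + ", far = " + bounds[1]);
    }
}
```

With the layout used by createProjection, the -2 * zfar * znear / (zfar - znear) term is stored in m32 and m23 holds -1, so building zw from (m22, m23) may not give the two coefficients this mapping needs.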

The line "inverseViewProjection = cameraController.getActiveVPMatrixInverse();" depends on the inverses of these two matrices multiplied together:

View Matrix:

public void updateViewMatrix(Matrix4f coreViewMatrix) {

Matrix4f.setIdentity(coreViewMatrix);

if (lookAtVector.length() != 0) {

lookAtVector.normalise();

}

Vector3f.cross(up, lookAtVector, right);

if (right.length() != 0) {

right.normalise();

}

Vector3f.cross(lookAtVector, right, up);

if (up.length() != 0) {

up.normalise();

}

coreViewMatrix.m00 = right.x;

coreViewMatrix.m01 = up.x;

coreViewMatrix.m02 = lookAtVector.x;

coreViewMatrix.m03 = 0;

coreViewMatrix.m10 = right.y;

coreViewMatrix.m11 = up.y;

coreViewMatrix.m12 = lookAtVector.y;

coreViewMatrix.m13 = 0;

coreViewMatrix.m20 = right.z;

coreViewMatrix.m21 = up.z;

coreViewMatrix.m22 = lookAtVector.z;

coreViewMatrix.m23 = 0;

//Inverse dot from eye position

coreViewMatrix.m30 = -Vector3f.dot(eyePosition, right);

coreViewMatrix.m31 = -Vector3f.dot(eyePosition, up);

coreViewMatrix.m32 = -Vector3f.dot(eyePosition, lookAtVector);

coreViewMatrix.m33 = 1;

}

Projection Matrix:

public static void createProjection(Matrix4f projectionMatrix, float fov, float aspect, float znear, float zfar) {

float scale = (float) Math.tan((Math.toRadians(fov)) * 0.5f) * znear;

float r = aspect * scale;

float l = -r;

float t = scale;

float b = -t;

projectionMatrix.m00 = 2 * znear / (r-l);

projectionMatrix.m01 = 0;

projectionMatrix.m02 = 0;

projectionMatrix.m03 = 0;

projectionMatrix.m10 = 0;

projectionMatrix.m11 = 2 * znear / (t-b);

projectionMatrix.m12 = 0;

projectionMatrix.m13 = 0;

projectionMatrix.m20 = (r + l) / (r-l);

projectionMatrix.m21 = (t+b)/(t-b);

projectionMatrix.m22 = -(zfar + znear) / (zfar-znear);

projectionMatrix.m23 = -1;

projectionMatrix.m30 = 0;

projectionMatrix.m31 = 0;

projectionMatrix.m32 = -2 * zfar * znear / (zfar - znear);

projectionMatrix.m33 = 0;

}
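
To check the matrices independently of the shaders, a CPU-side round trip can help: project a known world position to (u, v, depth) in [0, 1], then reconstruct it with the inverse view-projection. The sketch below is self-contained and uses plain arrays instead of LWJGL. The class DepthReconstructionSketch and all its methods are hypothetical, and the view matrix is a simplifying assumption (an unrotated camera looking down -z). The point it illustrates is that both the screen coordinates and the depth sample are remapped from [0, 1] to [-1, 1] before the inverse view-projection is applied.

```java
// Hypothetical CPU-side check; none of these names exist in the engine above.
final class DepthReconstructionSketch {

    /** OpenGL-style perspective matrix, written row by row, used as M * v. */
    static double[][] perspective(double fovDeg, double aspect, double n, double f) {
        double t = 1.0 / Math.tan(Math.toRadians(fovDeg) * 0.5);
        return new double[][] {
            { t / aspect, 0, 0, 0 },
            { 0, t, 0, 0 },
            { 0, 0, -(f + n) / (f - n), -2 * f * n / (f - n) },
            { 0, 0, -1, 0 } };
    }

    /** View matrix for an unrotated camera at the eye, looking down -z (an assumption). */
    static double[][] translateView(double ex, double ey, double ez) {
        return new double[][] {
            { 1, 0, 0, -ex }, { 0, 1, 0, -ey }, { 0, 0, 1, -ez }, { 0, 0, 0, 1 } };
    }

    static double[][] multiply(double[][] a, double[][] b) {
        double[][] r = new double[4][4];
        for (int i = 0; i < 4; i++)
            for (int j = 0; j < 4; j++)
                for (int k = 0; k < 4; k++)
                    r[i][j] += a[i][k] * b[k][j];
        return r;
    }

    static double[] transform(double[][] m, double[] v) {
        double[] r = new double[4];
        for (int i = 0; i < 4; i++)
            for (int k = 0; k < 4; k++)
                r[i] += m[i][k] * v[k];
        return r;
    }

    /** Gauss-Jordan inverse with partial pivoting; assumes m is invertible. */
    static double[][] invert(double[][] m) {
        double[][] a = new double[4][8];
        for (int i = 0; i < 4; i++) {
            System.arraycopy(m[i], 0, a[i], 0, 4);
            a[i][4 + i] = 1.0;
        }
        for (int col = 0; col < 4; col++) {
            int pivot = col;
            for (int row = col + 1; row < 4; row++)
                if (Math.abs(a[row][col]) > Math.abs(a[pivot][col])) pivot = row;
            double[] tmp = a[col]; a[col] = a[pivot]; a[pivot] = tmp;
            double p = a[col][col];
            for (int j = 0; j < 8; j++) a[col][j] /= p;
            for (int row = 0; row < 4; row++) {
                if (row == col) continue;
                double factor = a[row][col];
                for (int j = 0; j < 8; j++) a[row][j] -= factor * a[col][j];
            }
        }
        double[][] inv = new double[4][4];
        for (int i = 0; i < 4; i++) System.arraycopy(a[i], 4, inv[i], 0, 4);
        return inv;
    }

    /** World position -> {u, v, depth}, each in [0, 1], as a G-buffer pass would store it. */
    static double[] toScreen(double[][] viewProj, double[] world) {
        double[] clip = transform(viewProj, new double[] { world[0], world[1], world[2], 1.0 });
        return new double[] {
            0.5 * clip[0] / clip[3] + 0.5,
            0.5 * clip[1] / clip[3] + 0.5,
            0.5 * clip[2] / clip[3] + 0.5 };
    }

    /** {u, v, depth} in [0, 1] -> world position via the inverse view-projection. */
    static double[] toWorld(double[][] invViewProj, double[] screen) {
        // Remap all three components back to NDC [-1, 1] before unprojecting.
        double[] ndc = { 2 * screen[0] - 1, 2 * screen[1] - 1, 2 * screen[2] - 1, 1.0 };
        double[] w = transform(invViewProj, ndc);
        return new double[] { w[0] / w[3], w[1] / w[3], w[2] / w[3] };
    }

    public static void main(String[] args) {
        double[][] viewProj = multiply(perspective(60, 16.0 / 9.0, 0.1, 100),
                                       translateView(0, 1, 5));
        double[] screen = toScreen(viewProj, new double[] { 1, 2, -3 });
        double[] back = toWorld(invert(viewProj), screen);
        System.out.printf("screen = (%.4f, %.4f, %.6f)%n", screen[0], screen[1], screen[2]);
        System.out.printf("world  = (%.4f, %.4f, %.4f)%n", back[0], back[1], back[2]);
    }
}
```

If the same round trip fails when fed the engine's own view and projection matrices, the fault is probably in the matrices or their inverse rather than in the fragment shader.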

TLDR:

Please help me diagnose what is wrong with the lighting in the pictures and code above. My holy grail is a working sample of true depth reconstruction in OpenGL, preferably to world space.