Hey guys!

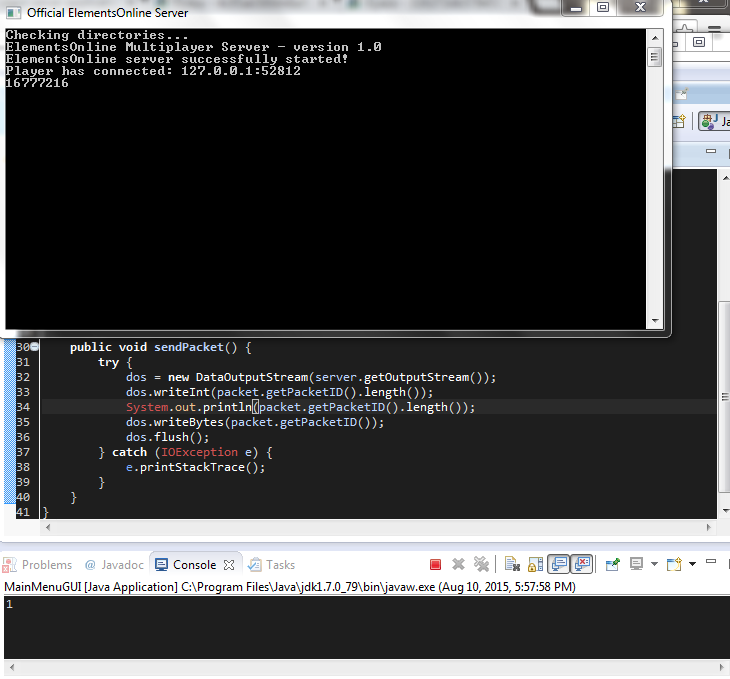

I'm writing a multiplayer game and have moved on to the networking, but I've run into an issue.

My C# server is not reading the bytes sent from my Java client correctly.

This is the code on both sides:

Client:

```java
public void sendPacket() {
    try {
        dos = new DataOutputStream(server.getOutputStream());
        dos.writeBytes(packet.getPacketID());
        dos.flush();
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```
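One likely mismatch is the wire format. `DataOutputStream.writeBytes` writes only the low byte of each character and sends no length. .NET's `BinaryReader.ReadString()` expects something different: a byte count encoded seven bits at a time, followed by that many UTF-8 bytes. Below is a minimal Java sketch of a client-side writer that produces that layout, assuming the server keeps using `ReadString()`. `DotNetStringWriter`, `writeDotNetString` and the `"LOGIN"` packet ID are hypothetical names used only for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class DotNetStringWriter {

    // Hypothetical helper: writes `value` in the layout BinaryReader.ReadString()
    // decodes, i.e. a 7-bit encoded UTF-8 byte count followed by the UTF-8 bytes.
    public static void writeDotNetString(DataOutputStream out, String value) throws IOException {
        byte[] utf8 = value.getBytes(StandardCharsets.UTF_8);
        int length = utf8.length; // byte count, not character count
        // Emit 7 bits at a time, lowest bits first; the high bit means "more bytes follow".
        while (length >= 0x80) {
            out.writeByte((length & 0x7F) | 0x80);
            length >>>= 7;
        }
        out.writeByte(length);
        out.write(utf8);
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        DataOutputStream dos = new DataOutputStream(buffer);
        writeDotNetString(dos, "LOGIN"); // "LOGIN" is a made-up packet ID
        dos.flush();
        byte[] bytes = buffer.toByteArray();
        // One length byte (5) followed by the five ASCII bytes of "LOGIN".
        System.out.println(bytes.length); // 6
        System.out.println(bytes[0]);     // 5
    }
}
```

In `sendPacket()` this would take the place of the `dos.writeBytes(...)` call, e.g. `writeDotNetString(dos, packet.getPacketID());`. `DataOutputStream.writeUTF` is not a drop-in alternative here. It prefixes a 2-byte big-endian length, which `ReadString()` would misinterpret.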

Server:

```csharp
NetworkStream playerStream = client.GetStream();
int recv;
byte[] data = new byte[client.ReceiveBufferSize];

Console.WriteLine("Player has connected: {0}", client.Client.RemoteEndPoint);

if (client.Client.Available > 0) {
    PacketReader s = new PacketReader(data);
    s.s(); // This does "ReadString()" from BinaryReader.
}
```

My strings show up blank in the console. Any help?
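The blank output is consistent with the server never reading from `playerStream`. `data` is allocated with `new byte[client.ReceiveBufferSize]`, which .NET zero-fills, and it is handed to `PacketReader` without any `Read` call. Assuming `PacketReader` wraps `data` in a `BinaryReader`, `ReadString()` then sees a length byte of 0 and returns an empty string. The Java sketch below mirrors that decoding step to show the effect. It reads the 7-bit length and then the payload directly from an `InputStream`, blocking until the bytes actually arrive. `DotNetStringReader` and `readDotNetString` are hypothetical names.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

public class DotNetStringReader {

    // Hypothetical helper mirroring BinaryReader.ReadString(): a 7-bit encoded
    // byte count, then that many UTF-8 bytes, read straight from the stream.
    public static String readDotNetString(InputStream in) throws IOException {
        DataInputStream din = new DataInputStream(in); // unbuffered wrapper, no bytes lost
        int length = 0;
        int shift = 0;
        while (true) {
            int b = din.readUnsignedByte(); // throws EOFException if the stream ends
            length |= (b & 0x7F) << shift;
            if ((b & 0x80) == 0) {
                break; // high bit clear: this was the last length byte
            }
            shift += 7;
            if (shift > 28) {
                throw new IOException("Malformed 7-bit encoded length");
            }
        }
        if (length < 0) {
            throw new IOException("Negative string length");
        }
        byte[] utf8 = new byte[length];
        din.readFully(utf8); // waits for the whole payload rather than one partial read
        return new String(utf8, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // A buffer that was allocated but never filled from the socket is all zeros:
        // its first byte decodes as length 0, so the result is an empty string.
        byte[] neverFilled = new byte[8192];
        System.out.println("[" + readDotNetString(new ByteArrayInputStream(neverFilled)) + "]"); // []

        // A correctly framed packet: length 5, then the bytes of "LOGIN".
        byte[] framed = {5, 'L', 'O', 'G', 'I', 'N'};
        System.out.println(readDotNetString(new ByteArrayInputStream(framed))); // LOGIN
    }
}
```

On the C# side, the analogous change would presumably be to build the reader over `playerStream` itself (for example `new BinaryReader(playerStream)`) rather than over an array that was never filled. It would also help to stop gating the read on `Available > 0` right after the connection is accepted, since the first packet may not have arrived yet.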