General note on this article

The following article is all about AI and the different ways that we, at GolemLabs, have decided to address some challenges regarding the development of the EHE (Evolutive Human Emulator), our technological middleware. The idea of a completely dedicated AI middleware that can adapt to many types of gameplay, and that does the things we're making our technology do, is quite new in the industry. So we've decided to start promoting these things with the hope of increasing awareness, dialogue and interest in this field of research.

It's important to note, though, what we mean by "AI". Today more than ever before, AI has become a buzzword that encompasses anything and everything. We believe AI will be the next big wave, not only in gaming (replacing the focus on graphics that has dominated for a number of years now) but in many other areas as well. Sensing the opportunity, marketing-type individuals affix the name "AI" to a lot of different things, most of which we don't agree with.

I'll surrender the point from the get-go that our view of what constitutes artificial intelligence is the view of purists. A fridge that starts beeping when the door is left open for too long, for example, isn't "intelligent", no matter what the company says. If the fridge learned your patterns, detected that you fell asleep on the couch, and closed the door all by itself because you're not coming back - now, that would be a feat, and would certainly qualify better. Many companies today market physics engines, path finding, rope engines, etc. as "intelligent". While these technologies are often impressive at what they do, this isn't how we've decided to (narrowly) define what constitutes artificial intelligence.

Our research and development has focused on the technology of learning, adapting, and interpreting the world independently. The state of the EHE today, and the next iterations of development that we'll start presenting here, will focus on personality, emotions, common core knowledge, forced feedback loops, and other such components. We hope that the discussions they prompt will generate ideas, debates, and innovations in this very important and often misused field.

"Making Machines Learn"

At the core of any adaptive artificial intelligence technology is the idea of learning. A system that doesn't learn is pre-programmed - the "correct" solution is integrated inside the program at launch, and the task of the system is to navigate through a series of conditions and caveats to determine which of the pre-calculated decisions best fits its current condition. A large percentage of AI engines work that way. A "fixed" system like that certainly has its advantages:

1. The outcomes are "managed" and under control.

2. The programmers can better debug and maintain the source code.

3. The design teams can help push any action in the desired direction to move the story along.

These advantages, especially the second one, have traditionally tipped the scale towards creating such pre-programmed decision-tree systems. The people responsible for creating AI in games are programmers, and programmers like to be able to predict what happens at any given moment. Since, very often, designers' instructions on artificial intelligence revolve around "make them not too dumb", it's no surprise that programmers will choose systems they can maintain.

But these advantages also have a downside:

1. New situations, often introduced by human players finding unforeseen circumstances overlooked during development, aren't handled.

2. Decision patterns can be deduced and "reverse-engineered" by astute players.

Often, development teams circumvent these disadvantages by giving these "dumb" AI opponents superior force, agility, hit points, etc. to level the playing field with the player. An enemy bot can, for instance, always hit you between the eyes with his gun as soon as he has a line of sight. The balance needed to create an interesting play experience is difficult to achieve, and pleasing both novice and experienced players is almost impossible. Usually, once a player becomes more expert in a game, playing against the AI no longer offers an interesting experience and the player looks for human opponents online.

But what if the system could reproduce the learning patterns of the human player: starting inexperienced and being taught, by its own actions, how to play better? After all, playing a game is reproducing simple patterns in an ever more complex set of situations, something computers are made to do. What would it mean to make the system learn how to play better, as it's playing?

To answer that question, we need to look outside the field of computer software and go into psychology and biology - what does it mean to learn, and how does the process shape our expertise in playing a game? How come two different players, playing the same game, will build two completely different styles of playing (a question we're addressing in our next article about personalities and emotions)?

Let's look at three different ways of learning, and see how machines could use them.

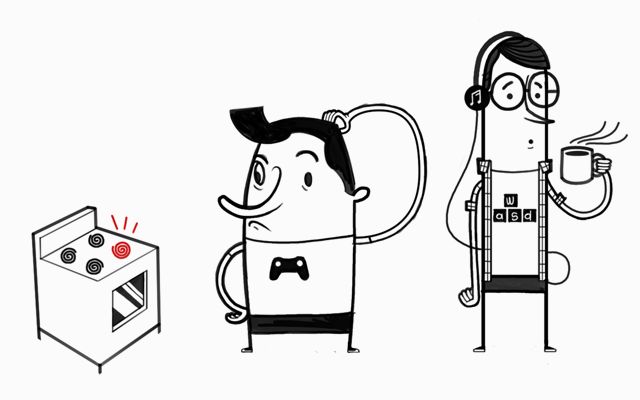

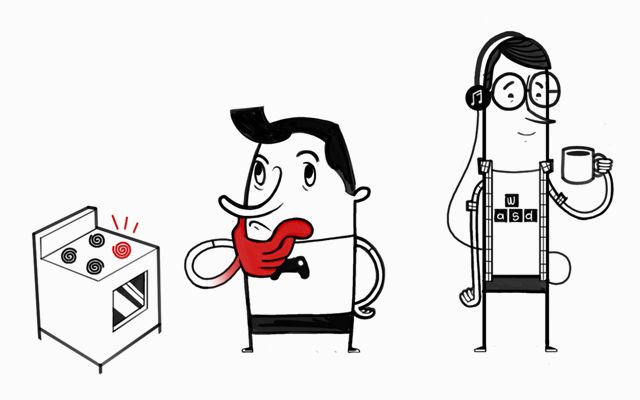

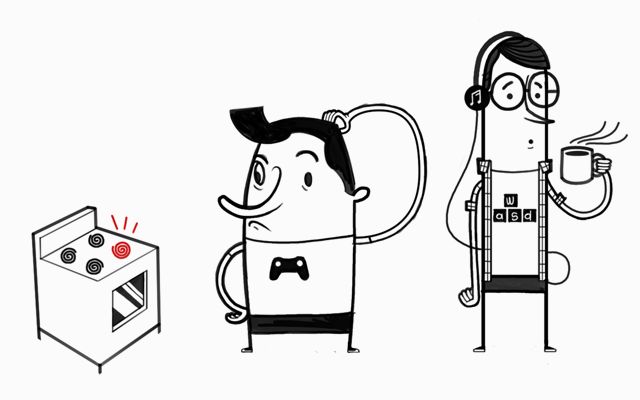

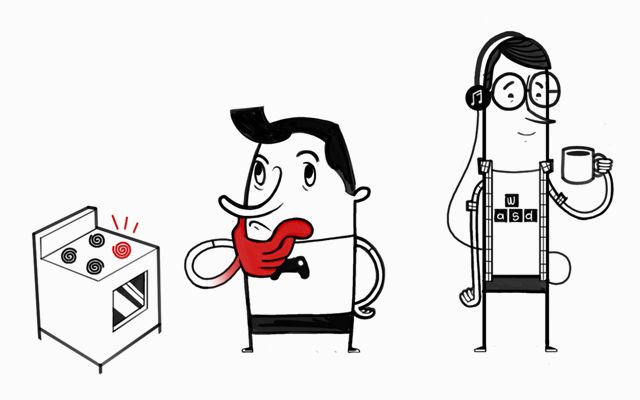

The first kind is learning through action: the stove top is turned on, you stick a finger on it, it burns, and you just learned the hard way not to touch the stove. This (Pavlovian?) way of learning is a simple example of action/reaction. Looking at the consequence of the action, the effects are so negative and severe that the expected positive stimulus (taking food now) is outmatched. Teaching computers to learn through this process is not that difficult - you need to weigh the consequences of an action and compare them with the expected consequences, or ideal consequences. The worse the real effects are, the harder you learn not to repeat the specific action.
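To make the idea of weighing real consequences against expected ones more concrete, here is a minimal Python sketch. It is not the EHE's implementation: the `ActionLearner` class, the `learning_rate` knob and the severity scaling are all assumptions made for illustration. It only shows one way that a worse outcome can produce a harder lesson.

```python
from dataclasses import dataclass, field


@dataclass
class ActionLearner:
    """Keeps one desirability score per action, learned from experienced outcomes."""

    learning_rate: float = 0.5                   # hypothetical tuning knob
    scores: dict = field(default_factory=dict)   # action name -> learned score

    def experience(self, action: str, expected: float, actual: float) -> float:
        """Compare the real outcome with the expected one and adjust the score."""
        surprise = actual - expected
        # Severity scaling (an assumption): the worse the real effects,
        # the larger the share of the surprise that is absorbed at once.
        weight = min(1.0, self.learning_rate * (1.0 + max(0.0, -actual)))
        old = self.scores.get(action, expected)
        self.scores[action] = old + weight * surprise
        return self.scores[action]


learner = ActionLearner()
# A severe burn wipes out the expected benefit in a single experience.
learner.experience("touch_stove", expected=1.0, actual=-10.0)
# A mild disappointment only nudges the score.
learner.experience("eat_snack", expected=1.0, actual=0.5)
```

With these invented numbers, a single burn is enough to make `touch_stove` strongly undesirable, while the mildly disappointing snack only loses part of its appeal.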

The second kind builds upon the first one: learning through observation. You see the stove top, and you can see the water boiling. You deduce that there is a heat source underneath, and putting your finger there wouldn't be wise. This means that you can predict the consequences of an action without having to experience it yourself. A computer that would do it would, of course, need basic information on the reality of the world - it needs to know what a heat source is, and its possible side effects. Even without having experienced direct harm, it's possible to have it "know" the effects nonetheless. This is achieved through what we call the common core knowledge and will be the topic of an upcoming article. Basically, we know that the stove burns because some people got burned before us. They learned through action, the effects were severe (maybe fatal), and society as a whole learned from the mistakes of these people. The common core (or "Borg" as we call it) is designed to reproduce that.

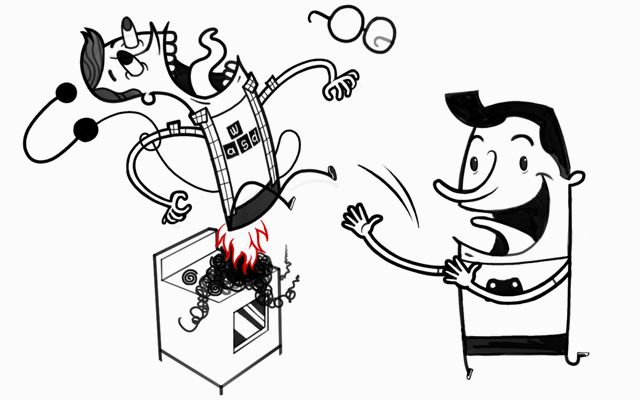

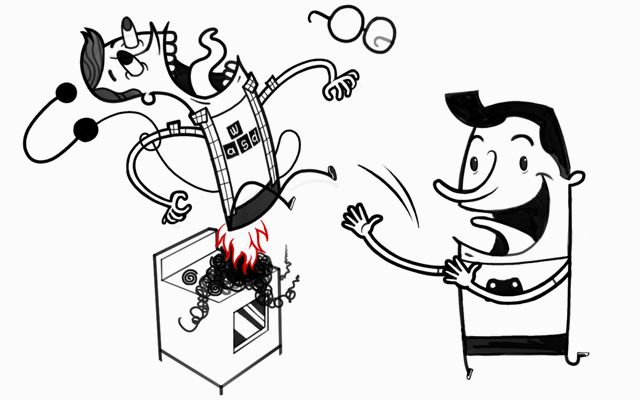

The third kind, the most interesting for gamers, is learning through planning. Again, it builds on its predecessor. If putting you in contact with the stove top inflicts significant, possibly fatal damage, then it's possible to use that information on others. On a nemesis, for instance (by essentially doing the same reasoning as above, but with different measurements of what would be a positive or a negative outlook). I don't want to burn myself, but I might want to burn someone else. Again, I've never burned myself, and I haven't used the stove top ever in my life before, but I have general knowledge of its use and possible side effects, and I'm using that to project a plan forward in time during a fight. If I push my opponent onto the stove top now, it should bring him pain, and this brings me closer to my goal of winning the fight.
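The reasoning above, same knowledge but a different measurement of what counts as a good outlook, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `KNOWN_EFFECTS` table stands in for the common knowledge, and the names, numbers and `plan` function are hypothetical, not part of the EHE.

```python
# Hypothetical common knowledge: what an object does to whoever touches it.
# Damage is expressed as a negative number; the values are invented.
KNOWN_EFFECTS = {"stove_top": -8.0, "pillow": 0.0}


def outlook(effect: float, target_is_me: bool) -> float:
    """Same knowledge, opposite sign depending on who suffers the effect."""
    return effect if target_is_me else -effect


def plan(objects, target_is_me: bool) -> str:
    """Pick the object whose known effect gives the planner the best outlook."""
    return max(objects, key=lambda obj: outlook(KNOWN_EFFECTS[obj], target_is_me))


safest_for_me = plan(["stove_top", "pillow"], target_is_me=True)
best_against_opponent = plan(["stove_top", "pillow"], target_is_me=False)
```

The planner never had to be burned itself: flipping the sign of a known effect is enough to turn "something to avoid" into "something to push an opponent onto".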

These three types of learning get exponentially more complex to translate into computer terms, yet they represent simple, binary ways of thinking. Breaking down information and action into simple elements enables computers to comprehend them and work with them. This creates a very different challenge for game designers and programmers - instead of scripting behaviors in an area, they need to teach the system about the rules of the world around it, and then let the system "understand" how best to use them. The large drawback is the total forfeiture of the first big advantage of fixed systems we listed above, namely control over the behavior of the entities. If the system is poorly constructed, and the rules of the physical world aren't translated properly to the system, then the entities will behave chaotically (the garbage-in-garbage-out rule).

Building and training the systems to go through the various ways of learning is the main challenge of a technology like the EHE, but we believe the final outcome is well worth the effort.

But what about the roles & effects of emotions and personality on learning? On the decision-making process?

What about the concept of common core knowledge?

This part of the article will probably seem a little weird to some as it deals with emotions and personalities. Why "weird"? Because if there's one thing we are usually safe in asserting, it's that machines are cold calculators. They calculate the odds and make decisions based on fixed mathematical criteria. Usually, programmers prefer them that way as well.

When companies talk about giving personality and emotion to AI-driven agents, they usually refer to the fixed decision-making pipeline that we mentioned earlier in this article. Using different graphs will generate different types of responses from these agents, so we can code one to be more angry, more playful, more curious, etc. While these are personalities, the agents themselves don't really have a choice in the matter and don't understand the differences in the choices they're making. They're only following a different fixed set of orders.

Science-fiction has often touched the topic of emotional machines, or machines with personalities. This is the last step before we get to the sentient machine, a computer system that knows it exists.

You can rest assured, though. I won't reveal here that we've created such a system. But these concepts are what constitute the weirdness of this part of the article, and the often unsettling analysis of its consequences.

Personality

Earlier in this article, I mentioned how many companies use buzzwords as marketing strategies, as with the "intelligent" fridge that beeps when the door is left open. In the same vein, many companies will talk about personality in machines and software. Let's define how we're addressing it to lessen the confusion: for the EHE, a personality is a filter through which you understand the world. A "neutral" entity, without any personality, will analyze that it is 50% hungry. That is the ratio it finds of food in its system vs. need. But we humans usually don't view the situation as coldly as this. Some of us are very prone to gluttony, and will eat all the time, with even the minimal level of hunger. Some others are borderline anorexic and will never eat, no matter how badly they physically need it. Then there's the question of the interactions with the other needs - I'd normally eat, but I'm playing right now, so it'll have to wait. In short, the "playing computer games" need trumps the "hunger" need. But if I'm bored and have nothing else to do, my minimal hunger will be felt as more urgent.

All these factors, and dozens more, vary from one individual to another. This is why you can talk about someone and say things like "Don't mention having a baby to her, she'll break down crying". Or "Get him away from alcohol or he'll make a fool of himself". We know these traits in the people close to us because we can anticipate how they'll react to various events. We're even much better at noticing these traits in others than in ourselves, and are often surprised when someone mentions such a trait about us. We see them in others most of the time because they differ from ours.

For some events - let's continue using the "hunger" example - we will, of course, feel that we're "normal", so our own perception of how someone should react to food is based on how we ourselves would react. For instance, if someone reacts less strongly than we would, then this surely means that this person has a low appetite. But if this person reacts way less strongly when hungry, then we become worried about their health. The opposite is also true, if the person has a stronger reaction when starving.

These levels of reactions to various events in our lives are what we define as being our personality. It is the filter with which we put a bias on the rational reality of our lives. Without it, everyone would be clones, reacting the same way to everything happening. But because of various reasons (cultural, biological, taught) we're all unique in these aspects.

Our EHE-driven agents also have such elements to filter up or down the various events that happen to them. An agent that is very "campy" and guarded will react much more strongly to danger than another one who is more brazen. The same event will be felt by both entities differently, the first one ducking for cover and the second one charging, all of this dynamically determined based on the situation at hand, not pre-scripted for each "type".
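As a rough illustration of a personality acting as a filter on what an entity perceives, consider the Python sketch below. Everything in it is an assumption made for the example (the `Personality` class, the per-stimulus multipliers, the reaction threshold); it is not how the EHE stores or applies personalities.

```python
from dataclasses import dataclass, field


@dataclass
class Personality:
    """Input filter: scales how strongly each kind of event is felt."""

    # stimulus name -> multiplier; 1.0 (the default) means a neutral entity
    sensitivity: dict = field(default_factory=dict)

    def perceive(self, stimulus: str, raw_intensity: float) -> float:
        """Return the felt intensity, clamped to the range 0.0 to 1.0."""
        felt = raw_intensity * self.sensitivity.get(stimulus, 1.0)
        return max(0.0, min(1.0, felt))


def react_to_danger(who: Personality, raw_danger: float, threshold: float = 0.5) -> str:
    """The same raw event leads to different reactions once it is filtered."""
    return "duck_for_cover" if who.perceive("danger", raw_danger) >= threshold else "charge"


campy = Personality({"danger": 1.8})    # guarded: danger is amplified
brazen = Personality({"danger": 0.4})   # brazen: danger is dampened
neutral = Personality()                 # no filter at all

reactions = (react_to_danger(campy, 0.4), react_to_danger(brazen, 0.4))
```

Neither reaction is scripted per agent type here: both agents run the same decision rule, and only the filtered perception differs.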

Emotions

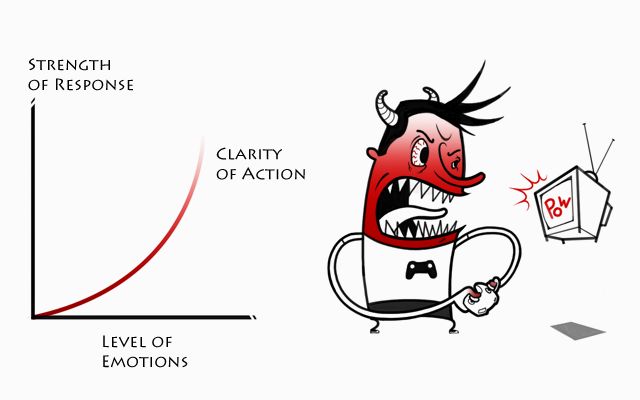

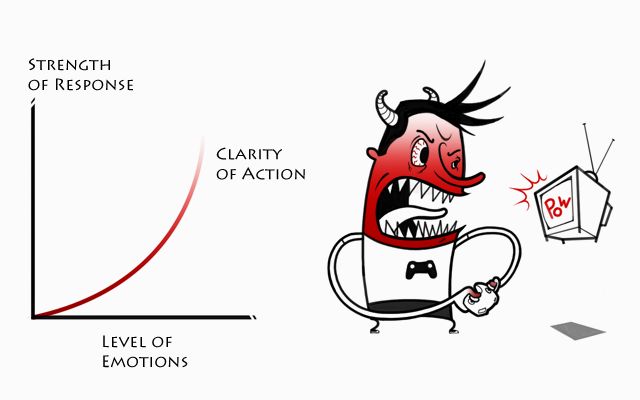

If a personality filters the "in" component of an EHE entity, emotions filter the "out". If a personality shapes how we perceive the various events in our lives, our emotions will modulate how we determine our reactions to them. Thus, emotions are used a little bit like an exponential scale that starts to block rational thinking.

The way we're approaching this is to say that someone who isn't emotional is logical, cold, and cerebral. That person will analyze the situation and take the best decision based on facts. One of the events in the movie I, Robot illustrates this perfectly when a robot rescues Del Spooner - the Will Smith character - from drowning. The robot analyzed that Spooner had more chances of survival than the little girl next to him, so it saved him. It was a cold decision based on facts and probabilities, not on our human tendencies to save children first, and be more affected by the death of a child (or a cute animal).

On the contrary, someone who is overly emotional will become erratic, unhinged, crazy, etc. That person will react to events in unpredictable, illogical ways. They will not think of the consequences of their actions, as a more rational person would, and will do whatever seems best to address the situation right away. I'm angry at you? I'll punch you in the face. That is an emotional response. I "saw red", I "wasn't thinking", etc. That is, I stopped caring about what would happen next. I simply went to the most brutal and efficient way to address my anger.

Normally, the EHE will consider outside consequences when dealing with a problem to solve. This is one of the core tenets of the technology. If I'm angry at you, there are a number of things that I could do, ranging from ignoring, to politely arguing, to yelling, to fist-fighting. These things escalate in efficiency at solving the problem - ignoring you doesn't address my anger in the slightest (until a certain amount of time has passed), but it does the least collateral damage. On the other end of the spectrum, punching you in the face is probably the most satisfying way of addressing my anger, but it has the unpleasant consequence of possibly landing me in jail.

If I'm in control of my emotions, I'll juggle the satisfaction of punching you in the face with the unpleasantness of going to jail, and will probably judge that going to jail is more unpleasant than punching is pleasant, thus the action will be discarded from the range of things that I should do.

But if ignoring you doesn't solve the problem (and possibly even increases it), and if trying to sort things out doesn't solve it either, then me being "angry at you" is starting to be a real problem, and my inefficiency at solving it is increasing my "anger" emotion. The more it rises, the less I consider the collateral effects of my actions. So after a certain amount of time, the punching-in-the-face action that was completely discarded earlier will become a totally sane response, because I've temporarily forgotten some of its consequences and focus only on "does it solve my angry at you problem?".
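The escalation described above can be sketched as a scoring rule in which the weight given to collateral consequences shrinks as the emotion level rises. The Python below is a minimal sketch under stated assumptions: the option table, its numbers, the quadratic falloff and the `frustrate` step are all invented for illustration and are not taken from the EHE.

```python
# Each option: (satisfaction at solving the problem, collateral cost).
# All values are invented for the example.
OPTIONS = {
    "ignore": (0.1, 0.0),
    "argue": (0.4, 0.1),
    "yell": (0.6, 0.4),
    "punch": (1.0, 2.0),
}


def consequence_weight(emotion: float) -> float:
    """1.0 when calm, falling toward 0.0 as the emotion level approaches 1.0."""
    clamped = max(0.0, min(1.0, emotion))
    return (1.0 - clamped) ** 2   # arbitrary falloff curve, an assumption


def choose_action(emotion: float, options=OPTIONS) -> str:
    """Pick the option with the best satisfaction minus weighted collateral cost."""
    weight = consequence_weight(emotion)
    return max(options, key=lambda name: options[name][0] - weight * options[name][1])


def frustrate(emotion: float, step: float = 0.3) -> float:
    """A failed attempt at solving the problem raises the emotion level."""
    return min(1.0, emotion + step)


calm_choice = choose_action(0.0)
anger = 0.0
for _ in range(3):            # three failed attempts in a row
    anger = frustrate(anger)
angry_choice = choose_action(anger)
```

With these numbers, a calm entity settles on arguing because the jail-like cost of punching dominates. After three failed attempts the cost is almost ignored and punching scores highest, even though nothing about the option itself has changed.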

Of course, an emotion can also be positive. Who hasn't done something stupid because of joy? Or because of love? Who hasn't done something he or she later regretted, wondering "What was I thinking?". Well, that's precisely the point: this person wasn't thinking, and was temporarily blinded by a high level of emotion, incapable of seeing outside consequences of what he or she was doing.

When broken down like that, personalities and emotions become manageable for computers, and they can then reproduce convincing behaviors without direction or scripts. Depending on the definitions of the world, they can adapt and evolve in time, and become truly believable characters.

With that being said, two problems that were identified earlier in this article start to appear: unpredictability and the limitations of a closed system. Even if we do a very good job at creating entities that are dynamic and evolving, our world - the computer game in which this technology lives - behaves according to fixed rules. Even if the data of the world evolves, the reality of the game is finite, and thus the entity's ability to learn is bounded by that limitation.

It becomes a problem when, for some freak reason, the AI starts to learn the "wrong" thing. It happens a lot, and we usually blame the human player for that.

Why?

Because we, humans, play games for various reasons. And not all of them logical. Sometimes, we just want to see what will happen if we try to do something crazy. Sometimes we want to unlock a specific goody, cinematic, prize, etc. Sometimes we just find a specific animation funny and want to see it again and again. Also, a human player can suck at the game, especially at first. What happens then? How is the AI supposed to learn and adapt based on the reality of the world into which it's thrown when the parameters of that reality are not realistic themselves?

We made a game a while back called Superpower 2 (newly re-released on Steam; :-) ). In this game, the player would select a country and play it in a more detailed version of Risk, if you will. But sometimes, the player would just pick the US and nuke Paris, for no particular reason. Absolutely nothing in the game world explained that behavior, but here we were anyway. The AI was, in situations like this one, understandably becoming confused, and reacting outside the bounds we had envisioned for it, because the player was feeding the confusion. The EHE would then learn the wrong thing, and in subsequent games would confront the player under these parameters that were wrong in the first place, causing further confusion for the players.

The memory hub (or Borg) comes in to help alleviate these situations. Let's look at it, using, once more, traditional human psychology.

The human race is a social one, and it's one of the reasons explaining our success. We work together, learn from one another, and pass on that knowledge from generation to generation. That way, we don't always reset knowledge with each new generation, and become more and more advanced as time passes. We also use these social interactions to validate each other to conform into a specific "mold" that varies from society to society, and evolves with time. A specific behavior that was perfectly fine a generation ago could get you in trouble today, and vice-versa. That "mold" is how we define normalcy - the further you are from the center, the more "weird" you become. If some weirdness in you makes you dangerous to yourself (if you like to eat chairs, for example) or to others (you like to eat other people), then society as a whole intervenes to either bring you back towards the center ("stop doing that") or, in cases where it's too dangerous, to cut you away from society. The goal is always the same, even if we celebrate each other's individuality and quirky specifics. We still need to be consistent with what is considered "acceptable behavior".

These behaviors are what society has determined "works" to keep it functional and relatively happy. Just a couple of decades ago, gays could be put in prison for being overtly... well... gay. This was how a majority of people thought, and their reasoning seemed logical back then: gays were bad for children; this is how God wants it, etc. These days, the pendulum has swung in the other direction: a majority of people no longer see gays as a danger to society, and more civil rights are now given to them. This is how society works to solve its problems: it follows what a majority of people have come to accept as being normal. Back in the 1950s, someone who said there was nothing wrong with being gay would've been viewed as outside the mainstream. Today, such an opinion is considered the norm.

The memory hub thus serves as a sort of aggregate of the conditions of what works for every EHE entity. Imagine a game coming out powered by the EHE. That game would be connected to the memory hub, and remain connected to be validated and updated based on the shared knowledge of thousands of other players around the world.

The hub would be used to do multiple things:

1. Upon initial boot, and periodically after that, the AI would be updated with the latest logic, solutions and strategies to better "win", based on the experience of everyone else who has played so far.

2. Your EHE learns individually based on your reality, but it always checks in to validate that its memory matrix isn't too far off course. When the particular cases of a player (like the nuking players mentioned above) mean that their EHE becomes confused, it can reassure itself with the mother ship and disregard knowledge that would have been proven "wrong".

3. When the particular experience of an EHE makes it efficient at solving a specific problem - because a player confronted it in a specific fashion, for instance - the new information can be uploaded to the hub and shared back to the community.
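The three roles listed above (update, validate, share) can be pictured with a small Python sketch. It is only an illustration under stated assumptions: the `MemoryHub` class, the running-average aggregation and the `tolerance` threshold are hypothetical and say nothing about how the real hub stores or reconciles knowledge.

```python
class MemoryHub:
    """Hypothetical shared store: one running average score per strategy."""

    def __init__(self):
        self.mean = {}    # strategy -> average score reported so far
        self.count = {}   # strategy -> number of reports received

    def upload(self, strategy: str, score: float) -> None:
        """Share one locally learned score with the community (role 3)."""
        n = self.count.get(strategy, 0) + 1
        m = self.mean.get(strategy, 0.0)
        self.mean[strategy] = m + (score - m) / n   # incremental mean
        self.count[strategy] = n

    def validate(self, local: dict, tolerance: float = 0.5) -> dict:
        """Check local knowledge against the consensus (roles 1 and 2).

        Scores that stray further than `tolerance` from the shared average
        are replaced by it; strategies the hub has never seen are kept.
        """
        checked = {}
        for strategy, score in local.items():
            shared = self.mean.get(strategy)
            if shared is not None and abs(score - shared) > tolerance:
                checked[strategy] = shared
            else:
                checked[strategy] = score
        return checked


hub = MemoryHub()
for reported in (0.1, 0.2, 0.0):          # what most players' games learned
    hub.upload("nuke_ally", reported)

# One confused local AI, trained by a player who nukes allies for fun.
local_memory = {"nuke_ally": 0.9, "flank_left": 0.7}
validated = hub.validate(local_memory)
```

In this toy run the outlying `nuke_ally` score is pulled back to the community average, while `flank_left`, which the hub has no opinion on, is kept and could later be uploaded for others to benefit from.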

So while the game learns by playing against you, and adapts to your style of playing, it also shares the knowledge of thousands of other players around the world. This knowledge means that an EHE-powered game connected to the hub would have tremendous replay value, as the game's AI is continually evolving and adapting to the game's circumstances and experience of play.

The hub, viewed as a social tool, can also be very interesting. How would the community react to a specific event, or trigger? Are all players worldwide playing the same way? All age groups...? Studying how the memory hub evolves and reacts would be a treasure trove of information for future games, but also for the game designers working on a current game. If an exploit arises or a way to "cheat" is found, the memory hub will learn about it very quickly. It can then warn game designers about what players are doing, how they're beating the game or, conversely, how the game is always beating them under certain conditions, guiding the designers to adjust, almost in real time, based on real play data.

In the end, we propose the Borg not only as a safeguard against bad memory, but as a staging ground for better learning, and better dissemination of what is being learned for the shared benefit of all players.

Of course, we welcome your questions, comments, and thoughts on this article.

Let's look at three different ways of learning, and see how machines could use them.

The first kind is learning through action: the stove top is turned on, you stick a finger on it, it burns, and you just learned the hard way not to touch the stove. This (Pavlovian?) way of learning is a simple example of action/reaction. Looking at the consequence of the action, the effects are so negative and severe that the expected positive stimulus (taking food now) is outmatched. Teaching computers to learn through this process is not that difficult - you need to weight the consequences of an action and compare them with the expected consequences, or ideal consequences. The worse the real effects are, the harder you learn not to repeat the specific action.

Let's look at three different ways of learning, and see how machines could use them.

The first kind is learning through action: the stove top is turned on, you stick a finger on it, it burns, and you just learned the hard way not to touch the stove. This (Pavlovian?) way of learning is a simple example of action/reaction. Looking at the consequence of the action, the effects are so negative and severe that the expected positive stimulus (taking food now) is outmatched. Teaching computers to learn through this process is not that difficult - you need to weight the consequences of an action and compare them with the expected consequences, or ideal consequences. The worse the real effects are, the harder you learn not to repeat the specific action.

The second kind builds upon the first one: learning through observation. You see the stove top, and you can see the water boiling. You deduct that there is a heat source underneath, and putting your finger there wouldn't be wise. This means that you can predict the consequences of an action without having to experience it yourself. A computer that would do it would, of course, need basic information on the reality of the world - it needs to know what a heat source is, and it's possible side effects. Even without having experienced direct harm, it's possible to have it "know" the effects nonetheless. This is achieved through what we call the common core knowledge and will be the topic of an upcoming article. Basically, we know that the stove burns because some people got burned before us. They learned through action, their effects were severe (maybe fatal), and society as a whole learned from the mistakes of these people. The common core (or "Borg" as we call it) is designed to reproduce that.

The second kind builds upon the first one: learning through observation. You see the stove top, and you can see the water boiling. You deduct that there is a heat source underneath, and putting your finger there wouldn't be wise. This means that you can predict the consequences of an action without having to experience it yourself. A computer that would do it would, of course, need basic information on the reality of the world - it needs to know what a heat source is, and it's possible side effects. Even without having experienced direct harm, it's possible to have it "know" the effects nonetheless. This is achieved through what we call the common core knowledge and will be the topic of an upcoming article. Basically, we know that the stove burns because some people got burned before us. They learned through action, their effects were severe (maybe fatal), and society as a whole learned from the mistakes of these people. The common core (or "Borg" as we call it) is designed to reproduce that.

The third kind, the most interesting for gamers, is learning through planning. Again, it builds on its predecessor. If putting you in contact with the stove top inflicts important, possible fatal damages, then it's possible to use that information on others. Like a nemesis, for instance (by essentially doing the same reasoning as above, but with different measurements of what would be a positive or a negative outlook). I don't want to burn myself, but I might want to burn someone else. Again, I've never burned myself, and I haven't use the stove top ever in my life before, but I have general knowledge of its use and possible side effects, and I'm using that to project in time a plan during a fight. If I push my opponent now on the stove top, it should bring him pain, and this brings me closer to my goal of winning the fight.

The third kind, the most interesting for gamers, is learning through planning. Again, it builds on its predecessor. If putting you in contact with the stove top inflicts important, possible fatal damages, then it's possible to use that information on others. Like a nemesis, for instance (by essentially doing the same reasoning as above, but with different measurements of what would be a positive or a negative outlook). I don't want to burn myself, but I might want to burn someone else. Again, I've never burned myself, and I haven't use the stove top ever in my life before, but I have general knowledge of its use and possible side effects, and I'm using that to project in time a plan during a fight. If I push my opponent now on the stove top, it should bring him pain, and this brings me closer to my goal of winning the fight.

These three types of learning get exponentially more complex to translate in computer terms, but yet they represent simple, binary ways of thinking. Breaking down information and action into simple elements enables computers to comprehend them and work with them. This creates a very different challenge to the game designers and programmers - instead of scripting behaviors in an area, they need to teach the system about the rules of the world around them, and then let the system "understand" how best to use them. The large drawback is the total forfeiture of the first big advantage of fixed systems we listed above, namely to control the behavior of the entities. If the system is poorly constructed, and the rules of the physical world aren't translated properly to the system, then the entities will behave chaotically (Garbage-in-garbage-out rule).

Building and training the systems to go through the various ways of learning is the main challenge of a technology like the EHE, but we believe the final outcome is well worth the effort.

But what about the roles & effects of emotions and personality on learning? On the decision-making process?

What about the concept of common core knowledge?

That part of the article will probably seem a little weird to some as it deals with emotions and personalities. Why "weird"? Because if there's one thing we usually are safe in asserting, is that machines are cold calculators. They calculate the odds and make decisions based on fixed mathematical criteria. Usually, programmers prefer them that way as well.

When companies talk about giving personality and emotion to AI-driven agents, they usually refer to the fixed-decision making pipeline that we mentioned in this article. Using different graphs will generate different types of responses to these agents, so we can code one to be more angry, more playful, more curious, etc. While these are personalities, the agents themselves don't really have a choice in the matter and don't understand the differences in the choices they're making. They're only following a different fixed set of order.

Science-fiction has often touched the topic of emotional machines, or machines with personalities. This is the last step before we get to the sentient machine, a computer system that knows it exists.

You can rest assured, though. I won't reveal here that we've created such a system. But these concepts are what constitutes the weirdness of this part of the article, and the often unsettling analysis of its consequences.

These three types of learning get exponentially more complex to translate in computer terms, but yet they represent simple, binary ways of thinking. Breaking down information and action into simple elements enables computers to comprehend them and work with them. This creates a very different challenge to the game designers and programmers - instead of scripting behaviors in an area, they need to teach the system about the rules of the world around them, and then let the system "understand" how best to use them. The large drawback is the total forfeiture of the first big advantage of fixed systems we listed above, namely to control the behavior of the entities. If the system is poorly constructed, and the rules of the physical world aren't translated properly to the system, then the entities will behave chaotically (Garbage-in-garbage-out rule).

Building and training the systems to go through the various ways of learning is the main challenge of a technology like the EHE, but we believe the final outcome is well worth the effort.

But what about the roles & effects of emotions and personality on learning? On the decision-making process?

What about the concept of common core knowledge?

This part of the article will probably seem a little weird to some, as it deals with emotions and personalities. Why "weird"? Because if there's one thing we are usually safe in asserting, it is that machines are cold calculators. They calculate the odds and make decisions based on fixed mathematical criteria. Usually, programmers prefer them that way as well.

When companies talk about giving personality and emotion to AI-driven agents, they usually refer to the fixed decision-making pipeline that we mentioned in this article. Using different graphs will generate different types of responses from these agents, so we can code one to be more angry, more playful, more curious, etc. While these are personalities, the agents themselves don't really have a choice in the matter and don't understand the differences in the choices they're making. They're only following a different fixed set of orders.

Science fiction has often touched on the topic of emotional machines, or machines with personalities. This is the last step before we get to the sentient machine, a computer system that knows it exists.

You can rest assured, though. I won't reveal here that we've created such a system. But these concepts are what constitute the weirdness of this part of the article, and the often unsettling analysis of their consequences.

Earlier in this article, I mentioned how many companies use buzzwords as marketing strategies, hence the "intelligent" fridge that beeps when the door is left open. In the same vein, many companies will talk about personality in machines and software. Let's define how we're addressing it to lessen the confusion: for the EHE, a personality is a filter through which you understand the world. A "neutral" entity, without any personality, will assess that it is 50% hungry. That is the ratio it finds between the food in its system and its need. But we humans usually don't view the situation as coldly as this. Some of us are very prone to gluttony, and will eat all the time, with even the minimal level of hunger. Some others are borderline anorexic and will never eat, no matter how badly they physically need it. Then there's the question of the interactions with the other needs - I'd normally eat, but I'm playing right now, so it'll have to wait. In short, the "playing computer games" need trumps the "hunger" need. But if I'm bored and have nothing else to do, my minimal hunger will be felt as more urgent.

All these factors, and dozens more, vary from one individual to another. This is why you can talk about someone and say things like "Don't mention having a baby to her, she'll break down crying". Or "Get him away from alcohol or he'll make a fool of himself". We know these traits in the people close to us because we can anticipate how they'll react to various events. We're even much better at noticing these traits in others than in ourselves, and are often surprised when someone mentions such a trait about us. We see them in others most of the time because they differ from ours.

For some events - let's continue using the "hunger" example - we will, of course, feel that we're "normal", so our own perception of how someone should react to food is based on how we ourselves would react. For instance, if someone reacts less strongly than we would, then this surely means that this person has a low appetite. But if this person reacts way less strongly when hungry, then we become worried about their health. The opposite is also true, if the person has a stronger reaction when starving.

These levels of reaction to various events in our lives are what we define as being our personality. It is the filter with which we put a bias on the rational reality of our lives. Without it, everyone would be clones, reacting the same way to everything that happens. But for various reasons (cultural, biological, taught) we're all unique in these aspects.

Our EHE-driven agents also have such elements to filter up or down the various events that happen to them. An agent that is very "campy" and guarded will react much more strongly to danger than another one who is more brazen. The same event will be felt by both entities differently, the first one ducking for cover and the second one charging, all of this dynamically determined based on the situation at hand, not pre-scripted for each "type".
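
To make the "filter" idea concrete, here is a minimal sketch in Python. It is not the EHE's actual implementation: the Personality class, the per-need "gain" multipliers, and the 0.5 reaction threshold are all assumptions made for illustration. It only shows how the same raw measurement (50% hungry, or a given level of danger) can be felt differently by different entities.

```python
from dataclasses import dataclass, field


@dataclass
class Personality:
    # Hypothetical per-need gains: 1.0 is neutral, >1 amplifies, <1 dampens.
    gains: dict = field(default_factory=dict)

    def perceive(self, need: str, raw_level: float) -> float:
        """Return the felt intensity of a need, clamped to the range [0, 1]."""
        felt = raw_level * self.gains.get(need, 1.0)
        return max(0.0, min(1.0, felt))


def react_to_danger(p: Personality, raw_danger: float, threshold: float = 0.5) -> str:
    """Pick a reaction from the felt danger, not from the raw danger."""
    felt = p.perceive("danger", raw_danger)
    return "duck for cover" if felt >= threshold else "charge"


# Illustrative entities (the numbers are invented for the example).
neutral = Personality()
glutton = Personality({"hunger": 1.8})
guarded = Personality({"danger": 1.5})
brazen = Personality({"danger": 0.5})

raw_hunger = 0.5  # the "neutral" reading: 50% hungry
print(neutral.perceive("hunger", raw_hunger))  # felt exactly as measured
print(glutton.perceive("hunger", raw_hunger))  # felt as much more urgent

# The same event, felt differently by two personalities.
print(react_to_danger(guarded, 0.4))
print(react_to_danger(brazen, 0.4))
```

Nothing here is scripted per "type" of agent: the reaction comes out of the raw situation passed through each entity's own gains, so changing the situation or the gains changes the behavior.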

If personality filters the "in" component of an EHE entity, emotions filter the "out". If a personality shapes how we perceive the various events in our lives, our emotions will modulate how we determine our reactions to them. Thus, emotions are used a little bit like an exponential scale that starts to block rational thinking.

The way we're approaching this is to say that someone who isn't emotional is logical, cold, and cerebral. That person will analyze the situation and make the best decision based on facts. One of the events in the movie I, Robot illustrates this perfectly when a robot rescues Del Spooner - the Will Smith character - from drowning. The robot analyzed that Spooner had a better chance of survival than the little girl next to him, so it saved him. It was a cold decision based on facts and probabilities, not on our human tendencies to save children first, and be more affected by the death of a child (or a cute animal).

On the contrary, someone who is overly emotional will become erratic, unhinged, crazy, etc. That person will react to events in unpredictable, illogical ways. They will not think of the consequences of their actions as a more rational person would, and will do whatever seems best to address the situation right away. I'm angry at you? I'll punch you in the face. That is an emotional response. I "saw red", I "wasn't thinking", etc. That is, I stopped caring about what would happen next. I simply went to the most brutal and efficient way to address my anger.

Normally, the EHE will consider outside consequences when dealing with a problem to solve. This is one of the core tenets of the technology. If I'm angry at you, there are a number of things that I could do, ranging from ignoring, to politely arguing, to yelling, to fist-fighting. These things escalate in efficiency to solve the problem - ignoring you doesn't address my anger in the slightest (until a certain amount of time has passed), but it does the least collateral damage. On the other end of the spectrum, punching you in the face is probably the most satisfying way of addressing my anger, but it has the unpleasant consequence of possibly landing me in jail.

If I'm in control of my emotions, I'll weigh the satisfaction of punching you in the face against the unpleasantness of going to jail, and will probably judge that going to jail is more unpleasant than punching is pleasant, thus the action will be discarded from the range of things that I should do.

But if ignoring you doesn't solve the problem (and possibly even increases it), and if trying to sort things out doesn't solve it either, then me being "angry at you" is starting to be a real problem, and my inefficiency at solving it is increasing my "anger" emotion. The more it rises, the less I consider the collateral effects of my actions. So after a certain amount of time, the punching-in-the-face action that was completely discarded earlier will become a totally sane response, because I've temporarily forgotten some of its consequences and focus only on "does it solve my angry at you problem?".
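
The escalation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the EHE's real decision code: the Action record, the relief and collateral numbers, the exponential decay of the consequence weight, and the fixed 0.3 rise in anger per failed attempt are all invented for the example. The only idea taken from the text is that a rising emotion shrinks the weight given to outside consequences.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    relief: float      # how well the action addresses the anger (0..1)
    collateral: float  # cost of outside consequences (arbitrary units)


# Hypothetical values: punching relieves the most but risks jail.
ACTIONS = [
    Action("ignore", 0.05, 0.0),
    Action("argue politely", 0.3, 0.1),
    Action("yell", 0.5, 0.4),
    Action("punch", 1.0, 3.0),
]


def consequence_weight(emotion: float, steepness: float = 4.0) -> float:
    """Weight given to consequences; decays exponentially as emotion goes 0 -> 1."""
    return math.exp(-steepness * emotion)


def choose(emotion: float) -> Action:
    """Pick the action with the best relief once (discounted) consequences are subtracted."""
    w = consequence_weight(emotion)
    return max(ACTIONS, key=lambda a: a.relief - w * a.collateral)


def escalate(start: float = 0.0, step: float = 0.3, rounds: int = 3) -> list:
    """Assume every attempt fails, so the anger rises by `step` each round."""
    emotion, chosen = start, []
    for _ in range(rounds):
        chosen.append(choose(emotion).name)
        emotion = min(1.0, emotion + step)
    return chosen


print(escalate())  # ['argue politely', 'yell', 'punch']
```

With a calm entity, "punch" scores far below everything else because its full cost is counted. Nothing about the action itself changes between rounds; only the weight on its consequences does, which is why an option that was discarded earlier ends up selected.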

Of course, an emotion can also be positive. Who hasn't done something stupid because of joy? Or because of love? Who hasn't done something he or she later regretted, wondering "What was I thinking?". Well, that's precisely the point: this person wasn't thinking, and was temporarily blinded by a high level of emotion, incapable of seeing outside consequences of what he or she was doing.

When broken down like that, personalities and emotions become manageable for computers, and they can then reproduce convincing behaviors without direction or scripts. Depending on the definitions of the world, they can adapt and evolve in time, and become truly believable characters.

With that being said, two problems that were identified earlier in this article start to appear: unpredictability and the limitations of a closed system. Even if we do a very good job at creating entities that are dynamic and evolving, our world - the computer game in which this technology lives - behaves according to fixed rules. Even if the data of the world evolves, the reality of the game is finite, and thus the entity's ability to learn is bound by that limitation.

It becomes a problem when, for some freak reason, the AI starts to learn the "wrong" thing. It happens a lot, and we usually blame the human player for that.

Why?

Because we humans play games for various reasons, and not all of them are logical. Sometimes, we just want to see what will happen if we try to do something crazy. Sometimes we want to unlock a specific goody, cinematic, prize, etc. Sometimes we just find a specific animation funny and want to see it again and again. Also, a human player can suck at the game, especially at first. What happens then? How is the AI supposed to learn and adapt based on the reality of the world into which it's thrown when the parameters of that reality are not realistic themselves?

We made a game a while back called Superpower 2 (newly re-released on Steam; :-) ). In this game, the player would select a country and play it in a more detailed version of Risk, if you will. But sometimes, the player would just pick the US and nuke Paris, for no particular reason. Absolutely nothing in the game world explained that behavior, but here we were anyway. The AI was, in situations like this one, understandably becoming confused, and reacting outside the bounds we had envisioned for it, because the player was feeding the confusion. The EHE would then learn the wrong thing, and in subsequent games would confront the player under these parameters that were wrong in the first place, causing further confusion for the players.

The memory hub (or Borg) comes in to help alleviate these situations. Let's look at it, using, once more, traditional human psychology.

The human race is a social one, and it's one of the reasons explaining our success. We work together, learn from one another, and pass on that knowledge from generation to generation. That way, we don't always reset knowledge with each new generation, and become more and more advanced as time passes. We also use these social interactions to validate each other and to conform to a specific "mold" that varies from society to society, and evolves with time. A specific behavior that was perfectly fine a generation ago could get you in trouble today, and vice-versa. That "mold" is how we define normalcy - the further you are from the center, the more "weird" you become. If some weirdness in you makes you dangerous to yourself (if you like to eat chairs, for example) or to others (you like to eat other people), then society as a whole intervenes to either bring you back towards the center ("stop doing that") or, in cases where it's too dangerous, to cut you off from society. The goal is always the same, even if we celebrate each other's individuality and quirky specifics. We still need to be consistent with what is considered "acceptable behavior".

These behaviors are what society has determined "works" to keep it functional and relatively happy. Just a couple of decades ago, gays could be put in prison for being overtly... well... gay. This was how a majority of people thought, and their reasoning was logical, back then: gays were bad for children; this is how God wants it, etc. These days, the pendulum is changing direction: a majority of people don't see gays as a danger to society any longer, as more civil rights are now given to them. This is how society works best to solve its problems because it is what a majority of people came to accept as being normal. Back in the 1950s, someone who said there was nothing wrong with being gay would've been viewed as outside the mainstream. Today, such an opinion is considered the norm.

The memory hub thus serves as a sort of aggregate of the conditions of what works for every EHE entity. Imagine a game coming out powered by the EHE. That game would be connected to the memory hub, and remain connected to be validated and updated based on the shared knowledge of thousands of other players around the world.

The hub would be used to do multiple things:

1. Upon initial boot, and periodically after that, the AI would be updated with the latest logic, solutions and strategies to better "win", based on the experience of everyone else who has played so far.

2. Your EHE learns individually based on your reality, but it always checks in to validate that its memory matrix isn't too far off course. When the particular cases of a player (like the nuking players mentioned above) mean that their EHE becomes confused, it can reassure itself with the mother ship and disregard knowledge that has been proven "wrong".

3. When the particular experience of an EHE makes it efficient at solving a specific problem - because a player confronted it in a specific fashion, for instance - the new information can be uploaded to the hub and shared back to the community.
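
The three uses above can be sketched as a toy client/hub pair in Python. Everything in it is an assumption made for illustration: the MemoryHub and LocalMemory classes, the use of a median over at least three reports as the "consensus", and the 0.3 tolerance are invented, and a real hub would of course live on a server rather than in the same process.

```python
from statistics import median


class MemoryHub:
    """Hypothetical shared store: key -> values reported by players' games."""

    def __init__(self, min_reports: int = 3):
        self.min_reports = min_reports
        self.reports = {}

    def upload(self, key: str, value: float) -> None:
        self.reports.setdefault(key, []).append(value)

    def consensus(self, key: str):
        """Median of the reports, or None while too few games have reported."""
        values = self.reports.get(key, [])
        return median(values) if len(values) >= self.min_reports else None


class LocalMemory:
    """Hypothetical per-player memory that learns alone but checks in with the hub."""

    def __init__(self, hub: MemoryHub, tolerance: float = 0.3):
        self.hub, self.tolerance, self.values = hub, tolerance, {}

    def learn(self, key: str, value: float) -> None:
        self.values[key] = value

    def pull(self) -> None:
        """Use 1: adopt the community's view for anything not learned locally."""
        for key in self.hub.reports:
            shared = self.hub.consensus(key)
            if shared is not None and key not in self.values:
                self.values[key] = shared

    def validate(self) -> list:
        """Use 2: replace local values that stray too far from the consensus."""
        corrected = []
        for key, local in list(self.values.items()):
            shared = self.hub.consensus(key)
            if shared is not None and abs(local - shared) > self.tolerance:
                self.values[key] = shared
                corrected.append(key)
        return corrected

    def share(self) -> None:
        """Use 3: upload knowledge the hub has no consensus on yet."""
        for key, value in self.values.items():
            if self.hub.consensus(key) is None:
                self.hub.upload(key, value)


hub = MemoryHub()
for v in (0.1, 0.1, 0.2):            # most games: unprovoked nukes are rare
    hub.upload("expect_unprovoked_nuke", v)
for v in (0.6, 0.7, 0.8):
    hub.upload("defend_capital", v)

mem = LocalMemory(hub)
mem.learn("expect_unprovoked_nuke", 0.9)  # learned from one erratic player
mem.learn("flank_left", 0.7)              # a genuinely new local discovery

mem.pull()
corrected = mem.validate()
mem.share()
print(corrected)
print(mem.values)
```

Using a median with a minimum number of reports is one simple way to keep a single erratic player from dragging the shared knowledge off course; other aggregation rules would work as well.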

So while the game learns by playing against you, and adapts to your style of playing, it also shares the knowledge of thousands of other players around the world. This knowledge means that an EHE-powered game connected to the hub would have tremendous replay value, as the game's AI is continually evolving and adapting to the game's circumstances and experience of play.

The hub, viewed as a social tool, can also be very interesting. How would the community react to a specific event, or trigger? Are all players worldwide playing the same way? All age groups...? Studying how the memory hub evolves and reacts would be a treasure trove of information for future games, but also for the game designers working on a current game. If an exploit arises or a way to "cheat" is found, the memory hub will learn about it very quickly. It can then warn game designers about what players are doing, how they're beating the game or, on the other side, how the game is always beating them under certain conditions. This guides the designers to adjust afterwards, almost in real time, based on real play data.
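
As a rough illustration of that last point, the sketch below tallies outcomes per strategy and flags the ones that win (or lose) almost every time. The flag_outliers function, the strategy names, and the thresholds (at least 20 plays, 90% and 10% win rates) are hypothetical; the article does not describe how the hub would actually detect exploits.

```python
from collections import Counter


def flag_outliers(results, min_plays=20, high=0.9, low=0.1):
    """results: iterable of (strategy, player_won) pairs gathered by the hub.

    Returns a dict mapping suspicious strategies to a short note for designers.
    """
    plays, wins = Counter(), Counter()
    for strategy, player_won in results:
        plays[strategy] += 1
        wins[strategy] += int(player_won)

    flags = {}
    for strategy, n in plays.items():
        if n < min_plays:
            continue  # too little data to say anything
        rate = wins[strategy] / n
        if rate >= high:
            flags[strategy] = "possible exploit"
        elif rate <= low:
            flags[strategy] = "game may be too hard"
    return flags


# Invented play data for the example.
results = (
    [("wall_clip", True)] * 25
    + [("frontal_assault", False)] * 30
    + [("balanced", True)] * 10
    + [("balanced", False)] * 10
    + [("rare_trick", True)] * 3
)
flags = flag_outliers(results)
print(flags)
```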

In the end, we propose the Borg not only as a safeguard against bad memory, but as a staging ground for better learning, and better dissemination of what is being learned for the shared benefit of all players.

Of course, we welcome your questions, comments, and thoughts on this article.
