If you are like me, as old as the Gulf Wars, you probably started programming in a managed language like Java, Ruby, Python or C#. Like many before you, you didn't really know what the language was doing with your objects: you created them when you needed them and forgot about them.

As programmers gain experience, they learn more and more about what happens behind the facade, about Garbage Collection and memory management in general. Sadly, I see many programmers with half-right knowledge and wrong conclusions, especially in the field of Garbage Collection and performance. I have seen attempts to "optimize" C# code which, if anything, slowed the program down. So let us take a closer look at modern Garbage Collectors, how they work and what problems they are trying to solve.

I am going to explain the specific Java implementation, but don't worry, most collectors work in a fairly similar way.

Garbage Collection isn't a beginner's topic, so I assume you have a decent knowledge of your programming language of choice. I also assume you know what heaps and stacks are, how you use them in C++ and how pointers work. If you feel unsure about any of those topics, you should probably read this article later. Unless you are writing performance-critical code, you can live a happy programmer's life without knowing anything about Garbage Collection. So take your time, this article won't run away.

Reference Counting, or why would I need a Garbage Collector?

Reference Counting is maybe the oldest strategy for finding unused resources. It is still widely used in C/C++ programs and OS code. The strategy does exactly what the name implies: it counts how many references a resource has. When the count drops to zero, the resource can be cleaned up, usually by the thread that decreased the counter to zero. It is a beautifully simple design. The amount of cleanup a thread has to do before resuming is usually very small, so there are no noticeable "hiccups" for the user. It appears as if the program runs seamlessly. Sounds great, but sadly Reference Counting also has its downsides:

- Reference Counting is rarely supported at the language level. The compiler doesn't help to enforce correct increments and decrements of the counters. Nothing stops the programmer from creating memory leaks.

- Free space needs to be managed, typically by a Freelist that is able to merge neighboring free areas. Allocating a new object also takes time, since the program has to go through the list and find an area that is big enough.

- Cyclic dependencies are hard to find. There are solutions to this problem, like weak pointers in C++, but the programmer has to identify the cyclic dependency and resolve it manually, or the program has a memory leak.

- The Heap tends to fragment as time goes on. It is possible to make it less of a problem by allocating as much on the stack as possible, like you can in C++. But when you are developing a server application that should run 24/7, fragmentation can become a real issue.
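To make the cyclic dependency problem concrete, here is a minimal sketch of hand-written Reference Counting. Java has no built-in reference counts, so the class RefCounted and its methods retain, release and pointTo are names I made up for illustration, and "freeing" a resource is simulated by decrementing a counter of live objects.

```java
// Hypothetical illustration: the counter is maintained by hand, the way
// a C/C++ program without smart pointers would have to do it.
final class RefCounted {
    static int liveObjects = 0;   // resources that have not been freed yet

    private int refCount = 0;
    RefCounted partner;           // a counted reference to another resource

    RefCounted() {
        liveObjects++;
    }

    // Call whenever a new reference to this object is stored somewhere.
    void retain() {
        refCount++;
    }

    // Call whenever a reference goes away; frees the object at zero.
    void release() {
        if (--refCount == 0) {
            liveObjects--;            // "free" the resource
            if (partner != null) {
                partner.release();    // drop the reference we were holding
                partner = null;
            }
        }
    }

    // Stores a counted reference from this object to other.
    void pointTo(RefCounted other) {
        other.retain();
        partner = other;
    }
}

final class RefCountDemo {
    public static void main(String[] args) {
        // Acyclic case: a -> b. Releasing a frees both objects.
        RefCounted a = new RefCounted();
        RefCounted b = new RefCounted();
        a.retain();       // a local variable holds a
        a.pointTo(b);     // a holds b
        a.release();      // a drops to zero and releases b as well
        System.out.println("live after acyclic release: " + RefCounted.liveObjects);

        // Cyclic case: c <-> d. The counters never reach zero.
        RefCounted c = new RefCounted();
        RefCounted d = new RefCounted();
        c.retain();       // a local variable holds c
        c.pointTo(d);
        d.pointTo(c);     // closes the cycle, c now has a count of 2
        c.release();      // the count drops to 1, not 0: both objects leak
        System.out.println("live after cyclic release: " + RefCounted.liveObjects);
    }
}
```

In the second case nothing outside the cycle references c or d any more, yet neither counter ever reaches zero. That is exactly the leak the programmer would have to break manually, for example with a weak (uncounted) reference in one direction.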

Simple Mark and Sweep Garbage Collector

Java's Garbage Collectors are built on a Mark and Sweep algorithm. Every time the GC kicks in, all application threads running on the JVM get suspended. The GC then cleans the heap in two phases:

- Mark Phase: The GC marks all objects that are reachable

- Sweep Phase: The GC sweeps all unreachable objects

Fair enough, but how does the GC actually find unreachable objects?

How to find reachable Objects, the Root Set

Think of the objects currently in memory as a graph: each node represents an object and each edge a reference. All objects reachable from the Root Set should stay in memory. So if you start at the Root Set and traverse each edge, you find all reachable objects; the mark phase is basically a graph traversal. An object is known to be reachable as soon as it has been visited once, so the GC marks each visited object with a flag.

The last missing puzzle piece is what the Root Set actually contains. The following references belong to the Root Set:

- References on the Callstack (A stackVar = new A(); ...)

- Static, global object references

- Very short-lived references (return new Integer(3);)

- References held by native code. Through the JNI (Java Native Interface), Java allows the invocation of C/C++ code, which is also able to allocate Java objects. Those are also part of the Root Set
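The mark phase described above can be sketched as a plain graph traversal. This is only an illustration under simplifying assumptions: HeapObject and Marker are hypothetical names, the Root Set is handed in as a list, and a real collector works on raw memory rather than on Java collections.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical object model: every object knows its outgoing references
// and carries the mark flag.
final class HeapObject {
    final int id;
    final List<HeapObject> references = new ArrayList<>();
    boolean marked = false;

    HeapObject(int id) {
        this.id = id;
    }
}

final class Marker {
    // Marks everything reachable from the root set and returns how many
    // objects were marked.
    static int mark(List<HeapObject> rootSet) {
        Deque<HeapObject> pending = new ArrayDeque<>(rootSet);
        int markedCount = 0;
        while (!pending.isEmpty()) {
            HeapObject current = pending.pop();
            if (current.marked) {
                continue;               // already visited, this also ends cycles
            }
            current.marked = true;
            markedCount++;
            pending.addAll(current.references);
        }
        return markedCount;
    }
}
```

Note that the flag check is what makes cycles harmless here: a cluster of objects that only reference each other is simply never visited, no matter how many internal references it has.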

Sweep the Heap

Now it comes to actually cleaning up the heap. The GC has to somehow walk through the whole heap, visiting each object. How does it do that? The GC knows the starting address of the heap and it knows the heap size. The only other thing it needs is the size of each individual object, so it knows where an object starts and where to look for the next one. The object size and the mark flag are typically stored as meta information in each object.
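Here is a rough sketch of such a heap walk. The layout is an assumption made purely for illustration: the heap is an int array, every block starts with a two-word header (its size in words, then its state), and the blocks cover the array without gaps. Sweeper and its constants are hypothetical names.

```java
final class Sweeper {
    static final int SIZE = 0;    // header slot: block size in words, header included
    static final int STATE = 1;   // header slot: FREE, UNMARKED or MARKED
    static final int FREE = 0, UNMARKED = 1, MARKED = 2;

    // Walks the heap from its start address to its end and frees every
    // object the mark phase did not reach. Returns the words reclaimed.
    static int sweep(int[] heap) {
        int reclaimed = 0;
        int address = 0;
        while (address < heap.length) {
            int size = heap[address + SIZE];
            int state = heap[address + STATE];
            if (state == UNMARKED) {
                heap[address + STATE] = FREE;       // unreachable: becomes free space
                reclaimed += size;
            } else if (state == MARKED) {
                heap[address + STATE] = UNMARKED;   // survivor: reset flag for the next cycle
            }
            address += size;                        // the size tells us where the next object starts
        }
        return reclaimed;
    }
}
```

Notice that the freed blocks stay wherever they happened to be. Something like a Freelist still has to keep track of them, which is where the trouble in the next section comes from.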

Now we know how to find objects and we can clean them up. Awesome, let's have a look at how we improved compared to the Reference Counting strategy:

Allocation, STW Phases and Other Bad Habits

But how does it find space for a new object? Sadly, we still depend on a Freelist for managing our free space. Even worse, now every object is allocated on the heap. Not only do we still have fragmentation issues, we made them worse.

If that isn't enough, we now have Stop The World (STW) phases: the time span from the GC kicking in to it giving control back to the actual program is very noticeable. That is pretty bad if you are the provider of a streaming service, or playing a video game. Note that the Mark and Sweep GC is doing the same work as Reference Counting; the only difference is when and how much each strategy cleans up.

But what do we get? The GC automatically solves cyclic dependencies: if a cluster of objects isn't reachable, it can be deleted no matter how many references the objects have among themselves. The GC also hides memory management from the programmer, so it is one thing less he or she has to worry about.

That sounds great, but the Mark and Sweep GC achieved it by making everything else worse. If you think this can't be worth it, then you are right! For the record, the first experimental Garbage Collectors worked this way. I don't know if they were ever put into a production system in this form... I hope not. They were devastatingly impractical and required a reboot after five hours or so, because the program managed to fragment the heap to hell.

The problem does not lie in the actual Mark and Sweep algorithm itself. It is nice and tidy and is used in every Garbage Collector I know of. So let us analyze the problem we are trying to solve: how many objects are allocated, and when exactly do they become garbage?

Object Lifetime

Typically a program allocates some objects at the start which stay alive during the whole runtime. There are also many objects that become useless shortly after their creation. The following diagram shows what a typical program usually does. The axes are a bit puzzling, but think of the x axis as the age of a byte and the y axis as the number of bytes of each age. For now, ignore the dashed lines.

What does this mean?

The average computer program tends to have three different classes of objects. By far the largest number of objects die shortly after they were created. Then follows the middle class, still moderate in size, whose objects stay useful for a little while. Lastly, we have the old class. It has fewer objects than the other classes, but they stay in memory for a long while, some even as long as the program runs.

Now it becomes clear why the simple Mark and Sweep GC fragmented even worse. At the start of the program, short-, medium- and long-lived objects get allocated at roughly the same time. After a second, a large number of bytes have already become garbage, but they are spread out between the medium- and long-lived objects. In C++, for example, short-lived objects could at least sometimes be allocated on the stack.

As you see, in order to make a "good" Garbage Collector, we need to handle the classes differently. It makes sense to clean up the short-lived objects regularly and make space for new ones. On the other hand, we should be able to leave the long-lived objects alone as long as possible, since only few objects die once they are old. It may not surprise you, but modern Collectors can do that!

When Java only cleans up young objects, it calls it a Minor Collection and when it cleans up the young and the old objects, it calls it a Major/Full Collection. But how do we only search through young objects? And how can we take care of fragmentation?

The Generational GC

So this should be it, the solution to all our problems.

It is still a Mark and Sweep GC, but far more sophisticated. This GC uses Minor and Major Collections and it splits the Heap into five different Generations or Spaces. Again, these are the Java-specific names; other Garbage Collectors work similarly but use different names:

- Eden Space: Here is where every object will be created. Every minor collection completely clears out the Eden Space.

- Survivor Space 1 and 2: Both spaces have to be exactly the same size. As the name implies, the survivors of a minor collection get copied here. Why exactly we need two Survivor spaces becomes clear when we talk about aging. After every Minor Collection, one Survivor Space is completely empty.

- Old Space (or Tenured Generation): When an object has lived long enough, it gets promoted to the Old Space. Here, it can only get cleaned out by a Major Collection.

- Permanent Space: Ordinary collections do not clean up here, that is why it is called permanent. You are also unable to allocate here yourself; this space is used by the JVM to store class metadata and other implementation-specific stuff. (HotSpot removed this space in Java 8 and replaced it with Metaspace.)

Now let us have a look at the Generational GC in detail:

Allocation

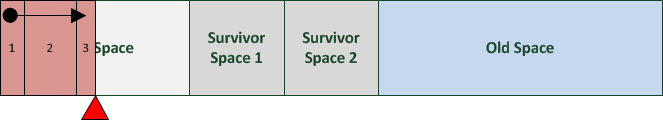

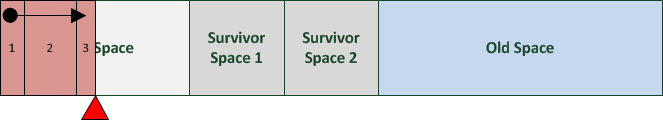

As we talked about in Reference Counting, we don't want a Freelist to manage our free space. But we do have our heap divided into several different spaces, so we can use a "Free" Pointer for each space. Since a space such as Eden is emptied completely by a collection, its free memory is always one contiguous block. As the name suggests, the pointer marks the beginning of that free space:

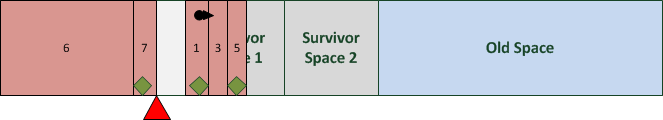

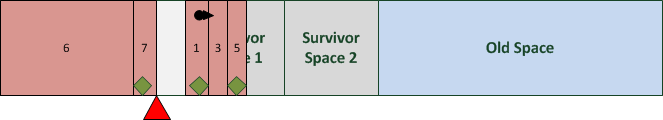

The red squares are objects, the black arrows are references to other objects and the bright red triangle is the ominous free pointer.

New objects are always allocated in the Eden Space, unless they are too big for it, in which case the JVM allocates them directly in the Old Space.
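Allocation with a free pointer is often called bump-pointer allocation, and a minimal sketch shows why it is so cheap. The class BumpSpace and its methods are names I made up for illustration; a real JVM works on raw memory, not on an int offset.

```java
// Hypothetical model of one space (for example Eden) with a free pointer.
final class BumpSpace {
    private final int capacity;   // size of the space in bytes
    private int free = 0;         // the "Free" pointer: offset of the first unused byte

    BumpSpace(int capacity) {
        this.capacity = capacity;
    }

    // Returns the start offset of the new object, or -1 if it does not fit
    // and a collection has to make room first.
    int allocate(int size) {
        if (size > capacity - free) {
            return -1;
        }
        int address = free;
        free += size;             // "bump" the pointer past the new object
        return address;
    }

    // After a collection has emptied the space, it is reused from the start.
    void reset() {
        free = 0;
    }
}
```

Compared to a Freelist there is nothing to search: allocating is one comparison and one addition, no matter how big the object is.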

Minor Collection

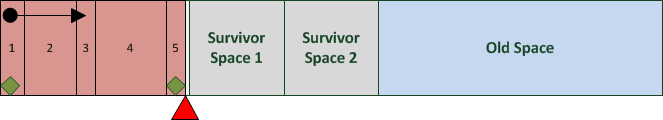

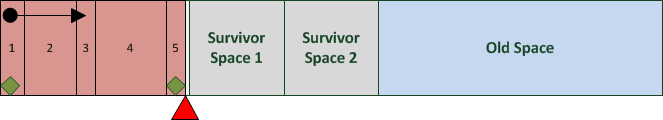

Now, let us assume that the Eden Space is getting full. The Garbage Collector has to perform a Minor Collection:

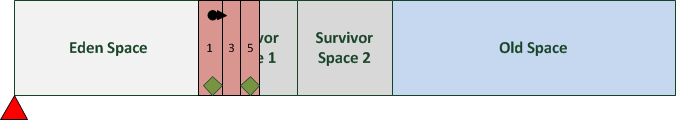

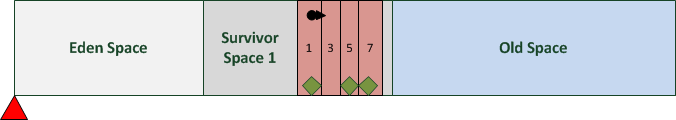

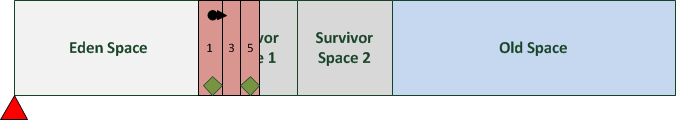

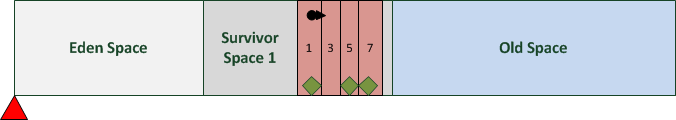

Each green diamond means that this object is part of the root set. Now, the Garbage Collector goes through the root set and marks all reachable objects. In this case, it marks objects 1, 3 and 5. It then copies the still-reachable objects into one of the survivor spaces:

After each Minor Collection, the Eden Space and one of the Survivor Spaces have to be empty. This gets important when we have a look at when the Generational GC runs out of memory.

All references to the surviving objects have to be adjusted, otherwise the reference in object 1 would point to something completely different, which we obviously don't want to happen.
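The copying and the reference adjustment can be sketched roughly like this. It is a simplification under several assumptions: Obj and MinorCollector are hypothetical names, every object has at most one outgoing reference, and the old-to-new mapping lives in a separate table, whereas a real collector would typically store a forwarding pointer in the old copy itself.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

final class Obj {
    final int id;
    Obj reference;    // at most one outgoing reference, to keep the sketch short

    Obj(int id) {
        this.id = id;
    }
}

final class MinorCollector {
    // Copies everything reachable from roots into a fresh survivor space.
    // The roots list is updated in place so that it points at the copies.
    static List<Obj> collect(List<Obj> roots) {
        List<Obj> survivorSpace = new ArrayList<>();
        Map<Obj, Obj> forwarding = new IdentityHashMap<>();   // old location -> new location
        for (int i = 0; i < roots.size(); i++) {
            roots.set(i, copy(roots.get(i), survivorSpace, forwarding));
        }
        // Adjust the references inside the copies. The survivor space may
        // grow while we scan it, which is how the traversal continues.
        for (int scan = 0; scan < survivorSpace.size(); scan++) {
            Obj moved = survivorSpace.get(scan);
            if (moved.reference != null) {
                moved.reference = copy(moved.reference, survivorSpace, forwarding);
            }
        }
        return survivorSpace;
    }

    // Copies an object once; later calls return the existing copy.
    private static Obj copy(Obj old, List<Obj> survivorSpace, Map<Obj, Obj> forwarding) {
        Obj moved = forwarding.get(old);
        if (moved == null) {
            moved = new Obj(old.id);
            moved.reference = old.reference;   // still points into Eden, fixed during the scan
            forwarding.put(old, moved);
            survivorSpace.add(moved);
        }
        return moved;
    }
}
```

Garbage is never touched in this scheme: only reachable objects get copied, and everything left behind in Eden is discarded in one go.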

The Garbage Collector doesn't have to initialize the whole heap with zeros. In Java, every field of a new object has to hold its default value (zero, false or null) before the constructor runs. It is not specified whether the Garbage Collector or the new operator is responsible for this.

Aging

As you recall from Object Lifetime, an object is less likely to become garbage the older it gets. Having an object die in Eden or the Survivor spaces is far cheaper than in the Old Generation, so the Garbage Collector tries to keep it out of the Old Space as long as possible, which makes it less likely to die in the Old Generation. This process is called Aging.

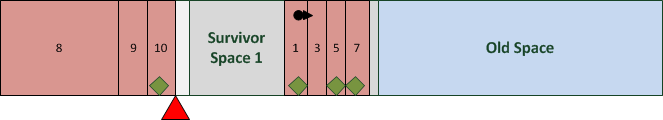

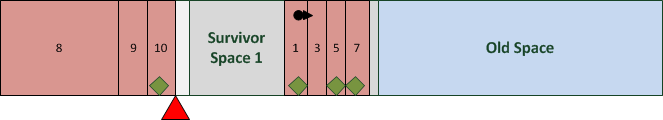

Let us go back to our example, and imagine that the Eden Space is getting crowded again. The next new object doesn't fit in the Eden Space, so the Garbage Collector performs another Minor Collection:

The mark phase now reaches objects 1, 3, 5 and 7. All surviving objects are copied into the free Survivor Space and all other objects get cleaned out.

This can go on for a while: the Garbage Collector can perform several Minor Collections and swap the objects from one space to the other each time. This sounds like a very expensive operation, and it can get out of hand when objects get promoted too late, or when the Eden Space is too small and Minor Collections happen often. So aging is a good optimization if the Garbage Collector has enough memory to work with. We can start to see a trend in this Generational strategy: the more memory we have, the less overhead memory management causes.

If an object can't fit in a survivor space, it gets promoted directly to the Old Generation.

Promotion to Old Generation

There are two reasons for a Garbage Collector to promote objects:

- Objects in the Survivor Spaces are getting older, their chances of becoming garbage have sunk dramatically.

- The Survivor Space is getting full.

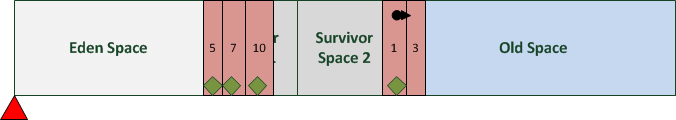

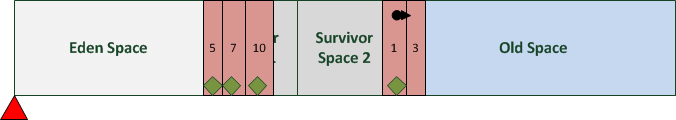

Let us have a look at what happens when the survivor space is getting full:

The mark phase reaches objects 1, 3, 5, 7 and 10. But you'll quickly see that they don't fit in one Survivor space. Somebody has to get promoted. The Generational GC always promotes the oldest objects first. In this example, objects 1 and 3 are moved to the old space.

It is possible to tune how old an object should get before it gets promoted.
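The two promotion rules can be put into a small sketch. Everything here is an assumption for illustration: YoungObject and Promotion are made-up names, all objects are treated as equally large so the survivor capacity is simply a number of objects, and maxAge stands in for the tunable age limit (on HotSpot the corresponding option is -XX:MaxTenuringThreshold).

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class YoungObject {
    final int id;
    int age = 0;    // number of Minor Collections survived so far

    YoungObject(int id) {
        this.id = id;
    }
}

final class Promotion {
    // Decides which reachable young objects move to the Old Space.
    // Promoted objects are removed from reachable and returned; whatever
    // remains in reachable goes into the free Survivor Space.
    static List<YoungObject> promote(List<YoungObject> reachable,
                                     int survivorCapacity, int maxAge) {
        List<YoungObject> promoted = new ArrayList<>();
        for (YoungObject object : reachable) {
            object.age++;                   // survived one more collection
        }
        // Oldest first, so both rules below pick the oldest objects.
        reachable.sort(Comparator.comparingInt((YoungObject o) -> o.age).reversed());
        while (!reachable.isEmpty()
                && (reachable.get(0).age >= maxAge            // rule 1: old enough
                    || reachable.size() > survivorCapacity)) { // rule 2: survivor space too full
            promoted.add(reachable.remove(0));
        }
        return promoted;
    }
}
```

With five reachable objects and room for three in the Survivor Space, the two oldest objects are promoted even though they have not reached the age limit yet, which matches the example above.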

Fragmentation in Old Space

We have looked at the Minor Collection in detail and can conclude: It is free from freelists and fragmentation. Awesome! But what about the Old Space? This is still the same as the Simple Mark and Sweep GC algorithm. So what magic concept can we use here?

Since we try to let most of the objects die in Eden and the Survivor spaces, Major Collections are rarer and we can afford to run an expensive compacting algorithm here. While there are other reasons why managing Old Space is expensive, this is the most obvious and most important to know about. Any other reasons are beyond the scope of this article.

This is not important for a developer, but very interesting to think about: How much memory does a Major Collection have at its disposal? Think about it, a major collection is called when the Heap has almost run out of free space. The Major Collection has almost no Memory to work with.

OutOfMemoryException

Now the final question: when does the heap actually run out of memory? Obviously, we would like the heap to count as full only when, well duh, it is full, when we can't allocate any more space. Sadly, this isn't the case. Let us make a freak example: every object you allocate is 1 MiB in size, and you have 4 MiB in Eden, 2 * 0.5 MiB for Survivors and 5 MiB for Old Space. In total, your heap is 10 MiB in size, which on paper is enough for 10 objects.

Your program needs 7 objects in the heap at once, and it regularly allocates a new 1 MiB object. Before it does so, it removes the reference to an old object. Can the program run without an OutOfMemoryException (which Java actually reports as an OutOfMemoryError)?

The answer is a clear yes and no! In theory, at the time of the crash, the Eden Space is full with 4 objects. The Old Space contains 3 garbage objects and 2 live ones. A survivor space cannot hold even a single object. The Generational GC has to clean out the Eden Space completely, but there is no space for all 4 objects, even after a Major Collection. So the Garbage Collector has no other choice but to hoist the white flag and throw an OutOfMemoryException.

But it CAN work. The Oracle implementation, for example, has a smarter Eden Space: it doesn't clean out the whole Eden Space if doing so would force an OutOfMemoryException. So it can work, but don't count on it.

Common Misconceptions

Newer programmers especially seem to struggle with this. They hear about Garbage Collectors and their overhead, and conclude that good code generates as little garbage as possible. They try to keep objects in memory longer than they should and maybe even dislike the Immutable Pattern.

It is true that if you are going to use an object again later anyway, you should probably reuse it, but don't keep objects in memory just because you might use them at some point in the future. With modern garbage collectors, short-lived objects produce much less overhead than long-lived ones. The Collector might need to swap a long-lived object around in the Survivor spaces or, if you are unlucky, it might even get promoted. Then you really have produced unnecessary overhead. So don't hesitate to throw away what you don't need; it will only matter if you are allocating and throwing away megabytes. If you are doing that, no matter whether you are using a Garbage Collector or not, you should rethink your program logic.

Performance

When I read people arguing about why C++ has better performance than higher-level languages, I always read one sentence that says: "because it doesn't use a Garbage Collector". I find that a bit irritating, because in general it is not true. Let us discuss Garbage Collectors and Performance.

First, we should think about what we mean when we say performance, there are mainly four factors when we talk about memory management:

- Memory Footprint

- Stop the World Phases

- Allocation Overhead

- Throughput

Memory Footprint

The Memory Footprint is generally worse when we use a Garbage Collector. As we concluded previously, in extreme cases both Survivor Spaces and big parts of the Eden Space are free but the Garbage Collector still runs out of memory. In other words, it uses more memory than it could.

Stop The World Phases

Here too the Garbage Collector performs worse than the simple Reference Counting strategy. This is negligible for applications like E-Banking Servers or the average desktop program. For time-critical programs like Streaming Services or Games, this can be a dealbreaker.

That doesn't mean Garbage Collectors are unsuitable for time-critical applications. Thanks to multicore processors, tuning possibilities and cheap RAM, Stop the World Phases can be reduced to unnoticeable lengths.

Allocation Overhead

Thanks to the Generational GC, we don't need to search through a Freelist for an adequately sized memory block. We always know where to allocate the next object, no matter how big it is. Allocation is as cheap as it gets.

Throughput

First of all, throughput is measured in how much time the program spends cleaning up memory compared to how much time it is actually running. I think this is the most counter-intuitive point of all, but a Garbage Collector tends to spend less time cleaning up than the accumulated time in a Reference Counting program.

A Garbage Collector has a favorable memory access pattern, plus the more garbage there is, the less work has to be done, because a copying collection only touches the objects that are still alive. A Garbage Collector gets more and more efficient the more memory it has to take care of. Throughput tends to scale with the memory available, which makes it very favorable for Service Providers. When the load increases, you can add memory and handle it to some degree.

Standard Configuration

This is Java implementation-specific stuff. I don't know anything about the standard configuration of other Garbage Collectors, but they very likely have a similar strategy behind them:

If you have installed Java on a desktop computer, the JVM sets the ratio New Generation/Old Generation to 1/3. The Old Space makes up 3/4 of the total heap size, while the Eden Space and the two Survivor Spaces only get 1/4. Why is that the case? For throughput, the Eden Space should be generously sized, so that short-lived objects die there and fewer objects get promoted into the more expensive Old Space.

As we saw before, the Collector throws an OutOfMemoryException if it doesn't have enough space to clean out the Eden Space completely. On the average desktop, memory tends to be more scarce, since it has to be shared between many other programs. With a big Old Generation, the JVM sacrifices speed for a more efficient memory footprint. This is something you don't have to worry about if you are writing an everyday application. But if you are implementing a Server Application or a Computer Game, fiddling with the Garbage Collector settings may be worth your while.
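To get a feeling for what such ratios mean in bytes, here is a small arithmetic sketch. HeapLayout is a made-up helper, not a JVM API. The old-to-new ratio of 3 is the one described above; the Eden-to-Survivor ratio of 8 in the usage note is purely an assumed value for illustration. On HotSpot, these two ratios correspond to the options -XX:NewRatio and -XX:SurvivorRatio.

```java
// Hypothetical helper that splits a heap into the spaces discussed above.
final class HeapLayout {
    final long eden;       // size of the Eden Space
    final long survivor;   // size of ONE Survivor Space (there are two)
    final long old;        // size of the Old Space

    HeapLayout(long heapSize, int oldToNewRatio, int edenToSurvivorRatio) {
        // New Generation = Eden + both Survivor Spaces.
        long young = heapSize / (oldToNewRatio + 1);
        this.old = heapSize - young;
        // Eden is edenToSurvivorRatio times as big as one Survivor Space.
        this.survivor = young / (edenToSurvivorRatio + 2);
        this.eden = young - 2 * survivor;
    }
}
```

For a heap of 4000 MiB with the ratios 3 and 8, this gives 3000 MiB of Old Space, 800 MiB of Eden and two Survivor Spaces of 100 MiB each.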

The End

That's about it, these are the basics of modern Garbage Collectors. This is all you need to know for most of your programming life. But the road doesn't end here, there are many more advanced topics to talk about: programming pitfalls with Garbage Collection, parallel and concurrent implementations and, maybe most interesting of all, Garbage Collector Tuning.

I am certainly going to expand on this topic at a later time and date. But first I want to write on a different topic and give Garbage Collection a small rest.

References

Java Garbage Collector Tuning

Article Update Log

6 Jun 2013: Revised "OutOfMemoryException"

9 May 2013: Revised "Reference Counting, or why would I need a Garbage Collector?" and Introduction.

30 Apr 2013: Initial release
