Once, tasked with making the best game ever, a young programmer spent the day getting the game window to display on-screen. Once it displayed, he was eager to know how fast it was rendering, so he decided to show the number of frames-per-second in the upper-left corner of the screen. "Ah, three thousand FPS", he said, licking his lips with glee, "that will be plenty for my game."

The next day, he displayed his first triangle on the screen. A single white triangle, untextured, unlit, unshaded, against a black background... and he stared in horror as his FPS dropped by over 1000 FPS. This article will explain how it happened, and why our young programmer friend shouldn't be worried.

Different forms of measurement

Many programmers coming from a gaming background quickly adopt FPS as the familiar method used to measure performance - but FPS measurements can be deceptive if we're not careful, as we'll see in a bit. But first, let's remember how FPS is measured, what it really is, and the alternative we have in our toolbox.

Frames per second

Frames per second, the performance measurement most familiar to gamers, counts the number of times in a single second that a game updates the graphics it is displaying. Each update is called a 'frame'.

To get the number of frames, you can use a variable and add one to that variable every time the game is done displaying the graphics for that frame. Then, after a full second of frames, you can display the value, and reset the variable to begin counting again.
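The counting scheme just described can be sketched in a few lines. This is only an illustration: `FpsCounter` and `frame_done` are hypothetical names, and the caller is assumed to pass in the current time in seconds (in a real loop that might come from `time.perf_counter()`).

```python
class FpsCounter:
    """Counts finished frames and reports the total roughly once per second."""

    def __init__(self, start_time: float) -> None:
        self.window_start = start_time  # when the current counting window began
        self.frames = 0                 # frames finished in the current window
        self.last_fps = 0               # most recently reported count

    def frame_done(self, now: float) -> bool:
        """Call once a frame has been displayed.

        Returns True when a full second has passed and last_fps was refreshed.
        """
        self.frames += 1
        if now - self.window_start >= 1.0:
            self.last_fps = self.frames   # value to show on screen
            self.frames = 0               # reset and begin counting again
            self.window_start = now
            return True
        return False
```

Note that the value only changes once per window, so a single slow frame is hidden inside the group it was counted with.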

One of the problems is that you aren't measuring individual frames, but are measuring groups of frames at a time. Those groups don't have a fixed size - sometimes you measure 59 frames before one second is up, sometimes you measure 73 frames - and the more frames that you are measuring at once, the less each frame actually 'weighs' in performance costs. Your 50th frame is less valuable than your 5th frame. One frame gained in one location is not equal to another frame gained in another location.

Microseconds per frame

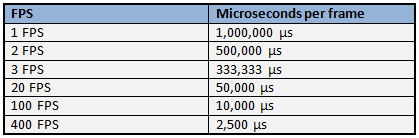

The common alternative to FPS is to measure each frame individually. Because each frame takes less than one second, you need a unit of time measurement that is small enough to accurately measure frames. Milliseconds (which are 1/1000th of a second) are often used, but computers run so fast that even milliseconds can't accurately measure frames - though they do give a decent estimate. Instead, microseconds, being 1/1000th of a millisecond (i.e. 1/1,000,000th of a second), provide accurate enough measurements to let us make informed decisions about the speeds of our games.

How it's measured

At the end (or beginning) of every frame, get the current time and compare it to the time gotten at the end (or beginning) of the previous frame. The difference between the two times is how long this frame took.

Because frames occur potentially hundreds of times a second, you'll want to update the displayed frame-time only about once or twice a second. Normally, you average the cost of the frames over a period of time and display that average. However, it's beneficial as a debugging and optimizing tool to also display the highest and lowest frame-times of the past few seconds.
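A minimal sketch of that bookkeeping follows. The names `FrameTimer`, `end_frame`, `report` and `now_us` are made up for this example, and it makes one simplifying assumption: the lowest and highest values cover only the frames since the previous report, not a longer trailing window.

```python
import time


class FrameTimer:
    """Records per-frame durations in microseconds and summarises them."""

    def __init__(self) -> None:
        self.previous_us = None  # timestamp taken at the end of the previous frame
        self.samples = []        # frame durations collected since the last report

    def end_frame(self, now_us: int) -> None:
        """Call at the end of every frame with the current time in microseconds."""
        if self.previous_us is not None:
            self.samples.append(now_us - self.previous_us)
        self.previous_us = now_us

    def report(self):
        """Return (average, lowest, highest) frame time, then clear the samples.

        Returns None if no complete frame has been measured yet.
        """
        if not self.samples:
            return None
        stats = (sum(self.samples) / len(self.samples),
                 min(self.samples),
                 max(self.samples))
        self.samples.clear()
        return stats


def now_us() -> int:
    """Current monotonic clock reading in whole microseconds."""
    return time.perf_counter_ns() // 1000
```

In a game loop you would call `timer.end_frame(now_us())` every frame and `timer.report()` once or twice a second to refresh the on-screen numbers.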

One difference between FPS and microseconds-per-frame is that microseconds measure the cost of your frames. With FPS, higher FPS is the goal. With microseconds you want the cost to be as low as possible, so lower numbers are desired.

Comparisons in measurement

('us' is a common plain-text abbreviation for microseconds; the proper symbol is 'µs', written with the Greek letter mu.)

You can see that the more frames you have, the less every frame costs in microseconds. This means that each frame gained from a lower framerate is more valuable in performance than each frame gained from a higher framerate. This is sometimes a source of confusion. When your FPS is only 20, losing 1 frame means taking 2631 microseconds longer per frame (50,000 microseconds at 20 FPS versus roughly 52,631 at 19 FPS). But when your FPS is 200, taking 2631 microseconds longer per frame (5,000 microseconds growing to 7,631) means you lose almost 70 FPS (!), which can be startling and seem more dramatic despite it being the exact same amount of time.
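The conversion between the two units is a single division, so the figures above are easy to check. The helper names `us_per_frame` and `fps_from_us` are invented for this sketch, and the list of frame rates printed at the end is an arbitrary sample.

```python
def us_per_frame(fps: float) -> float:
    """Microseconds spent on each frame at a given frame rate."""
    return 1_000_000 / fps


def fps_from_us(frame_us: float) -> float:
    """Frame rate that results from a given per-frame cost in microseconds."""
    return 1_000_000 / frame_us


# Dropping from 20 FPS to 19 FPS: how much longer does each frame take?
cost_us = us_per_frame(19) - us_per_frame(20)          # a little over 2631 us

# Add that same cost to every frame of a game running at 200 FPS.
slower_fps = fps_from_us(us_per_frame(200) + cost_us)  # roughly 131 FPS
fps_lost = 200 - slower_fps                            # just under 70 FPS

# A small FPS-to-microseconds comparison for a few sample frame rates.
for fps in (10, 20, 30, 60, 100, 200, 1000, 3000):
    print(f"{fps:>5} FPS = {us_per_frame(fps):>9.0f} us per frame")
```

The same block of time shows up as one frame at the low end and dozens of frames at the high end, which is why frame time is the steadier number to optimize against.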

Let's revisit our young programmer friend from the beginning of this article. His framerate dropped from 3000 FPS to 2000 FPS from one triangle. If we convert our FPS to microseconds-per-frame, 3000 FPS becomes 333 microseconds, and 2000 FPS becomes 500 microseconds, making it actually an increase of 166 microseconds per frame, rather than the more dramatic and scary loss of one thousand FPS.

But wait, one triangle still costs too much!

We only have 1,000,000 microseconds per second of time. The young programmer drew one triangle, and it cost him 166 microseconds. "That's terrible!", he moans, "That means if I want to run at 60 FPS, my one million microseconds will only be able to draw 100 triangles a frame..."
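The young programmer's arithmetic can be written out as below. It is only a sketch of his naive estimate, which assumes every triangle costs as much as the first one did; the variable and function names are made up for illustration.

```python
MICROSECONDS_PER_SECOND = 1_000_000


def us_per_frame(fps: float) -> float:
    """Time available (or spent) per frame, in microseconds, at a given frame rate."""
    return MICROSECONDS_PER_SECOND / fps


# Measured cost of the first triangle: the frame rate fell from 3000 to 2000 FPS.
triangle_delta_us = us_per_frame(2000) - us_per_frame(3000)  # between 166 and 167

# The article rounds that cost to 166 microseconds, so the same figure is used here.
TRIANGLE_COST_US = 166

# Time available for one frame at the 60 FPS target.
budget_us = us_per_frame(60)  # a little under 16,667 us

# Naive estimate: divide the frame budget by the cost of that first triangle.
naive_triangles = int(budget_us // TRIANGLE_COST_US)

print(f"frame budget at 60 FPS: {budget_us:.0f} us")
print(f"naive triangle limit:   {naive_triangles}")
```

The division itself is fine; the flaw is the assumption that the cost scales linearly with the number of triangles.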

Not exactly. Computer graphics require a lot of work transmitting information to the video card, but much of this work doesn't need to be repeated for every triangle or sprite you draw.

'Economies of Scale'

An example of an economy of scale in real-life is when a car company builds a new car factory. The factory might cost $50,000,000 to build. Additionally, it might cost $200 for each car produced. If that factory is only used to produce one single car, the total amount spent for that car (when you include the cost of the factory) is $50,000,200. If two cars are produced, the total cost for each car is $25,000,200. If fifty cars are built, the total cost for each car is $1,000,200.

Some software works in a similar manner: there might be high one-time costs for the first operation, but each additional operation costs only a meager amount.
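The car factory numbers follow from spreading the one-time cost over every unit produced. Here is a minimal sketch, with `cost_per_unit` being a name chosen just for this example:

```python
def cost_per_unit(fixed_cost: float, unit_cost: float, units: int) -> float:
    """Average cost of each unit once the one-time cost is shared between them."""
    return fixed_cost / units + unit_cost


FACTORY = 50_000_000  # one-time cost of building the factory, in dollars
PER_CAR = 200         # additional cost of each car produced, in dollars

for cars in (1, 2, 50):
    print(f"{cars:>2} car(s): ${cost_per_unit(FACTORY, PER_CAR, cars):,.0f} each")
```

As the number of units grows, the average approaches the per-unit cost and the one-time cost fades into the background.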

When rendering triangles onscreen, or displaying 2D sprites, the videocard has to reconfigure different states whenever the settings being used to draw the objects change. If, when drawing a single sprite, the videocard spends most of its time binding the texture and shader, then drawing multiple sprites that share the same shader, texture, or both saves most of the time spent on each sprite.

Theoretical example: What's the difference between drawing 1 sprite versus drawing 5 sprites?

One sprite:

- Bind shader (let's pretend it takes 20 imaginary time units, or ITUs)
- Bind texture (let's pretend it takes 10 ITUs)
- Translate to the location (1 ITU)
- Render the sprite (3 ITUs)

Total cost: 34 ITUs. Average cost per sprite: 34 ITUs.

Five sprites:

- Bind shader (all five of these draws share the same shader, so it only needs to be bound once) (20 ITUs)
- Bind texture for sprite A (10 ITUs)
- Translate to sprite location A (1 ITU)
- Render sprite A (3 ITUs)
- Sprites A and B share the same texture, so we skip rebinding the texture.
- Translate to sprite location B (1 ITU)
- Render sprite B (3 ITUs)
- Bind texture for sprite C (10 ITUs)
- Translate to sprite location C (1 ITU)
- Render sprite C (3 ITUs)
- Bind texture for sprite D (10 ITUs)
- Translate to sprite location D (1 ITU)
- Render sprite D (3 ITUs)
- Sprites D and E share the same texture, so we skip rebinding the texture.
- Translate to sprite location E (1 ITU)
- Render sprite E (3 ITUs)

Total cost for 5 sprites: 70 ITUs. Average cost per sprite: 14 ITUs.
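The two tallies above can be reproduced with a toy cost model. This is a sketch built on the article's imaginary time units, not a description of how any real graphics API charges for state changes; `draw_cost` and the shader and texture names are hypothetical.

```python
from typing import Iterable, Tuple

# Imaginary time units (ITUs) taken from the example above.
BIND_SHADER = 20
BIND_TEXTURE = 10
TRANSLATE = 1
RENDER = 3


def draw_cost(sprites: Iterable[Tuple[str, str]]) -> int:
    """Total ITUs to draw sprites given as (shader, texture) pairs, in order.

    A bind is only paid for when the shader or texture differs from the one
    already bound by the previous sprite.
    """
    total = 0
    bound_shader = None
    bound_texture = None
    for shader, texture in sprites:
        if shader != bound_shader:
            total += BIND_SHADER
            bound_shader = shader
        if texture != bound_texture:
            total += BIND_TEXTURE
            bound_texture = texture
        total += TRANSLATE + RENDER
    return total


one_sprite = [("basic", "tex1")]
five_sprites = [          # sprites A to E: A/B share a texture, as do D/E
    ("basic", "tex1"),
    ("basic", "tex1"),
    ("basic", "tex2"),
    ("basic", "tex3"),
    ("basic", "tex3"),
]

print(draw_cost(one_sprite))                            # total for one sprite
print(draw_cost(five_sprites) / len(five_sprites))      # average for five sprites
```

Changing the order of `five_sprites` so that matching textures are no longer adjacent makes the total climb, which is the whole point of the example.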

There are more setup costs than just textures and shaders, and actual costs vary depending on the hardware, as different hardware has different bottlenecks. For some videocards, it might be more expensive to change textures than to change shaders - but both will still have costs that can be avoided by using the same texture or shader for multiple sprites in a row.
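One common way to get sprites with the same shader or texture "in a row" is to sort the draw list by those settings before drawing. The sketch below only counts binds, under the assumption that draw order does not otherwise matter (overlapping or transparent sprites often do need a specific order); `count_state_changes`, `batch_by_state` and the asset names are hypothetical.

```python
from typing import List, Tuple

Sprite = Tuple[str, str]  # (shader name, texture name)


def count_state_changes(sprites: List[Sprite]) -> Tuple[int, int]:
    """Return (shader binds, texture binds) needed to draw in the given order."""
    shader_binds = 0
    texture_binds = 0
    bound_shader = None
    bound_texture = None
    for shader, texture in sprites:
        if shader != bound_shader:
            shader_binds += 1
            bound_shader = shader
        if texture != bound_texture:
            texture_binds += 1
            bound_texture = texture
    return shader_binds, texture_binds


def batch_by_state(sprites: List[Sprite]) -> List[Sprite]:
    """Group sprites by shader first, then by texture within each shader."""
    return sorted(sprites)


queue = [
    ("lit", "grass"), ("flat", "ui"), ("lit", "rock"),
    ("flat", "ui"), ("lit", "grass"), ("lit", "rock"),
]

print(count_state_changes(queue))                  # binds in submission order
print(count_state_changes(batch_by_state(queue)))  # binds after grouping
```

Sorting by shader first reflects a guess that shader changes are the more expensive ones; on hardware where textures cost more, the tuple order could be swapped.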

Conclusion

Gamers care about how fast the game runs overall, or compared to other games, and not about the speed of individual frames. FPS is a good tool for gamers to use to measure general performance of the game as a whole. Developers working to improve their games' speeds need to measure the average performance of the individual frames. This lets developers see improvements in an unchanging way, where 1 microsecond is always 1 microsecond.

Unless your FPS drops below your target framerate, you have nothing to worry about. In the meantime, consider switching your measurements to microseconds-per-frame to have more detailed information about how fast your game is actually running.

Article Update Log

June 22nd, 2013: Initial release

Good article. This is something that frequently sends beginners into fits.

Just a minor point - there's a problem with your math in the 5 sprites example. The total cost should be 70, not 50 so the average should be 14, not 10:

Shader - 20

Texture - 10

Translate - 1

Render - 3

Translate - 1

Render - 3

Texture - 10

Translate - 1

Render - 3

Texture - 10

Translate - 1

Render - 3

Translate - 1

Render - 3

Total - 70