Skeletal animation plays an important role in video games. Recent games feature many animated characters, and processing a large number of animations is computationally intensive and takes a lot of memory. To have multiple characters in a real-time scene, optimization is essential. There are many techniques for optimizing skeletal animation, and this article addresses some of them. Several of these techniques involve a lot of detail, so I only describe them in general terms and point to references for those who are eager to learn more.

This article is divided into two main sections. The first section covers optimization techniques that can be used inside an animation system. The second section takes the perspective of animation system users and describes techniques they can apply to use the animation system more efficiently. So if you are an animator or technical animator, you can read the second section, and if you are a programmer who wants to implement an animation system, you may read the first section.

This article does not cover mesh skinning optimization; it only covers skeletal animation optimization techniques. There are plenty of useful articles about mesh skinning around the web.

1. Skeletal Animation Optimization Techniques

I assume that most readers of this article know the basics of skeletal animation, so I'm not going to cover them here. To start, let's define a skeleton in character animation. A skeleton is an abstract model of a human or animal body in computer graphics. It is a tree data structure whose nodes are called bones or joints. Bones are just containers for transformations. Each skeletal animation has a set of animation tracks. Each track holds the transformation info of a specific bone. A track is a sequence of keyframes. A keyframe is the transformation of a bone at a specific time, measured from the beginning of the animation. Usually the keyframes are stored relative to a pose of the bone named the binding pose. These animation tracks and the skeletal representation can be optimized in different ways. In the following sections, I will introduce some of these techniques. As stated before, the techniques are described generally rather than in detail, since each of them could fill a separate article.

Optimizing Animation Tracks

An animation consists of animation tracks. Each animation track stores the animation related to one bone. An animation track is a sequence of keyframes where each keyframe contains translation, rotation or scale info. Animation tracks can be optimized easily from several angles. First, note that most of the bones in character animations do not have translation. For example, we don't need to move fingers or hands. They just need to be rotated. Usually the only bones that need translation are the root bone and the props (weapons, shields and so on). The other body parts do not move; they are only rotated. Realistic characters also usually do not need to be scaled. Scale is mostly applied to cartoony characters, and when animators do use scale, it is mostly uniform scale and only rarely non-uniform scale.

Based on this information, we can remove the scale and translation keyframes from the animation tracks that do not need them. Without unnecessary translation and scale keyframes, the tracks become lighter: they take less memory and less calculation. Also, if we use uniform scale, each scale keyframe can hold a single float instead of a Vector3.
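One way to picture this, as a minimal sketch rather than any particular engine's layout: a track keeps a separate key array per channel, so a bone that is never translated or scaled simply has empty arrays. The names `BoneTrack`, `RotationKey` and the others are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical key types: each channel stores only what it needs.
struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };

struct TranslationKey  { float time; Vec3 value; };
struct RotationKey     { float time; Quat value; };
struct UniformScaleKey { float time; float value; };  // one float instead of a Vec3

// One track per bone. Channels the bone never animates stay empty,
// so they cost no key memory and can be skipped during sampling.
struct BoneTrack {
    std::vector<TranslationKey>  translations;  // usually only root and props
    std::vector<RotationKey>     rotations;
    std::vector<UniformScaleKey> scales;        // usually empty for realistic characters

    bool hasTranslation() const { return !translations.empty(); }
    bool hasScale() const { return !scales.empty(); }

    // Bytes used by key data only (ignores vector bookkeeping).
    std::size_t keyBytes() const {
        return translations.size() * sizeof(TranslationKey)
             + rotations.size() * sizeof(RotationKey)
             + scales.size() * sizeof(UniformScaleKey);
    }
};
```

A finger track in this layout would fill only `rotations`, and the sampler can check `hasTranslation()` and `hasScale()` once per track instead of interpolating values that never change.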

Another technique that is very useful for optimizing animation tracks is animation compression. The best-known method is curve simplification, which you may also know as keyframe reduction. It reduces the keyframes of an animation track based on a user-defined error, so consecutive keyframes with small differences can be omitted. Curve simplification should be applied to translation, rotation and scale separately, because each has its own keyframes and value ranges, and the difference between two keys is calculated differently for each. You may read this paper about curve simplification to find out more about it.
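To make the idea concrete, here is a minimal sketch of keyframe reduction on a single scalar channel. It is a simple greedy scheme that assumes linear interpolation between the keys that remain, not necessarily the algorithm from the referenced paper; `Key`, `reduceKeys` and `maxError` are names made up for this example. A rotation channel would need a quaternion-based distance instead of `fabs`.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Key { float time; float value; };

// Linear interpolation of the segment a->b evaluated at time t.
inline float evalLinear(const Key& a, const Key& b, float t) {
    const float span = b.time - a.time;
    if (span <= 0.0f) return a.value;
    const float u = (t - a.time) / span;
    return a.value + u * (b.value - a.value);
}

// True if every key strictly between first and last is reproduced
// within maxError by interpolating first -> last.
inline bool segmentFits(const std::vector<Key>& keys, std::size_t first,
                        std::size_t last, float maxError) {
    for (std::size_t i = first + 1; i < last; ++i) {
        const float approx = evalLinear(keys[first], keys[last], keys[i].time);
        if (std::fabs(approx - keys[i].value) > maxError) return false;
    }
    return true;
}

// Greedy reduction: extend each segment as far as the error allows,
// then keep the last key before the point where it would have broken.
inline std::vector<Key> reduceKeys(const std::vector<Key>& keys, float maxError) {
    if (keys.size() <= 2) return keys;
    std::vector<Key> out;
    out.push_back(keys.front());
    std::size_t anchor = 0;  // index of the last key we decided to keep
    for (std::size_t i = 2; i < keys.size(); ++i) {
        if (!segmentFits(keys, anchor, i, maxError)) {
            anchor = i - 1;
            out.push_back(keys[anchor]);
        }
    }
    out.push_back(keys.back());
    return out;
}
```

With a small `maxError`, a run of keys that lie on one straight line collapses to its two end keys, while a key where the curve changes direction is preserved.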

One other thing to consider is how you store rotation values in the rotation keyframes. Usually rotations are stored as unit quaternions because quaternions have some good advantages over Euler angles. Storing a quaternion in a keyframe normally takes four elements. But a unit quaternion has length one, so the magnitude of the scalar part can be obtained from the vector part, and because q and -q represent the same rotation, you can always flip the sign so that the scalar part is non-negative. So the quaternions can be stored with just three floats instead of four. See this post from my blog to find out how you can obtain the scalar part from the vector part.
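As a rough sketch of the three-float storage (my own illustration, not the code from the blog post), the scalar part can be rebuilt from the unit-length constraint x^2 + y^2 + z^2 + w^2 = 1. `PackedQuat`, `packUnitQuat` and `unpackUnitQuat` are hypothetical names.

```cpp
#include <cmath>

struct Quat { float x, y, z, w; };
struct PackedQuat { float x, y, z; };  // scalar part w is dropped

// q and -q encode the same rotation, so flip the sign when w < 0.
// After that, w is known to be non-negative and can be rebuilt.
inline PackedQuat packUnitQuat(Quat q) {
    if (q.w < 0.0f) { q.x = -q.x; q.y = -q.y; q.z = -q.z; }
    return PackedQuat{q.x, q.y, q.z};
}

// Rebuild w from x^2 + y^2 + z^2 + w^2 = 1. The clamp guards against
// a slightly negative value caused by rounding.
inline Quat unpackUnitQuat(const PackedQuat& p) {
    const float ww = 1.0f - (p.x * p.x + p.y * p.y + p.z * p.z);
    return Quat{p.x, p.y, p.z, std::sqrt(ww > 0.0f ? ww : 0.0f)};
}
```

The unpacked quaternion may be the negation of the original, which is harmless for the rotation it represents but worth remembering if the keys are later compared component by component.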

Representation of a Skeleton in Memory

As mentioned in previous sections, a skeleton is a tree data structure. Animation is a dynamic process, so the bones are accessed frequently while an animation is being processed. A good technique is therefore to keep the bones sequential in memory. They should not be scattered, because of locality of reference: a sequential allocation of bones in memory is more cache-friendly for the CPU.
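A common way to get this layout, sketched here under my own assumptions, is to flatten the tree into arrays and refer to parents by index. If the bones are sorted so that a parent always comes before its children, the whole hierarchy can be resolved in one linear pass. To keep the example short, the "transform" is translation only; `Skeleton` and `localToModel` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Translation-only "transform" keeps the sketch short; a real system
// would compose full rotation/translation/scale transforms here.
struct Vec3 { float x, y, z; };

struct Skeleton {
    // parent[i] is the index of bone i's parent, or -1 for the root.
    // Bones are sorted so that a parent always precedes its children.
    std::vector<std::int16_t> parent;
};

// One linear pass over contiguous arrays: because parents come first,
// model[parent[i]] is already final when bone i is processed.
inline void localToModel(const Skeleton& skel, const std::vector<Vec3>& local,
                         std::vector<Vec3>& model) {
    assert(local.size() == skel.parent.size());
    model.resize(local.size());
    for (std::size_t i = 0; i < local.size(); ++i) {
        const std::int16_t p = skel.parent[i];
        if (p < 0) {
            model[i] = local[i];
        } else {
            const Vec3& pm = model[static_cast<std::size_t>(p)];
            model[i] = Vec3{pm.x + local[i].x, pm.y + local[i].y, pm.z + local[i].z};
        }
    }
}
```

There are no pointers to chase in this layout, so the loop walks memory front to back, which is the access pattern that caches and prefetchers handle best.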

Using SSE Instructions

To update a character animation, the system has to do lots of calculations. Most of them are linear algebra, which means most of the calculations operate on vectors. For example, bones are always being interpolated between two consecutive keyframes, so the system has to LERP between two translations and two scales, and SLERP between two quaternion rotations. There may also be animation blending, which makes the system interpolate between two or more different animations based on their weights. LERP and SLERP are calculated with these equations respectively:

LERP(V1, V2, a) = (1-a) * V1 + a * V2

SLERP(Q1, Q2, a) = sin((1-a)*t)/sin(t) * Q1 + sin(a*t)/sin(t) * Q2

Here 't' is the angle between Q1 and Q2, and 'a' is the interpolation factor, a normalized value between 0 and 1. These two equations are used frequently in keyframe interpolation and animation blending. Using SSE instructions can help you compute them efficiently. I highly recommend you check the hkVector4f class from the Havok physics/animation SDK as a reference. The hkVector4f class uses SSE instructions very well and is a very well-designed class. You can define translation, scale and quaternion types in a similar way to hkVector4f.
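Before vectorizing, it helps to have a plain scalar reference for SLERP. The sketch below follows the equation above and adds two practical guards that the equation itself does not mention: it negates one input when the dot product is negative so the interpolation takes the shorter arc, and it falls back to LERP weights when sin(t) is close to zero. The 0.9995 threshold is an arbitrary choice for this example.

```cpp
#include <cmath>

struct Quat { float x, y, z, w; };

inline float dot(const Quat& a, const Quat& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
}

// SLERP between unit quaternions q1 and q2 with factor a in [0, 1].
inline Quat slerp(const Quat& q1, Quat q2, float a) {
    float cosT = dot(q1, q2);
    // q and -q are the same rotation; negate one input so that the
    // interpolation follows the shorter arc.
    if (cosT < 0.0f) {
        q2 = Quat{-q2.x, -q2.y, -q2.z, -q2.w};
        cosT = -cosT;
    }
    float w1, w2;
    if (cosT > 0.9995f) {
        // sin(t) is close to zero here, so fall back to LERP weights
        // to avoid dividing by a tiny number.
        w1 = 1.0f - a;
        w2 = a;
    } else {
        const float t = std::acos(cosT);
        const float invSin = 1.0f / std::sin(t);
        w1 = std::sin((1.0f - a) * t) * invSin;
        w2 = std::sin(a * t) * invSin;
    }
    const Quat r{w1 * q1.x + w2 * q2.x, w1 * q1.y + w2 * q2.y,
                 w1 * q1.z + w2 * q2.z, w1 * q1.w + w2 * q2.w};
    // Renormalize to absorb rounding and the LERP fallback.
    const float invLen = 1.0f / std::sqrt(dot(r, r));
    return Quat{r.x * invLen, r.y * invLen, r.z * invLen, r.w * invLen};
}
```

A scalar version like this is also handy as a correctness check for whatever SIMD implementation replaces it later.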

Note that if you are using SSE instructions, the objects that use them have to be properly aligned in memory (16 bytes for the aligned load and store instructions); otherwise you will run into traps and exceptions. You should also consider your target platform and check how it supports these kinds of instructions.
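As a small illustration of both points, here is a sketch of LERP on four floats using SSE intrinsics from `<xmmintrin.h>`, with a scalar fallback for platforms without SSE. This is not how hkVector4f is written; `Vec4` and the `SKETCH_HAS_SSE` macro are names invented for this example.

```cpp
#include <cstddef>
#if defined(__SSE__) || defined(_M_X64) || (defined(_M_IX86_FP) && _M_IX86_FP >= 1)
#include <xmmintrin.h>
#define SKETCH_HAS_SSE 1
#else
#define SKETCH_HAS_SSE 0
#endif

// alignas(16) provides the alignment that _mm_load_ps/_mm_store_ps require.
struct alignas(16) Vec4 { float v[4]; };

// LERP(V1, V2, a) = (1-a) * V1 + a * V2 on four floats at once.
inline Vec4 lerp(const Vec4& v1, const Vec4& v2, float a) {
    Vec4 out;
#if SKETCH_HAS_SSE
    const __m128 x = _mm_load_ps(v1.v);       // aligned loads
    const __m128 y = _mm_load_ps(v2.v);
    const __m128 wa = _mm_set1_ps(a);         // broadcast a to all lanes
    const __m128 wb = _mm_set1_ps(1.0f - a);  // broadcast (1 - a)
    _mm_store_ps(out.v, _mm_add_ps(_mm_mul_ps(wb, x), _mm_mul_ps(wa, y)));
#else
    // Portable fallback for targets without SSE.
    for (std::size_t i = 0; i < 4; ++i)
        out.v[i] = (1.0f - a) * v1.v[i] + a * v2.v[i];
#endif
    return out;
}
```

The same `Vec4` can hold a translation, a scale or a quaternion, so one set of vectorized helpers covers every channel of a keyframe.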

Multithreading the Animation Pipeline

Imagine you have a crowded scene full of NPCs, each with a bunch of skeletal animations. Maybe a herd of bulls. The animation can take a lot of time to process. That time can be reduced significantly if the crowd's computation is multithreaded, with each entity's animations computed on a different thread.

Intel introduced a good solution for this in this article. It defines a thread pool of worker threads whose count should not exceed the number of CPU cores; otherwise application performance decreases. Each entity's animation and skinning calculations are treated as a job and placed in a job queue. Each job is picked up by a worker thread, and the main thread calls the render functions when the jobs are done. If you want to see this technique in action, I suggest you have a look at the Havok animation/physics documentation and study the multithreading part of the animation section. To get the docs you have to download the whole SDK here. You will also find there that Havok handles synchronous and asynchronous jobs by defining different job types.
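The job idea can be sketched with the standard library alone. This is a simplified illustration, not the Intel or Havok implementation: it spawns its workers on every call, whereas a real pool keeps them alive between frames, and an atomic counter stands in for the job queue. `Character` and `updateCrowd` are hypothetical names.

```cpp
#include <algorithm>
#include <atomic>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical per-character state; updateAnimation() stands in for
// sampling, blending, and skinning work.
struct Character {
    float time = 0.0f;
    void updateAnimation(float dt) { time += dt; }
};

// Runs one animation job per character on a small set of worker threads.
// The atomic counter plays the role of the job queue: each worker claims
// the next unprocessed character until none are left.
inline void updateCrowd(std::vector<Character>& crowd, float dt) {
    unsigned hw = std::thread::hardware_concurrency();  // may report 0
    if (hw == 0) hw = 1;
    // Never use more workers than cores, or more workers than jobs.
    const std::size_t workerCount =
        std::min<std::size_t>(hw, std::max<std::size_t>(crowd.size(), 1));

    std::atomic<std::size_t> next{0};
    auto worker = [&crowd, &next, dt]() {
        for (;;) {
            const std::size_t i = next.fetch_add(1);
            if (i >= crowd.size()) return;
            crowd[i].updateAnimation(dt);
        }
    };

    std::vector<std::thread> workers;
    for (std::size_t w = 0; w + 1 < workerCount; ++w) workers.emplace_back(worker);
    worker();  // the calling thread takes jobs too instead of idling
    for (std::thread& t : workers) t.join();
    // All jobs are done here, so it is safe to call render functions.
}
```

Each job touches only its own character, so no locking is needed inside the job. That independence is what makes per-entity animation a good fit for this pattern.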

Updating Animations

One important thing in an animation system is how it manages the update rate of a skeleton and its skinning data. Do we always need to update animations each frame? If so, do we need to update every bone every frame? This calls for a LOD manager for skeletal animations. The LOD manager decides whether to update the hierarchy or not, and it can consider different states of a character to choose its update rate. Some possible cases are listed here:

1- The Priority of the Animated Character: Some characters, like NPCs and crowds, do not have a very high priority, so you may not need to update them every frame. Most of the time they are not going to be seen clearly, so they can skip some updates.

2- Distance to Camera: If the character is far from the camera, many of its movements cannot be seen. So why should we compute something that cannot be seen? Here we can define a skeleton map for the current skeleton, select the more important bones to be updated and ignore the others. For example, when the character is far from the camera you don't need to update finger bones or the neck bone. You can just update the spine, head, arms and legs. These are the bones that can be seen from far away. This gives you a lighter skeleton and lets you skip many bone updates. Don't forget that a human skeleton can have up to 28 finger bones, and 28 bones for such a small portion of the mesh is not very efficient.

3- Using Dirty Flags for Bones: In many situations, a bone's transformation does not change between two consecutive frames. For example, the animator did not animate that bone over several frames, or the curve simplification algorithm removed consecutive keyframes that were nearly identical. In these situations, you don't need to update the bone in its local space again. As you might know, bones are first calculated in their local space based on the animation info, and then they are multiplied by their binding pose and parent transformation to be expressed in world or model space. Defining a dirty flag for each bone lets you skip the local-space calculation for bones that did not change between two consecutive frames. They are updated in their local space only if they are dirty.

4- Update Only When They Are Going to Be Rendered: Imagine a scene in which some agents are following you. You try to run away from them. The agents are not in the camera frustum, but their AI controller is monitoring and following you. Should we update their skeletons while the player can't see them? Not in most cases. So you can skip the update of skeletons that are not in the camera frustum. Both Unity3D and Unreal Engine 4 have this feature. They let you choose whether the skeleton and its skinned mesh should be updated when they are not in the camera frustum.

You might need to update skeletons even if they are not in the camera frustum. For example, you might need to shoot an object at the head of a character that is off camera. Or you may need to read the root motion data and use it for locomotion extraction. In both cases you need the calculated bone positions. In these situations you can either force the skeleton to update manually or not use this technique for that character.
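The cases above could feed a small decision function like the following sketch. Every threshold and name here is an illustrative assumption, not a value taken from Unity3D, Unreal Engine 4 or Havok. The sketch also assumes the bones are ordered so that the first `coreBones` entries are the important ones (spine, head, arms and legs).

```cpp
#include <cstddef>

// All thresholds and names below are illustrative assumptions.
struct CharacterView {
    bool  inFrustum;
    bool  highPriority;      // e.g. the player or a key NPC
    bool  forceUpdate;       // gameplay needs bone positions off-screen
    float distanceToCamera;  // in world units
};

struct UpdatePlan {
    unsigned frameInterval;  // update every Nth frame; 0 means skip
    std::size_t boneCount;   // bones to update, most important first
};

inline UpdatePlan planUpdate(const CharacterView& c, std::size_t totalBones,
                             std::size_t coreBones) {
    // Gameplay asked for exact bone positions: always do a full update.
    if (c.forceUpdate) return UpdatePlan{1, totalBones};
    // Not visible and not needed: skip the skeleton entirely.
    if (!c.inFrustum) return UpdatePlan{0, 0};
    // Far away: fewer bones, and low-priority characters update less often.
    if (c.distanceToCamera > 50.0f) return UpdatePlan{c.highPriority ? 1u : 4u, coreBones};
    if (c.distanceToCamera > 20.0f) return UpdatePlan{c.highPriority ? 1u : 2u, coreBones};
    return UpdatePlan{1, totalBones};
}

inline bool shouldUpdateThisFrame(const UpdatePlan& plan, unsigned long frameIndex) {
    return plan.frameInterval != 0 && frameIndex % plan.frameInterval == 0;
}
```

Dirty flags would sit one level below this: once a character is scheduled for an update, each bone's local transform is only recomputed if its flag is set.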

2. Optimized Usage of Animation Systems

So far, we have discussed techniques for implementing an optimized animation system. As a user of an animation system, you have to trust it and assume that it is well optimized, implementing many of the techniques described above or even more. Knowing that, you can produce animations that are friendlier to an optimized animation system. I'm going to address some ways to do that here. This section is more relevant to animators and technical animators.

Do Not Move All Bones Always

As mentioned earlier, animation tracks can be optimized and their keyframes reduced easily. Knowing this, you can create animations that are better suited to this kind of optimization. Do not scale or move bones if it is not necessary. Do not transform bones that cannot be seen. For example, during a fast sword attack, not all of the facial bones can be seen, so you don't need to move them all.

In cutscenes where you have a predefined camera, you know which bones are in the camera frustum. So if the camera is zoomed in on the face of your character, you don't need to move the fingers or hands. This saves your own time and lets the system save a lot of memory by simplifying the animation tracks for those bones.

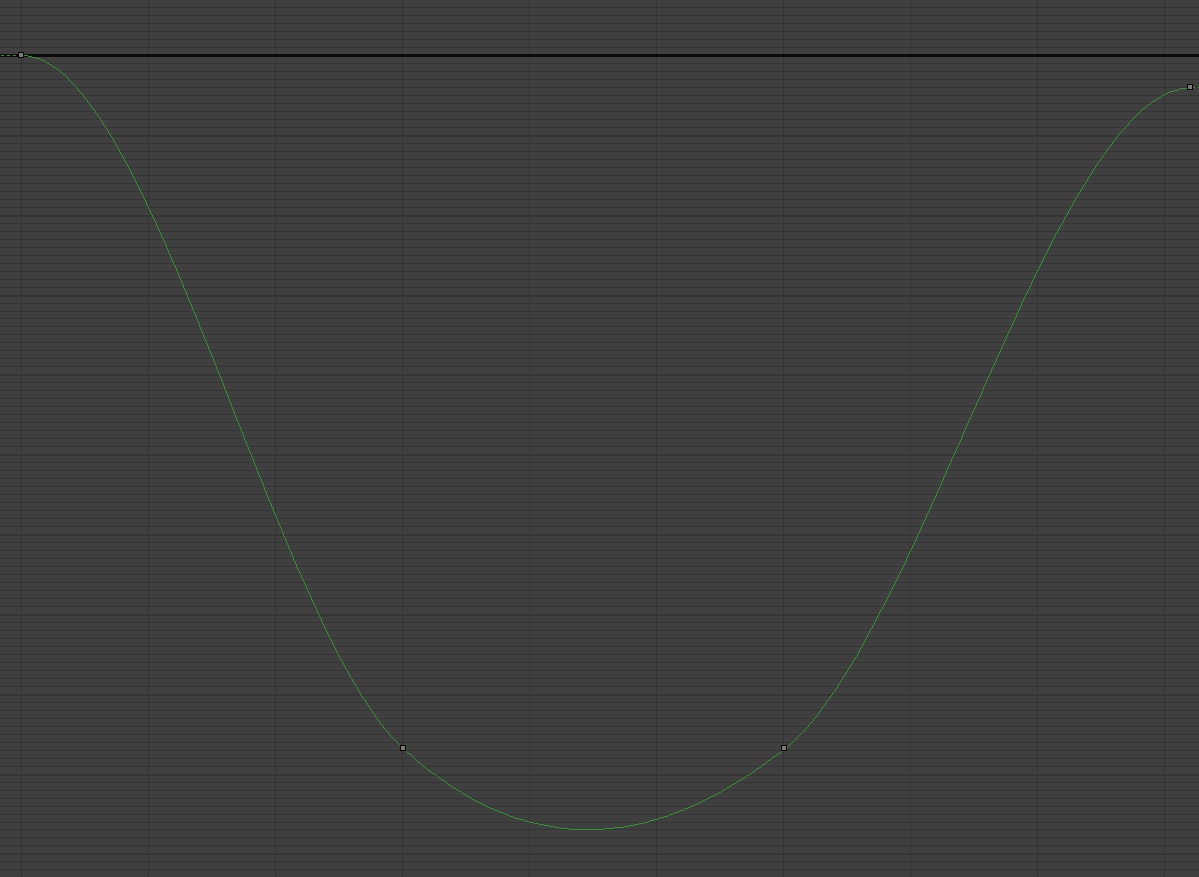

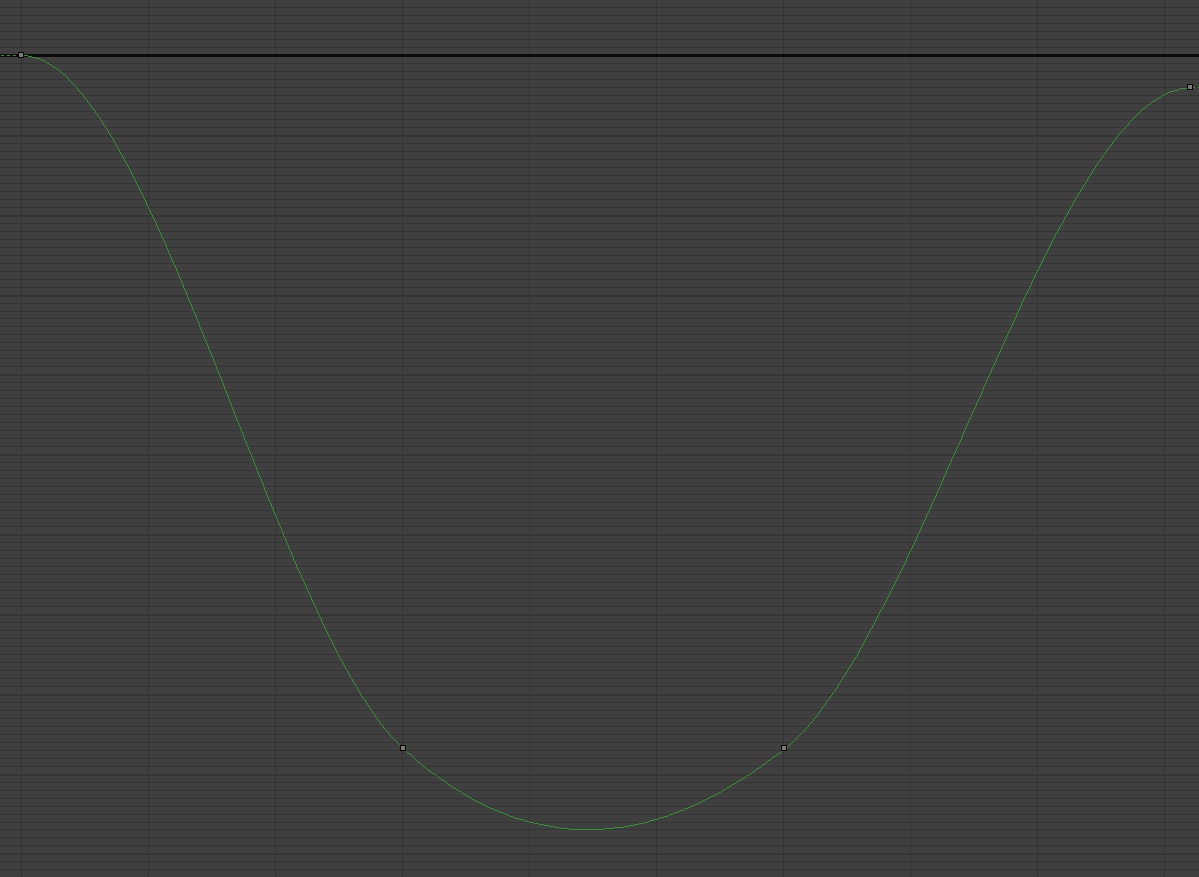

One other important thing is duplicating a keyframe into two consecutive keyframes. This occurs frequently in the blocking phase of animation. For example, you move the fingers at frame 1, move them again at frame 15, and then copy keyframe 15 to frame 30. Keyframes 15 and 30 are the same. But the default keyframe interpolation techniques are set up to make the animation curves smooth. This means that you might get an extra motion between frames 15 and 30. Figure 1 shows a curve that has been smoothed by keyframe interpolation.

Figure 1: A smoothed animation curve

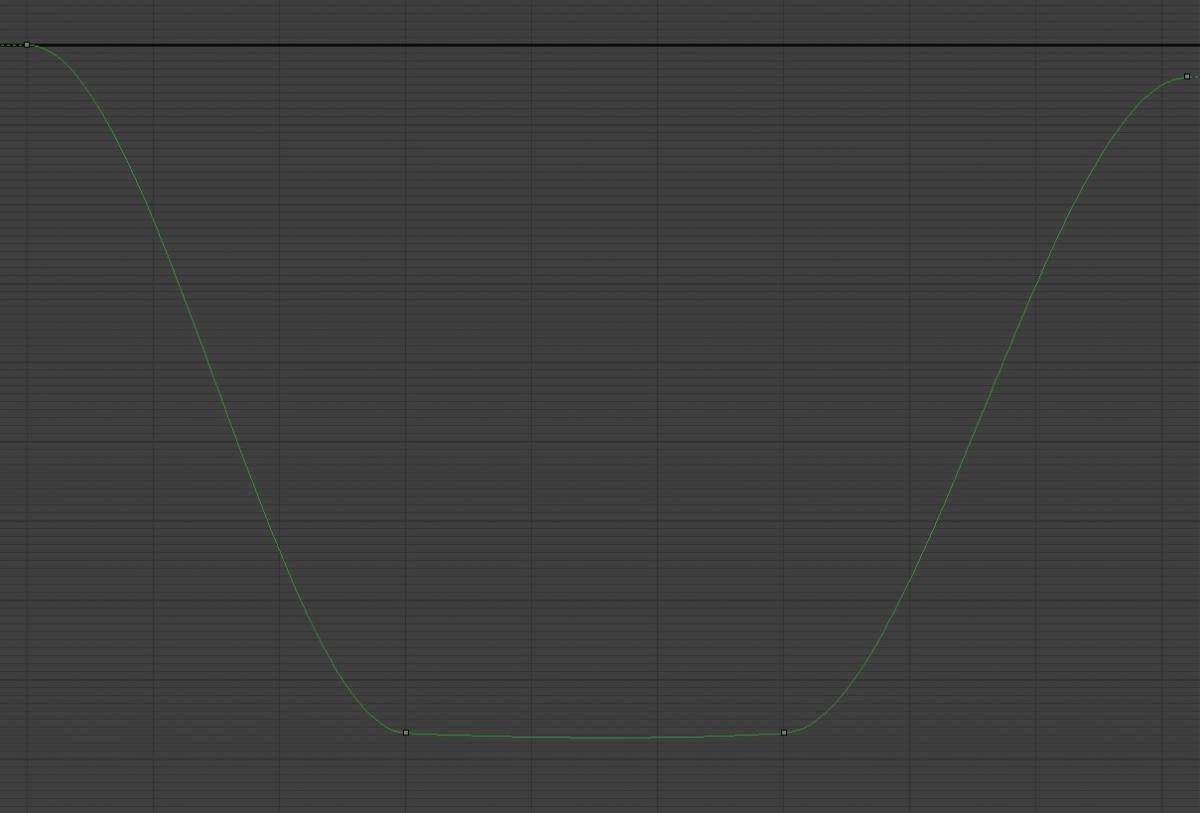

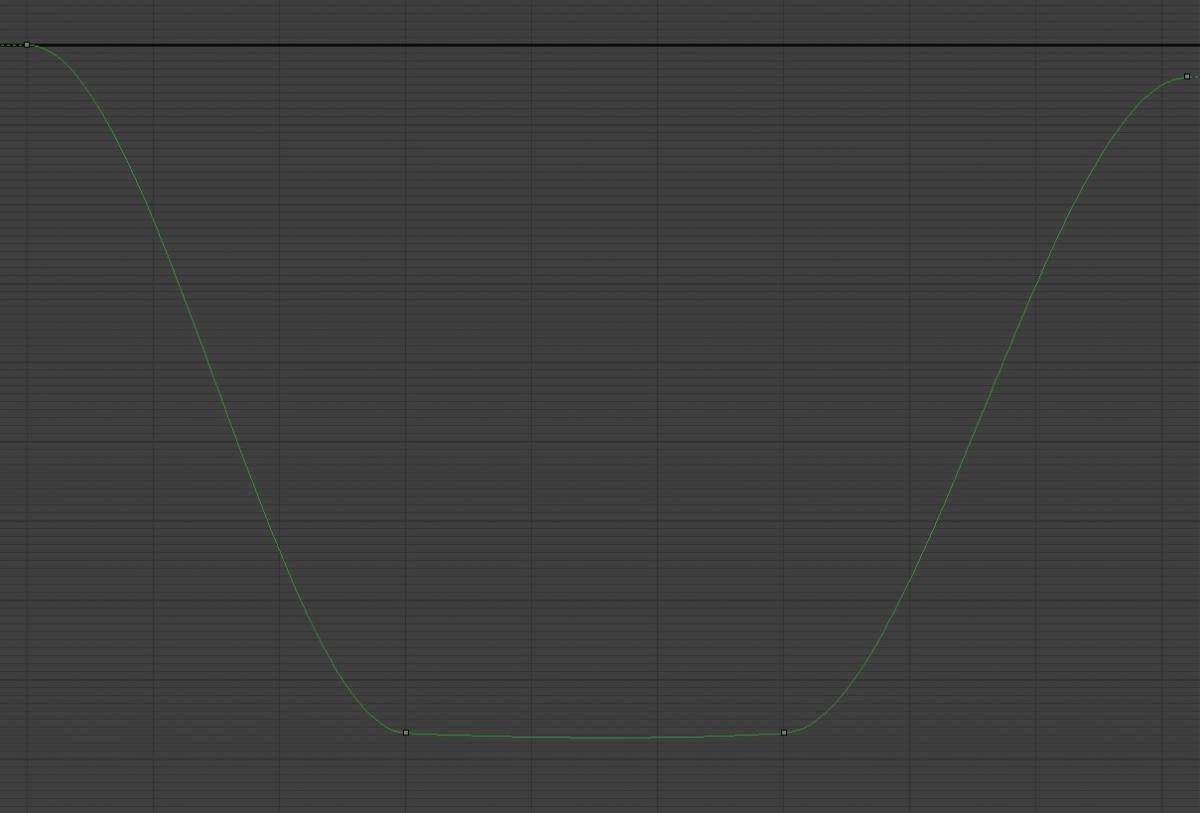

As you can see in Figure 1, keyframes 2 and 3 are the same, but there is an extra motion between them. You might need this smoothness for many bones, so leave it if you need it. But if you don't need it, make sure the segment between the two identical consecutive keyframes is linear, as shown in Figure 2. With this, the keyframe reduction algorithm can remove the keyframe samples between them.

Figure 2: Two linear consecutive keyframes

You should consider this case more carefully for finger bones, because a human skeleton can have up to 28 of them: they cover a small portion of the body but take a lot of memory and calculation. In the previous example, if you make the two similar consecutive keyframes linear, there is no visual artifact for the finger bones, and the keyframe reduction can collapse the 28 * (30 - 15 + 1) keyframe samples in that range down to the keys at its ends. Here 28 is the number of finger bones, and 30 and 15 are the frames in which keyframes were created by the animator. The sampling rate is one sample per frame in this example. So by setting two consecutive similar keyframes to linear for finger bones, you save a good deal of memory. The saving is not huge for one animation, but it becomes significant when your game has many skeletal animations and characters.
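To put a rough number on the 28 * (30 - 15 + 1) figure above, the short calculation below converts it to bytes. The storage size is my assumption, not something fixed by the example: each sample is taken to be one rotation stored as three floats.

```cpp
#include <cstddef>

// Samples per bone between two frames when sampling once per frame.
constexpr std::size_t samplesInRange(std::size_t firstFrame, std::size_t lastFrame) {
    return lastFrame - firstFrame + 1;
}

constexpr std::size_t kFingerBones = 28;
constexpr std::size_t kSamples = kFingerBones * samplesInRange(15, 30);  // 28 * 16

// Assumption: each rotation sample is a quaternion packed into 3 floats.
constexpr std::size_t kBytesPerSample = 3 * sizeof(float);
constexpr std::size_t kBytes = kSamples * kBytesPerSample;

static_assert(kSamples == 448, "28 bones * 16 samples per bone");
```

With 4-byte floats that is 448 * 12 = 5,376 bytes for a single 15-frame hold in a single clip, which is why the effect only becomes noticeable across many clips and characters.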

Using Additive and Partial Animations instead of Full Body Animation

There are different techniques for animation blending. Two of them that do well in both functionality and performance are additive and partial animation blending. These two blending schemes are usually used for asynchronous animation events. For example, you are running and decide to shoot: the lower body continues to run while the upper body blends to the shoot animation.

Using additive and partial animations can help you get by with fewer animations. Let me describe this with an example. Imagine you have a locomotion animation controller. It blends between 3 animations (walk, run and sprint) based on input speed. You want to add a spine lean to this locomotion, so that when your character is accelerating it leans forward for a period of time. One option is to make 3 full-body animations, walk_lean_fwd, run_lean_fwd and sprint_lean_fwd, which blend synchronously with walk, run and sprint respectively. You can then change the blend weight to achieve a lean forward animation. Now you have three more full-body animations with several frames each. This means more keyframes, more memory usage and more calculation. Your blend tree also gets more complicated and higher dimensional. Now imagine that you add 6 more animations to your locomotion system: two directional walks, two directional runs and two directional sprints. Each of them has to be blended with walk, run and sprint respectively. If we want leaning forward here as well, we have to add two directional walk_lean_fwd, two directional run_lean_fwd and two directional sprint_lean_fwd animations and blend them with the walk, run and sprint blend trees respectively. The blend tree becomes high dimensional and needs too many full-body animations and too much memory and calculation. It even becomes hard for the user to work with.

You can handle this situation more easily by using a lightweight additive animation. An additive animation is an animation that is added on top of the current animations. Usually it is the difference between two poses. So first your current animations are calculated, and then the additive transforms are multiplied onto the resulting transforms. Usually the additive animation is just a single-frame animation that does not need to affect all of the body parts. In our example, the additive animation can be a single-frame animation in which the spine bones are rotated forward, the head bone is rotated down and the arms are spread a little. You can add this animation to the current locomotion animations by manipulating its weight. You achieve the same result with just one single-frame, half-body additive animation, and there is no need to produce different full-body lean forward animations. So additive and partial animation blending can reduce your workload and help you achieve better performance very easily.
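For the programmers reading along, this is roughly what applying such an additive pose looks like for rotations. It is a sketch under several assumptions of mine: the delta is applied in the bone's local space (`base * delta`), the weight is scaled with a normalized LERP from the identity rotation, and a per-bone `mask` value of zero marks bones the additive does not touch, which is the partial part of the blend.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Quat { float x, y, z, w; };

// Hamilton product: the rotation b followed by a.
inline Quat mul(const Quat& a, const Quat& b) {
    return Quat{
        a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
        a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
        a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w,
        a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z};
}

// Scale a delta rotation by weight in [0, 1]: normalized LERP from identity.
inline Quat scaleDelta(Quat d, float weight) {
    if (d.w < 0.0f) d = Quat{-d.x, -d.y, -d.z, -d.w};  // stay on the short arc
    const Quat r{weight * d.x, weight * d.y, weight * d.z,
                 (1.0f - weight) + weight * d.w};
    const float invLen = 1.0f / std::sqrt(r.x * r.x + r.y * r.y + r.z * r.z + r.w * r.w);
    return Quat{r.x * invLen, r.y * invLen, r.z * invLen, r.w * invLen};
}

// Apply an additive pose on top of an already blended base pose.
// mask[i] is 0 for bones the additive does not touch (partial blending).
inline void applyAdditive(std::vector<Quat>& base, const std::vector<Quat>& delta,
                          const std::vector<float>& mask, float weight) {
    assert(delta.size() == base.size() && mask.size() == base.size());
    for (std::size_t i = 0; i < base.size(); ++i) {
        const float w = weight * mask[i];
        if (w <= 0.0f) continue;  // untouched bones cost nothing
        base[i] = mul(base[i], scaleDelta(delta[i], w));
    }
}
```

In the lean example, `delta` would hold the single-frame lean pose, `mask` would be non-zero only for the spine, head and arm bones, and `weight` would follow the character's acceleration.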

Using Motion Retargeting

A motion retargeting system promises to apply the same animation to different skeletons without visual artifacts. By using it, you can share your animations between different characters. For example, you make a walk for a specific character and use that animation for other characters as well. Motion retargeting saves memory by avoiding duplicated animations. But note that a motion retargeting system has its own computations. It is more than basic skeletal animation and needs many other techniques, such as scaling the positions and root bone translation, limiting the joints, mirroring animations and many other things. So you may save animation memory and animating time, but the system needs more computation. Usually this computation should not become a bottleneck in your game.
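To give a feel for one of those extra computations, here is a sketch of scaling root bone translation between two skeletons. Using the ratio of hip heights as the scale factor is an assumption for illustration; it is one simple heuristic and not a description of how Unity3D, Unreal Engine 4 or Havok implement retargeting. `retargetRootTranslation` is a hypothetical name.

```cpp
struct Vec3 { float x, y, z; };

// Scale root translation by the ratio of hip heights so that a taller
// or shorter character covers a proportional distance per step.
inline Vec3 retargetRootTranslation(const Vec3& sourceTranslation,
                                    float sourceHipHeight, float targetHipHeight) {
    // Guard against a degenerate source skeleton: fall back to no scaling.
    const float s = (sourceHipHeight > 0.0f) ? targetHipHeight / sourceHipHeight : 1.0f;
    return Vec3{sourceTranslation.x * s, sourceTranslation.y * s, sourceTranslation.z * s};
}
```

Rotations can usually be copied across skeletons with matching bone layouts, which is why translation scaling tends to be the first piece a retargeting system needs.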

Unity3D, Unreal Engine 4 and Havok animation all support motion retargeting. If you do not need to share animations between different skeletons, you don't need to use motion retargeting.

Conclusion

Optimization is always a serious part of video game development. Video games are soft real-time software, so they have to respond within a reasonable time. Animation is always an important part of a video game. It matters from different aspects like visuals, controls, storytelling, gameplay and more. Having lots of character animations in a game can improve it significantly, but the system has to be capable of handling that many character animations. This article tried to address some of the techniques that are important in optimizing skeletal animation. Some of the techniques are highly detailed and were only discussed generally here. The techniques were reviewed from two perspectives: first, that of developers who want to create skeletal animation systems, and second, that of the users of animation systems.

Article Update Log

13 Feb 2015: Initial release

21 Feb 2015: Rearranged some parts.

I have some issues with this article. Not that anything is wrong; it just seems a bit misleading in a couple of areas because it is incomplete. The issues start with the description of animations as channels. While correct, that is generally the view from the DCC tool and definitely not the best representation for a game engine. The problem with channels is that during update you have 1-2k worth of channel data, likely linear in memory, and for each channel you jump into the middle of that memory to read 2-4 pieces of data to compute a single quaternion or matrix, depending on what you are doing. Across 30+ channels per animation, the slowdown compounds quickly as you thrash the CPU cache. This issue becomes even more serious when you start distributing across multiple cores.

So, in the process of discussing animation optimization I would start by mentioning that no matter what you do there is going to be a balancing act between compression and performance. Channel based animation provides the best compression at the cost of pretty notable performance issues. Collapsing channels to full skeletal rig key frames costs compression since you can't adapt the individual curves to their needed details as easily, but performance is often nearly unbeatable.

Another item to consider is that very often, piecewise linear approximations work for animation compression just as well as most curve fitting solutions. They use more keys to get the same error rates, but the per-key data requirement is reduced, so the storage size generally comes out pretty similar. The final math, however, is greatly simplified, and as such performance is gained. Again, there is some balancing to be done here: really high quality, low error rate animation (cinematics, for instance) typically wants curves for C1 or C2 continuity reasons, and that is where linear starts failing to compress well.

Finally, when discussing curve compression, you can't look at it as a single-joint error rate. You need to take into account the full skeletal chain from the bone to any end points affected by the error. For example, you might be able to compress the channels on the shoulder exceptionally well, but then you start noticing the hand at the end of the chain under the shoulder jittering. You need to compute the error as the mean over all the chain end points in order to prevent hierarchical error accumulation from creating bad-looking animation.

Again though, the article is fine in general. I'd just really like to see some of these points mentioned, to make it clear that this is a fairly high-level overview of a single direction of optimization and not broad coverage of the subject.