ray picking help

The aim is to create a 3D tile editor. I want to use ray tracing to select tiles within a grid of 2D tiles, and to let the camera move around.

I've looked around on Google and here, but there are so many different ways of doing it, and I can't find a site that explains the basic principles without using APIs to do the legwork.

I don't know anything about ray tracing, so at the moment I just want to figure out how to get from image coordinates to camera space, so I can create the ray to test against the tile grid.

I'm hoping someone can point me to a good starting point. I will be using OpenGL, but I want the functions to be independent of the API for now, until I grasp the basics.

From what I've gathered, I convert the 2D mouse coordinates from image space to view space and then to world space to get the 3D mouse coordinates, but to be honest I'm not entirely sure how to do this. From there I need to calculate a direction for the ray (mouse_3dxyz - view_posxyz).

Thx a lot,

Fishy

It's better to work with the original projection coefficients than with the projection matrix (which is derived from those coefficients).

So, how do you create projection matrix?

Given the surface width and height (in pixels) and the Field Of View (FOV) angle, you get something in the shape of:

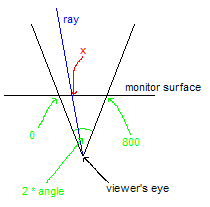

The above scheme shows the resulting projection setup from a top-down point of view (width == 800 here).

Your Z is your Near Plane Depth (NPD).

Your X needs to be rescaled from 0<=x<800 (in pixels) to -tx*NPD<=x<=tx*NPD, where tx is the tangent of the horizontal half-angle shown on the diagram (half of the full horizontal FOV).

Same for Y.

You only need to know the horizontal and vertical angles (actually, the tangents of those half-angles). APIs that create projection matrices usually take one of those angles (usually the Y FOV) as an input parameter and calculate the other one from the ratio of the viewport's width and height (which are also parameters).
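The rescaling can be written out explicitly, using the same conventions as the sample code (pixel origin at the top-left, pixel y growing downward while view-space y grows upward; W and H are the viewport size in pixels and θ_y is the full vertical FOV). The symbols are introduced here for illustration:

$$
\begin{aligned}
x_f &= \frac{2\,(x - W/2)}{W}, \qquad y_f = -\frac{2\,(y - H/2)}{H},\\
t_y &= \tan\frac{\theta_y}{2}, \qquad t_x = t_y \cdot \frac{W}{H},\\
\mathbf{p}_{\text{near}} &= \left(x_f\, t_x\, \mathrm{NPD},\; y_f\, t_y\, \mathrm{NPD},\; \mathrm{NPD}\right).
\end{aligned}
$$

For example, with W = 800, H = 600 and θ_y = 60°, t_y = tan 30° ≈ 0.577 and t_x ≈ 0.770, so the right edge of the screen (x_f close to 1) lands at about 0.770·NPD.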

Sample code (from my "engine"):


```cpp
void CProjectionController::SetData(float fovAngleY, int sizeX, int sizeY)
{
    // Consume.
    m_fFovAngleY = fovAngleY;   // full vertical FOV, in radians (tanf expects radians)
    m_nSizeX = sizeX;
    m_nSizeY = sizeY;
    // Recompute.
    m_fAspectXY = ((float)sizeX) / ((float)sizeY);
    m_fTanY = tanf(fovAngleY/2);
    m_fTanX = m_fTanY * m_fAspectXY;
}

// x,y in pixels
xvec CProjectionController::GetRayDir(int x, int y) const
{
    // Screen coordinates to [-1, 1).
    float xf = 2.0f * ((float)(x - (m_nSizeX/2))) / ((float)m_nSizeX);
    float yf = 2.0f * -((float)(y - (m_nSizeY/2))) / ((float)m_nSizeY);
    return GetRayDir(xf, yf);
}

// x,y in (-1,1)
xvec CProjectionController::GetRayDir(float xf, float yf) const
{
    // To view-space coordinates on the Near plane.
    float dx = xf * m_fNear * m_fTanX;
    float dy = yf * m_fNear * m_fTanY;
    return norm( xvec(dx, dy, m_fNear) );
}
```

Having the ray direction in View Space, you have to transform it to World Space by multiplying it by the inverse view matrix. The rotation part of the view matrix is orthonormal in MOST cases, so for a direction, transposing it should be sufficient. But that should belong to the camera class, IMO.

[Edited by - deffer on October 20, 2006 5:04:03 PM]
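The view-to-world step described above can be sketched as follows. This assumes a column-vector convention where the 3×3 rotation part R of the view matrix takes world-space directions to view space (v_view = R · v_world), and the +Z-forward convention of the sample code. For an orthonormal R the inverse is the transpose, so a view-space direction goes back to world space as Rᵀ · v_view; translation is ignored because a direction has no position. The Vec3/Mat3 types and MulTransposed are hypothetical, not the engine's xvec.

```cpp
#include <cassert>

// Minimal types for this sketch (hypothetical; not from the original engine).
struct Vec3 { float x, y, z; };

// 3x3 rotation part of a view matrix, row-major: m[row][col].
struct Mat3 { float m[3][3]; };

// Computes transpose(R) * v. For an orthonormal rotation R,
// transpose(R) == inverse(R), so this maps a view-space direction to world space.
Vec3 MulTransposed(const Mat3& R, const Vec3& v)
{
    return {
        R.m[0][0] * v.x + R.m[1][0] * v.y + R.m[2][0] * v.z,
        R.m[0][1] * v.x + R.m[1][1] * v.y + R.m[2][1] * v.z,
        R.m[0][2] * v.x + R.m[1][2] * v.y + R.m[2][2] * v.z
    };
}
```

For example, with R = [[0,0,-1],[0,1,0],[1,0,0]] (a camera looking along world +X in this +Z-forward convention), the view-space forward direction (0,0,1) comes back as the world direction (1,0,0).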

That makes perfect sense to me, except for these two lines.

Silly question, but I've never really played with tangents of angles, so what does this part give you, numerically?

m_fTanY = tanf(fovAngleY/2);

m_fTanX = m_fTanY * m_fAspectXY;

Then how does multiplying our normalised screen coords by the tangents of the camera's x,y angles give us a direction?

float dx = xf * m_fNear * m_fTanX;

float dy = yf * m_fNear * m_fTanY;

What if the near plane were 0.0?

I'm just not sure how those two lines above really work.

Thx again

Fishy


Quote:Original post by fishleg003

Silly question but ive never really played with tangent angles so what does this part get you number wise ?

m_fTanY = tanf(fovAngleY/2);

m_fTanX = m_fTanY * m_fAspectXY;

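Normally, you pass a Y FOV angle to a projection-matrix-constructing function. That's "fovAngleY" here. Usually it's about 60 degrees (pi/3). I need the tangent of half that angle to get the extent of the "monitor surface" at the Near Plane Depth: half of the surface height is NPD * tan(fovAngleY/2). Trigonometry. m_fTanX is the same thing for the width: the surface has the viewport's proportions, so it is just m_fTanY scaled by the aspect ratio.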
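A small numeric sketch of those two lines, assuming the typical 60° (π/3) vertical FOV and an 800×600 viewport; NearPlaneExtents and ComputeNearPlaneExtents are hypothetical names used only for illustration:

```cpp
#include <cassert>
#include <cmath>

// Half-extents of the "monitor surface" (near plane) at depth npd.
struct NearPlaneExtents { float halfWidth, halfHeight; };

// fovY is the FULL vertical field of view in radians; aspect = width / height.
NearPlaneExtents ComputeNearPlaneExtents(float fovY, float aspect, float npd)
{
    assert(npd > 0.0f);
    const float tanY = std::tan(fovY / 2.0f);  // opposite / adjacent for the half-angle
    const float tanX = tanY * aspect;          // same rectangle, stretched by the aspect ratio
    return { npd * tanX, npd * tanY };
}
```

With those inputs tanY ≈ 0.577 and tanX ≈ 0.770, so at NPD = 1 the monitor surface spans roughly ±0.770 horizontally and ±0.577 vertically; at NPD = 0.5 everything halves.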

Quote:Original post by fishleg003

Then how does multiplying our normalised screen coords by the tangents of camera's x,y angles get us a direction?

float dx = xf * m_fNear * m_fTanX;

float dy = yf * m_fNear * m_fTanY;

Those lines calculate the red "x" point from my picture. The viewer's eye is always at (0,0,0), and the monitor surface is always at NPD. (OK, this whole NPD thing isn't really important, since it cancels out once the vector is normalized, but it's handy to think of it that way. In reality you could use 1 instead of NPD here, and the calculations would become:

float dx = xf * m_fTanX;

float dy = yf * m_fTanY;

return norm( xvec(dx, dy, 1.0f) );

).
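A quick sketch of why NPD does not matter for the direction: every component of (dx, dy, NPD) is proportional to NPD, so normalizing removes it. Vec3, Normalize and RayDirAtDepth are hypothetical helpers, and any tangent values passed in are just sample inputs:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 Normalize(Vec3 v)
{
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return { v.x / len, v.y / len, v.z / len };
}

// Direction through (xf, yf) in [-1, 1], built on a plane at depth npd.
// Every component is proportional to npd, so npd cancels out in Normalize().
Vec3 RayDirAtDepth(float xf, float yf, float tanX, float tanY, float npd)
{
    return Normalize({ xf * npd * tanX, yf * npd * tanY, npd });
}
```

Calling it with npd = 0.5, 1 or 37 and the same xf, yf, tanX, tanY gives the same unit vector (up to rounding), which is why the simplified z = 1 version is equivalent.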

Quote:Original post by fishleg003

What if the near plane was 0.0 ?

1. That's really not important here, as I wrote above.

2. If NPD were 0, the projection matrix would be degenerate: it becomes singular (non-invertible) and maps every depth to the same value, so the depth buffer would be useless. IOW, it can't be 0.
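A small check of point 2, assuming the standard gluPerspective matrix, whose third and fourth rows give clip z = A·z_e + B and clip w = -z_e, with A = (far + near)/(near - far) and B = 2·far·near/(near - far). With near = 0, B vanishes and A = -1, so every depth lands on NDC z = 1 (and those two rows become identical, which is why the matrix is singular). NdcDepth is a hypothetical helper:

```cpp
#include <cassert>
#include <cmath>

// NDC depth produced by a gluPerspective-style projection for an eye-space
// point at depth ze (ze < 0, since OpenGL's camera looks down -Z).
double NdcDepth(double ze, double zNear, double zFar)
{
    const double A = (zFar + zNear) / (zNear - zFar);
    const double B = (2.0 * zFar * zNear) / (zNear - zFar);
    return (A * ze + B) / (-ze);  // clip z divided by clip w
}
```

For near = 0.5 and far = 100 the function returns -1 at z_e = -0.5 and 1 at z_e = -100, while for near = 0 it returns 1 for every z_e.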

OK, OK, let me see if I've got this right.

m_fTanY = tanf(fovAngleY/2);

m_fTanX = m_fTanY * m_fAspectXY;

So these two give me the maximum tangent range for the height and width of the near plane?

Once I've got my normalised image coords, I can map them onto the near plane using those two maximum ranges:

float dx = xf * m_fTanX;

float dy = yf * m_fTanY;

This last part I'm not too sure about: is this normalising our new coordinates, or is it returning the direction between the camera's eye position and our new mouse position on the near plane?

return norm( xvec(dx, dy, m_fNear) );

Thx again,

Fishy

[Edited by - fishleg003 on October 21, 2006 8:07:12 AM]


Quote:Original post by fishleg003

I'm not sure why we now normalise these new coordinates?

return norm( xvec(dx, dy, m_fNear) );

No magic here. I just wanted a _normalized_ direction vector, that's all. After all, the function name is "GetRayDir". Normalizing only changes the vector's length, not where it points.

Quote:Original post by fishleg003

Maybe I'm thinking about rays differently. I always gathered they would consist of a point in space on the camera plane and a direction pointing away from the camera to the point of interest.

That's correct, but we also know that each ray passes through the viewer's eye (0,0,0). So, having a point on the camera plane and the point (0,0,0), we can already calculate the ray direction (which is: planePoint - (0,0,0) == planePoint). No need for some other point in front of the camera plane.
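A minimal sketch of that idea, assuming hypothetical Vec3/Ray types: the origin is the eye at (0,0,0), the direction is the plane point itself, and normalizing it (as GetRayDir does) makes the ray parameter t equal to the distance from the eye:

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// A view-space ray: every pick ray starts at the viewer's eye (0,0,0).
struct Ray {
    Vec3 origin;
    Vec3 dir;  // unit length, so t in PointAt() is a distance along the ray

    Vec3 PointAt(float t) const
    {
        return { origin.x + t * dir.x, origin.y + t * dir.y, origin.z + t * dir.z };
    }
};

// dir = planePoint - (0,0,0) = planePoint, then normalized.
Ray MakeViewSpaceRay(Vec3 planePoint)
{
    const float len = std::sqrt(planePoint.x * planePoint.x +
                                planePoint.y * planePoint.y +
                                planePoint.z * planePoint.z);
    return { { 0.0f, 0.0f, 0.0f },
             { planePoint.x / len, planePoint.y / len, planePoint.z / len } };
}
```

For the plane point (3, 0, 4) the direction is (0.6, 0, 0.8), and PointAt(5) gives back (3, 0, 4).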

Thanks so much for explaining all this. It's quite a big leap for me, but I think I'm with you now :).

One last thing, though, about the very final step you mention, just so I can picture it in my head.

You multiply the ray direction by the inverse view matrix: is this so it takes into account the camera's rotation, position, etc.?

Then we use the current Cam_pos in world space as the start position of the ray, and the rotated, translated, etc. ray direction, to make our ray?

Thx again,

Fishy


Quote:Original post by fishleg003

You multiply the ray direction by the inverse view matrix: is this so it takes into account the camera's rotation, position, etc.?

Then we use the current Cam_pos in world space as the start position of the ray, and the rotated, translated, etc. ray direction, to make our ray?

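Only rotated ;) A direction has no position, so translation doesn't apply to it; the camera's position only enters as the ray's start point (Cam_pos).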

But yeah, as I already said:

Quote:Original post by deffer

Having ray direction in View Space - you have to transform it to World Space by multiplying by inverse view matrix (which is orthonormal in MOST cases, so transposition should be sufficient) - but that should belong to camera class, IMO.
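For a tile editor, the world-space ray starts at the camera position and uses the rotated direction. A hedged sketch of testing it against a flat tile grid, assuming the tiles lie in the world plane y = 0, are square with side tileSize, and tile (0,0) starts at the world origin (all hypothetical choices; PickTile and TileHit are illustrative names):

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

struct TileHit { bool hit; int tileX, tileZ; };

// origin: camera position in world space.
// dir:    world-space ray direction (view-space direction rotated only, no translation).
// Assumes the tile grid lies in the plane y = 0 with square tiles of side tileSize.
TileHit PickTile(Vec3 origin, Vec3 dir, float tileSize)
{
    if (std::fabs(dir.y) < 1e-6f)       // ray parallel to the grid
        return { false, 0, 0 };
    const float t = -origin.y / dir.y;  // solve origin.y + t * dir.y = 0
    if (t < 0.0f)                       // grid plane is behind the camera
        return { false, 0, 0 };
    const float hx = origin.x + t * dir.x;
    const float hz = origin.z + t * dir.z;
    return { true,
             static_cast<int>(std::floor(hx / tileSize)),
             static_cast<int>(std::floor(hz / tileSize)) };
}
```

The indices can come out negative or past the edge of the map, so the caller would bounds-check them against the grid's dimensions.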

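I know you said you don't want to use the API, but with the simple function gluUnProject you give the window coordinates (x, y and a depth value) as input and get the mouse's world position as output. Getting the ray after that is trivial: unproject the mouse position once at window depth 0 (near plane) and once at depth 1 (far plane), and the ray runs from the first point toward the second.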
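gluUnProject itself needs a GL context plus the modelview, projection and viewport arrays, so here is an API-free sketch of the same idea for the simple case of a gluPerspective projection and an identity modelview (UnprojectPerspective and PickDirection are hypothetical names). Window depth 0 and 1 correspond to NDC z = -1 and +1. Mouse y usually has to be flipped before this, since OpenGL window y points up while mouse y usually points down.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// Inverts a gluPerspective(fovY, aspect, zNear, zFar) projection for a point
// given in normalized device coordinates (each in [-1, 1]); modelview assumed identity.
Vec3 UnprojectPerspective(double ndcX, double ndcY, double ndcZ,
                          double fovYRadians, double aspect, double zNear, double zFar)
{
    const double tanY = std::tan(fovYRadians / 2.0);
    const double A = (zFar + zNear) / (zNear - zFar);
    const double B = (2.0 * zFar * zNear) / (zNear - zFar);
    const double ze = -B / (ndcZ + A);  // inverts ndcZ = (A*ze + B) / -ze
    const double w = -ze;               // clip-space w of that point
    return { ndcX * w * aspect * tanY, ndcY * w * tanY, ze };
}

// Pick direction: unproject at the near plane (ndcZ = -1) and far plane (ndcZ = +1).
Vec3 PickDirection(double ndcX, double ndcY,
                   double fovYRadians, double aspect, double zNear, double zFar)
{
    const Vec3 n = UnprojectPerspective(ndcX, ndcY, -1.0, fovYRadians, aspect, zNear, zFar);
    const Vec3 f = UnprojectPerspective(ndcX, ndcY,  1.0, fovYRadians, aspect, zNear, zFar);
    const Vec3 d = { f.x - n.x, f.y - n.y, f.z - n.z };
    const double len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    return { d.x / len, d.y / len, d.z / len };
}
```

The resulting direction is proportional to (x_f·tanX, y_f·tanY, -1), i.e. the same tangent construction as earlier in the thread, except that z is -1 because OpenGL's eye space looks down -Z.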


Quote:Original post by fishleg003

gluPerspective(60, aspect, 0.5, 100);

Here is how I work out the direction vector from what you described:

```cpp
float m_fAspectXY = (800.0f / 600.0f);
float m_fTanY = tanf(60.0f);
float m_fTanX = m_fTanY * m_fAspectXY;

// Window Coords
MP.Reset(2.0f * ((float)(x - (800/2))) / ((float)800),
         2.0f * -((float)(y - (600/2))) / ((float)600),
         1);

// Window Normalised Coords
MP.m_x *= 1 * m_fTanX;
MP.m_y *= 1 * m_fTanY;
MP.m_z = 1;
MP = MP.Unit_Vec();
MP = MP * Inverse;
```

First of all: No good deed goes unpunished, part 2

But if we're still here:

1. gluPerspective(60, aspect, 0.5, 100);

I don't know the parameters of this particular API function. I can only assume that 60 is the FOV angle.

But whether it's the X angle or the Y angle is completely unknown to me.

And why does this stupid API want you to pass the angle in degrees (as opposed to radians)? // flamebait[grin]

2. float m_fTanY = tanf(60.0f);

(I hope you didn't really write "float" here, as "m_fTanY" is a member variable...)

Your 60deg is 2*angle on my diagram. So you should be using 30deg instead (as in: 30 up, 30 down).

You should be using angles in radians, not in degrees. (30deg == pi/6)

3. Try debugging the intermediate values in your program, to see immediately whether they are correct. For example:

- the (-1,1)x(-1,1) screen coordinates

- the MP vector, just after it gets normalized (still in camera space).
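Putting points 1 to 3 together, a hedged sketch of the snippet above with the angle fixed. For reference, gluPerspective's first parameter (fovy) is the full vertical field of view in degrees, so it has to be halved and converted to radians before calling a tangent function. One further assumption worth checking: OpenGL's eye space looks down -Z, so with gluPerspective the near-plane point should use z = -1 rather than +1 (the engine sample earlier in the thread uses a +Z-forward convention). The final multiply by the inverse view matrix should also apply rotation only, since this is a direction. PickDirViewSpace and Vec3 are hypothetical names:

```cpp
#include <cassert>
#include <cmath>
#include <cstdio>

struct Vec3 { float x, y, z; };

// View-space pick direction for a gluPerspective(fovYDegrees, width/height, ...) setup.
// Assumes fovYDegrees is the FULL vertical FOV in degrees and the camera looks down -Z.
Vec3 PickDirViewSpace(int x, int y, int width, int height, float fovYDegrees)
{
    const float kPi = 3.14159265f;
    const float halfFovY = fovYDegrees * kPi / 180.0f / 2.0f;  // 60 deg -> pi/6 rad
    const float tanY = std::tan(halfFovY);
    const float tanX = tanY * (float)width / (float)height;

    // Pixels -> [-1, 1), with y flipped because pixel y grows downward.
    const float xf = 2.0f * (float)(x - width / 2) / (float)width;
    const float yf = -2.0f * (float)(y - height / 2) / (float)height;

    Vec3 d = { xf * tanX, yf * tanY, -1.0f };
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    d = { d.x / len, d.y / len, d.z / len };

    // Print the intermediates so they can be checked by eye.
    std::printf("ndc = (%f, %f)  dir = (%f, %f, %f)\n", xf, yf, d.x, d.y, d.z);
    return d;
}
```

For an 800×600 window, the centre pixel gives (0, 0, -1) and the top-centre pixel gives a direction 30° above the view axis, about (0, 0.5, -0.866), which is a quick sanity check for the printed values.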

HTH.

~def

