Printf-style debugging or PIX is a good start. If you're passing those values through to the pixel shader (PS), PIX's "pixel history" feature should give you some data to work with; alternatively, you could just output them from the PS as colour channels.

If you change

color.rgb *= tex2D(ScreenMapSampler, input.screenPos).rgb;

to be

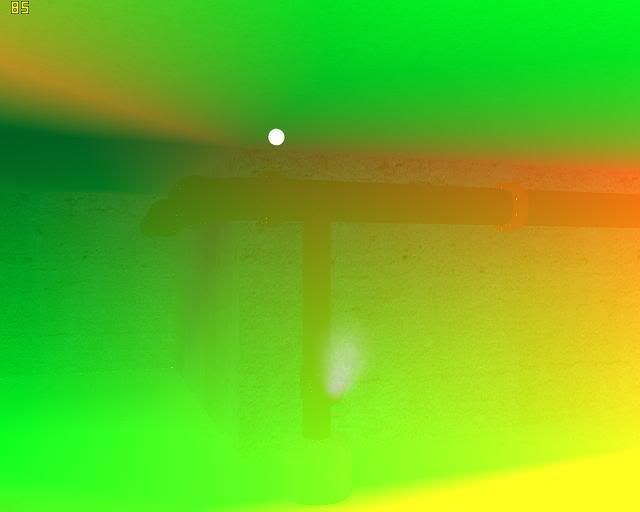

color.rgb = float3( input.screenPos.x, input.screenPos.y, 0.0f );

You should end up with black in the top-left, red in the top-right, green in the bottom-left and yellow in the bottom-right.

Quote:
output.screenPos.x = output.pos.x / output.pos.w / 2.0f + 0.5f;

output.screenPos.y = -output.pos.y / output.pos.w / 2.0f + 0.5f;

Off the top of my head:

output.screenPos.x = 0.5f * (output.pos.x + 1.0f);

output.screenPos.y = 1.0f - (0.5f * (output.pos.y + 1.0f));

I'm pretty sure you don't need to divide by W for this part, as long as W is 1 (e.g. for a full-screen quad whose vertices are already in clip space)...

hth

Jack