Light pre-pass HDR

Hey guys,

Here are some screenshots comparing RGB and LUV accumulation.

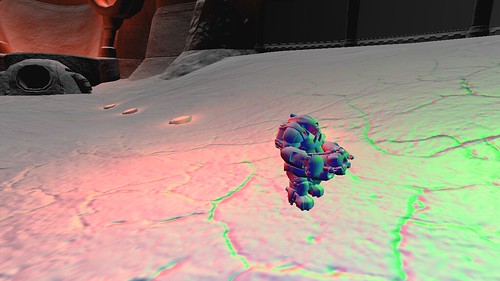

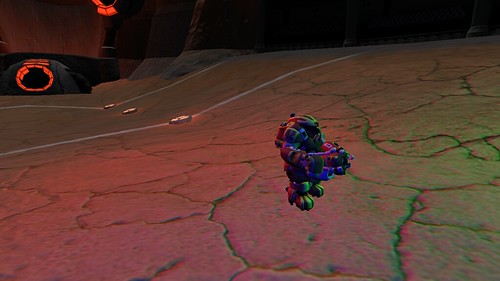

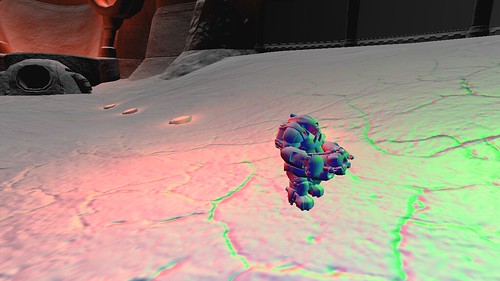

a) RGB Light Accumulation Buffer

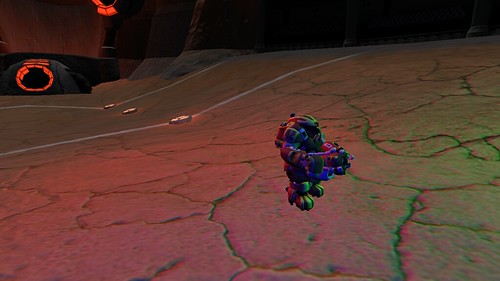

b) RGB Light Accumulation Result

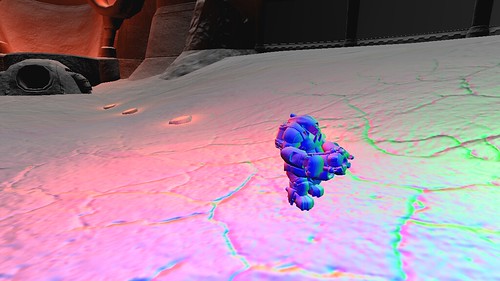

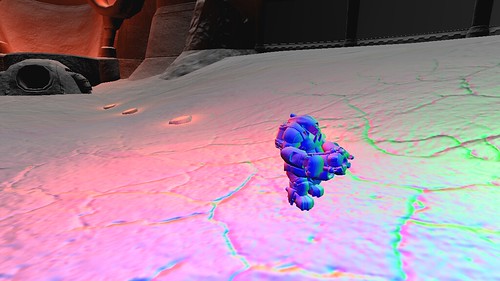

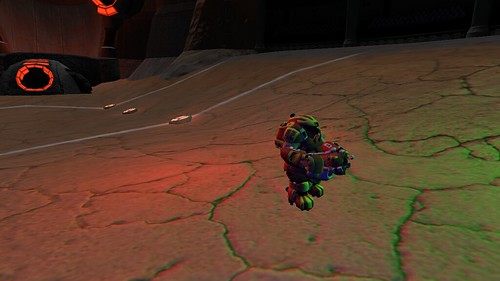

c) LUV Light Accumulation Buffer

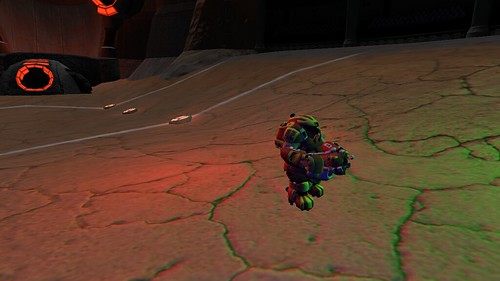

d) LUV Light Accumulation Result

As Wolf notes, using LUV accumulation means you can't benefit from free alpha blending during the light pass. My article describes some optimizations that help to alleviate the cost. Because it is based on LUV, which tries to model human perception of light, the luminance values for blue are significantly lower than in RGB. I don't think that's a downside, but it's something to be aware of.

I like this method because it preserves color values no matter how many lights are applied to an area. RGB always saturates at some point, and scaling RGB values alters colors, not just their brightness.

Here are some screenshots comparing RGB and LUV accumulation.

a) RGB Light Accumulation Buffer

b) RGB Light Accumulation Result

c) LUV Light Accumulation Buffer

d) LUV Light Accumulation Result

As Wolf notes, using LUV accumulation means you can't benefit from free alpha blending during the light pass. My article describes some optimizations that help to alleviate the cost. Because it is based on LUV, which tries to model human perception of light, the luminance values for blue are significantly lower than in RGB. I don't think that's a downside, but it's something to be aware of.

I like this method because it preserves color values no matter how many lights are applied to an area. RGB always saturates at some point, and scaling RGB values alters colors, not just their brightness.

Cool beans!

Thanks for showing actual shots.

EDIT: Not technically part of my original question, but what coordinate space do you guys recommend for the normal buffer? For purposes of support for multiple platforms, I can only use RGBA8 or RGB10_A2 buffers. I've been considering clip-space, since I can easily recover clip-space position from the depth buffer. Any thoughts?

Thanks for showing actual shots.

EDIT: Not technically part of my original question, but what coordinate space do you guys recommend for the normal buffer? For purposes of support for multiple platforms, I can only use RGBA8 or RGB10_A2 buffers. I've been considering clip-space, since I can easily recover clip-space position from the depth buffer. Any thoughts?

I used view space (is this clip space?) normals. Encoding-wise, my buffer is RGBA8888, where R = Normal.x, G = Normal.Y, the high bit of B = Sign(Normal.Z), and the rest of B combined with A are 15 bits of depth. For the scenes in my game, 15 bits is perfectly fine for depth information.

Normally, people simply reconstruct normals so that Z is always pointing towards the camera, but because normal mapping can modify normals, sometimes Z could be pointing away, which is why I spent a bit on the sign for the Z component.

The code to pack/unpack this format is as follows:

Normally, people simply reconstruct normals so that Z is always pointing towards the camera, but because normal mapping can modify normals, sometimes Z could be pointing away, which is why I spent a bit on the sign for the Z component.

The code to pack/unpack this format is as follows:

float4 PackDepthNormal(float Z, float3 normal){ float4 output; // High depth (currently in the 0..127 range Z = saturate(Z); output.z = floor(Z*63); // Low depth 0..1 output.w = frac(Z*63); // Normal (xy) output.xy = normal.xy*.5+.5; // Encode sign of 0 in upper portion of high Z if(normal.z < 0) output.z += 64; // Convert to 0..1 output.z /= 255; return output;}void UnpackDepthNormal(float4 input, out float Z, out float3 normal){ // Read in the normal xy normal.xy = input.xy*2-1; // Compute the (unsigned) z normal normal.z = 1.0 - sqrt(dot(normal.xy, normal.xy)); float hiDepth = input.z*255; // Check the sign of the z normal component if(hiDepth >= 64) { normal.z = -normal.z; hiDepth -= 64; } Z = (hiDepth + input.w)/63.0;;}

Drilian,

I started off with that encoding, but I switched to spherical co-ordinates.

I am storing: { Normal.Theta, Normal.Phi, DepthHi, DepthLo }

Having that extra bit for depth can make all the difference.

atan2 (and sincos for the g-buffer read) can be encoded into a texture lookup for lower-end cards. I do dev on an x1300 (horrible) and a 8800GT. The x1300 benefits from this optimization, the 8800 does not. Since you are in view-space, you should be able to roll in range-reduction into the trig-lookup textures if you chose to go that route.

I wrote a blog entry about this, initially looking for help. I found my bug and the solution is in the comments.

http://www.garagegames.com/index.php?sec=mg&mod=resource&page=view&qid=15340

Here is some shader code for encoding/decoding spherical:

[Edited by - patw on November 14, 2008 2:45:52 PM]

I started off with that encoding, but I switched to spherical co-ordinates.

I am storing: { Normal.Theta, Normal.Phi, DepthHi, DepthLo }

Having that extra bit for depth can make all the difference.

atan2 (and sincos for the g-buffer read) can be encoded into a texture lookup for lower-end cards. I do dev on an x1300 (horrible) and a 8800GT. The x1300 benefits from this optimization, the 8800 does not. Since you are in view-space, you should be able to roll in range-reduction into the trig-lookup textures if you chose to go that route.

I wrote a blog entry about this, initially looking for help. I found my bug and the solution is in the comments.

http://www.garagegames.com/index.php?sec=mg&mod=resource&page=view&qid=15340

Here is some shader code for encoding/decoding spherical:

inline float2 cartesianToSpGPU( in float3 normalizedVec ){ float atanYX = atan2( normalizedVec.y, normalizedVec.x ); float2 ret = float2( atanYX / PI, normalizedVec.z ); return POS_NEG_ENCODE( ret );}inline float2 cartesianToSpGPU( in float3 normalizedVec, in sampler2D atan2Sampler ){#ifdef NO_TRIG_LOOKUPS return cartesianToSpGPU( normalizedVec );#else float atanYXOut = tex2D( atan2Sampler, floor( POS_NEG_ENCODE(normalizedVec.xy ) * 255.0 ) / 255.0 ).a; float2 ret = float2( atanYXOut, POS_NEG_ENCODE( normalizedVec.z ) ); return ret;#endif}inline float3 spGPUToCartesian( in float2 spGPUAngles ){ float2 expSpGPUAngles = POS_NEG_DECODE( spGPUAngles ); float2 scTheta; sincos( expSpGPUAngles.x * PI, scTheta.x, scTheta.y ); float2 scPhi = float2( sqrt( 1.0 - expSpGPUAngles.y * expSpGPUAngles.y ), expSpGPUAngles.y ); // Renormalization not needed return float3( scTheta.y * scPhi.x, scTheta.x * scPhi.x, scPhi.y );}inline float3 spGPUToCartesian( in float2 spGPUAngles, in sampler1D sinCosSampler ){#ifdef NO_TRIG_LOOKUPS return spGPUToCartesian( spGPUAngles );#else float2 scTheta = POS_NEG_DECODE( tex1D( sinCosSampler, spGPUAngles.x ) ); float2 expSpGPUAngles = POS_NEG_DECODE( spGPUAngles ); float2 scPhi = float2( sqrt( 1.0 - expSpGPUAngles.y * expSpGPUAngles.y ), expSpGPUAngles.y ); // Renormalization not needed return float3( scTheta.y * scPhi.x, scTheta.x * scPhi.x, scPhi.y );#endif}[Edited by - patw on November 14, 2008 2:45:52 PM]

Quick question, back on topic, if I were to go with an RGBA16F target, would the luma extraction trick work for values above the range (0, 1)? If not, then that pretty much decides the matter for me.

EDIT:

Another possible normal encoding scheme I've been considering that would be low on the storage, but high on the math would be the one outlined in these slides (pg. 40-51):

http://developer.nvidia.com/object/nvision08-DemoTeam.html

Has anyone here ever implemented this style of bump-mapping, who can comment on it's performance and drawbacks? Would it only be viable for high-end cards, or could it also run efficiently on early SM3.0 cards? Any comments in general, even from those who haven't implemented it?

[Edited by - n00body on November 18, 2008 11:52:29 PM]

EDIT:

Another possible normal encoding scheme I've been considering that would be low on the storage, but high on the math would be the one outlined in these slides (pg. 40-51):

http://developer.nvidia.com/object/nvision08-DemoTeam.html

Has anyone here ever implemented this style of bump-mapping, who can comment on it's performance and drawbacks? Would it only be viable for high-end cards, or could it also run efficiently on early SM3.0 cards? Any comments in general, even from those who haven't implemented it?

[Edited by - n00body on November 18, 2008 11:52:29 PM]

I went through the slides but I did not see how they compress the normals .. I probably just missed it. Can you outline how they do this?

n00body:

That trick is basically the sRGB->XYZ matrix row for the 'Y' component of XYZ color. If I remember, the sRGB->XYZ transform is only valid if all components of the RGB color are in the range [0..1], so I do not believe that the result is "correct", however it may be "correct enough".

That trick is basically the sRGB->XYZ matrix row for the 'Y' component of XYZ color. If I remember, the sRGB->XYZ transform is only valid if all components of the RGB color are in the range [0..1], so I do not believe that the result is "correct", however it may be "correct enough".

I should have clarified, I meant that if he used an R16G16B16A16F target with HDR values, I am not sure if that specular trick would work, since the conversion from RGB->XYZ relies on RGB values being in the range [0..1].

This topic is closed to new replies.

Advertisement

Popular Topics

Advertisement