
I started trying out a bokeh idea last night inspired by Lost Planet. The approach I came up with is really fast compared to non-fake bokeh effects, but I'm facing some stability/flickering problems -- if anyone's got any hints/advice/ideas of their own, I'm all ears ;)

I'm not actually doing any DOF blurring at the moment; I'm just trying to isolate bright pixels and apply a fake out-of-focus bokeh effect to those bright pixels only (i.e. the flaring in the shape of the camera's aperture). I should be able to add a traditional blurred-DOF effect on top of this later.

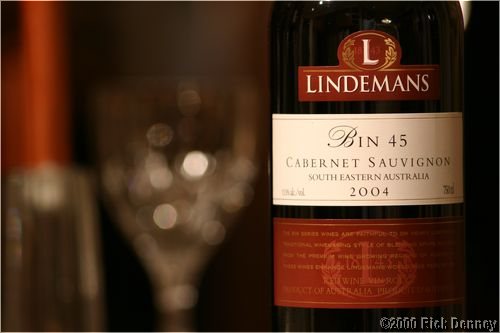

In other words, I'm not doing the blur demonstrated by the picture above; I'm just trying to capture what happens to the specular highlights on the glass when it goes out of focus.

Here's my recipe:

Short version:

1) Find bright pixels.

2) Draw quads over them textured with hexagons/circles (multiplied with the bright pixel's colour).

Long version:

1) Render the scene.

2) Downsize the frame-buffer by a factor of 16 in each dimension, so each down-sized pixel covers 256 (16x16) original pixels.

2.1) Each pixel in the down-sized buffer samples an 8x8 grid, offset by half a pixel so that, with bilinear filtering, each sample is the average of 2x2 original pixels (8x8x4 == 256).

2.2) These 64 samples are compared to find the brightest one.

2.3) The brightest sample's RGB and its x/y sub-offset are written to the down-sized buffer (x and y are each in the range 0-15, i.e. 4 bits each, so together they're packed into the 8-bit alpha channel).

2.4) A 'threshold' test is applied (see the recipe below) to decide whether we actually want to apply fake bokeh to this bright pixel.

3) For each down-sized pixel that passes the threshold, draw a quad at that pixel's center position, offset by its unpacked x/y offset value.

3.1) The quad is textured with a "bokeh texture" - e.g. a circle/hexagon/octagon, depending on your "camera".

3.2) The quad can be scaled by the luminosity of the sample, or by depth, so that out-of-focus points create bigger bokeh circles.

3.3) The "bokeh texture" is modulated by the sample's RGB value and blended with the frame-buffer.
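To make the arithmetic in steps 2 and 3 concrete, here's a CPU-side Python reference sketch. It is not the shader code, and several details are assumptions made only for this sketch: the Rec. 709 luminance weights, storing the centre of the winning 2x2 footprint as the sub-offset, measuring offsets from the block's top-left corner (equivalent to the block centre plus `offset - 8`), and the hypothetical `quad_half_size` rule with its `base` parameter. The image is a list of rows of `(r, g, b)` floats, and the bilinear fetch is emulated by averaging the four pixels directly.

```python
# CPU reference sketch of steps 2 and 3 (the real thing runs in shaders).
# Assumptions: Rec. 709 luma weights, offsets measured from the block's
# top-left corner, and the hypothetical quad_half_size() sizing rule.

BLOCK = 16  # each down-sized pixel covers a 16x16 block of original pixels


def luminance(rgb):
    # Assumed Rec. 709 weights; the recipe doesn't specify a formula.
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def bilinear_2x2(image, x, y):
    # A bilinear fetch placed exactly on the corner shared by pixels
    # (x, y), (x+1, y), (x, y+1) and (x+1, y+1) returns their plain average.
    pixels = [image[y][x], image[y][x + 1], image[y + 1][x], image[y + 1][x + 1]]
    return tuple(sum(p[c] for p in pixels) / 4.0 for c in range(3))


def downsample_block(image, bx, by):
    """Steps 2.1-2.3 for the block at down-sized coordinates (bx, by)."""
    best_rgb, best_lum, best_xy = None, -1.0, (0, 0)
    for sy in range(8):
        for sx in range(8):
            ox, oy = 2 * sx, 2 * sy  # footprint covers ox..ox+1, oy..oy+1
            rgb = bilinear_2x2(image, bx * BLOCK + ox, by * BLOCK + oy)
            lum = luminance(rgb)
            if lum > best_lum:
                # Store the centre of the 2x2 footprint (1..15, fits in 4 bits).
                best_rgb, best_lum, best_xy = rgb, lum, (ox + 1, oy + 1)
    x, y = best_xy
    alpha = ((x << 4) | y) / 255.0  # two nibbles in one normalized 8-bit value
    return best_rgb, alpha


def unpack_offset(alpha):
    # Inverse of the packing above: back to 0..255, then split the nibbles.
    a = int(round(alpha * 255.0))
    return a >> 4, a & 0xF


def quad_center(bx, by, alpha):
    """Step 3: quad centre in original-pixel coordinates."""
    x, y = unpack_offset(alpha)
    return bx * BLOCK + x, by * BLOCK + y


def quad_half_size(lum, base=8.0):
    # Hypothetical step 3.2 rule: half-size grows linearly with luminosity.
    return base * lum


def splat(dst_rgb, texel, sample_rgb):
    # Step 3.3: modulate the bokeh texel by the sample colour and add it
    # to the frame-buffer colour (additive blending).
    return tuple(d + texel * s for d, s in zip(dst_rgb, sample_rgb))
```

For example, in a 32x16 black image with a 2x2 white highlight covering pixels x=20..21, y=6..7, `downsample_block(img, 1, 0)` picks the sample at block offset (4, 6) and stores (5, 7), so `quad_center(1, 0, alpha)` returns (21, 7), the centre of the highlight. Depth-based sizing would replace `quad_half_size` with whatever circle-of-confusion rule you prefer.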

My "threshold" is currently:

1) The entire 16x16 block isn't bright (just a small bright point is present): the brightest sample in the block is at least a certain luminosity distance above either the dimmest sample or the average (I'm not sure which to use yet).

2) The brightest is actually quite bright.

or in GLSL:

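```glsl
// Keep the brightest sample only if the block has strong contrast
// (brightest minus dimmest luminance of at least 0.75)...
color.rgb = colorMax.rgb * step( 0.75, lumMax - lumMin );
// ...and only if the brightest sample is itself very bright (luminance of at least 0.95).
color.rgb = color.rgb * step( 0.95, lumMax );
```

Here are some programmer-art pictures, using additively blended pentagons with a white border so you can easily see the quads: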
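To show which blocks survive that test, here's a small Python mirror of the two GLSL lines. `step` reproduces GLSL's built-in `step(edge, x)` (0.0 when `x < edge`, otherwise 1.0); the function name `threshold` and the sample inputs are made up for illustration.

```python
def step(edge, x):
    # Same semantics as GLSL step(): 0.0 when x < edge, otherwise 1.0.
    return 0.0 if x < edge else 1.0


def threshold(color_max, lum_max, lum_min):
    """Python mirror of the two GLSL threshold lines (hypothetical helper)."""
    # Contrast test: the brightest must stand well above the dimmest.
    color = tuple(c * step(0.75, lum_max - lum_min) for c in color_max)
    # Brightness test: the brightest must itself be very bright.
    return tuple(c * step(0.95, lum_max) for c in color)


# Small bright point in a dark block: kept.
print(threshold((1.0, 0.9, 0.8), 1.0, 0.0))  # (1.0, 0.9, 0.8)
# Uniformly bright block (e.g. a white wall): rejected by the contrast test.
print(threshold((1.0, 1.0, 1.0), 1.0, 0.9))  # (0.0, 0.0, 0.0)
# High contrast, but the peak isn't bright enough: rejected.
print(threshold((0.9, 0.9, 0.9), 0.9, 0.0))  # (0.0, 0.0, 0.0)
```

Passing the block's average luminance instead of `lum_min` tests the other variant mentioned in the threshold recipe.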

My problem is that it's too unstable - you can get good results in still pictures, but when the camera moves, different pixels are chosen to 'flare up' each frame, resulting in horrible flickering of these "bokeh sprites". You can see above that as I rotate the camera, the guy's white collar goes from getting 3 flares, to none, and then back to 2.
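One mechanism in the recipe that can produce this kind of flicker is the fixed 2x2 footprint from step 2.1: because the samples sit on even pixel offsets, the same small highlight averages to a different value depending on how it lines up with the sample grid. The sketch below works on luminance only, uses a hypothetical 2x2 highlight of luminance 1.0 on black, and applies the 0.95 brightness test from the GLSL above. It illustrates the alignment effect; it is not a diagnosis of the scene in the pictures.

```python
def max_2x2_average(lum, x0, y0):
    """Brightest 2x2-averaged sample in the 16x16 block at (x0, y0).

    Samples sit on even pixel offsets, as in step 2.1, so each one averages
    a fixed, non-overlapping 2x2 footprint.
    """
    best = 0.0
    for y in range(y0, y0 + 16, 2):
        for x in range(x0, x0 + 16, 2):
            avg = (lum[y][x] + lum[y][x + 1] + lum[y + 1][x] + lum[y + 1][x + 1]) / 4.0
            best = max(best, avg)
    return best


def highlight_image(px, py, size=16):
    """Black block with a hypothetical 2x2 highlight of luminance 1.0 at (px, py)."""
    lum = [[0.0] * size for _ in range(size)]
    for y in (py, py + 1):
        for x in (px, px + 1):
            lum[y][x] = 1.0
    return lum


# Move the highlight one pixel to the right, as a small camera motion would.
for px in (6, 7):
    lum_max = max_2x2_average(highlight_image(px, 6), 0, 0)
    print(px, lum_max, lum_max >= 0.95)
# 6 1.0 True
# 7 0.5 False
```

The dimmest sample stays at 0.0 in both cases, so the contrast test keeps passing; it's the brightness test that flips when the highlight straddles two footprints (and a diagonal one-pixel shift drops the peak to 0.25).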

[Edited by - Hodgman on July 18, 2010 8:41:46 AM]