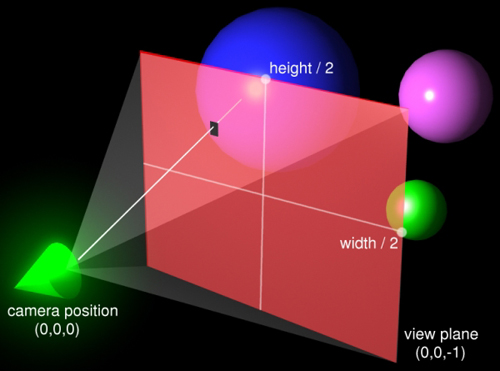

I am using the following code to generate rays from the camera center to each pixel on its orthogonally oriented screen:

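```hlsl
Ray.Origin = CamPos;
// Horizontal offset of the pixel centre from the screen centre, along the camera's right axis
float3 XTerm = PixelWidth * (-ResWidth/2 + PixelCoord.x + 0.5) * normalize( RightVector );
// Vertical offset (pixel y grows downward, so it is subtracted), along the camera's up axis
float3 YTerm = PixelHeight * (ResHeight/2 - PixelCoord.y - 0.5) * normalize( UpVector );
// Distance from the camera centre to the screen, along the look axis
float3 ZTerm = normalize( LookVector ) * CamLength;
Ray.Direction = normalize(ZTerm + YTerm + XTerm);
return Ray;
```

This works well when the camera is aligned with the world axes (LookVector(0,0,1), UpVector(0,1,0), RightVector(1,0,0)) and produces the following picture: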

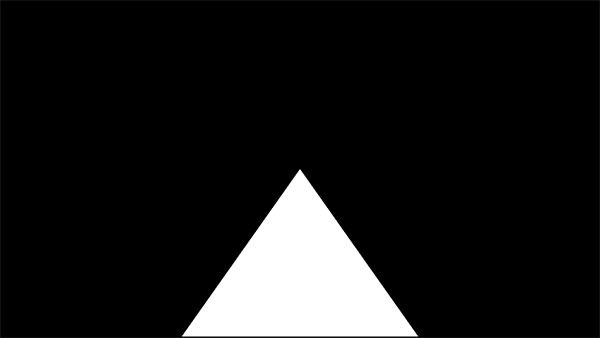
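To check the ray generation in isolation, the shader logic can be mirrored on the CPU. The following is a minimal C++ sketch, assuming a hand-written `float3` stand-in (HLSL's `float3` does not exist in C++) and arbitrary test parameters; `GenerateRay` and its argument list are hypothetical, not the original shader's signature. It computes the centre offsets in floating point, which sidesteps truncating integer division, or unsigned wrap-around in `-ResWidth/2`, if `ResWidth` or `PixelCoord` happen to be integer or unsigned types in the shader.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical CPU stand-in for HLSL's float3, with only the operations the shader uses.
struct float3 { float x, y, z; };

float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator*(float s, float3 v) { return {s * v.x, s * v.y, s * v.z}; }
float3 operator*(float3 v, float s) { return s * v; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float3 normalize(float3 v) { return (1.0f / std::sqrt(dot(v, v))) * v; }

struct Ray { float3 Origin; float3 Direction; };

// Same arithmetic as the shader snippet, except that the centre offsets use
// floating-point division. (px, py) is the pixel, with py increasing downward.
Ray GenerateRay(float3 CamPos, float3 LookVector, float3 UpVector, float3 RightVector,
                float PixelWidth, float PixelHeight, float CamLength,
                int ResWidth, int ResHeight, int px, int py) {
    Ray ray;
    ray.Origin = CamPos;
    float3 XTerm = PixelWidth * (-ResWidth / 2.0f + px + 0.5f) * normalize(RightVector);
    float3 YTerm = PixelHeight * (ResHeight / 2.0f - py - 0.5f) * normalize(UpVector);
    float3 ZTerm = normalize(LookVector) * CamLength;
    ray.Direction = normalize(ZTerm + YTerm + XTerm);
    return ray;
}
```

With an odd resolution, the middle pixel's ray should point straight along `LookVector`, and in the axis-aligned case the top-left pixel's ray should lean left (negative x) and up (positive y).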

But when I change the orientation vectors to represent a 45-degree rotation around the y axis (i.e. LookVector(1,0,1), UpVector(0,1,0), RightVector(1,0,-1)), it does not work as intended and produces the following aberration:

I have been banging my head against this problem all day. I've tried generating the rays with the camera axis-aligned and then applying the appropriate rotation matrix to each direction, but I always get the same messed-up result! The setup seems so easy to visualise, and the method I'm using seems intuitively correct, so I have no idea what I'm doing wrong. Please help.
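The two approaches described here (combining the rotated basis vectors directly, and rotating an axis-aligned direction afterwards) can be compared on the CPU. Below is a minimal C++ sketch, assuming the same left-handed convention as the axis-aligned case (x right, y up, z forward); `float3`, `RotateY`, `LocalDirection`, `DirectionFromBasis` and `DirectionFromRotation` are hypothetical helpers, not part of the original code. With this convention, a 45-degree `RotateY` maps (0,0,1) to a direction along (1,0,1) and (1,0,0) to one along (1,0,-1), which are the LookVector and RightVector given above, and `cross(Up, Look)` reproduces that RightVector.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical CPU stand-in for HLSL's float3.
struct float3 { float x, y, z; };

float3 operator+(float3 a, float3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float3 operator*(float s, float3 v) { return {s * v.x, s * v.y, s * v.z}; }
float dot(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
float3 normalize(float3 v) { return (1.0f / std::sqrt(dot(v, v))) * v; }

// Standard cross product; with x right, y up, z forward, cross(Up, Look) gives Right.
float3 cross(float3 a, float3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Rotation about the y axis by `angle` radians, applied to a column vector.
// Maps (0,0,1) to (sin, 0, cos) and (1,0,0) to (cos, 0, -sin).
float3 RotateY(float3 v, float angle) {
    float c = std::cos(angle), s = std::sin(angle);
    return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
}

// Camera-space (axis-aligned) direction for pixel (px, py), before orientation is applied.
float3 LocalDirection(float PixelWidth, float PixelHeight, float CamLength,
                      int ResWidth, int ResHeight, int px, int py) {
    float x = PixelWidth * (-ResWidth / 2.0f + px + 0.5f);
    float y = PixelHeight * (ResHeight / 2.0f - py - 0.5f);
    return {x, y, CamLength};
}

// Approach 1: weight the normalized basis vectors, as the shader does.
float3 DirectionFromBasis(float3 local, float3 Look, float3 Up, float3 Right) {
    return normalize(local.x * normalize(Right) + local.y * normalize(Up) +
                     local.z * normalize(Look));
}

// Approach 2: build the axis-aligned direction, then rotate it about y.
float3 DirectionFromRotation(float3 local, float angle) {
    return normalize(RotateY(local, angle));
}
```

Because rotating the axis-aligned direction is the same as weighting the rotated axes, the two functions should agree for every pixel, which would be consistent with both methods producing the same picture.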