Hello,

How would you define a hidden unit and its purpose in an artificial neural network? I know that hidden units are connected to, but separate from, the input and output units, but I'm not sure I could describe in plain English what they are used for.

Thanks.

Definition of Hidden Unit

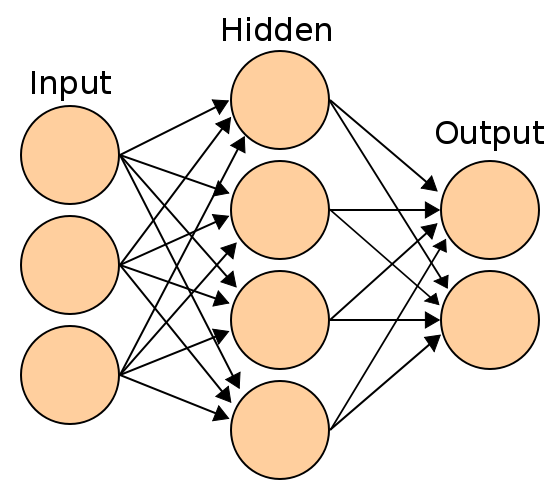

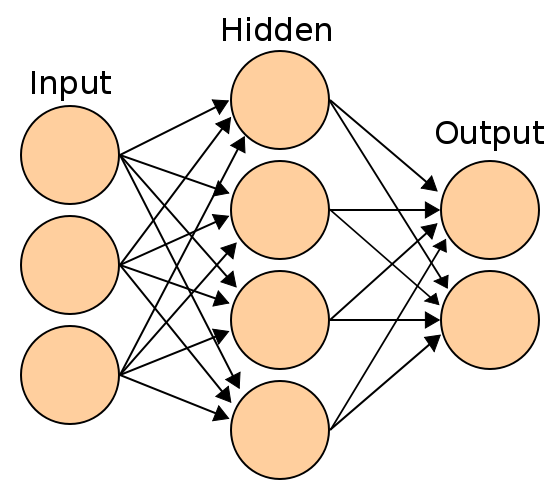

[font="verdana,sans-serif"][font="arial"]Visual definition:[/font][/font][font="arial"][font="verdana,sans-serif"]

[/font][/font]

[font="verdana, sans-serif"]

[/font][font="arial"][font="verdana,sans-serif"]

Purpose: An artificial neural network is a computational model. Adding hidden units makes the model more complex, so that it can solve more difficult problems. As an analogy, it's like increasing the degree of a polynomial.
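To make the "more hidden units, more capacity" idea concrete, here is a minimal sketch in plain Python of a network with one input, a hidden layer of `n_hidden` sigmoid units, and one linear output. The helper names (`init_params`, `forward`, `param_count`) and the random initialization are illustrative assumptions, not anything from this thread. Each extra hidden unit adds three adjustable numbers (an input weight, a bias, and an output weight), loosely like adding another term to a polynomial.

```python
import math
import random

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_hidden, seed=0):
    """Hypothetical helper: random weights for a 1 -> n_hidden -> 1 network."""
    rng = random.Random(seed)
    return {
        "w_in": [rng.uniform(-1, 1) for _ in range(n_hidden)],   # input -> hidden weights
        "b_in": [rng.uniform(-1, 1) for _ in range(n_hidden)],   # hidden biases
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # hidden -> output weights
        "b_out": rng.uniform(-1, 1),                              # output bias
    }

def forward(params, x):
    """Compute the network output for a single scalar input x."""
    # Each hidden unit applies a non-linear activation to its own weighted input.
    hidden = [sigmoid(w * x + b) for w, b in zip(params["w_in"], params["b_in"])]
    # The output unit is a plain weighted sum of the hidden activations.
    return sum(w * h for w, h in zip(params["w_out"], hidden)) + params["b_out"]

def param_count(n_hidden):
    """Three parameters per hidden unit, plus one output bias."""
    return 3 * n_hidden + 1

for n in (1, 4, 16):
    p = init_params(n)
    print(n, "hidden units:", param_count(n), "parameters, f(0.5) =", round(forward(p, 0.5), 4))
```

The weights here are random, so the printed outputs are meaningless on their own; the point is only how the model grows as hidden units are added.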


Hidden LAYER is the term I've always heard.

Anyway, it allows an additional recombination of the input factors, for more indirect reasoning patterns.

Some NNs have more than one hidden layer, though I recall that this complicates the learning strategy used (backpropagation) and makes the teaching process more difficult (but it might still be necessary for the problem space).

It's not uncommon for a neural net to fail its learning process and have to be redone with a different random starting pattern, or to require more training data to learn properly.
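A minimal sketch of the "redo it with a different random start" idea, assuming a 2-2-1 sigmoid network trained on XOR with plain per-example gradient descent (backpropagation on squared error). The names `train_xor` and `train_with_restarts`, the learning rate, the epoch count, and the success threshold are all illustrative choices of mine; whether any particular seed converges depends on them, so no seed is guaranteed to succeed.

```python
import math
import random

XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(seed, epochs=5000, lr=0.5, n_hidden=2):
    """Train a 2 -> n_hidden -> 1 sigmoid net on XOR from one random start; return final MSE."""
    rng = random.Random(seed)
    # w1[j] holds the two input weights of hidden unit j; b1[j] is its bias.
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = rng.uniform(-1, 1)

    def predict(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
        y = sigmoid(sum(wo * hj for wo, hj in zip(w2, h)) + b2)
        return h, y

    for _ in range(epochs):
        for x, target in XOR_DATA:
            h, y = predict(x)
            # Backpropagate the error of 0.5 * (y - target)^2 through the sigmoid units.
            delta_out = (y - target) * y * (1 - y)
            # Hidden deltas use the output weights as they were before this update.
            delta_hidden = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            for j in range(n_hidden):
                w2[j] -= lr * delta_out * h[j]
                w1[j][0] -= lr * delta_hidden[j] * x[0]
                w1[j][1] -= lr * delta_hidden[j] * x[1]
                b1[j] -= lr * delta_hidden[j]
            b2 -= lr * delta_out

    return sum((predict(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

def train_with_restarts(max_restarts=10, threshold=0.01):
    """Retry from new random starting weights until the error drops below threshold."""
    best = None
    for seed in range(max_restarts):
        mse = train_xor(seed)
        if best is None or mse < best[1]:
            best = (seed, mse)
        if mse < threshold:
            break
    return best  # (seed, mse) of the best run seen

print(train_with_restarts())
```

A run that stalls (for example, with both hidden units learning the same feature) simply gets discarded and the next seed is tried, which is the crude but common remedy described above.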


Without the hidden unit, all you are ever doing is constructing a linear equation (i1 * w1 + b).

If the problem at hand has a non-linear response (like a sine wave, or the exclusive-or function), then you can't accurately model it using a linear equation.

The hidden node allows the network to produce a non-linear response to the inputs.
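To illustrate the exclusive-or case, here is a sketch with hand-picked weights (my own choice, not from the thread) and a step activation: one hidden unit acts as OR, another as AND, and the output fires when OR is true but AND is not. The second function brute-forces a small weight grid to show that a single unit of the form step(w1 * i1 + w2 * i2 + b) never reproduces the XOR truth table, since no single straight line separates XOR's 1 cases from its 0 cases.

```python
def step(z):
    """Threshold activation: 1 if the weighted input is positive, else 0."""
    return 1 if z > 0 else 0

def xor_net(i1, i2):
    # Hidden unit 1 behaves like OR: fires if at least one input is on.
    h_or = step(1.0 * i1 + 1.0 * i2 - 0.5)
    # Hidden unit 2 behaves like AND: fires only if both inputs are on.
    h_and = step(1.0 * i1 + 1.0 * i2 - 1.5)
    # Output unit: OR and not AND, i.e. exclusive-or.
    return step(1.0 * h_or - 2.0 * h_and - 0.5)

def single_unit_can_match_xor(grid):
    """Search a grid of weights for one step unit (no hidden layer) that computes XOR."""
    cases = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
    for w1 in grid:
        for w2 in grid:
            for b in grid:
                if all(step(w1 * x1 + w2 * x2 + b) == t for x1, x2, t in cases):
                    return True
    return False

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_net(a, b))
print(single_unit_can_match_xor([x / 2 for x in range(-8, 9)]))  # False
```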


In many NN topologies, hidden units can be grouped into hidden layers, but that isn't always the case; layers are only a convenient abstraction of the actual computation that takes place in units.

For example, a few hidden units could be joined by arbitrary acyclic connections and processed one or a few at a time, rather than a whole layer at a time. This is the usual style for networks obtained by genetic programming, which grow and mutate by adding or altering individual units and connections.
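A minimal sketch of a layer-free network, assuming it is stored as a flat list of individual connections (the unit names, weights, biases, and the `evaluate` helper are all hypothetical). Units are evaluated one at a time in dependency order using the standard library's `graphlib.TopologicalSorter` (Python 3.9+); hidden unit `h2` reads from both an input and another hidden unit, and the output also reads an input directly, so there is no clean notion of "depth".

```python
import math
from graphlib import TopologicalSorter

# Hypothetical network: each connection is (source, target, weight).
CONNECTIONS = [
    ("x1", "h1", 0.8),
    ("x2", "h1", -0.4),
    ("h1", "h2", 1.5),
    ("x2", "h2", 0.3),
    ("h2", "out", 1.2),
    ("x1", "out", -0.7),
]
BIASES = {"h1": 0.1, "h2": -0.2, "out": 0.05}

def evaluate(inputs, connections=CONNECTIONS, biases=BIASES):
    """Evaluate an acyclic network one unit at a time, in dependency order."""
    # TopologicalSorter expects a mapping: node -> set of nodes it depends on.
    preds = {}
    for src, dst, _ in connections:
        preds.setdefault(dst, set()).add(src)
        preds.setdefault(src, set())
    values = dict(inputs)
    for unit in TopologicalSorter(preds).static_order():
        if unit in values:  # input units already have their values
            continue
        total = biases.get(unit, 0.0) + sum(
            w * values[src] for src, dst, w in connections if dst == unit
        )
        values[unit] = math.tanh(total)  # non-linear activation on every non-input unit
    return values

print(evaluate({"x1": 1.0, "x2": 0.5}))
```

Adding a unit or a connection here is just appending to `CONNECTIONS`, which is why this representation suits methods that grow networks piece by piece.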

Hello

The aim of the hidden layers is to introduce non-linearity into the combination of inputs.

Therefore, the activation function of a hidden unit must be non-linear (a sigmoid, for instance).

Otherwise (with linear activations in the hidden layers), the ANN can be replaced with an equivalent single-layer ANN, since a composition of linear combinations can be replaced with an equivalent single linear combination.
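Written out for two layers with identity activation: W2 (W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2), i.e. a single layer with weight matrix W2 W1 and bias W2 b1 + b2. A minimal pure-Python check of that identity, using arbitrary example weights chosen only for illustration (2 inputs, 3 linear "hidden" units, 1 output):

```python
def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def vadd(u, v):
    """Element-wise vector addition."""
    return [a + b for a, b in zip(u, v)]

# Arbitrary example weights, all layers linear.
W1 = [[1.0, -2.0], [0.5, 3.0], [-1.0, 1.0]]
b1 = [0.5, -1.0, 2.0]
W2 = [[2.0, -1.0, 0.5]]
b2 = [0.25]

def two_linear_layers(x):
    hidden = vadd(matvec(W1, x), b1)  # "hidden" layer with identity activation
    return vadd(matvec(W2, hidden), b2)

# The equivalent single layer.
W = matmul(W2, W1)            # [[1.0, -6.5]]
b = vadd(matvec(W2, b1), b2)  # [3.25]

def one_linear_layer(x):
    return vadd(matvec(W, x), b)

x = [0.3, -1.2]
print(two_linear_layers(x), one_linear_layer(x))  # equal up to float rounding
```

Putting a sigmoid (or any other non-linear function) on the hidden layer breaks this factorization, which is exactly why the hidden activation has to be non-linear.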

btw:

unit = 'artificial neuron'

layer = set of units at the same depth

