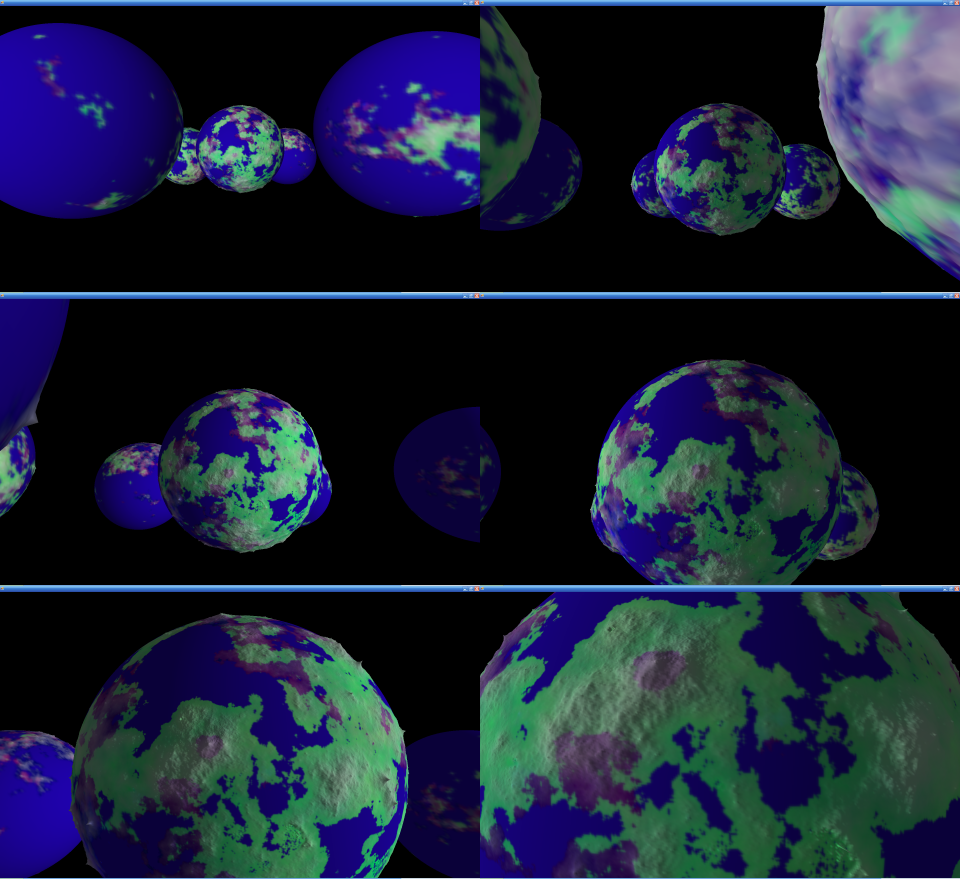

Here is a live example:

http://dl.dropbox.co...in/terrain.html

Separate topic: I am drawing the vertex normals to check that they are reasonably sane, and they appear to be. I first tried to draw the lines as triangles in which two of the points are identical, but nothing was displayed at all, so I offset one of the points by a small amount, which is why the lines look like needles. Is that expected? What is the correct way to render lines?
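For reference, a triangle with two identical points has zero area, so it produces no fragments. The usual alternative is the `gl.LINES` primitive, which draws one segment per pair of vertices. Below is a minimal sketch of building such a vertex list. It assumes flat `[x, y, z, ...]` arrays for positions and normals; `buildNormalLines` and its parameters are hypothetical names, not part of the page above.

```javascript
// Hypothetical helper: builds line-segment endpoints for visualizing normals.
// positions and normals are flat [x, y, z, x, y, z, ...] arrays of equal length.
// Returns a Float32Array holding two points (start, end) per input vertex.
function buildNormalLines(positions, normals, length) {
  if (positions.length !== normals.length) {
    throw new Error("positions and normals must have the same length");
  }
  const lines = new Float32Array(positions.length * 2);
  for (let i = 0; i < positions.length; i += 3) {
    const o = i * 2; // six output floats per input vertex
    // Start point: the vertex itself.
    lines[o] = positions[i];
    lines[o + 1] = positions[i + 1];
    lines[o + 2] = positions[i + 2];
    // End point: the vertex offset along its normal.
    lines[o + 3] = positions[i] + normals[i] * length;
    lines[o + 4] = positions[i + 1] + normals[i + 1] * length;
    lines[o + 5] = positions[i + 2] + normals[i + 2] * length;
  }
  return lines;
}

// Usage sketch, assuming an existing WebGL context `gl`, an active shader
// program, and an attribute location `positionLocation` (all assumptions):
//   const buffer = gl.createBuffer();
//   gl.bindBuffer(gl.ARRAY_BUFFER, buffer);
//   gl.bufferData(gl.ARRAY_BUFFER, lines, gl.STATIC_DRAW);
//   gl.enableVertexAttribArray(positionLocation);
//   gl.vertexAttribPointer(positionLocation, 3, gl.FLOAT, false, 0, 0);
//   gl.drawArrays(gl.LINES, 0, lines.length / 3);
```

Since the lines are only a debugging aid, they would probably be drawn with a separate unlit shader, or with the lighting weight forced to 1.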

My main issue is that the linear interpolation of the light weighting between vertices just looks ugly. Will interpolating the normals and calculating the light weighting per pixel improve things at all for a directional light?

Here's my GLSL:

<script id="shader-fs" type="x-shader/x-fragment">
    precision mediump float;

    varying vec4 vColor;
    varying vec3 vLightWeighting;

    void main(void) {
        gl_FragColor = vec4(vColor.rgb * vLightWeighting, vColor.a);
    }
</script>

<script id="shader-vs" type="x-shader/x-vertex">
    attribute vec3 aVertexPosition;
    attribute vec3 aVertexNormal;
    attribute vec4 aVertexColor;

    uniform mat4 uMVMatrix;
    uniform mat4 uPMatrix;
    uniform mat3 uNMatrix;

    uniform vec3 uAmbientColor;
    uniform vec3 uLightingDirection;
    uniform vec3 uDirectionalColor;

    varying vec4 vColor;
    varying vec3 vLightWeighting;

    void main(void) {
        gl_Position = uPMatrix * uMVMatrix * vec4(aVertexPosition, 1.0);
        vColor = aVertexColor;

        vec3 transformedNormal = uNMatrix * aVertexNormal;
        float directionalLightWeighting = max(dot(transformedNormal, uLightingDirection), 0.0);
        vLightWeighting = uAmbientColor + uDirectionalColor * directionalLightWeighting;
    }
</script>
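To get a feel for what per-pixel lighting would change, the two approaches can be compared numerically along one edge. This is only a sketch under assumptions: `lightDir` is taken to be a unit vector pointing toward the light (as the `dot` in the vertex shader implies), ambient and color terms are left out, and all function names are hypothetical.

```javascript
function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

function normalize(v) {
  const len = Math.sqrt(dot(v, v));
  return [v[0] / len, v[1] / len, v[2] / len];
}

function lerp(a, b, t) {
  return [
    a[0] + (b[0] - a[0]) * t,
    a[1] + (b[1] - a[1]) * t,
    a[2] + (b[2] - a[2]) * t,
  ];
}

// Per-vertex: the diffuse weight is computed at each vertex and the
// resulting weights are interpolated (what vLightWeighting does above).
function perVertexWeight(n0, n1, lightDir, t) {
  const w0 = Math.max(dot(n0, lightDir), 0);
  const w1 = Math.max(dot(n1, lightDir), 0);
  return w0 + (w1 - w0) * t;
}

// Per-pixel: the normal is interpolated and renormalized first,
// then the diffuse weight is computed from that normal.
function perFragmentWeight(n0, n1, lightDir, t) {
  const n = normalize(lerp(n0, n1, t));
  return Math.max(dot(n, lightDir), 0);
}

// Halfway between a normal facing away from the light and one facing it.
const n0 = [1, 0, 0];
const n1 = [0, 1, 0];
const lightDir = [0, 1, 0];
const perVertexMid = perVertexWeight(n0, n1, lightDir, 0.5);     // 0.5
const perFragmentMid = perFragmentWeight(n0, n1, lightDir, 0.5); // about 0.707
```

Without the renormalization and the `max(..., 0.0)` clamp, the dot product is linear in the normal and both approaches give identical results, so the two only diverge where neighboring normals differ a lot or where one of them faces away from the light.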

I did something similar with per-vertex lighting a few years ago (using the fixed-function pipeline rather than GLSL) and didn't see these kinds of artifacts:

http://claritydevjou...ss-week-11.html