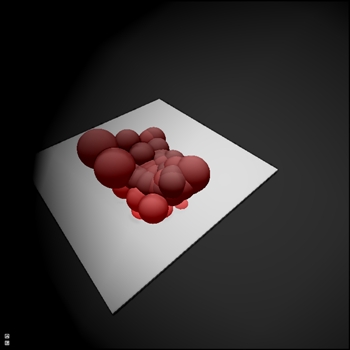

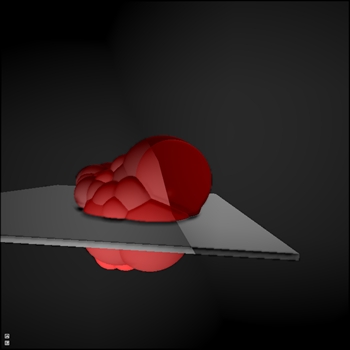

My goal is to draw many lights in my scene. When I add a second light, it occludes the first (see fig. 2). I've tried using `glAccum()`, without success. When I render a second light that overlaps the first in screen space (see figs. 3 and 4), the overlapping pixels are overwritten instead of being added together. I'm having trouble understanding how to create and display a light accumulation buffer in a single shader pass. I could ping-pong FBOs between lights, but that seems like it would be very slow, and none of the material I've read suggests it.

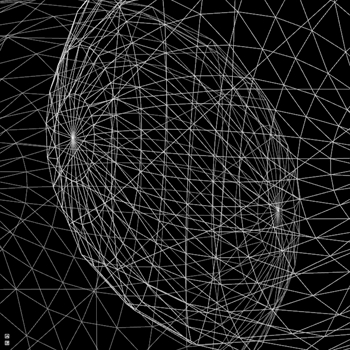

The vertex shader (see fig. 5) and fragment shader (see fig. 6) for my lighting pass are below.

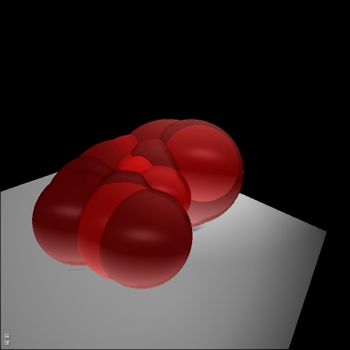

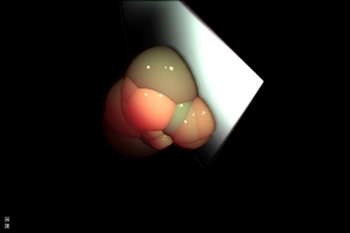

Fig. 1. One light

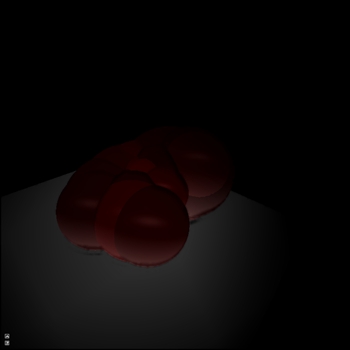

Fig. 2. Two lights, occluding instead of accumulating

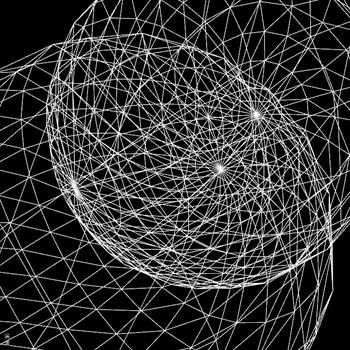

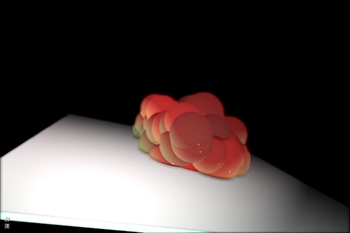

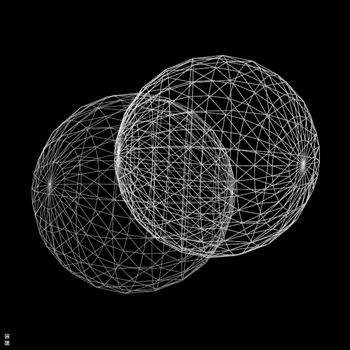

Fig. 3. Spheres representing lights

Fig. 4. Zoomed-out view of fig. 3

Fig. 5. Lighting pass vertex shader

```glsl
varying vec4 lightPosition; // Clip-space position of the current light-volume vertex

void main( void )
{
    lightPosition = gl_ModelViewProjectionMatrix * gl_Vertex;
    gl_Position = lightPosition;
}
```

Fig. 6. Lighting pass fragment shader

```glsl
uniform vec3 eyePoint;          // Camera eye point
uniform vec4 lightAmbient;      // Light ambient color
uniform vec3 lightCenter;       // Center of light shape
uniform vec4 lightDiffuse;      // Light diffuse color
uniform float lightRadius;      // Size of light
uniform vec4 lightSpecular;     // Light specular color
uniform vec2 pixel;             // To convert pixel coords to [0,0]-[1,1]
uniform sampler2D texAlbedo;    // Color data
uniform sampler2D texMaterial;  // R=spec level, G=spec power, B=emissive
uniform sampler2D texNormal;    // Normal-depth map
uniform sampler2D texPosition;  // Position data
uniform sampler2D texSsao;      // SSAO

varying vec4 lightPosition;     // Position of current vertex in light

void main()
{
    // Get screen space coordinate
    vec2 uv = gl_FragCoord.xy * pixel;

    // Sample G-buffer
    vec4 albedo = texture2D( texAlbedo, uv );
    vec4 material = texture2D( texMaterial, uv );
    vec4 normal = texture2D( texNormal, uv );
    vec4 position = texture2D( texPosition, uv );
    vec4 ssao = texture2D( texSsao, uv );

    // Calculate reflection
    vec3 eye = normalize( -eyePoint );
    vec3 light = normalize( lightCenter.xyz - position.xyz );
    vec3 reflection = normalize( -reflect( light, normal.xyz ) );

    // Calculate light values
    vec4 ambient = lightAmbient;
    vec4 diffuse = clamp( lightDiffuse * max( dot( normal.xyz, light ), 0.0 ), 0.0, 1.0 );
    vec4 specular = clamp( material.r * lightSpecular * pow( max( dot( reflection, eye ), 0.0 ), material.g ), 0.0, 1.0 );
    vec4 emissive = vec4( material.b ); // GLSL does not implicitly convert a float to a vec4

    // Combine color and light values
    vec4 color = albedo;
    color += ambient + diffuse + specular + emissive;

    // Apply light falloff
    float falloff = 1.0 - distance( lightPosition, position ) / lightRadius;
    color *= falloff;

    // Apply SSAO
    color -= vec4( 1.0 ) * ( 1.0 - ssao.r );

    // Set final color
    gl_FragColor = color;
}
```