Hi,

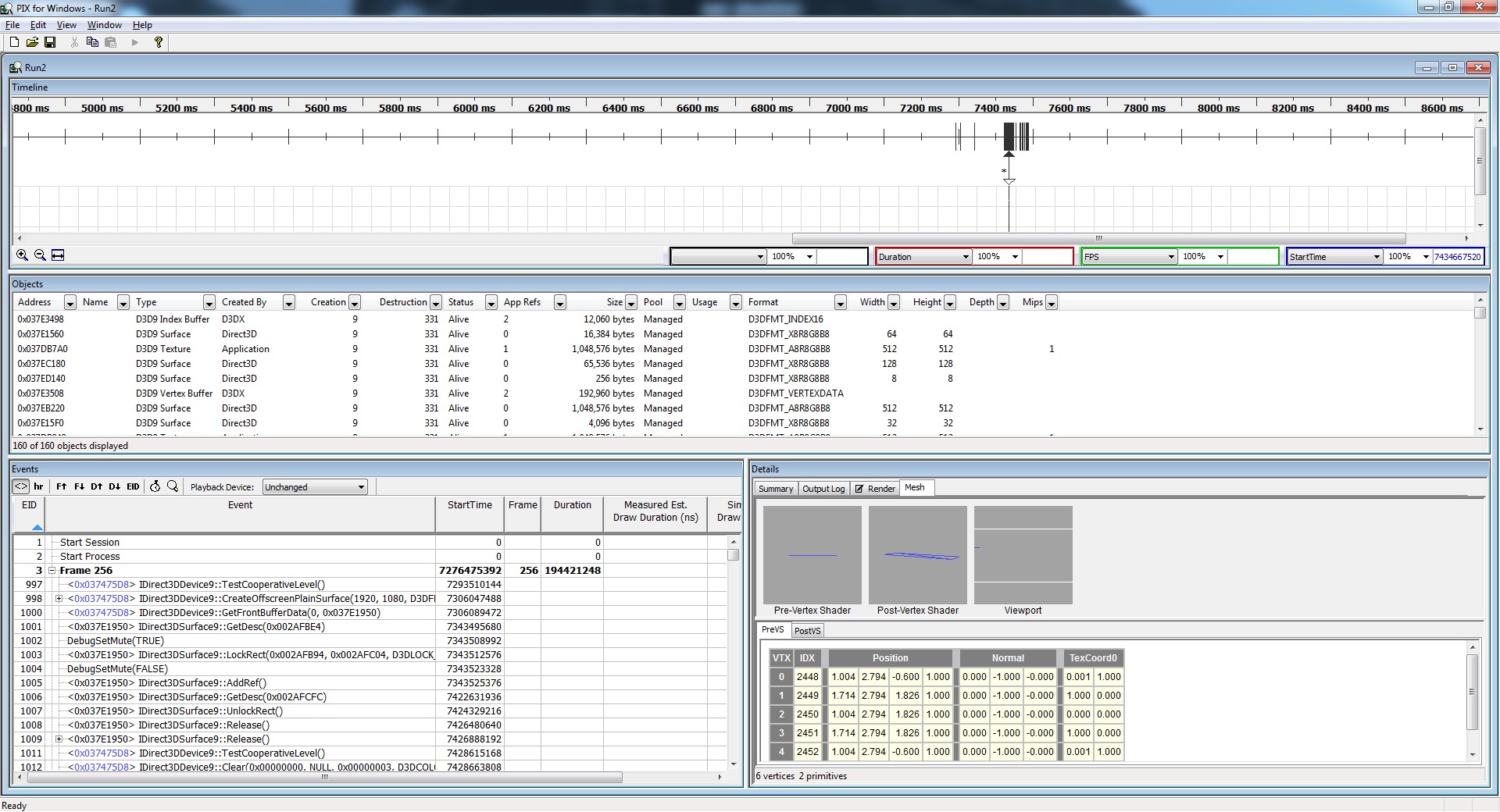

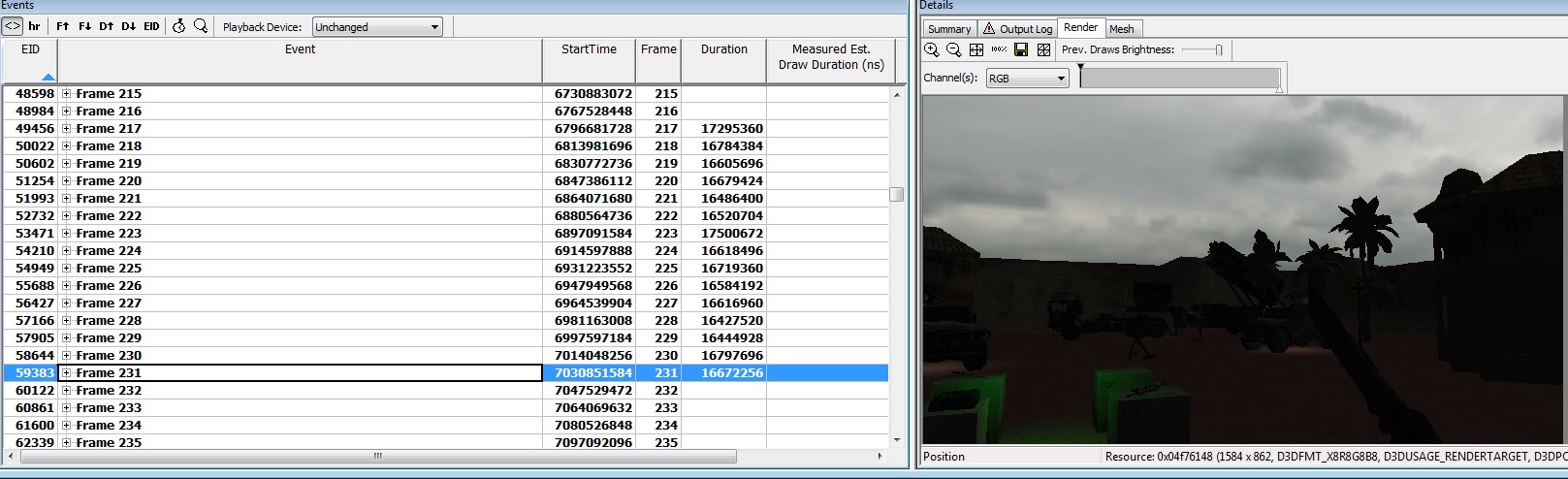

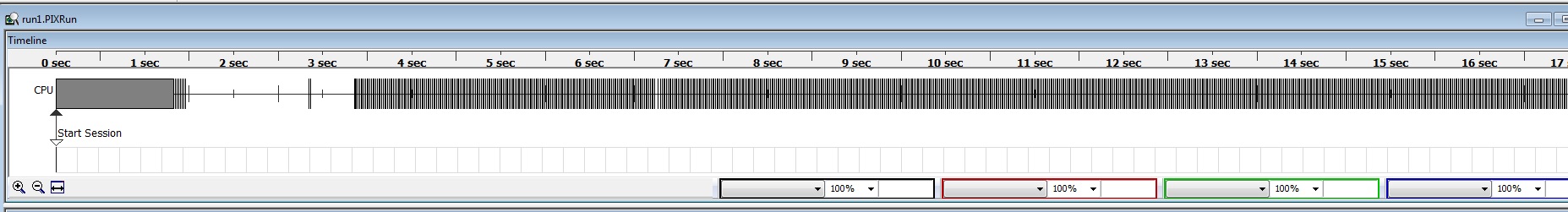

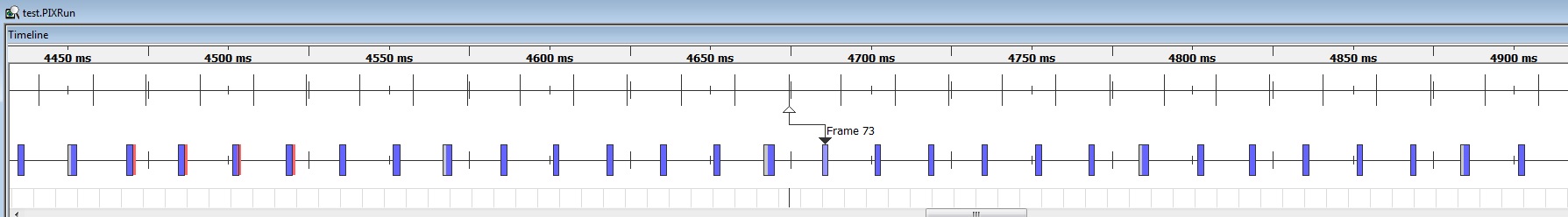

I've been profiling my DIY 3D engine with PIX after making some major improvements to my render queue.

The measured duration per frame is somewhere between 100,000,000 and 200,000,000 (FPS 5.1?).

I'm assuming these are nanoseconds, which works out to 0.1 to 0.2 seconds per frame.
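A quick sanity check of that conversion, as a minimal sketch that assumes (as above, not confirmed) that PIX reports these durations in nanoseconds:

```python
# Assumption: the PIX frame durations are in nanoseconds.
NS_PER_SECOND = 1_000_000_000


def ns_to_seconds(duration_ns: int) -> float:
    """Convert a duration in nanoseconds to seconds."""
    return duration_ns / NS_PER_SECOND


def fps_from_ns(duration_ns: int) -> float:
    """Frames per second if every frame took duration_ns nanoseconds."""
    return NS_PER_SECOND / duration_ns


for ns in (100_000_000, 200_000_000):
    print(f"{ns:,} ns = {ns_to_seconds(ns):.3f} s -> {fps_from_ns(ns):.1f} FPS")
```

Under that assumption the range corresponds to roughly 5 to 10 FPS, which brackets the 5.1 FPS figure.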

At 60 FPS, without skipping frames, I'd expect a frame to take 1/60 ≈ 0.0167 seconds (about 16.7 ms).
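To compare the measured frames against that 60 FPS budget, here is a small sketch; the helper names are hypothetical and the 100 ms and 200 ms inputs come from the nanosecond assumption above:

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame, in milliseconds, to sustain target_fps."""
    return 1000.0 / target_fps


def budget_ratio(frame_ms: float, target_fps: float) -> float:
    """How many frame budgets a measured frame uses (> 1.0 means too slow)."""
    return frame_ms / frame_budget_ms(target_fps)


budget = frame_budget_ms(60)  # about 16.67 ms per frame at 60 FPS
for frame_ms in (100.0, 200.0):
    ratio = budget_ratio(frame_ms, 60)
    print(f"{frame_ms:.0f} ms frame = {ratio:.1f}x the {budget:.2f} ms budget")
```

If the units are right, the captured frames take roughly 6 to 12 times the 60 FPS budget.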

A few questions:

- Are these assumptions correct?

- If so, why does it still look so smooth ('flawless') at only around 6 FPS?

- How can I tell in PIX whether I'm CPU bound or GPU bound?

- How long do your average frames take, measured the same way?

P.S. I'm not planning to start optimizing, because I don't have any performance issues with my current scenes. I'm just curious and want to understand the measurements. Here's a screenshot of a captured frame in PIX.