Hi all.

Buffer Creation:

```cpp
// Create the LLSEB
D3D11_BUFFER_DESC llsebBD;
llsebBD.BindFlags = D3D11_BIND_UNORDERED_ACCESS | D3D11_BIND_SHADER_RESOURCE;
llsebBD.ByteWidth = sizeof(UINT) * totalTiles * 2; // 2 values per tile (start and end)
llsebBD.CPUAccessFlags = 0;
llsebBD.MiscFlags = 0;
llsebBD.StructureByteStride = 0;
llsebBD.Usage = D3D11_USAGE_DEFAULT;

// Create buffer - no initial data again.
HR(d3dDevice->CreateBuffer(&llsebBD, 0, &lightListStartEndBuffer));
```

UAV Creation:

```cpp
// UAV
D3D11_UNORDERED_ACCESS_VIEW_DESC llsebUAVDesc;
llsebUAVDesc.Format = DXGI_FORMAT_R32G32_UINT;
llsebUAVDesc.Buffer.FirstElement = 0;
llsebUAVDesc.Buffer.Flags = 0;
llsebUAVDesc.Buffer.NumElements = totalTiles;
llsebUAVDesc.ViewDimension = D3D11_UAV_DIMENSION_BUFFER;
HR(d3dDevice->CreateUnorderedAccessView(lightListStartEndBuffer, &llsebUAVDesc,
    &lightListStartEndBufferUAV));
```

SRV Creation:

```cpp
D3D11_SHADER_RESOURCE_VIEW_DESC llsebSRVDesc;
// Two 32-bit UINTs per entry (xy)
llsebSRVDesc.Format = DXGI_FORMAT_R32G32_UINT;
llsebSRVDesc.Buffer.FirstElement = 0;
llsebSRVDesc.Buffer.ElementOffset = 0;
llsebSRVDesc.Buffer.NumElements = totalTiles;
llsebSRVDesc.Buffer.ElementWidth = sizeof(UINT) * 2;
llsebSRVDesc.ViewDimension = D3D11_SRV_DIMENSION_BUFFER;
HR(d3dDevice->CreateShaderResourceView(lightListStartEndBuffer, &llsebSRVDesc,
    &lightListStartEndBufferSRV));
```
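For reference, the three descriptors above are meant to describe the same memory: the buffer holds two UINTs per tile, and both views read it as totalTiles elements of DXGI_FORMAT_R32G32_UINT, which is 8 bytes per element. The sketch below just does that arithmetic on the CPU so ByteWidth and each view's NumElements can be checked against each other. The helper names (llsebByteWidth, elementsInBuffer, viewFitsInBuffer) and the 8-byte stride constant are hypothetical, for illustration only; none of them come from the D3D11 API.

```cpp
#include <cstdint>

// Assumption: DXGI_FORMAT_R32G32_UINT stores two 32-bit channels, i.e. 8 bytes per element.
constexpr std::uint32_t kR32G32UintStride =
    static_cast<std::uint32_t>(2 * sizeof(std::uint32_t));

// ByteWidth as computed in the buffer description: two UINTs (start, end) per tile.
constexpr std::uint32_t llsebByteWidth(std::uint32_t totalTiles) {
    return static_cast<std::uint32_t>(sizeof(std::uint32_t)) * totalTiles * 2;
}

// Number of whole elements of the given stride that fit in byteWidth bytes.
constexpr std::uint32_t elementsInBuffer(std::uint32_t byteWidth, std::uint32_t stride) {
    return byteWidth / stride;
}

// True if the element range [firstElement, firstElement + numElements) lies inside the buffer.
// The sum is widened to 64 bits so large counts cannot wrap around.
constexpr bool viewFitsInBuffer(std::uint32_t firstElement, std::uint32_t numElements,
                                std::uint32_t stride, std::uint32_t byteWidth) {
    return (static_cast<std::uint64_t>(firstElement) + numElements) * stride <= byteWidth;
}
```

With a hypothetical 1200 tiles, ByteWidth comes out at 9600 bytes, which is exactly 1200 R32G32_UINT elements, so NumElements = totalTiles on both views is consistent with the buffer size.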

The idea here is to create a Light List Start End Buffer (llseb) for a tile-based forward renderer. In my solution, I have a buffer of 32-bit uints (2 per tile). Creating the buffer works fine, as does the UAV (writes in the compute shader seem to work perfectly fine). It is with the SRV, however, that I have problems (reading the buffer in a pixel shader in order to visualise how many lights affect a tile). Specifically, the following line:

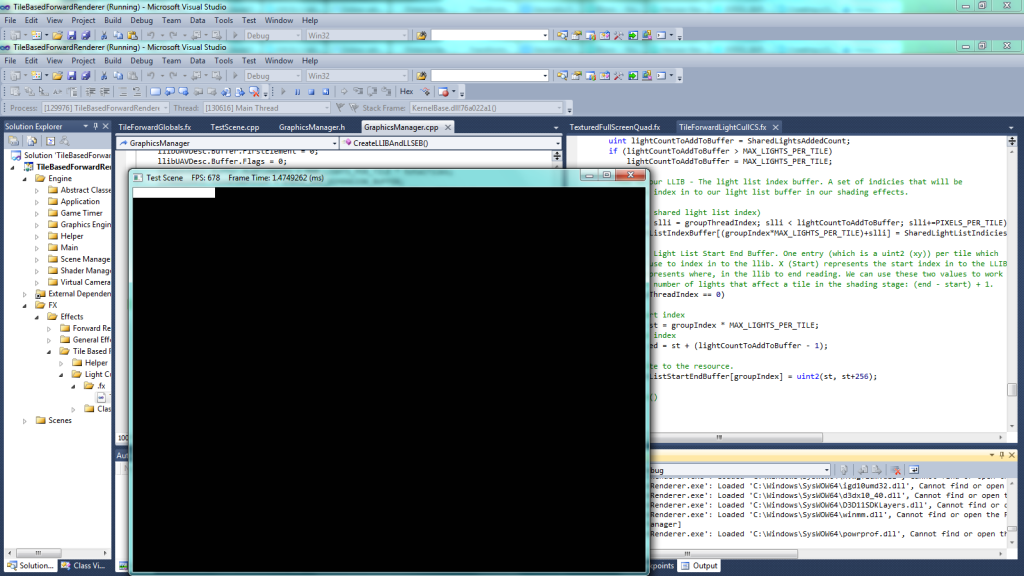

llsebSRVDesc.Buffer.ElementWidth = sizeof(UINT) * 2;With this configuration, it produces the following output when we want to visualise this buffer (note that I have faked the output from the compute shader - I'm also having some issues with lights not passing the frustum/sphere intesection test, but i'll be working on that later).

Now, this clearly isn't what we want. :/

However, if we change the line in question to:

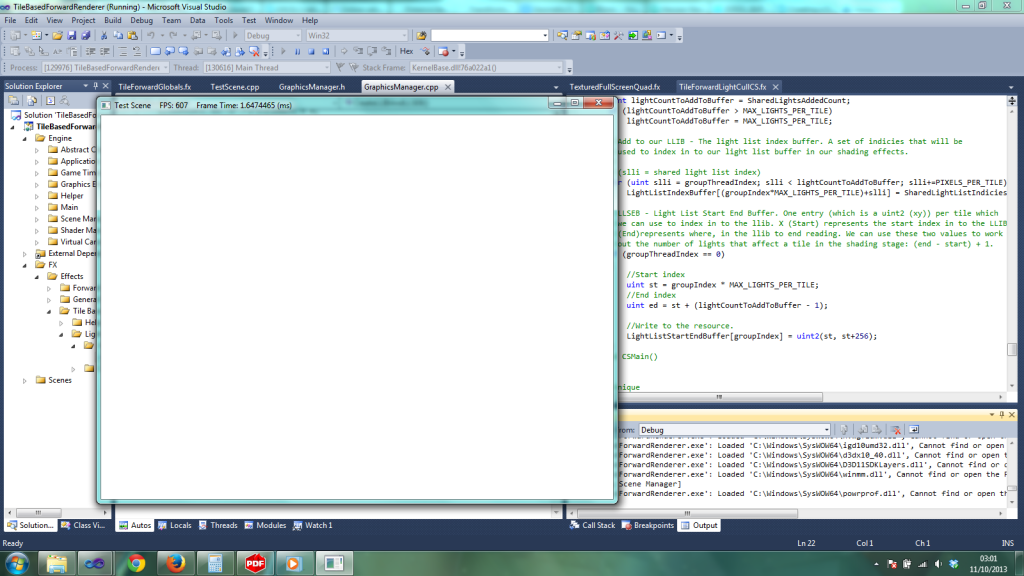

llsebSRVDesc.Buffer.ElementWidth = totalTiles;We get the following output indicating that we are reading the buffer correctly:

This seems correct. But I am seriously at a loss as to why this works. According to MSDN:

> ElementWidth
>
> Type: UINT
>
> The width of each element (in bytes). This can be determined from the format stored in the shader-resource-view description.

This seems to indicate that I was right in my first example (or at least sort of correct). Or am I misunderstanding this completely?
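One likely explanation, worth confirming against the SDK's d3d11.h: D3D11_BUFFER_SRV is declared as two anonymous unions, one holding FirstElement/ElementOffset and the other holding NumElements/ElementWidth. ElementWidth is therefore not a separate field, and assigning it overwrites NumElements. In the first configuration the last write stores sizeof(UINT) * 2 = 8 into that slot, so the SRV would cover only the first 8 tiles (and, presumably, reads past the end of the view return zero), whereas in the second configuration ElementWidth = totalTiles happens to store the correct element count. The sketch below reproduces the aliasing with a hypothetical mirror struct, BufferSrvMirror, so it compiles without the Windows SDK; replaySrvAssignments is likewise an illustrative helper, not part of D3D11.

```cpp
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for D3D11_BUFFER_SRV so this compiles without d3d11.h.
// Assumption: same layout as the real header, i.e. two anonymous unions.
struct BufferSrvMirror {
    union {
        std::uint32_t FirstElement;
        std::uint32_t ElementOffset;  // shares storage with FirstElement
    };
    union {
        std::uint32_t NumElements;
        std::uint32_t ElementWidth;   // shares storage with NumElements
    };
};

// The struct only has room for two 32-bit values, not four.
static_assert(sizeof(BufferSrvMirror) == 2 * sizeof(std::uint32_t), "two 32-bit slots");

// Replays the SRV assignments from the post, in the same order, and copies out the
// two raw 32-bit words the descriptor ends up holding: {first element, element count}.
void replaySrvAssignments(std::uint32_t totalTiles, std::uint32_t elementWidthValue,
                          std::uint32_t out[2]) {
    BufferSrvMirror b{};
    b.FirstElement = 0;
    b.ElementOffset = 0;                 // same slot as FirstElement
    b.NumElements = totalTiles;
    b.ElementWidth = elementWidthValue;  // overwrites NumElements
    std::memcpy(out, &b, sizeof b);      // inspect the bytes without reading an inactive member
}
```

If this is what is happening, setting only FirstElement and NumElements (the per-element size already follows from Format) should behave like the second configuration without relying on the alias.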

Many thanks.

Dan