> Isn't Translation in global/world space?

Translation is relative to a reference. Whether this reference is world space or any other space is not stated by the transformation itself but by the context in which it is used. The same is true for any other transformation, including any composed one. There is no tag on a "translation" that tells whether it is given in model space, world space, view space, an object's local space, tangent space, or whatever other space.
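To illustrate that the numbers of a translation carry no such tag, here is a minimal 2D sketch (the names `Vec2`, `translateInWorld`, and `translateInFrame` are hypothetical). The same offset (1, 0) moves a point along world x when it is read as a world-space translation, but along world y when it is read in the local axes of a frame rotated by 90°.

```cpp
#include <cmath>

// 2D keeps the sketch short; the point is the same in 3D.
struct Vec2 { double x, y; };

// Read `offset` as a translation given in world space: just add it.
Vec2 translateInWorld(Vec2 p, Vec2 offset) {
    return {p.x + offset.x, p.y + offset.y};
}

// Read the very same numbers as a translation given in the local axes of a frame
// rotated by `frameAngle` (radians) w.r.t. world: rotate the offset into world axes first.
Vec2 translateInFrame(Vec2 p, Vec2 offset, double frameAngle) {
    const double c = std::cos(frameAngle), s = std::sin(frameAngle);
    return {p.x + c * offset.x - s * offset.y,
            p.y + s * offset.x + c * offset.y};
}
```

Only the context (which function the caller picks) decides what the offset means; the offset itself is identical in both calls.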

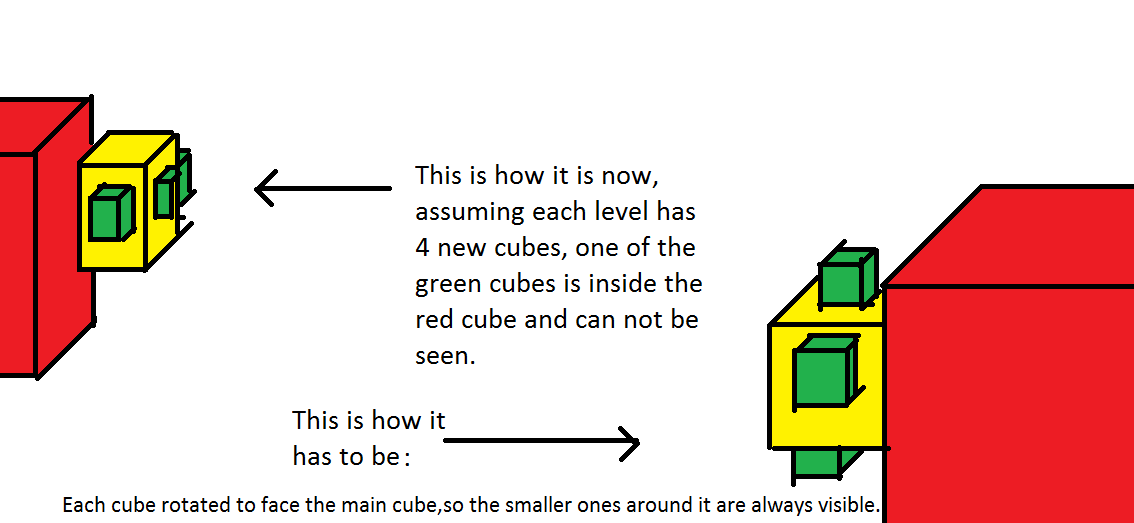

An example: an entity has a position, an orientation, and a size (better names than translation, rotation, and scaling with respect to what they describe). You, as the programmer, define that they are always to be interpreted w.r.t. world space. That is fine, because then all entities' transforms share a well-defined common space. Now, if an entity should follow forward kinematics by being attached to a parent entity, the child entity gets both a pointer to the parent and another tuple of position, orientation, and perhaps scaling, but this time as localPosition, localOrientation, and localScaling, defined to be relative to the parent entity.
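The data layout just described might look like the following minimal C++ sketch. It groups position, orientation, and size into a hypothetical `Pose` struct (so localPosition, localOrientation, and localScaling become `local.position`, `local.orientation`, and `local.size`); `Vec3`, `Quat`, `Entity`, `attachTo`, and `hasParent` are likewise illustrative names, not any particular engine's API.

```cpp
// Hypothetical minimal types; a real engine would use its own math library.
struct Vec3 { double x = 0.0, y = 0.0, z = 0.0; };
struct Quat { double w = 1.0, x = 0.0, y = 0.0, z = 0.0; };  // identity rotation by default

// Position, orientation, and size relative to *some* reference. The struct itself
// does not record which reference; that is decided by where it is stored.
struct Pose {
    Vec3 position;
    Quat orientation;
    Vec3 size{1.0, 1.0, 1.0};
};

struct Entity {
    Pose world;                 // by the programmer's convention: relative to world space
    Entity* parent = nullptr;   // set only when attached for forward kinematics
    Pose local;                 // relative to the parent; ignored while parent is null

    void attachTo(Entity* newParent) { parent = newParent; }
    bool hasParent() const { return parent != nullptr; }
};
```

Note that `world` and `local` have the same type: only the member they live in, i.e. the convention, says which space they refer to.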

If you now want to compute a geometrical relation between that child entity and some other entity, you can do so only if all transforms involved are given w.r.t. the same reference. That means you first have to ensure that the world position (and so on) of the child entity is up to date, which may mean requesting the world position (and so on) from the parent entity and recomputing the child's own world position (and so on) from it, e.g. when using row vectors:

```
this->transform = this->localTransform * parent->transform();
```

where each transform is expected to be computed as the product

```
matrix(size) * matrix(orientation) * matrix(position)
```
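The two formulas above can be sketched in C++ as follows. This assumes 4×4 matrices stored as `std::array`, row vectors (a point is transformed as p' = p * M, so the translation sits in the last row), a uniform size, and an orientation reduced to a rotation about z for brevity. All helper names (`sizeMatrix`, `orientationMatrix`, `positionMatrix`, `Entity::transform`) are hypothetical. The world transform is recomputed on demand from the parent chain, so it can never be stale.

```cpp
#include <array>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;
using Vec4 = std::array<double, 4>;  // row vector (x, y, z, w)

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0;
    return m;
}

// Plain matrix product a * b.
Mat4 operator*(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Row vector times matrix: p' = p * M.
Vec4 operator*(const Vec4& p, const Mat4& m) {
    Vec4 r{};
    for (int j = 0; j < 4; ++j)
        for (int k = 0; k < 4; ++k)
            r[j] += p[k] * m[k][j];
    return r;
}

Mat4 sizeMatrix(double s) {  // uniform size only, for brevity
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

Mat4 orientationMatrix(double angleZ) {  // counter-clockwise rotation about z, row-vector form
    Mat4 m = identity();
    const double c = std::cos(angleZ), s = std::sin(angleZ);
    m[0][0] = c;  m[0][1] = s;
    m[1][0] = -s; m[1][1] = c;
    return m;
}

Mat4 positionMatrix(double x, double y, double z) {  // translation in the last row
    Mat4 m = identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

struct Entity {
    // matrix(size) * matrix(orientation) * matrix(position), relative to the parent
    // (or to world space when there is no parent).
    Mat4 localTransform = identity();
    const Entity* parent = nullptr;

    // World transform: local * parent's world, recursively up to the root.
    Mat4 transform() const {
        return parent ? localTransform * parent->transform() : localTransform;
    }
};
```

Recomputing on every call costs one matrix product per ancestor; an engine would typically cache the result and invalidate it when a parent changes, trading simplicity for speed.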

Once that is done, a relation can be computed, e.g.

```
facing_matrix = facingFromTo(source.transform, target.transform);
```

where it must still be remembered that facing_matrix is given w.r.t. world space.
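One possible reading of such a facingFromTo, reduced to a yaw angle in the xy plane for brevity, is sketched below. `facingYawFromTo` and `positionOf` are hypothetical names, and row-vector matrices (translation in the last row) are assumed. Because both positions are read from world transforms, the resulting angle is relative to world space as well; passing a child's local transform instead would silently mix two references.

```cpp
#include <array>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// With row vectors the translation part of a transform sits in the last row.
std::array<double, 3> positionOf(const Mat4& worldTransform) {
    return {worldTransform[3][0], worldTransform[3][1], worldTransform[3][2]};
}

// Hypothetical stand-in for facingFromTo: the world-space yaw (radians, about world z)
// at which a +x-forward object located at the source would face the target.
// Both arguments must be world transforms for the result to mean anything.
double facingYawFromTo(const Mat4& sourceWorld, const Mat4& targetWorld) {
    const auto s = positionOf(sourceWorld);
    const auto t = positionOf(targetWorld);
    return std::atan2(t[1] - s[1], t[0] - s[0]);
}
```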

> The HasParent() branch simply updates the entity's transform to be relative to the one of the parent, so if the parent moves, the entity will be moved with it.

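Yes, but it does so at the wrong moment: the child's world transform has to be brought up to date from the parent's current one before any relation to another entity is computed. See above.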
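The timing problem can be reproduced with a minimal sketch. It assumes (the actual code is not shown here) that the HasParent() branch derives the child's cached world value from the parent's cached world value during the child's own update. `Node`, `refreshWorld`, and `refreshInOrder` are hypothetical, and 1D positions stand in for full transforms. If the child is refreshed before its parent, it reads the parent's outdated value.

```cpp
#include <vector>

// 1D positions keep the sketch short; the ordering problem is the same for full transforms.
struct Node {
    double localPosition = 0.0;  // relative to the parent (or world if there is none)
    double worldPosition = 0.0;  // cached; only valid after refreshWorld()
    Node* parent = nullptr;

    // Mirrors a HasParent()-style branch: derive the world value from the parent's cache.
    void refreshWorld() {
        worldPosition = parent ? localPosition + parent->worldPosition : localPosition;
    }
};

// Refreshing in an arbitrary order can read a parent's stale cache.
void refreshInOrder(const std::vector<Node*>& order) {
    for (Node* n : order) n->refreshWorld();
}
```

With the parent at 10 and the child at local 1, refreshing `{&child, &parent}` leaves the child at world 1 (stale), while `{&parent, &child}` gives 11. Refreshing parents before children, or computing world transforms on demand when a relation is needed, avoids the stale read.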