Hi,

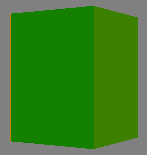

For about a week now I've been learning deferred shading. Things were running smooth until I ran into a couple snags. while implementing my shaders(3.0) I have been getting strange lighting artifacts and I managed to narrow it down to the normal map/buffer of the g-buffer:

If you look along the left edge of the model you'll see the artifact( orangish color ). I've notice that this is a common problem with deferred shaders and have not mange to find any way to resolve the issue. I've tried disabling multi-sampling/anti-aliasing, adjusting the filters to none, point, linear, antistropic, and adjusting the pixel cooridantes to match the texel offset( -=.5/screenWidth, -=.5/screenHeight). Different techniques only minimize the artifacts.

So my first question is, how do you combat lighting artifacts.

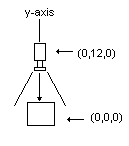

Another problem that I have is that when the camera sits above or below the model, along the y-axis, the model is not rendered. Why is this?

If I adjust the camera's x or y value the slightest, say .0001f, then the model is rendered. Is this another down sides of deferred shading?

shader code:

[g-buffer]

///////////////////////////////////////////

// G L O B A L V A R I A B L E S

///////////////////////////////////////////

float4x4 gWorld;

float4x4 gWorldViewProjection;

float gSpecularIntensity;

float gSpecularPower;

sampler2D gColorMap;

///////////////////////////////////////////

vsOutput vsDeferredShaderGeometryBuffer( vsInput IN )

{

vsOutput OUT = (vsOutput)0;

OUT.position = mul( float4(IN.position,1.f), gWorldViewProjection );

OUT.texcoord = IN.texcoord;

OUT.normal = normalize(mul( IN.normal, (float3x3)gWorld ));

OUT.depth.x = OUT.position.z;

OUT.depth.y = OUT.position.w;

return OUT;

}

///////////////////////////////////////////

psOutput psDeferredShaderGeometryBuffer( vsOutput IN )

{

psOutput OUT = (psOutput)0;

OUT.color.rgb = tex2D( gColorMap, IN.texcoord );

OUT.color.a = 1;

OUT.normal.xyz = IN.normal * .5f + .5f;

OUT.normal.z = 0;

OUT.depth = IN.depth.x / IN.depth.y;

return OUT;

}

[g-buffer]

[lighting shader]

///////////////////////////////////////////

// G L O B A L V A R I A B L E S

///////////////////////////////////////////

//float4 gAmbient;

//float4 gLightAmbient;

//float4 gMaterialAmbient;

float4x4 gInverseViewProjection;

float4 gLightDiffuse;

float4 gMaterialDiffuse;

float3 gLightDirection;

float3 gCameraPosition;

float gSpecularIntensity;

float gSpecularPower;

sampler2D gColorMap;

sampler2D gNormalMap;

sampler2D gDepthMap;

///////////////////////////////////////////

vsOutput vsDeferredShaderDirectionalLighting( vsInput IN )

{

vsOutput OUT = (vsOutput)0;

OUT.position = float4( IN.position, 1.f );

OUT.texcoord = IN.texcoord;// - float2( .5/800, .5/600 );

return OUT;

}

///////////////////////////////////////////

float4 psDeferredShaderDirectionalLighting( vsOutput IN ) : COLOR

{

float4 pixel = tex2D( gColorMap, IN.texcoord );

if( (pixel.x+pixel.y+pixel.z) <=0 ) return pixel;

float3 surfaceNormal = (tex2D( gNormalMap, IN.texcoord )-.5f)*2.f;

float4 worldPos = 0;

worldPos.x = IN.texcoord.x * 2.f - 1.f;

worldPos.y = -( IN.texcoord.y * 2.f - 1.f );

worldPos.z = tex2D( gDepthMap, IN.texcoord ).r;

worldPos.w = 1.f;

worldPos = mul( worldPos, gInverseViewProjection );

worldPos /= worldPos.w;

//if( surfaceNormal.r + surfaceNormal.g + surfaceNormal.b <= 0 )

// return 0 ;

float lightIntensity = saturate( dot( surfaceNormal, -normalize(gLightDirection) ) );

float specularIntensity = saturate( dot( surfaceNormal, normalize(gLightDirection)+(gCameraPosition-worldPos)));

float specularFinal = pow( specularIntensity, gSpecularPower ) * gSpecularIntensity;

//float4 ambient = ((gAmbient+gLightAmbient)*gMaterialAmbient);

return float4( ( gMaterialDiffuse * gLightDiffuse * lightIntensity).rgb, specularFinal);

};[lighting shader]

Thanks in advance