I want to start implementing a game engine, but I don't have a clear understanding of how it should be designed. I've read a lot on the topic, and it seems the best approach is to create standalone, generic components that are then combined to build various objects. Would it look something like this?

```cpp
#include <d3d11.h>

class CMaterial
{
    // texture, diffuse color...
};

class CMesh
{
    CMaterial mMaterial;
    ID3D11Buffer* mVertexBuffer = nullptr;
    ID3D11Buffer* mIndexBuffer = nullptr;
    // each mesh has exactly 1 material when using assimp (I think)
};
```

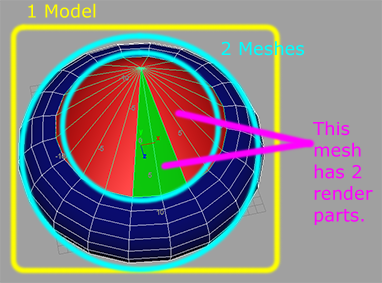

```cpp
#include <vector>

class CModel
{
    ID3D11Buffer* mConstantBuffer = nullptr; // constant buffer per model
    std::vector<CMesh> mMeshes;
};
```

Then one would compose these into a generic class for a humanoid:

```cpp
class CHumanoid
{
    CModel mModel;
    CPhysics mPhysics;
    CInputHandler mInputHandler;
};
```

The class would also include draw, create and destroy functions.
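Rather than separate create/destroy functions, one alternative I've seen recommended is RAII: acquire resources in the constructor and release them in the destructor, so an object can't be drawn before it exists or leak after it's gone. A minimal sketch, where `GpuBuffer` and `Humanoid` are hypothetical names and `GpuBuffer` is a stand-in for a real resource such as an `ID3D11Buffer`, using a live-object counter instead of actual D3D11 calls (the `inline` static member needs C++17):

```cpp
#include <cassert>

// Hypothetical stand-in for a GPU resource; the static counter
// makes it visible whether every "created" buffer was "destroyed".
class GpuBuffer
{
public:
    GpuBuffer() { ++sLiveCount; }   // stands in for "create"
    ~GpuBuffer()                    // stands in for "destroy"
    {
        assert(sLiveCount > 0);
        --sLiveCount;
    }
    GpuBuffer(const GpuBuffer&) = delete;
    GpuBuffer& operator=(const GpuBuffer&) = delete;

    static int LiveCount() { return sLiveCount; }

private:
    static inline int sLiveCount = 0;
};

class Humanoid
{
public:
    // Would issue draw calls using mVertexBuffer; here it only counts them.
    void Draw() { ++mDrawCalls; }
    int DrawCalls() const { return mDrawCalls; }

private:
    GpuBuffer mVertexBuffer;   // created with the humanoid, destroyed with it
    int mDrawCalls = 0;
};
```

With real D3D11 objects the same idea is usually expressed with `Microsoft::WRL::ComPtr`, which calls `Release()` automatically when it goes out of scope.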

The problem is that I'm not sure how to implement the individual components that would make up a complete object such as a human: it needs to be rendered, physics needs to affect it, and it needs to be able to move, wield weapons, and so on.
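One approach I've seen is to make the object nothing more than a container of components that can look each other up by type, so physics can move the object's transform without the humanoid class knowing about either. A minimal sketch, where `GameObject`, `Component`, `Transform`, `Physics` and `Movement` are hypothetical names, "physics" is only constant-velocity motion, and `moveRight` stands in for whatever the input handler would provide:

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

class GameObject;

// Every component gets a per-frame hook and access to its owner.
class Component
{
public:
    virtual ~Component() = default;
    virtual void Update(GameObject& owner, float dt) = 0;
};

// Owns components and lets them find each other by type.
class GameObject
{
public:
    template <typename T>
    T& Add()
    {
        auto component = std::make_unique<T>();
        T& ref = *component;
        mComponents.push_back(std::move(component));
        return ref;
    }

    // Linear search with dynamic_cast: simple, not fast.
    template <typename T>
    T* Get()
    {
        for (auto& component : mComponents)
            if (auto* typed = dynamic_cast<T*>(component.get()))
                return typed;
        return nullptr;
    }

    // Components update in the order they were added.
    void Update(float dt)
    {
        for (auto& component : mComponents)
            component->Update(*this, dt);
    }

private:
    std::vector<std::unique_ptr<Component>> mComponents;
};

struct Transform : Component
{
    float x = 0.0f;
    float y = 0.0f;
    void Update(GameObject&, float) override {}
};

// Hypothetical physics: moves the owner's Transform by its velocity.
struct Physics : Component
{
    float vx = 0.0f;
    float vy = 0.0f;
    void Update(GameObject& owner, float dt) override
    {
        Transform* t = owner.Get<Transform>();
        assert(t != nullptr && "Physics needs a Transform on the same object");
        t->x += vx * dt;
        t->y += vy * dt;
    }
};

// Turns (hypothetical) input into a velocity on the Physics component.
struct Movement : Component
{
    bool moveRight = false;
    float speed = 2.0f;
    void Update(GameObject& owner, float) override
    {
        if (Physics* p = owner.Get<Physics>())
            p->vx = moveRight ? speed : 0.0f;
    }
};
```

Because components update in insertion order, adding `Movement` before `Physics` means a velocity change takes effect in the same frame. A weapon or health component would plug in the same way, without `GameObject` having to change.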

The humanoid would then need a rendering class that uses DirectX 11 to draw it. Should I make this a static/global class, or something else?
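One alternative to a global that I've seen suggested is to create a single renderer object at startup and pass it by reference to whatever needs to draw (dependency injection). A rough sketch, where `IRenderer`, `DrawItem`, `RecordingRenderer` and `RenderComponent` are all hypothetical names; a real DX11 renderer would implement `IRenderer` on top of the D3D11 device and context, which is left out here:

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical description of one thing to draw; a real engine would
// carry mesh/material handles rather than a name.
struct DrawItem
{
    std::string meshName;
};

// Abstract renderer. A D3D11 implementation would own the device and
// immediate context and turn submitted items into draw calls.
class IRenderer
{
public:
    virtual ~IRenderer() = default;
    virtual void Submit(const DrawItem& item) = 0;
};

// Debug/test renderer that only records what was submitted.
class RecordingRenderer : public IRenderer
{
public:
    void Submit(const DrawItem& item) override { submitted.push_back(item); }
    std::vector<DrawItem> submitted;
};

// Knows what to draw, but not which renderer it draws with:
// the renderer is handed in by whoever drives the frame.
class RenderComponent
{
public:
    explicit RenderComponent(std::string meshName) : mMeshName(std::move(meshName)) {}

    void Draw(IRenderer& renderer) const
    {
        assert(!mMeshName.empty());
        renderer.Submit(DrawItem{mMeshName});
    }

private:
    std::string mMeshName;
};
```

Only the code that sets up the frame (for example the main loop) needs to know the concrete renderer, so swapping in the recording renderer for tests does not touch `RenderComponent`.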

I'm quite lost as to how one should build a game engine from generic components that manage themselves and are as decoupled as possible.
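For the decoupling part, one technique I've come across is to let components communicate through events instead of holding pointers to each other. A minimal sketch of a synchronous event bus, where `EventBus` and `CollisionEvent` are hypothetical names; a real engine might queue events and dispatch them at a fixed point in the frame instead:

```cpp
#include <cassert>
#include <functional>
#include <typeindex>
#include <typeinfo>
#include <unordered_map>
#include <vector>

// Publishers and subscribers only share the bus and the event struct,
// never each other's classes.
class EventBus
{
public:
    template <typename Event>
    void Subscribe(std::function<void(const Event&)> handler)
    {
        assert(handler);
        // Store a type-erased wrapper keyed by the event type.
        mHandlers[std::type_index(typeid(Event))].push_back(
            [handler](const void* e) { handler(*static_cast<const Event*>(e)); });
    }

    template <typename Event>
    void Publish(const Event& event) const
    {
        auto it = mHandlers.find(std::type_index(typeid(Event)));
        if (it == mHandlers.end())
            return;   // nobody listens to this event type
        for (const auto& handler : it->second)
            handler(&event);
    }

private:
    std::unordered_map<std::type_index, std::vector<std::function<void(const void*)>>> mHandlers;
};

// Hypothetical event that a physics system could publish.
struct CollisionEvent
{
    int entityA;
    int entityB;
};
```

Physics code would call `bus.Publish(CollisionEvent{a, b})`, and, say, a health or sound component would subscribe with `bus.Subscribe<CollisionEvent>(...)`, without either side knowing the other exists.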