Hi,

After some refactoring and adding new features in my engine, I've been doing some profiling.

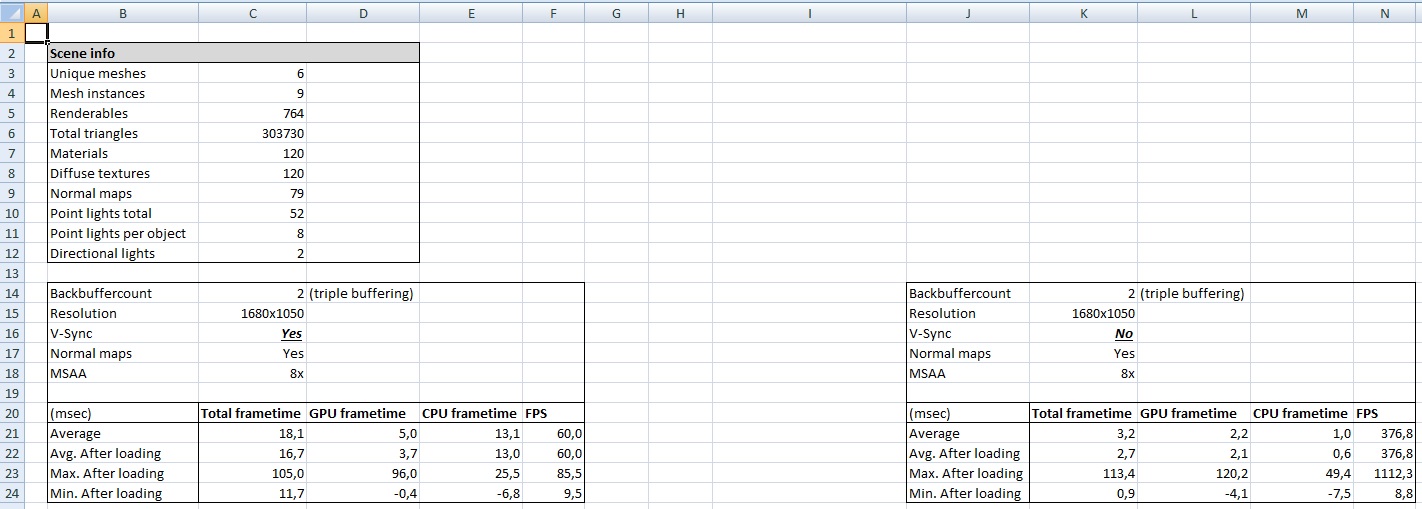

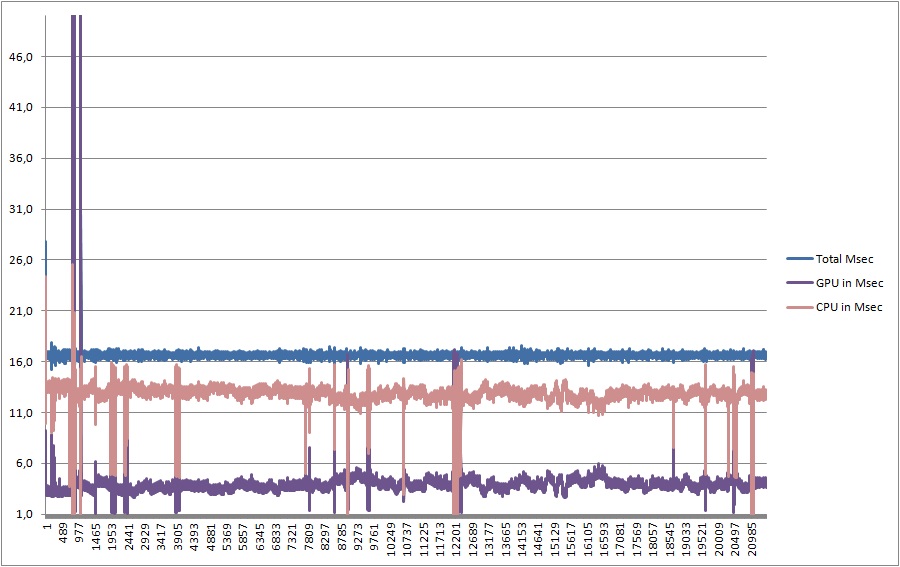

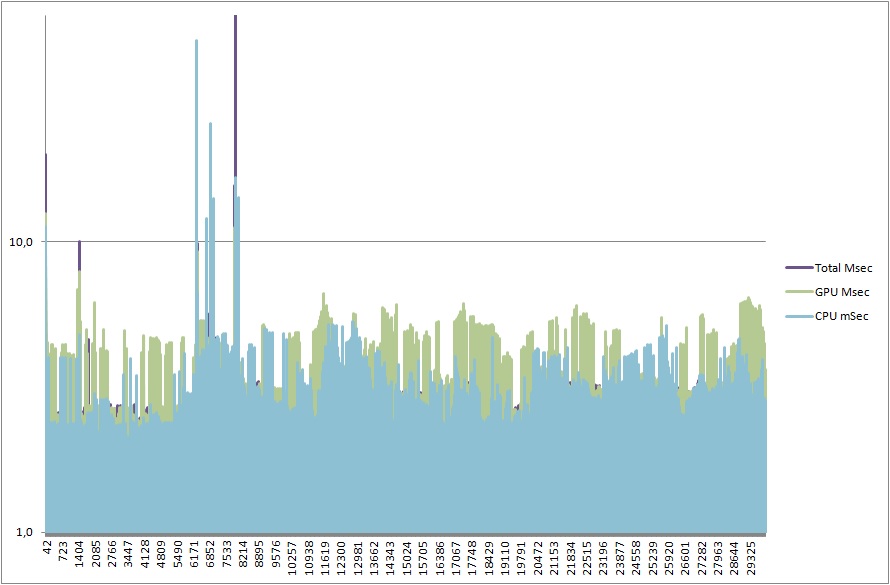

I did it on both a simple scene and a more complex scene.

You can see all results here:

http://www.sierracosworth.nl/gamedev/2014-07-29_profiling/

It's actually quite fun to check your code against one full frame and all the 'underlying' D3D calls that are made.

But now you're probably thinking: what's the question?

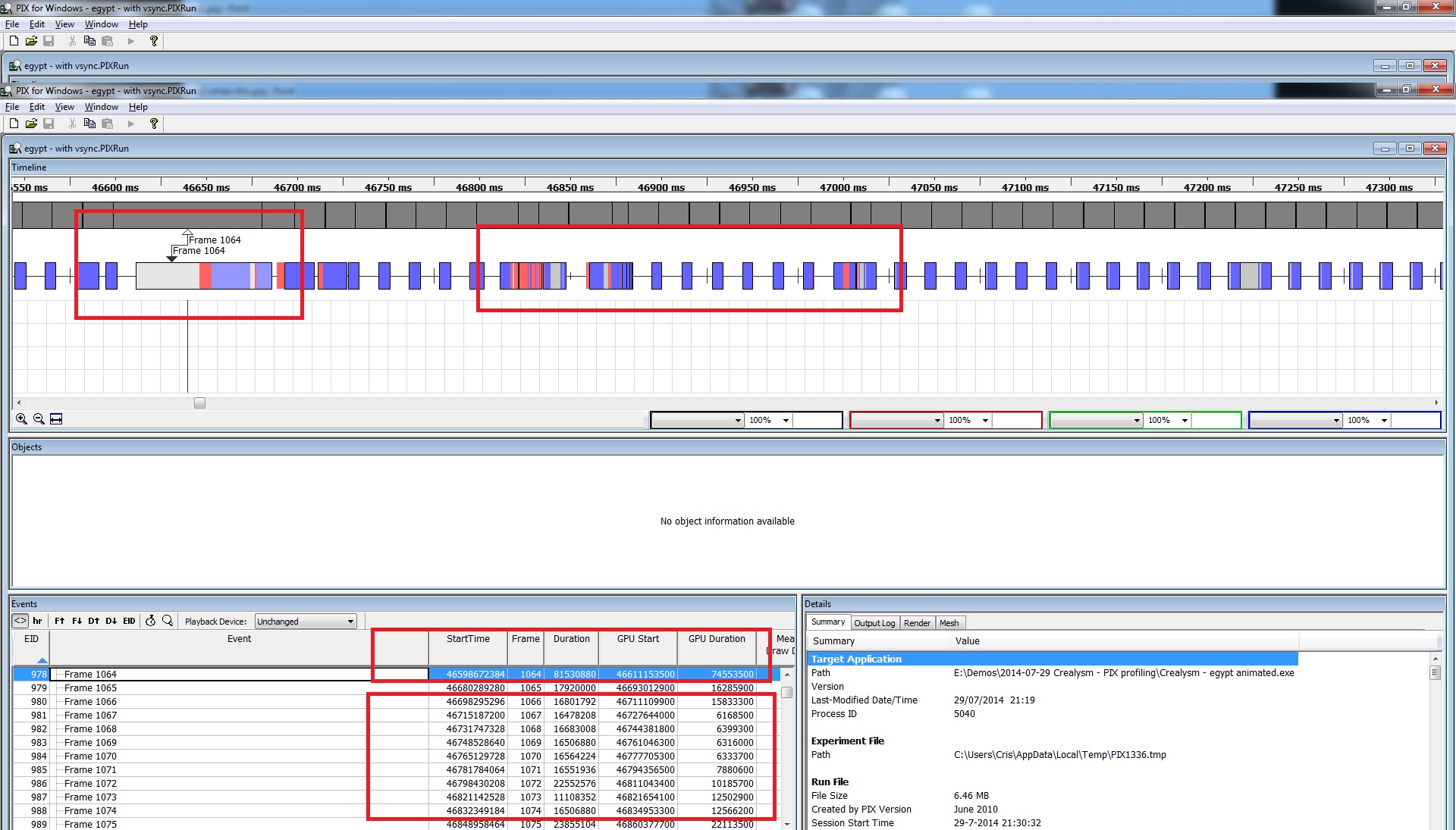

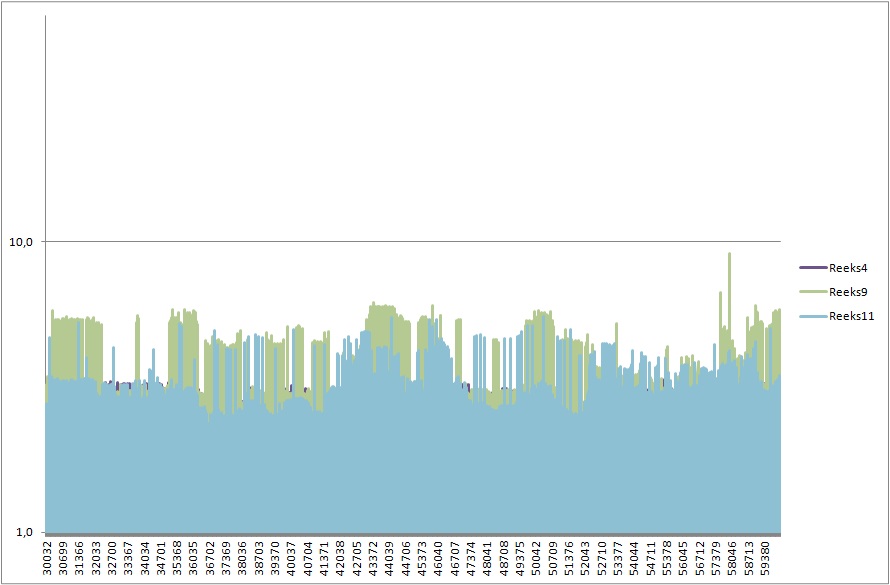

In the screenshots above you'll see an example of the 'peaks' (stutter) I'm getting in total frame time. Now I'm trying to find out whether they are caused by too much work for the GPU (the CPU is waiting), or whether the GPU is waiting because the CPU isn't delivering work fast enough. My first thought is that the GPU is too busy to handle everything the CPU delivers.
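One way to tell the two cases apart is to compare, per frame, the CPU time spent building and submitting the frame with the GPU time for that same frame (for example, measured with GPU timestamp queries). The following is only a minimal sketch of that comparison: `FrameTimings`, `Bottleneck`, `classify`, and the 0.5 ms tolerance are hypothetical names and values chosen for illustration, not part of the engine or of Direct3D, and the measurements are assumed to be taken with vsync disabled so that waiting on the display does not hide the real bottleneck.

```cpp
#include <cassert>

// Hypothetical per-frame measurements, both in milliseconds.
struct FrameTimings {
    double cpuMs;  // CPU time to build and submit the frame, excluding time blocked in Present
    double gpuMs;  // GPU time for the same frame, e.g. from GPU timestamp queries
};

enum class Bottleneck { Cpu, Gpu, Unclear };

// Classify a single frame. toleranceMs is an assumed noise margin:
// if the two times are closer than this, the frame is reported as Unclear.
Bottleneck classify(const FrameTimings& t, double toleranceMs = 0.5) {
    assert(t.cpuMs >= 0.0 && t.gpuMs >= 0.0 && toleranceMs >= 0.0);
    if (t.gpuMs > t.cpuMs + toleranceMs) {
        return Bottleneck::Gpu;  // GPU takes longer: the CPU ends up waiting on it
    }
    if (t.cpuMs > t.gpuMs + toleranceMs) {
        return Bottleneck::Cpu;  // CPU takes longer: the GPU idles waiting for work
    }
    return Bottleneck::Unclear;  // too close to call from these two numbers alone
}
```

Running this only on the frames where the peaks occur, rather than on an average over many frames, would show whether the stutter frames are GPU-heavy or CPU-heavy. A peak where neither time is unusually high would point at something else, such as a driver stall or resource creation during the frame.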

What are your thoughts?