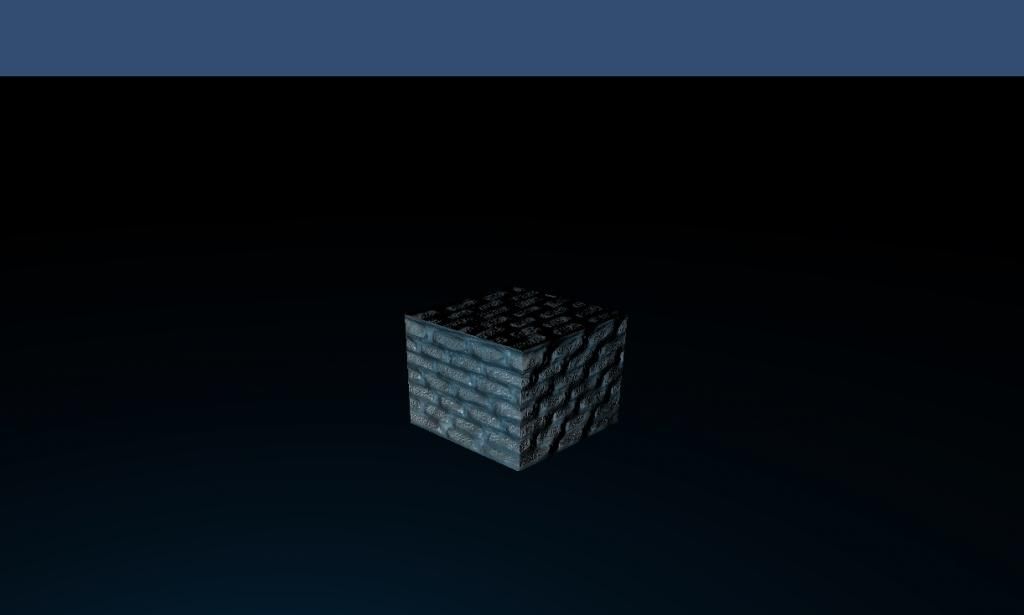

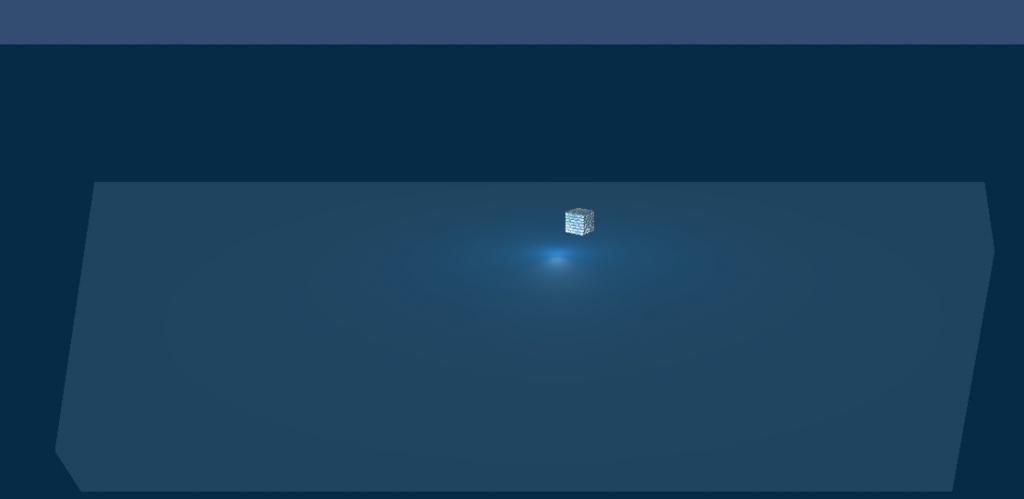

The remaining hurdle involves the negative normals of my cube being lit when the light is hitting the back of the surface (as if the normal is the inverse of the way I would like it to be).

Remember that this is, to some degree, expected behavior. Imagine a surface like this:

                        x <-- Light source

    ---------                       -------------
             \                     /
              \                   /
               \                 /
                \   back side   /
                 ---------------
                   front side

I have tried many permutations of NBT on the cube face I have left empty, but even with no NBT data the flat lighting still behaves as though the face was facing the other way. I am led to believe it has to be a bug in my lighting.

It looks correct in the images. Lighting is the flat grey of the ambient light, with no influence from the light sources. What behavior are you expecting?

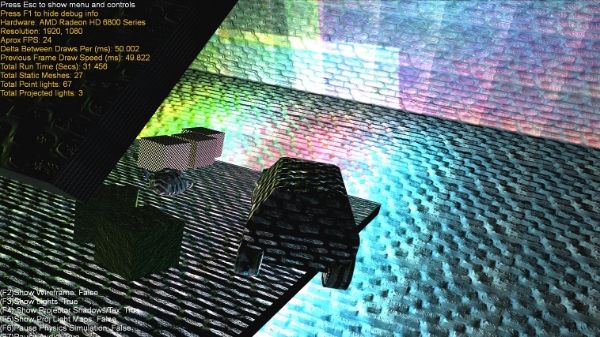

I am not sure if my home brew Cube is at fault or my shader code:

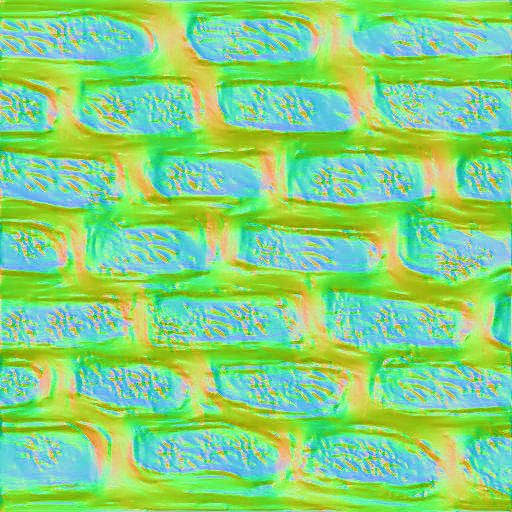

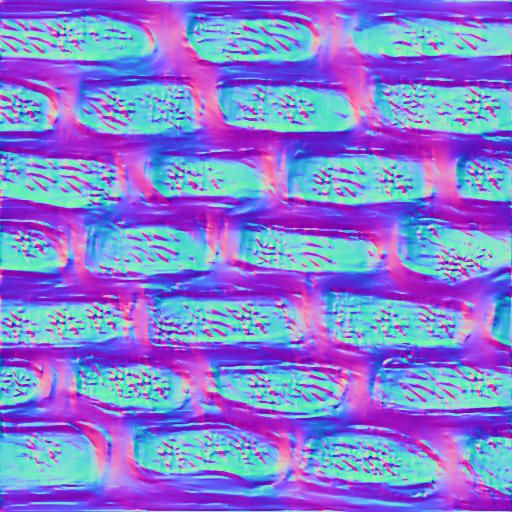

The shader code looks OK, but the tangents of the cube look wrong. Actually, it kind of depends on your normal map, which you didn't post here.

A tangent space normal map encodes the normals relative to ... well, the tangent space. This is a fancy way of saying that the red component encodes how much the normal is bent towards the right in the texture, which is the direction of increasing X coordinates in the texture, or the direction of increasing u coordinates on the UV-mapped model. Similarly, the green component encodes how much the normal is bent towards the direction of increasing Y in the texture, or the direction of increasing v on the UV-mapped model. So the tangent associated with the red component (in your shader, the "tangent") should point in the object space direction in which the u part of the UV coordinates increases. The tangent associated with the green component (in your shader, the "binormal") should point in the object space direction in which the v part of the UV coordinates increases. I hand-checked a couple of your cube triangles, and sometimes the tangents were negated.
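To make the "direction of increasing u / increasing v" rule concrete, here is a minimal Python sketch that derives both vectors for a single triangle from its positions and UVs. The function name `triangle_tangent_basis` and the sample coordinates are made up for illustration; they are not taken from your cube code, and whether "increasing v" points up or down on the model depends on your texture coordinate convention.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def normalize(a):
    length = math.sqrt(sum(x * x for x in a))
    return tuple(x / length for x in a)

def triangle_tangent_basis(p0, p1, p2, uv0, uv1, uv2):
    """Return (tangent, bitangent) in object space for one triangle.

    Solves  e1 = du1*T + dv1*B  and  e2 = du2*T + dv2*B  for T and B,
    so T points where u increases and B points where v increases.
    """
    e1, e2 = sub(p1, p0), sub(p2, p0)
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    det = du1 * dv2 - du2 * dv1
    if det == 0.0:
        raise ValueError("degenerate UV mapping: the triangle has no area in UV space")
    r = 1.0 / det
    tangent = scale(sub(scale(e1, dv2), scale(e2, dv1)), r)
    bitangent = scale(sub(scale(e2, du1), scale(e1, du2)), r)
    return normalize(tangent), normalize(bitangent)

# Hypothetical triangle on the +Z face of a cube, with u running along +X
# and v running along +Y.
tangent, bitangent = triangle_tangent_basis(
    (-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (-1.0, 1.0, 1.0),
    (0.0, 0.0), (1.0, 0.0), (0.0, 1.0),
)
print(tangent, bitangent)
```

For this sample the tangent comes out along +X and the bitangent along +Y. If you mirror the u coordinates of the same triangle, the tangent flips to -X, which is the kind of negation a hand-built cube can easily get wrong.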

Now, if you like brain teasers, you can obviously change the encoding of your normal maps, for example by inverting the red or green channels, in which case your tangents would have to be inverted too. But IMO this only makes it more complicated to reason about, and you should keep it as simple as possible.
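As a small illustration of why the two inversions cancel out, the following Python sketch decodes one texel, then decodes the same texel with its green channel inverted and the green-channel tangent (the "binormal") negated. The texel value and the axis-aligned basis are arbitrary assumptions chosen for the example, and the helper names are hypothetical.

```python
def decode(rgb):
    """Map colour channels in [0, 1] to tangent-space components in [-1, 1]."""
    return tuple(2.0 * c - 1.0 for c in rgb)

def to_object_space(n_ts, tangent, bitangent, normal):
    """Combine the tangent-space components with the per-vertex basis vectors."""
    x, y, z = n_ts
    return tuple(x * t + y * b + z * n
                 for t, b, n in zip(tangent, bitangent, normal))

# Assumed basis for a face looking down +Z.
T, B, N = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

texel = (0.5, 0.8, 0.9)                        # hypothetical normal-map texel
flipped = (texel[0], 1.0 - texel[1], texel[2])  # green channel inverted
negated_B = tuple(-c for c in B)                # matching inverted binormal

original = to_object_space(decode(texel), T, B, N)
re_encoded = to_object_space(decode(flipped), T, negated_B, N)
print(original, re_encoded)
```

Both results describe the same object space normal (up to floating-point rounding), so the lighting is unchanged. Inverting only one of the two, the channel or the vector, tilts the normal the wrong way along that axis.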

If you are having trouble computing consistent normals and tangents by hand, maybe you should write some code for that. You will need it eventually, when you load models from files.
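One possible shape for such code, sketched in Python under a few assumptions: the mesh is an indexed triangle list with per-vertex positions, normals and UVs, tangents are averaged over the triangles that share a vertex, and the binormal is later rebuilt in the shader as `cross(normal, tangent) * handedness`. The function name `compute_vertex_tangents` and that handedness convention are choices made for this example, not something taken from your code.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    length = math.sqrt(dot(a, a))
    return a if length == 0.0 else scale(a, 1.0 / length)

def compute_vertex_tangents(positions, normals, uvs, triangles):
    """Return one (tangent, handedness) pair per vertex."""
    zero = (0.0, 0.0, 0.0)
    tan_acc = [zero] * len(positions)
    bit_acc = [zero] * len(positions)
    for i0, i1, i2 in triangles:
        e1 = sub(positions[i1], positions[i0])
        e2 = sub(positions[i2], positions[i0])
        du1, dv1 = uvs[i1][0] - uvs[i0][0], uvs[i1][1] - uvs[i0][1]
        du2, dv2 = uvs[i2][0] - uvs[i0][0], uvs[i2][1] - uvs[i0][1]
        det = du1 * dv2 - du2 * dv1
        if abs(det) < 1e-12:
            continue  # skip triangles with a degenerate UV mapping
        r = 1.0 / det
        t = scale(sub(scale(e1, dv2), scale(e2, dv1)), r)  # increasing u
        b = scale(sub(scale(e2, du1), scale(e1, du2)), r)  # increasing v
        for i in (i0, i1, i2):
            tan_acc[i] = add(tan_acc[i], t)
            bit_acc[i] = add(bit_acc[i], b)
    result = []
    for n, t, b in zip(normals, tan_acc, bit_acc):
        # Gram-Schmidt: remove the part of the tangent that lies along the normal.
        t_ortho = normalize(sub(t, scale(n, dot(n, t))))
        # +1 if cross(n, t) already points along increasing v, otherwise -1.
        handedness = 1.0 if dot(cross(n, t_ortho), b) >= 0.0 else -1.0
        result.append((t_ortho, handedness))
    return result

# Hypothetical +Z face of a cube as two triangles.
positions = [(-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (1.0, 1.0, 1.0), (-1.0, 1.0, 1.0)]
normals = [(0.0, 0.0, 1.0)] * 4
uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
triangles = [(0, 1, 2), (0, 2, 3)]
for tangent, handedness in compute_vertex_tangents(positions, normals, uvs, triangles):
    print(tangent, handedness)
```

Running the same routine over every face of the cube gives tangents that are consistent with the UVs by construction, which also makes it a handy cross-check for hand-authored values. Vertices that are only used by degenerate triangles end up with a zero tangent in this sketch and would need separate handling.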