Note: I changed the title of this topic because we were able to narrow down the potential cause (the issue is still not fixed, though).

(Previously it was called "Texture judder on AMD Cards")

A little backstory before I start:

Until now I was using a very old PC (by today's standards) to work on my project.

It had an Nvidia GeForce 9600 GT (512 MB GDDR3) in it.

Now I have built a new PC with an AMD GPU (Radeon RX 480), and this is where the problem starts.

I'm seeing a very weird texture judder on my new rig that wasn't really present on my old GeForce 9600 GT (nor on my laptop with Intel HD 4000 graphics).

Note: I'm using DX9 (I'm limited to it because that's what the engine uses).

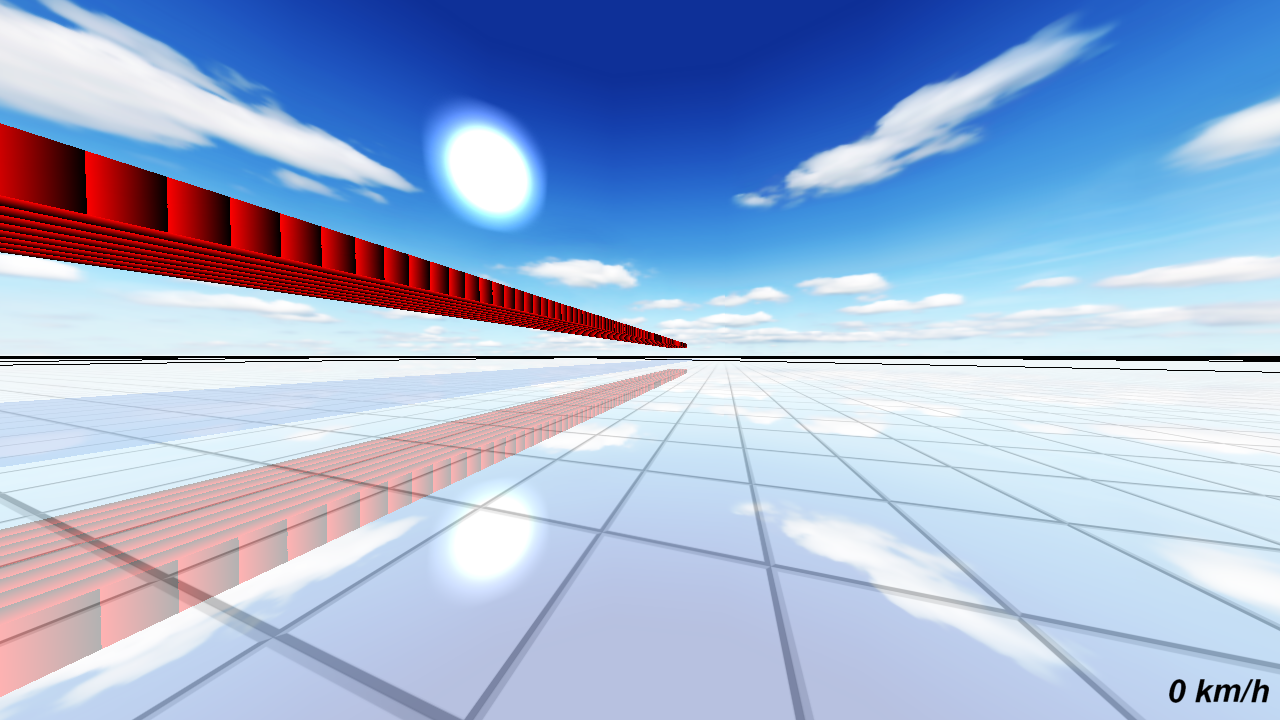

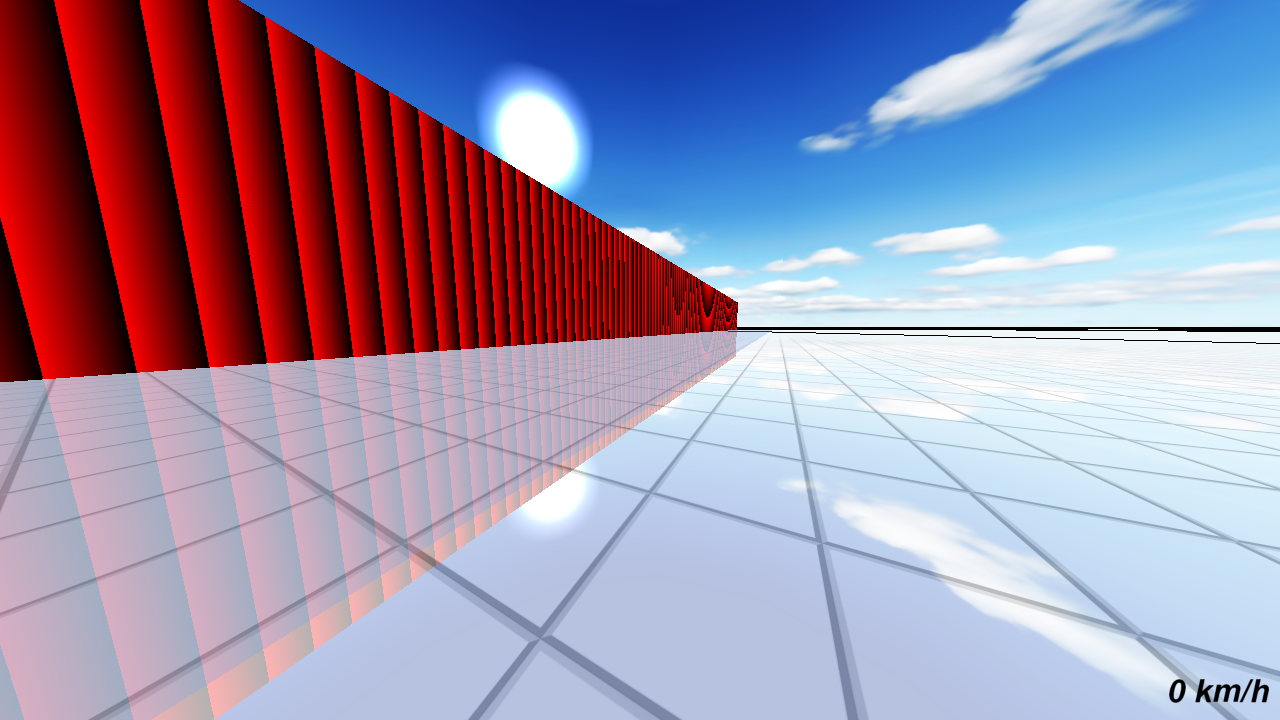

I made a short video showing the issue (play it in fullscreen to see it properly):

[media]https:

Note that the white wall is literally a single cube (no subdivisions), and each "tile" is one repetition of the texture (the wall uses texture repeat).

The floor, on the other hand, is subdivided.

I know that issues like this can occur if you use very large UV coordinates (lots of repeats) or if the vertices of a polygon are very far apart (floating-point precision issues). This can also happen on my old GeForce, but I really had to push it to get this effect (scaling a polygon by nearly a million and using A LOT of repeats).
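To put rough numbers on the precision part, here is a small Python sketch (my own illustration, not engine code) that computes the gap between adjacent 32-bit floats at a given UV value and expresses it in texels. `TEXTURE_SIZE = 1024` is an assumed texture width, and `to_f32`/`float32_ulp` are hypothetical helper names. It only models float32 storage, not how any particular GPU interpolates.

```python
import math
import struct

def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def float32_ulp(x: float) -> float:
    """Spacing between adjacent float32 values near |x| (normal range only)."""
    if x == 0.0:
        return math.ldexp(1.0, -149)  # smallest float32 subnormal
    _, e = math.frexp(abs(x))         # |x| = m * 2**e with 0.5 <= m < 1
    return math.ldexp(1.0, e - 24)    # float32 has 24 significant bits

TEXTURE_SIZE = 1024  # hypothetical texture width in texels

for uv in (1.0, 200.0, 1000.0, 100000.0):
    step = float32_ulp(uv)
    # How far (in texels) the smallest representable UV change moves the sample point.
    print(f"UV {uv:>9}: float32 step {step:.3e} = {step * TEXTURE_SIZE:.4f} texels")
```

For a 1024-texel texture the step is 1/16 of a texel at a UV of 1000 and a full 8 texels at 100000, which is consistent with textures starting to jump at huge repeat counts.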

Other than that, my GeForce didn't have this issue at all.

On my AMD rig, this issue crops up everywhere. I can notice it even with smaller polygons and fewer repeats (100 to 200 repeats on a smaller cube are enough to produce minor but noticeable judder).

I tested it on two other rigs (one also with an RX 480, the other with a GPU from the Radeon 7xxx series), and both showed exactly the same issue (both are AMD cards).

What's weird is that the judder disappears completely when I look at the surface head-on. (Maybe AMD has issues with texture filtering? I'm using mipmapping with 16x anisotropic filtering.)

I tried subdividing the geometry further, but that only reduces the judder (it's still noticeable, not eliminated). It seems I'd have to create a very high-poly mesh to get somewhat "acceptable" results (I literally subdivided the floor like a madman and it was still noticeable).

Is this a known GPU or driver problem? (Are there any known AMD issues in this regard?)

Would appreciate any kind of help. :)

/Edit:

I just noticed that this only really happens (at least on the floor) when the floor mesh and the camera are placed away from the origin (0/0/0).

Still, the old GeForce has nowhere near the same issues as the AMD card. (On the AMD card the precision problems start to appear waaayyyyyy sooner than on the Nvidia GeForce.)
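A common general-purpose mitigation for precision loss far from the origin is camera-relative rendering: keep positions in double precision on the CPU and subtract the camera position before converting to float32 for the GPU. The Python sketch below compares the error of both approaches for a single coordinate. The positions are hypothetical and `to_f32` is a made-up helper that rounds through `struct`; it illustrates the general idea and is not something tested in this engine.

```python
import struct

def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Hypothetical values: a vertex and the camera both ~100 km from the origin.
vertex_x = 100000.123  # world-space X, kept in double on the CPU
camera_x = 100000.0    # camera world-space X

# Approach A: upload world-space positions as float32, subtract on the GPU.
world_f32 = to_f32(vertex_x) - to_f32(camera_x)

# Approach B (camera-relative): subtract in double precision first, then convert.
relative_f32 = to_f32(vertex_x - camera_x)

exact = vertex_x - camera_x
err_world = abs(world_f32 - exact)
err_relative = abs(relative_f32 - exact)
print(f"world-space error:     {err_world:.2e}")
print(f"camera-relative error: {err_relative:.2e}")
```

Near 100000 the float32 spacing is 2^-7 (about 0.0078), so the world-space value is already off by about 0.002 before any interpolation happens, while the camera-relative value is accurate to within a few billionths.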

/Edit2:

I did a bit of digging and debugging. It seems that the UV coordinates already have wrong/imprecise values when they arrive in the pixel shader stage, before they are fed into the texture function. So the texture sampling itself is not the cause; it's the attribute interpolation between the 3 vertices of the polygon that produces these values.

No idea how to fix this, though.
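One commonly suggested workaround for large interpolated UVs (a sketch assuming the texture uses wrap/repeat addressing, not tested in the engine) is to shift each polygon's UVs by whole numbers so they stay close to zero. Because the texture repeats every 1.0, an integer shift doesn't change what gets sampled, but float32 has much more precision near zero. In the sketch, `rebase_uvs`, `interpolate_u_f32`, the triangle and the barycentric weights are all hypothetical. Rounding every multiply/add to float32 on the CPU is only a rough model of the rasterizer, not how the hardware actually interpolates.

```python
import math
import struct

def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def rebase_uvs(uvs):
    """Shift a polygon's UVs by whole numbers so the smallest u and v land in [0, 1).

    With wrap/repeat addressing this leaves the sampled texels unchanged.
    """
    du = math.floor(min(u for u, _ in uvs))
    dv = math.floor(min(v for _, v in uvs))
    return [(u - du, v - dv) for u, v in uvs]

def interpolate_u_f32(uvs, weights):
    """Barycentric interpolation of u, rounding every step to float32 (rough model)."""
    acc = 0.0
    for (u, _), w in zip(uvs, weights):
        acc = to_f32(acc + to_f32(to_f32(u) * to_f32(w)))
    return acc

# Hypothetical triangle far out in UV space, and weights for one pixel.
tri = [(100000.25, 5.0), (100001.75, 5.0), (100000.25, 6.5)]
weights = (0.3, 0.3, 0.4)  # exact u at this pixel has fractional part 0.7

u_orig = interpolate_u_f32(tri, weights)
u_rebased = interpolate_u_f32(rebase_uvs(tri), weights)

# Only the fractional part matters for a repeating texture.
frac_orig = u_orig - math.floor(u_orig)
frac_rebased = u_rebased - math.floor(u_rebased)
print(f"original: fraction {frac_orig:.7f}, error {abs(frac_orig - 0.7):.2e}")
print(f"rebased:  fraction {frac_rebased:.7f}, error {abs(frac_rebased - 0.7):.2e}")
```

This only helps when each polygon spans a small UV range, like the subdivided floor. A single polygon with hundreds of repeats across it, like the one-cube wall, still carries large values inside the triangle after rebasing.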