Oh no, no no no, don't you worry, I am NOT simulating a full universe!!!!

Yes, I didn't think you were planning that from your original post, but the way the thread was going, things seemed to be pointing in that direction, and I thought I should point out right away that it's a very bad idea, unless you want to train to be an astrophysicist yourself :-)

At the moment, I am trying to put the first tiny pieces into action, so to speak, and I just want to avoid wasting time on a lot of overly complicated math if there are one or more shortcuts that can be taken. I just got basic gravity (Newton's law of universal gravitation) working, for example. I know the games / simulations you list, and I doubt that the parts of mine that simulate the cosmos will be even that advanced! All I want is something that can claim to be based in science, not something that is scientifically accurate. I know (some of) my limitations.
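For reference, a minimal sketch of what direct-sum Newtonian gravity typically looks like, assuming toy units with `G = 1` (the function name `direct_sum_accels` and the `softening` parameter are illustrative, not taken from anyone's actual code). Every body pulls on every other body, so each step needs N(N-1)/2 pair evaluations, which is the cost problem that moving test particles through a fixed potential avoids.

```python
import math

# Toy code units (an assumption for this sketch): G = 1.
G = 1.0


def direct_sum_accels(positions, masses, softening=0.0):
    """Newtonian acceleration on every body from every other body.

    Visits each pair once and applies equal and opposite pulls, so the work
    per time step is N*(N-1)/2 pair evaluations. Returns (accelerations, pairs).
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = dx[0] ** 2 + dx[1] ** 2 + dx[2] ** 2 + softening**2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * masses[j] * dx[k] * inv_r3  # i is pulled towards j
                acc[j][k] -= G * masses[i] * dx[k] * inv_r3  # j is pulled towards i
            pairs += 1
    return acc, pairs


# Example: 100 bodies on a line already need 4950 pair evaluations per step.
bodies = [(float(i), 0.0, 0.0) for i in range(100)]
_, n_pairs = direct_sum_accels(bodies, [1.0] * 100)
print(n_pairs)  # 4950
```

At 10,000 bodies the same loop would need roughly 50 million pair evaluations per step.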

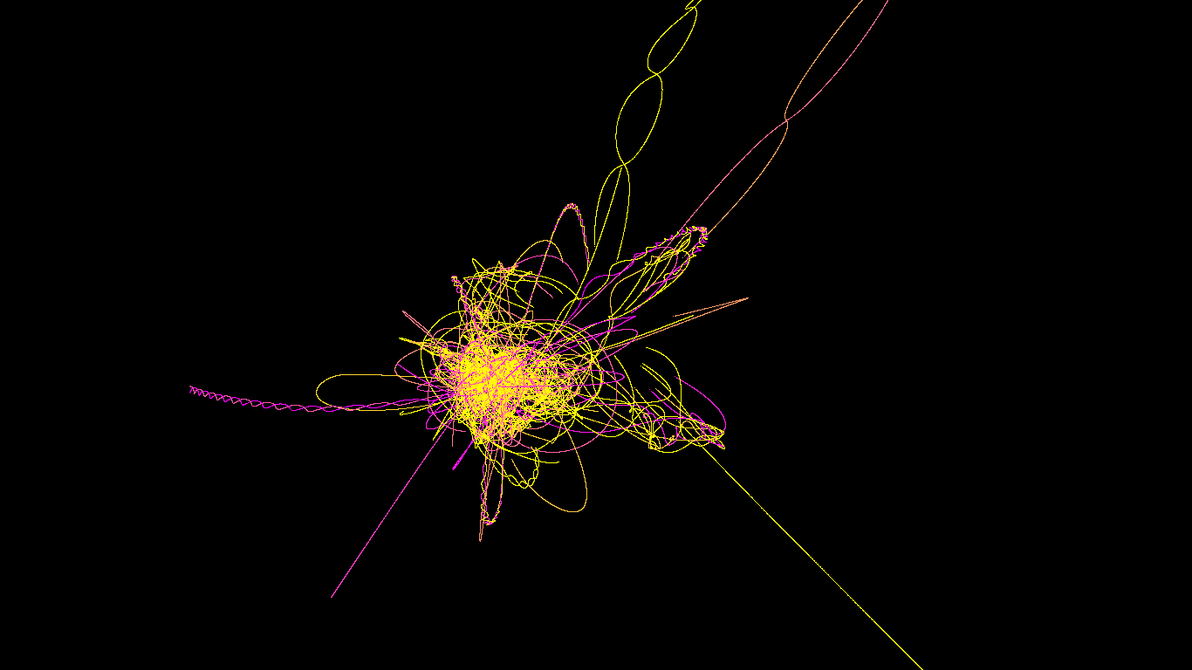

Well, the Universe is a pretty big topic to discuss, so where to begin? :-) Newton's law of gravity is certainly useful for very basic motions, such as how planets (or moons, or rocket ships) orbit around stars (or other planets), but it becomes too expensive if you try to model larger systems with more than a dozen (or perhaps a couple of hundred) objects, because every object pulls on every other object. What you should be looking at is developing (or reading about) simple models that use simple mathematical equations, or simple combinations of them, to represent the gravitational potential field. Your physical objects (e.g. galaxies, stars, etc.) then move as test particles (https://en.wikipedia.org/wiki/Test_particle) in these potential fields, rather than you computing the full, insanely expensive, gravitational interaction between every pair of objects. Let's take a basic galaxy as an example. It contains:

- A spherically symmetric halo of dark matter. It doesn't do anything very interesting, but it holds the galaxy together gravitationally and can be approximated by a simple equation (e.g. an NFW profile, https://en.wikipedia.org/wiki/Navarro%E2%80%93Frenk%E2%80%93White_profile)

- A halo of old stars, many contained in very old massive clusters called globular clusters. The globular clusters can be very easily modelled with something called a Plummer potential profile (https://en.wikipedia.org/wiki/Plummer_model)

- The gas in the galaxy (often called the interstellar medium, or ISM) lies in the disc of the galaxy but is often concentrated in spiral arms, as in the classic picture of galaxies (there's no simple article for this, sorry, but maybe this helps: https://en.wikipedia.org/wiki/Gravitationally_aligned_orbits)

- The gas is compressed in these arms, leading to new stars forming from the gas. The gas density and the rate at which new stars form are linked by several empirical relations, such as the Kennicutt-Schmidt relation, which allow you to approximately model how this happens (sorry again, no simple article on this). These stars then spread into the disc of the galaxy and move around in (approximately) circular orbits within a spiral potential.
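A minimal sketch of the test-particle idea, assuming toy code units with `G = 1` and combining just two of the components listed above: an NFW dark-matter halo (through its enclosed mass) and a Plummer sphere, here simply placed at the centre for illustration. The parameter names (`rho0`, `rs`, `m_pl`, `a_pl`) and the kick-drift-kick leapfrog integrator are illustrative choices, not something prescribed in this thread. Each particle only feels the fixed potential and exerts no force of its own, so the cost per step grows linearly with the number of particles.

```python
import math

# Toy code units (an assumption for this sketch): G = 1, so lengths, masses
# and times only need to be consistent with each other.
G = 1.0


def nfw_accel(x, y, z, rho0, rs):
    """Acceleration from a spherical NFW dark-matter halo.

    Uses the enclosed mass
        M(r) = 4*pi*rho0*rs^3 * [ln(1 + r/rs) - (r/rs) / (1 + r/rs)]
    and a = -G*M(r)/r^2, directed towards the centre.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0
    s = r / rs
    m_enc = 4.0 * math.pi * rho0 * rs**3 * (math.log1p(s) - s / (1.0 + s))
    f = -G * m_enc / r**3  # acceleration vector = f * position vector
    return f * x, f * y, f * z


def plummer_accel(x, y, z, mass, a):
    """Acceleration from a Plummer sphere, Phi(r) = -G*M / sqrt(r^2 + a^2)."""
    d2 = x * x + y * y + z * z + a * a
    f = -G * mass / d2**1.5
    return f * x, f * y, f * z


def total_accel(pos, p):
    """Sum of the analytic components (here: NFW halo + central Plummer sphere)."""
    ax, ay, az = nfw_accel(*pos, p["rho0"], p["rs"])
    bx, by, bz = plummer_accel(*pos, p["m_pl"], p["a_pl"])
    return ax + bx, ay + by, az + bz


def total_potential(pos, p):
    """Matching potential, useful for checking energy conservation."""
    r = math.sqrt(sum(c * c for c in pos))
    amp = 4.0 * math.pi * G * p["rho0"] * p["rs"] ** 3
    # NFW: Phi = -amp * ln(1 + r/rs) / r, with limit -amp / rs at r = 0
    phi_nfw = -amp / p["rs"] if r == 0.0 else -amp * math.log1p(r / p["rs"]) / r
    phi_pl = -G * p["m_pl"] / math.sqrt(r * r + p["a_pl"] ** 2)
    return phi_nfw + phi_pl


def leapfrog(pos, vel, p, dt, n_steps):
    """Kick-drift-kick leapfrog for one test particle in the fixed potential.

    The particle feels the potential but does not pull on anything itself,
    so N particles cost O(N) per step instead of O(N^2).
    """
    pos, vel = list(pos), list(vel)
    acc = total_accel(pos, p)
    for _ in range(n_steps):
        vel = [v + 0.5 * dt * a for v, a in zip(vel, acc)]  # half kick
        pos = [x + dt * v for x, v in zip(pos, vel)]  # drift
        acc = total_accel(pos, p)
        vel = [v + 0.5 * dt * a for v, a in zip(vel, acc)]  # half kick
    return pos, vel


# Example: start on a circular orbit at r = 10, where v_c = sqrt(r * |a_r|).
params = {"rho0": 0.01, "rs": 20.0, "m_pl": 1.0, "a_pl": 1.0}
r0 = 10.0
a_r = total_accel((r0, 0.0, 0.0), params)[0]
v_c = math.sqrt(r0 * -a_r)
pos, vel = leapfrog((r0, 0.0, 0.0), (0.0, v_c, 0.0), params, dt=0.01, n_steps=2000)
print(math.sqrt(sum(c * c for c in pos)))  # stays close to 10
```

Starting a particle with the local circular speed and checking that it stays on a (nearly) circular orbit is a quick sanity test. Leapfrog is used here because it keeps the energy error bounded over many orbits, which matters if particles circle the galaxy for a long time.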
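The Kennicutt-Schmidt relation mentioned above is usually written as a power law linking gas surface density to star-formation-rate surface density. This sketch uses the global fit from Kennicutt (1998), Σ_SFR ≈ 2.5×10⁻⁴ (Σ_gas / 1 M☉ pc⁻²)^1.4 M☉ yr⁻¹ kpc⁻². The relation was fitted to whole galaxy discs, so applying it cell by cell on a grid, as the hypothetical helper `stars_formed` does, is an assumption made for a cheap toy model.

```python
def kennicutt_schmidt(sigma_gas, a=2.5e-4, n=1.4):
    """Star-formation-rate surface density from the Kennicutt-Schmidt relation.

    sigma_gas: gas surface density in M_sun / pc^2
    returns:   SFR surface density in M_sun / yr / kpc^2
    Defaults are the global fit of Kennicutt (1998): a ~ 2.5e-4, n ~ 1.4.
    """
    if sigma_gas < 0.0:
        raise ValueError("gas surface density cannot be negative")
    return a * sigma_gas**n


def stars_formed(gas_mass, cell_area_kpc2, dt_yr):
    """Stellar mass (M_sun) formed in one grid cell during one time step.

    Hypothetical helper for a grid-based toy model: converts the cell's gas
    mass to a surface density, applies the relation, and never turns more
    gas into stars than the cell actually contains.
    """
    sigma_gas = gas_mass / (cell_area_kpc2 * 1.0e6)  # 1 kpc^2 = 1e6 pc^2
    sfr = kennicutt_schmidt(sigma_gas) * cell_area_kpc2  # M_sun / yr
    return min(gas_mass, sfr * dt_yr)


# Example: 1e7 M_sun of gas in a 1 kpc^2 cell (10 M_sun/pc^2) over 1 Myr.
print(stars_formed(1.0e7, 1.0, 1.0e6))  # about 6.3e3 M_sun
```

Subtracting the formed mass from the cell's gas each step, and spawning that mass as new star particles in the disc, is one simple way to hook this into the test-particle picture.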

And that's just to model a typical, everyday spiral galaxy. If you want to model how this forms (in a hand-wavy way) from the Big Bang, then you need to consider the large-scale picture: how galaxy clusters form, and how protogalaxies merge to form the next generation of galaxies. That's not quite my own field, but I know enough to know it's possible to do in some nice approximate way, like you are probably suggesting. You could, for example, model a Universe with various smaller galaxy seeds (but not too many, unless you want the performance issues again). There would need to be some underlying filament potential that describes the large-scale structure of the Universe (e.g. https://phys.org/news/2014-04-cosmologists-cosmic-filaments-voids.html), and your test particles would then move towards the filaments to mimic the natural formation of the large-scale structure.
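A very rough sketch of the "seeds falling onto filaments" idea, in 2D to keep it short. Everything here is an assumption for illustration: filaments are treated as infinite straight lines with a softened logarithmic potential (the potential of an infinite line of mass goes as ln of the distance), and the linear `damping` term is a game-style hack so that seeds settle onto a filament instead of oscillating through it forever. `Seed`, `make_filament`, `g_lambda` and `soft` are hypothetical names; this is not a cosmological model.

```python
import math
from dataclasses import dataclass


@dataclass
class Seed:
    """A galaxy seed treated as a test particle (2D position and velocity)."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def make_filament(px, py, dx, dy):
    """Hypothetical filament: an infinite line through (px, py) along (dx, dy)."""
    norm = math.hypot(dx, dy)
    return (px, py, dx / norm, dy / norm)


def filament_accel(x, y, filaments, g_lambda=1.0, soft=0.5):
    """Pull towards each filament from Phi = G*lambda*ln(d^2 + soft^2),
    where d is the perpendicular distance from the seed to the line."""
    ax = ay = 0.0
    for px, py, ux, uy in filaments:
        rx, ry = x - px, y - py
        along = rx * ux + ry * uy
        perp_x, perp_y = rx - along * ux, ry - along * uy  # perpendicular offset
        f = -2.0 * g_lambda / (perp_x**2 + perp_y**2 + soft**2)
        ax += f * perp_x
        ay += f * perp_y
    return ax, ay


def evolve(seeds, filaments, dt=0.01, n_steps=2000, damping=1.0):
    """Semi-implicit Euler with a linear drag term (toy damping, not physical)."""
    for _ in range(n_steps):
        for s in seeds:
            ax, ay = filament_accel(s.x, s.y, filaments)
            s.vx += dt * (ax - damping * s.vx)
            s.vy += dt * (ay - damping * s.vy)
            s.x += dt * s.vx
            s.y += dt * s.vy
    return seeds


# Example: two crossing filaments and a few seeds scattered around them.
filaments = [make_filament(0.0, 0.0, 1.0, 0.0), make_filament(0.0, 0.0, 1.0, 1.0)]
seeds = [Seed(3.0, 2.0), Seed(-4.0, 1.0), Seed(2.0, -5.0)]
for s in evolve(seeds, filaments):
    print(f"{s.x:6.2f} {s.y:6.2f}")
```

Because each seed only feels the fixed filament field, the cost again grows linearly with the number of seeds; letting seeds that end up close together merge would be a cheap stand-in for hierarchical galaxy growth.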

As you might guess, there is a lot more that goes into this (and even I'm not 100% sure of all the steps), but I hope this little post has helped explain one possible path to modelling a Universe on the cheap! ;-)

Of course, now that you have 'outed' yourself as an astrophysicist, this debate might get a whole lot more interesting ;-)

Haha, guilty as charged! Never thought I was hiding though! :P

Incidentally, I can't find anything on a King profile; do you have a good link explaining it?

Hmmm, unfortunately some of these 'basic' things don't have nice pages for the layman on places like Wikipedia. There are a ton of astro articles, such as university pages, if you type 'King cluster profile' into Google, but I'm not sure which one to recommend. But there's hopefully enough info and links here to help for now! ;-)
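For what it's worth, one common version is King's (1962) empirical fit to the surface density of globular clusters: Σ(R) = k [ (1 + (R/r_c)²)^(-1/2) - (1 + (r_t/r_c)²)^(-1/2) ]² for R < r_t, and zero beyond, where r_c is the core radius and r_t the tidal radius. (King's later 1966 models are a different, dynamically derived family, so it's worth checking which one a given source means.) A minimal sketch, with the function and parameter names chosen for illustration:

```python
import math


def king62_surface_density(r, k, r_core, r_tidal):
    """Empirical King (1962) surface-density profile of a star cluster.

    r:       projected distance from the cluster centre
    k:       normalisation (sets the central surface density)
    r_core:  core radius
    r_tidal: tidal radius, beyond which the profile is zero
    """
    if r >= r_tidal:
        return 0.0
    edge = 1.0 / math.sqrt(1.0 + (r_tidal / r_core) ** 2)
    term = 1.0 / math.sqrt(1.0 + (r / r_core) ** 2) - edge
    return k * term**2


# Example: a cluster with a 1 pc core and a 30 pc tidal radius.
for r in (0.0, 1.0, 5.0, 10.0, 30.0):
    print(r, king62_surface_density(r, k=1.0, r_core=1.0, r_tidal=30.0))
```

Unlike the Plummer profile, this one is a projected (surface) density with a hard edge at the tidal radius, so it describes what you would see on the sky rather than giving a potential to move particles in.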