Hey folks,

Seeing some odd behaviour with a very simple OpenGL shader I'm trying to get working.

Here's the vertex shader:

    #version 400

    ////////////////////
    // Input Variables
    ////////////////////
    in vec3 inputPosition;
    in vec3 inputColor;

    ////////////////////
    // Output Variables
    ////////////////////
    out vec3 outputColor;

    ////////////////////
    // Uniform Variables
    ////////////////////
    uniform mat4 worldMatrix;
    uniform mat4 viewMatrix;
    uniform mat4 projectionMatrix;

    ////////////////////
    // Vertex Shader
    ////////////////////
    void main( void )
    {
        // calculate the position of the vertex using world/view/projection matrices
        gl_Position = worldMatrix * vec4( inputPosition, 1.0f );
        gl_Position = viewMatrix * gl_Position;
        gl_Position = projectionMatrix * gl_Position;

        // store the input colour for the fragment shader
        outputColor = vec3( 1.0f, 1.0f, 1.0f );//inputColor;
    }

And this is the fragment shader:

#version 400

////////////////////

// Input Variables

////////////////////

in vec3 inputColor;

////////////////////

// Output Variables

////////////////////

out vec4 outputColor;

////////////////////

// Fragment Shader

////////////////////

void main( void )

{

outputColor = vec4( inputColor, 1.0f );//vec4( 1.0f, 1.0f, 1.0f, 1.0f);

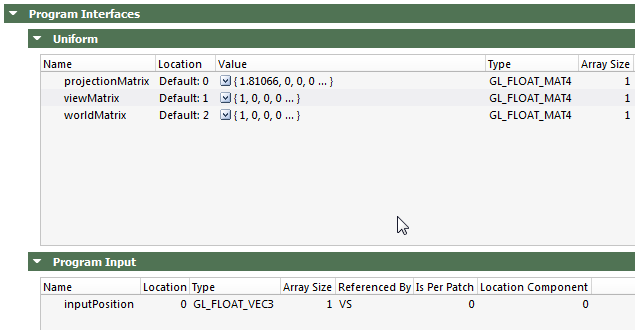

}If I try and use the 'inputColor' in the fragment shader everything is just rendered black... however if I explicitly set the colour (in the commented out code) everything renders correctly... so there's a breakdown in communication between my vertex and fragment shaders.

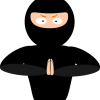

Is there anything obvious I'm missing in the shader code? I'm not seeing any compilation warnings from the C++ code side of things, and all of the world/view/projection matrices are being passed in correctly.
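One thing I haven't ruled out yet: as far as I understand, without explicit layout(location = ...) qualifiers the linker pairs a vertex shader `out` with a fragment shader `in` by name and type, and in my shaders the vertex output is called 'outputColor' while the fragment input is called 'inputColor'. Below is a minimal sketch of what a matched pair might look like. The names `vertexColor` and `fragColor` are made up for illustration and are not from my project, and I've kept the hard-coded white so the test is the same as above.

```glsl
// Vertex shader (sketch)
#version 400

in vec3 inputPosition;
in vec3 inputColor;

// Hypothetical name: the fragment shader declares an `in` with this same name and type.
out vec3 vertexColor;

uniform mat4 worldMatrix;
uniform mat4 viewMatrix;
uniform mat4 projectionMatrix;

void main( void )
{
    // Same world -> view -> projection transform as in the original shader.
    gl_Position = projectionMatrix * viewMatrix * worldMatrix * vec4( inputPosition, 1.0 );

    // Hard-coded white, as in my test; swap in inputColor once the link works.
    vertexColor = vec3( 1.0, 1.0, 1.0 );
}
```

```glsl
// Fragment shader (sketch)
#version 400

// Matches the vertex shader's `out vec3 vertexColor` by name and type.
in vec3 vertexColor;

// Hypothetical name for the single colour output.
out vec4 fragColor;

void main( void )
{
    fragColor = vec4( vertexColor, 1.0 );
}
```

If the name mismatch is the culprit, I'd expect this pair to render white rather than black, but I haven't confirmed that yet.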

Thanks!