On 23/09/2017 at 7:01 PM, matt77hias said:

So deferred shading is categorized under post-process (since you need to convert from NDC to view space or world space, depending on your shading space)? Although, I do not see material unpacking data for converting data from the GBuffer (assuming you do not use 32-bit channels for your materials). And similarly for sky domes: are they categorized under post-processing (since you need to convert back to world space, which isn't handled by the camera)? If so, I understand the separation, and I am thinking of using something similar for the camera (and moving this upstream, away from my passes). Originally, I thought you created FrameCB for custom (engine-independent) scripts as a kind of data API for the user.

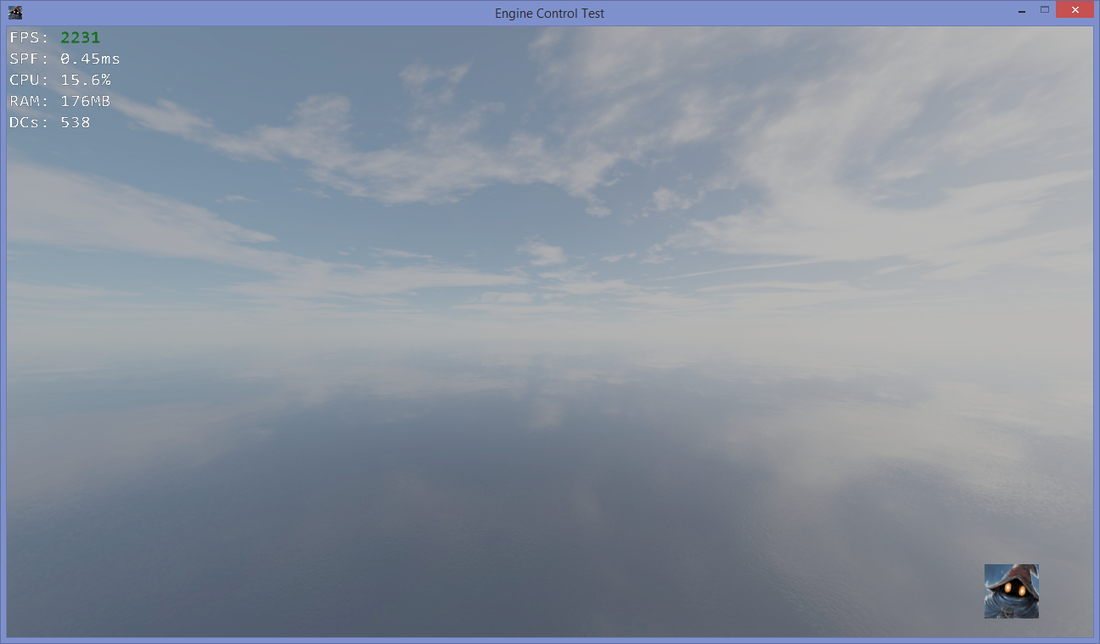

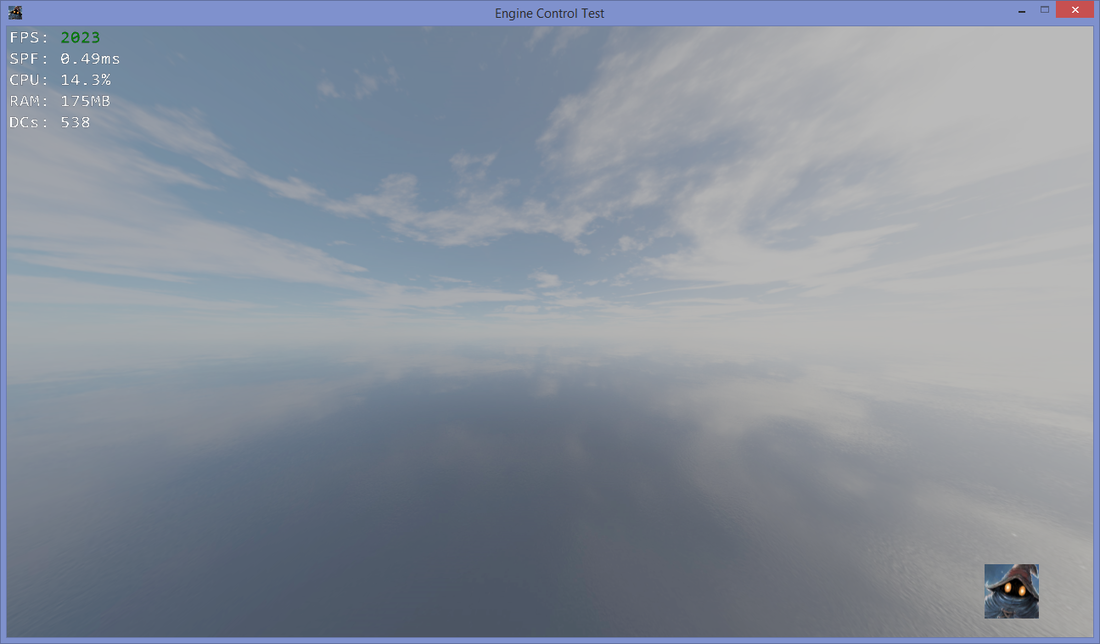

You are right: deferred lighting can only be done for the main camera in my engine, and while sky rendering can be performed for other passes, they don't meaningfully use the previous camera props in their pixel shaders. I didn't understand the last sentence, so you are probably right.
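
For reference, the NDC-to-view-space conversion mentioned in the quote can be sketched roughly as below. This is only an illustration, not code from either engine: it assumes a Direct3D-style left-handed perspective projection with NDC depth in [0, 1], and every name in it (`projection_terms`, `view_to_ndc`, `ndc_to_view`) is made up for the example. In a real deferred pass the same arithmetic would live in the pixel shader, with the four projection terms supplied through a per-camera constant buffer.

```python
import math

def projection_terms(fov_y, aspect, near, far):
    """Return the four non-trivial terms of an assumed D3D-style
    left-handed perspective projection (NDC depth in [0, 1])."""
    sy = 1.0 / math.tan(0.5 * fov_y)   # vertical scale
    sx = sy / aspect                   # horizontal scale
    a = far / (far - near)             # depth scale
    b = -near * far / (far - near)     # depth offset
    return sx, sy, a, b

def view_to_ndc(p_view, terms):
    """Project a view-space point to NDC (what the rasterizer does)."""
    sx, sy, a, b = terms
    x, y, z = p_view
    return (sx * x / z, sy * y / z, a + b / z)

def ndc_to_view(p_ndc, terms):
    """Reconstruct the view-space position from NDC xy and the depth
    value read back from the depth buffer (hypothetical helper)."""
    sx, sy, a, b = terms
    x_ndc, y_ndc, z_ndc = p_ndc
    z = b / (z_ndc - a)                # invert z_ndc = a + b / z
    return (x_ndc * z / sx, y_ndc * z / sy, z)

terms = projection_terms(math.radians(60.0), 16.0 / 9.0, 0.1, 100.0)
p_view = (1.5, -0.75, 10.0)
p_back = ndc_to_view(view_to_ndc(p_view, terms), terms)
```

Going on to world space would additionally need the inverse view matrix, which is why these values are camera-specific rather than pass-specific.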