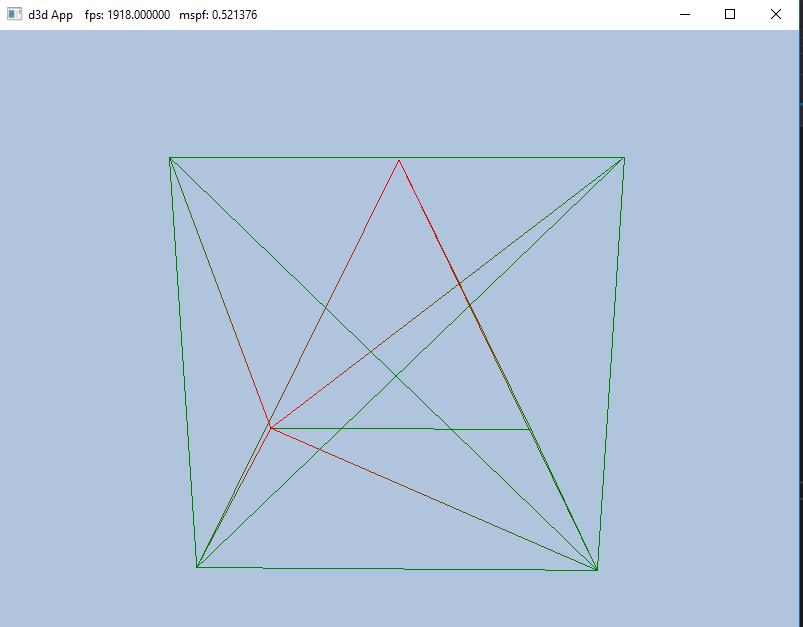

I'm currently learning how to store multiple objects in a single vertex buffer for efficiency. So far I have a cube and a pyramid rendered with `ID3D12GraphicsCommandList::DrawIndexedInstanced`, but when the frame is drawn I can't see the pyramid, because it is drawn inside the cube. I've been told to "use the world transformation matrix so that the box and pyramid are disjoint in world space".

Can anyone give some insight into how this is done?
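One way to read the hint: leave both meshes in local space exactly as they are in the shared vertex buffer, and give each object its own world matrix. With the row-vector convention the shader uses (`mul(float4(vin.PosL, 1.0f), gWorldViewProj)`), a translation lives in the fourth row of the matrix. The sketch below is plain C++ with hypothetical `Vec3`/`Mat4` types (not DirectXMath) so it runs anywhere. The offsets of -2 and +2 along x are an assumption; any separation larger than the objects' extents works. It transforms the cube's and pyramid's corners and checks whether their world-space bounding boxes overlap.

```cpp
#include <algorithm>
#include <array>
#include <vector>

// Hypothetical minimal stand-ins for XMFLOAT3 and a 4x4 matrix.
struct Vec3 { float x, y, z; };
using Mat4 = std::array<std::array<float, 4>, 4>;

// Local-space corners copied from the question's vertex array.
const std::vector<Vec3> kCube = {
    {-1, -1, -1}, {-1, +1, -1}, {+1, +1, -1}, {+1, -1, -1},
    {-1, -1, +1}, {-1, +1, +1}, {+1, +1, +1}, {+1, -1, +1}};
const std::vector<Vec3> kPyramid = {
    {-1, -1, -1}, {-1, -1, +1}, {+1, -1, -1}, {+1, -1, +1}, {0, +1, 0}};

// Row-vector translation matrix: the offset is stored in row 3,
// matching HLSL's mul(float4(p, 1), M).
Mat4 Translation(float tx, float ty, float tz) {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    m[3][0] = tx; m[3][1] = ty; m[3][2] = tz;
    return m;
}

// Computes float4(p, 1) * m and keeps xyz (w stays 1 for affine m).
Vec3 TransformPoint(const Vec3& p, const Mat4& m) {
    const float v[4] = {p.x, p.y, p.z, 1.0f};
    float r[3] = {0.0f, 0.0f, 0.0f};
    for (int c = 0; c < 3; ++c)
        for (int k = 0; k < 4; ++k) r[c] += v[k] * m[k][c];
    return {r[0], r[1], r[2]};
}

struct AABB { Vec3 lo, hi; };

// World-space axis-aligned bounds of a mesh under a given world matrix.
AABB WorldBounds(const std::vector<Vec3>& local, const Mat4& world) {
    AABB b{{1e30f, 1e30f, 1e30f}, {-1e30f, -1e30f, -1e30f}};
    for (const Vec3& p : local) {
        const Vec3 w = TransformPoint(p, world);
        b.lo = {std::min(b.lo.x, w.x), std::min(b.lo.y, w.y), std::min(b.lo.z, w.z)};
        b.hi = {std::max(b.hi.x, w.x), std::max(b.hi.y, w.y), std::max(b.hi.z, w.z)};
    }
    return b;
}

// True if two boxes intersect or touch on every axis.
bool Overlap(const AABB& a, const AABB& b) {
    return a.lo.x <= b.hi.x && b.lo.x <= a.hi.x &&
           a.lo.y <= b.hi.y && b.lo.y <= a.hi.y &&
           a.lo.z <= b.hi.z && b.lo.z <= a.hi.z;
}
```

With identity world matrices the two bounding boxes coincide, which matches the symptom. With `Translation(-2, 0, 0)` for the cube and `Translation(2, 0, 0)` for the pyramid they are disjoint. In the Direct3D app the equivalent would be `XMMatrixTranslation(-2.0f, 0.0f, 0.0f)` and `XMMatrixTranslation(2.0f, 0.0f, 0.0f)`, each combined with view and projection into that object's `gWorldViewProj` before its draw call; the vertex buffer itself stays unchanged.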

First, I initialize the vertices in local space:

```cpp
std::array<VPosData, 13> vertices =
{
    // Cube (vertices 0-7)
    VPosData({ XMFLOAT3(-1.0f, -1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(-1.0f, +1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, +1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, -1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(-1.0f, -1.0f, +1.0f) }),
    VPosData({ XMFLOAT3(-1.0f, +1.0f, +1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, +1.0f, +1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, -1.0f, +1.0f) }),

    // Pyramid (vertices 8-12): square base at y = -1, apex at y = +1
    VPosData({ XMFLOAT3(-1.0f, -1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(-1.0f, -1.0f, +1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, -1.0f, -1.0f) }),
    VPosData({ XMFLOAT3(+1.0f, -1.0f, +1.0f) }),
    VPosData({ XMFLOAT3( 0.0f, +1.0f,  0.0f) })
};
```

Then the data is stored in a container so the submeshes can be drawn individually:

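```cpp
// The cube's 36 indices come first in the shared index buffer,
// followed by the pyramid's; the pyramid's vertices start at slot 8.
SubmeshGeometry submesh;
submesh.IndexCount = 36;                         // cube indices only
submesh.StartIndexLocation = 0;
submesh.BaseVertexLocation = 0;

SubmeshGeometry pyramid;
pyramid.IndexCount = (UINT)indices.size() - 36;  // pyramid indices only
pyramid.StartIndexLocation = 36;
pyramid.BaseVertexLocation = 8;

mBoxGeo->DrawArgs["box"] = submesh;
mBoxGeo->DrawArgs["pyramid"] = pyramid;
```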
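The three numbers in each `SubmeshGeometry` select a slice of the shared buffers: the i-th index of a draw is read from position `StartIndexLocation + i` of the index buffer, and `BaseVertexLocation` is added to that index value before the vertex is fetched. That is why the pyramid's indices can stay local (0 to 4) even though its vertices sit at slots 8 to 12. Below is a minimal CPU-side sketch of that lookup, assuming 16-bit indices, the cube's 36 indices stored first, and a hypothetical `SubmeshArgs` struct standing in for `SubmeshGeometry`. It also shows that an `IndexCount` equal to the whole `indices.size()` would make the pyramid draw read past the end of the index buffer.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical mirror of the three SubmeshGeometry fields used above.
struct SubmeshArgs {
    std::uint32_t IndexCount;
    std::uint32_t StartIndexLocation;
    std::int32_t BaseVertexLocation;
};

// True if the draw stays inside both buffers: it reads indices
// [StartIndexLocation, StartIndexLocation + IndexCount), and every
// index plus BaseVertexLocation must name an existing vertex.
bool InBounds(const std::vector<std::uint16_t>& indices, std::size_t vertexCount,
              const SubmeshArgs& s) {
    if (std::size_t(s.StartIndexLocation) + s.IndexCount > indices.size()) return false;
    for (std::uint32_t i = 0; i < s.IndexCount; ++i) {
        const std::int64_t v =
            std::int64_t(s.BaseVertexLocation) + indices[s.StartIndexLocation + i];
        if (v < 0 || v >= std::int64_t(vertexCount)) return false;
    }
    return true;
}

// Vertex slots fetched by one indexed draw, in order (call only if InBounds).
std::vector<std::uint32_t> FetchedVertexSlots(const std::vector<std::uint16_t>& indices,
                                              const SubmeshArgs& s) {
    std::vector<std::uint32_t> slots;
    slots.reserve(s.IndexCount);
    for (std::uint32_t i = 0; i < s.IndexCount; ++i)
        slots.push_back(std::uint32_t(s.BaseVertexLocation +
                                      indices[s.StartIndexLocation + i]));
    return slots;
}
```

If the pyramid is built from four side triangles plus two base triangles (an assumption, since the index list isn't shown), its slice holds 18 indices, so `indices.size() - 36` evaluates to 18.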

Then the objects are drawn:

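```cpp
// Each call draws one submesh out of the shared vertex/index buffers.
const SubmeshGeometry& box = mBoxGeo->DrawArgs["box"];
mCommandList->DrawIndexedInstanced(box.IndexCount, 1,
    box.StartIndexLocation, box.BaseVertexLocation, 0);

const SubmeshGeometry& pyr = mBoxGeo->DrawArgs["pyramid"];
mCommandList->DrawIndexedInstanced(pyr.IndexCount, 1,
    pyr.StartIndexLocation, pyr.BaseVertexLocation, 0);
```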
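As posted, nothing is rebound between the two draw calls, so both read the same `gWorldViewProj` and both meshes land in the same place. Overwriting a single upload buffer between the two calls isn't enough either, because the GPU reads it only when the command list executes. A common pattern is one constant-buffer element per object, each padded to 256 bytes because D3D12 requires constant buffer views to be 256-byte aligned; before each draw, that element's address (the buffer's `GetGPUVirtualAddress()` plus the element offset) is bound, for example with `SetGraphicsRootConstantBufferView` if the root signature declares a root CBV. The question doesn't show its root signature, so that binding call is an assumption. The sketch below is a CPU-only model of the layout; `ObjectConstants`, `PerObjectBuffer`, and `CalcConstantBufferByteSize` are hypothetical names written here for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Mirrors cbPerObject: one float4x4 (64 bytes).
struct ObjectConstants { float WorldViewProj[4][4]; };

// Rounds a size up to the next multiple of 256 bytes, the D3D12
// constant buffer alignment.
constexpr std::uint32_t CalcConstantBufferByteSize(std::uint32_t byteSize) {
    return (byteSize + 255u) & ~255u;
}

// Hypothetical CPU-side model of an upload buffer holding one
// ObjectConstants element per object at 256-byte strides.
class PerObjectBuffer {
public:
    explicit PerObjectBuffer(std::uint32_t objectCount)
        : stride_(CalcConstantBufferByteSize(sizeof(ObjectConstants))),
          bytes_(std::size_t(stride_) * objectCount) {}

    // Byte offset of object i; on the GPU this would be added to the
    // resource's GetGPUVirtualAddress() before binding.
    std::uint64_t Offset(std::uint32_t objIndex) const {
        return std::uint64_t(objIndex) * stride_;
    }

    void Write(std::uint32_t objIndex, const ObjectConstants& c) {
        std::memcpy(bytes_.data() + Offset(objIndex), &c, sizeof(c));
    }

    ObjectConstants Read(std::uint32_t objIndex) const {
        ObjectConstants c;
        std::memcpy(&c, bytes_.data() + Offset(objIndex), sizeof(c));
        return c;
    }

private:
    std::uint32_t stride_;              // declared first: bytes_ uses it
    std::vector<unsigned char> bytes_;
};
```

With two objects, the box's matrix would go at offset 0 and the pyramid's at offset 256, and each `DrawIndexedInstanced` call would be preceded by binding its own element.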

Vertex and pixel shaders (HLSL):

```hlsl
cbuffer cbPerObject : register(b0)
{
    float4x4 gWorldViewProj;
};

struct VertexIn
{
    float3 PosL  : POSITION;
    float4 Color : COLOR;
};

struct VertexOut
{
    float4 PosH  : SV_POSITION;
    float4 Color : COLOR;
};

VertexOut VS(VertexIn vin)
{
    VertexOut vout;

    // Transform to homogeneous clip space.
    vout.PosH = mul(float4(vin.PosL, 1.0f), gWorldViewProj);

    // Just pass vertex color into the pixel shader.
    vout.Color = vin.Color;

    return vout;
}

float4 PS(VertexOut pin) : SV_Target
{
    return pin.Color;
}
```
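Since the vertex shader computes `mul(float4(vin.PosL, 1.0f), gWorldViewProj)`, positions are treated as row vectors, so each object's matrix has to be composed world first: World * View * Proj. The sketch below checks that ordering with hypothetical plain-C++ helpers; a uniform scale standing in for view * projection is an assumption that only keeps the arithmetic exact. In the Direct3D app the analogous DirectXMath expression would be `world * view * proj`, passed through `XMMatrixTranspose` before being copied into the constant buffer, because HLSL packs constant-buffer matrices column-major by default.

```cpp
#include <array>

// Hypothetical plain-C++ types; XMVECTOR/XMMATRIX play this role in the app.
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix, the convention of HLSL mul(float4(p, 1), M).
Vec4 Mul(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int k = 0; k < 4; ++k) r[c] += v[k] * m[k][c];
    return r;
}

// Matrix product a * b: for row vectors, applying it means a first, then b.
Mat4 Mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 Identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Translation stored in row 3 (row-vector convention).
Mat4 Translation(float tx, float ty, float tz) {
    Mat4 m = Identity();
    m[3][0] = tx; m[3][1] = ty; m[3][2] = tz;
    return m;
}

Mat4 UniformScale(float s) {
    Mat4 m = Identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}
```

For the point (1, 1, 1), `Mul(p, Mul(world, viewProj))` with `world = Translation(-2, 0, 0)` and `viewProj = UniformScale(0.5f)` equals transforming by `world` and then by `viewProj` one step at a time, while the reversed product `Mul(viewProj, world)` moves the point to a different x.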