2 minutes ago, JoeJ said: I would download the voxelized data and visualize a cube for each voxel to be sure the bug is not just in your visualization.

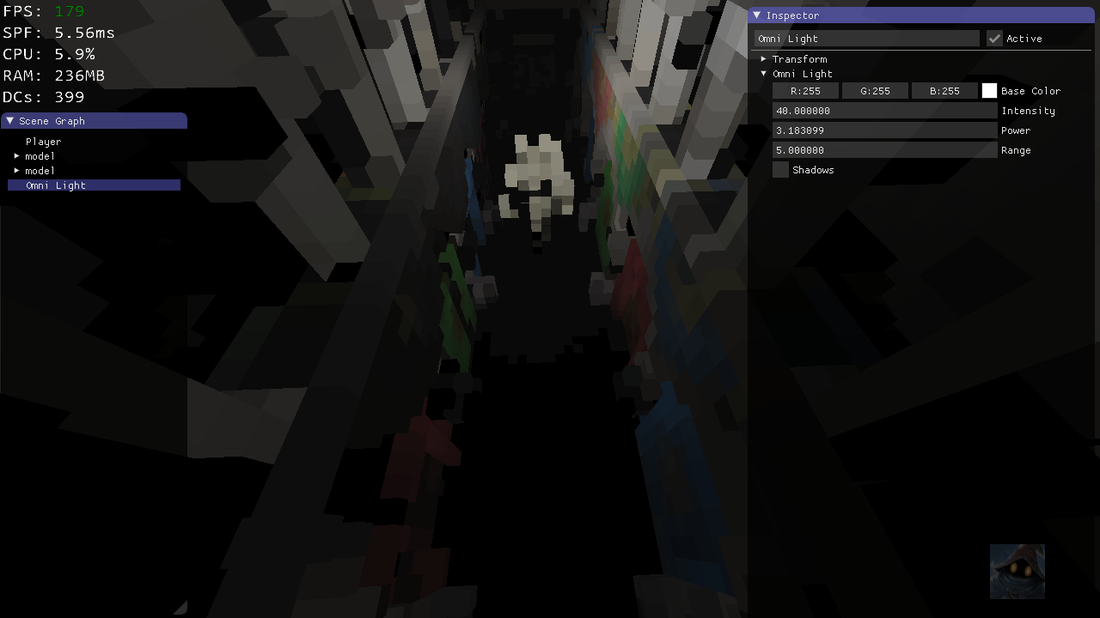

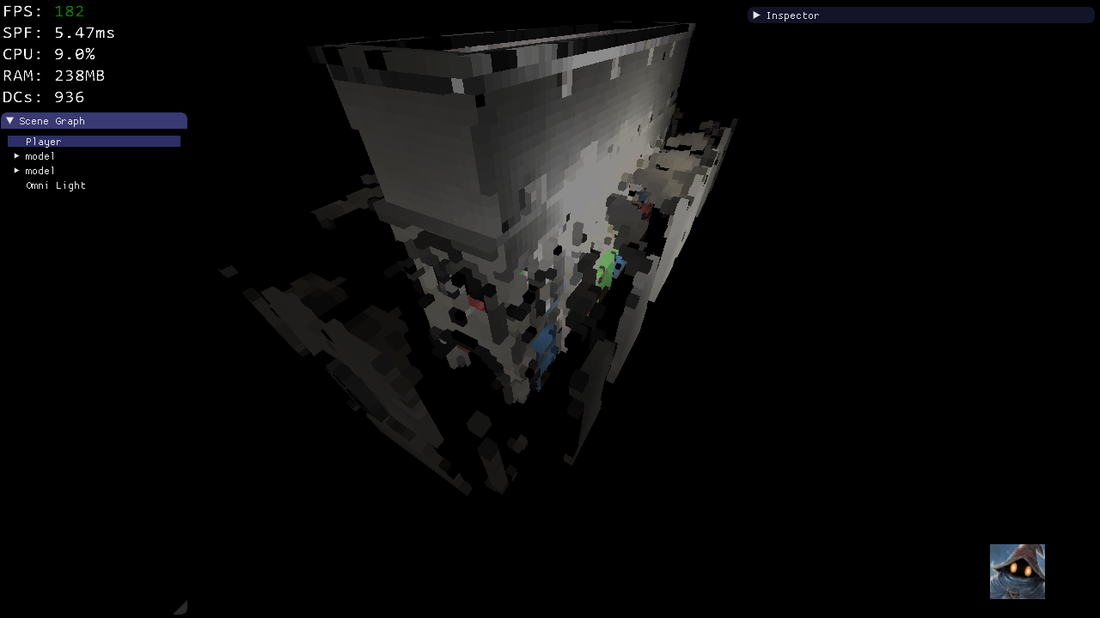

The voxels are occupied. However, the filled voxels for a single plane occur in multiple consecutive slices (due to the slope of the plane).
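To illustrate why a sloped plane ends up in several consecutive slices, here is a minimal sketch in Python. It is not my actual voxelization code: the function name `occupied_slices`, the plane form `z = slope * x + offset`, and the voxel-unit coordinates are all assumptions made for the example.

```python
import math

def occupied_slices(slope, offset, grid_size):
    """Return the sorted z-slice indices touched by the plane z = slope * x + offset.

    Hypothetical helper: coordinates are in voxel units, and the plane is
    evaluated over each voxel column's x-extent [x, x + 1] (independent of y).
    A slice whose boundary the plane only touches is counted as occupied.
    """
    slices = set()
    for x in range(grid_size):
        # Plane height at the left and right edge of this voxel column.
        z0 = slope * x + offset
        z1 = slope * (x + 1) + offset
        lo = math.floor(min(z0, z1))
        hi = math.floor(max(z0, z1))
        # Clamp to the grid and mark every slice the plane passes through.
        for z in range(max(lo, 0), min(hi, grid_size - 1) + 1):
            slices.add(z)
    return sorted(slices)

flat = occupied_slices(0.0, 3.5, 8)    # axis-aligned plane
sloped = occupied_slices(0.5, 1.5, 8)  # plane rising half a voxel per column
print(flat)    # [3]
print(sloped)  # [1, 2, 3, 4, 5]
```

The axis-aligned plane stays in one slice, while the sloped one spreads over five, which matches what the screenshots show.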

4 minutes ago, JoeJ said: I assume aligning the grid to view direction is no good idea because voxels will pop like crazy.

I guess that the values stored in the voxels can indeed start fluctuating as the camera moves and changes orientation. That said, indirect illumination is generally much smoother and lower-frequency than direct illumination. But I am curious about the final results too.

For the current debug visualization, the popping is quite strange, since the voxels turn black. I wouldn't be surprised if the color changed, but black indicates that the voxel is empty.
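One way to confirm that black really means "empty" rather than "occupied but unlit" would be to give empty voxels a marker color in the debug pass. A minimal sketch in Python, assuming each voxel is an `(r, g, b, a)` tuple where `a == 0` means unoccupied; that layout, `EMPTY_MARKER`, and `debug_color` are hypothetical and not taken from my renderer:

```python
# Marker color for empty voxels (magenta), chosen so it cannot be confused with black.
EMPTY_MARKER = (1.0, 0.0, 1.0)

def debug_color(voxel):
    """Map a voxel to the color shown by the debug visualization.

    Assumes voxel = (r, g, b, a) with a == 0 meaning "not occupied".
    """
    r, g, b, a = voxel
    if a == 0.0:
        # Empty voxel: show the marker instead of whatever RGB happens to be stored.
        return EMPTY_MARKER
    # Occupied voxel: show its stored color, even if that color is black.
    return (r, g, b)
```

With this, a voxel that pops to magenta is being cleared or never written, while one that pops to black is occupied and storing a black value.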