Hi, I am considering investing in this startup, http://adshir.com/, and I would like some feedback on what the developer community thinks about the technology.

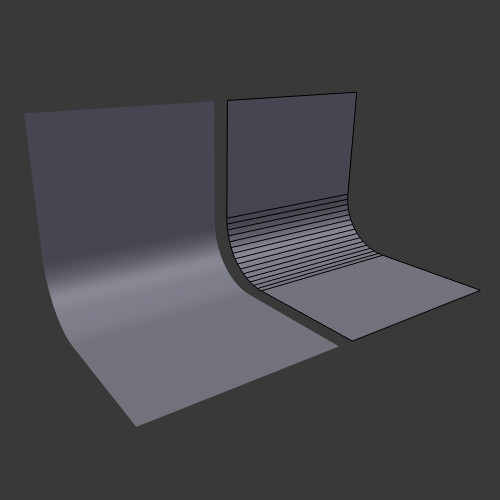

So far what they have is a demo that runs in real time on a tablet at over 60 FPS; it runs locally on the integrated GPU of its i7. They have a 20,000-triangle dinosaur that looks impressive, better than anything I have seen on a mobile device, with reflections and shadows that look very close to how they would look in the real world. They achieved this thanks to a new algorithm for a rendering technique called path tracing/ray tracing, which is very demanding and so far has been used mostly for static images.

From what I have checked, there is no real option for real-time ray tracing (60 FPS on consumer devices). Imagination Technologies was supposed to release a chip that supports real-time ray tracing, but I could not find a product on the market, or even confirm that the technology is finished, as the last demo I found was running on a PC. The other one is OTOY with their Brigade engine, which is still not released and, if I understand correctly, is more of a cloud solution than a hardware solution.

Would there be sizable interest in the developer community in having such a product as a plug-in for existing game engines? How important is ray tracing to the future of high-end real-time graphics?