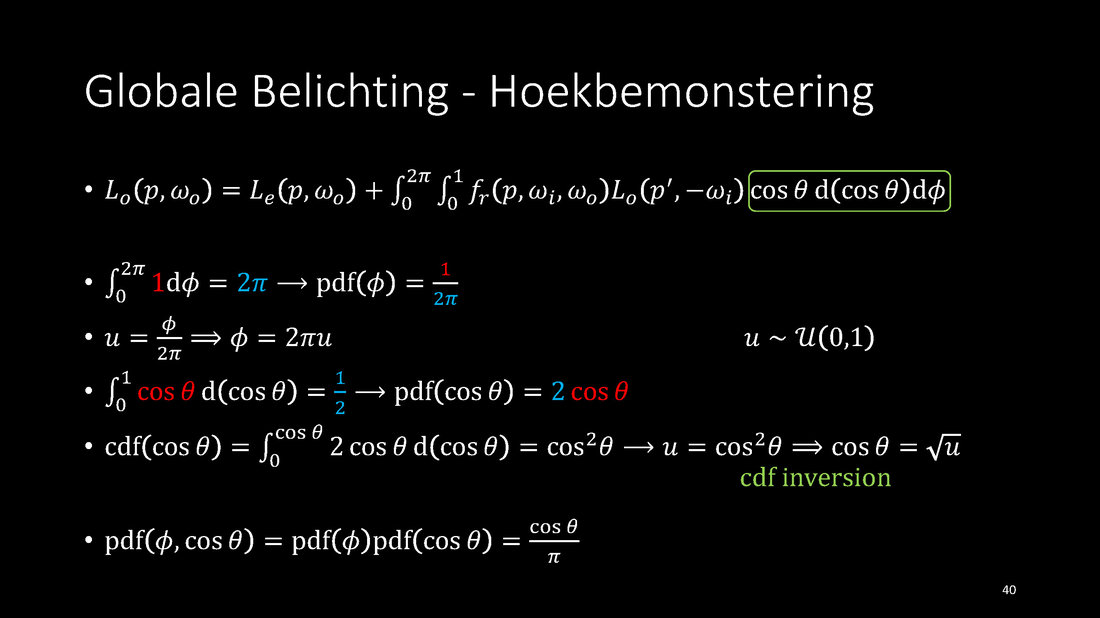

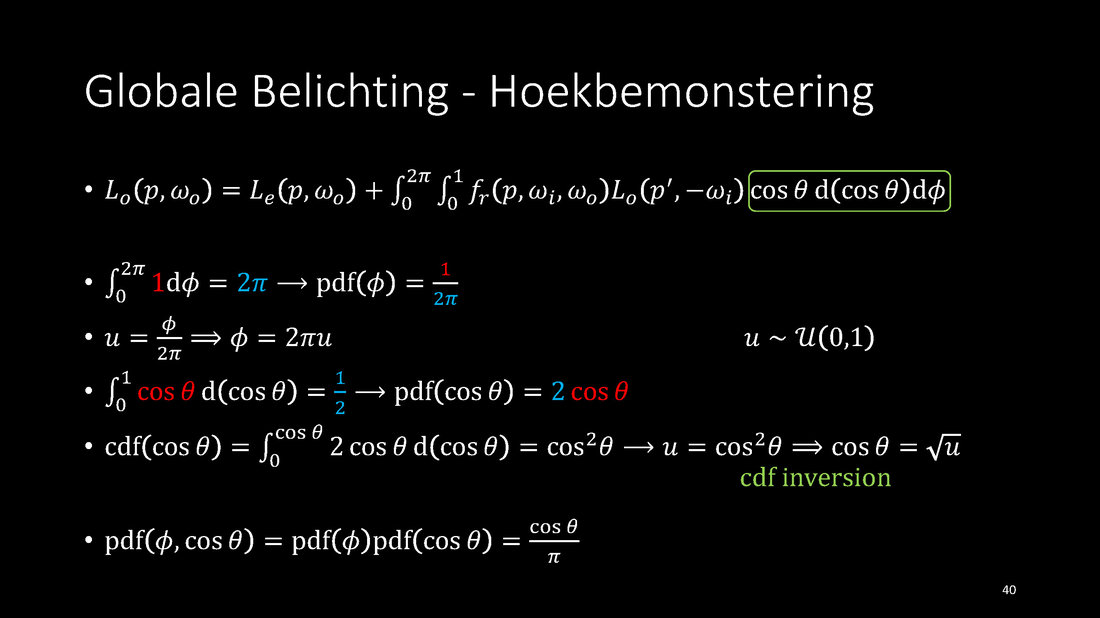

Brute-force path tracing using Cosine-Weighted Hemisphere Sampling applied to the Rendering Equation:
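
For reference, here is one common way to write the rendering equation and the Monte Carlo estimator that brute-force path tracing uses for it. The notation is chosen here and is not taken from the figures: $\Omega$ is the hemisphere around the normal $\mathbf{n}$, $L_e$ is emitted and $L_i$ incoming radiance, and the directions $\omega_k$ are drawn from a pdf $p$.

$$
L_o(\mathbf{x},\omega_o) = L_e(\mathbf{x},\omega_o) + \int_{\Omega} f_r(\mathbf{x},\omega_i,\omega_o)\,L_i(\mathbf{x},\omega_i)\,\cos\theta_i\,\mathrm{d}\omega_i,
\qquad \cos\theta_i = \mathbf{n}\cdot\omega_i
$$

$$
L_o(\mathbf{x},\omega_o) \approx L_e(\mathbf{x},\omega_o) + \frac{1}{N}\sum_{k=1}^{N} \frac{f_r(\mathbf{x},\omega_k,\omega_o)\,L_i(\mathbf{x},\omega_k)\,\cos\theta_k}{p(\omega_k)}
$$

Cosine-weighted hemisphere sampling picks $p(\omega) = \cos\theta / \pi$.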

(P.S.: The title is in Dutch because I teach this in a Dutch course, but you do not need it to understand the big picture.)

Once you have sampled a vector over a standard hemisphere (e.g., the hemisphere around the z axis) using Cosine-Weighted Hemisphere Sampling, you need to construct an orthonormal basis around the actual shading normal. This basis transforms the sampled vector from the standard hemisphere to the hemisphere around the shading normal.
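
Here is a minimal Python sketch of both steps. It assumes directions are plain `(x, y, z)` tuples and that the frame `(t, b, n)` is already orthonormal. The function names are hypothetical. The sampler uses Malley's method, which takes a uniform sample on the unit disk and projects it up onto the hemisphere. This is one common way to obtain the pdf $\cos\theta / \pi$.

```python
import math
import random


def sample_cosine_hemisphere(u1: float, u2: float) -> tuple[float, float, float]:
    """Cosine-weighted direction on the standard hemisphere around +z.

    Malley's method: pick a uniform point on the unit disk, then project it
    up onto the hemisphere. The resulting pdf is cos(theta) / pi.
    """
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    x = r * math.cos(phi)
    y = r * math.sin(phi)
    z = math.sqrt(max(0.0, 1.0 - u1))  # z = cos(theta)
    return (x, y, z)


def local_to_world(d, t, b, n):
    """Map local direction d = (x, y, z) to x*t + y*b + z*n.

    The local z axis ends up on the shading normal n.
    """
    x, y, z = d
    return tuple(x * t[i] + y * b[i] + z * n[i] for i in range(3))


# Example shading frame (hypothetical): normal along +x.
n = (1.0, 0.0, 0.0)
t = (0.0, 1.0, 0.0)
b = (0.0, 0.0, 1.0)

rng = random.Random(42)
d_world = local_to_world(sample_cosine_hemisphere(rng.random(), rng.random()), t, b, n)
print(d_world)  # a unit vector in the hemisphere around n
```

Any orthonormal frame whose third axis is the shading normal works here. The sampled vector keeps its length, and its angle to `n` equals its angle to +z in the standard hemisphere.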

By including the cosine factor in the sampling strategy, you completely eliminate the noise (variance) caused by this factor. It is common practice to include both the BRDF and the cosine factor in the sampling strategy to reduce the noise caused by both. For a Lambertian BRDF, however, this adds nothing, because that BRDF is a constant ($\rho/\pi$). Since the cosine is part of the pdf, it cancels the cosine of the integrand. (You can even "cancel" the $\pi$ of your Lambertian BRDF.)
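
The cancellation can be written out for a Lambertian BRDF $f_r = \rho/\pi$ with albedo $\rho$ and pdf $p(\omega) = \cos\theta/\pi$. Symbols are chosen here for illustration: $L_e$ is emitted and $L_i$ incoming radiance, and $\theta_k$ is the angle between the shading normal and the $k$-th of $N$ sampled directions $\omega_k$.

$$
\frac{f_r\,L_i(\mathbf{x},\omega_k)\cos\theta_k}{p(\omega_k)}
= \frac{\frac{\rho}{\pi}\,L_i(\mathbf{x},\omega_k)\cos\theta_k}{\frac{\cos\theta_k}{\pi}}
= \rho\,L_i(\mathbf{x},\omega_k)
$$

$$
L_o(\mathbf{x},\omega_o) \approx L_e(\mathbf{x},\omega_o) + \frac{\rho}{N}\sum_{k=1}^{N} L_i(\mathbf{x},\omega_k)
$$

Neither the cosine nor $\pi$ appears in the final estimator. The only variance left comes from $L_i$ itself.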


To construct the orthonormal basis starting from just one basis vector (i.e., the shading normal), you can use one of the methods in this repository.
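
The methods in that repository are not reproduced here. One well-known option is the branchless construction from Duff et al., "Building an Orthonormal Basis, Revisited" (JCGT 2017). The Python sketch below assumes a unit-length normal given as an `(x, y, z)` tuple. The function name is hypothetical.

```python
import math


def orthonormal_basis(n):
    """Return (t, b) such that (t, b, n) is a right-handed orthonormal basis.

    Assumes n is unit length. Branchless construction of Duff et al. (2017);
    copysign also handles n.z == -0.0 without dividing by zero.
    """
    nx, ny, nz = n
    sign = math.copysign(1.0, nz)
    a = -1.0 / (sign + nz)
    c = nx * ny * a
    t = (1.0 + sign * nx * nx * a, sign * c, -sign * nx)
    b = (c, sign + ny * ny * a, -ny)
    return t, b


t, b = orthonormal_basis((0.0, 0.0, 1.0))  # t ≈ (1, 0, 0), b ≈ (0, 1, 0)
```

The returned `t` and `b` can serve directly as the tangent and bitangent when mapping a direction sampled around +z onto the hemisphere around `n`. The local z axis corresponds to `n`.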

To combine all of this in a path tracer, you can take a look at this repository: pick a language you like, and you will easily find the few statements related to these topics.
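
The following is a minimal, self-contained Python sketch that combines the pieces. It is not taken from that repository, and all names are hypothetical. The scene is a "furnace test": the inside of a closed unit sphere whose walls are Lambertian with albedo `rho` and emit a uniform radiance `le`.

Each bounce works as follows:

- It samples a cosine-weighted direction.
- It maps that direction onto the hemisphere around the inward normal with an orthonormal basis.
- It multiplies the throughput by `rho`, because the cosine and the $\pi$ cancel.

With a fixed depth `D`, every path returns exactly `le * (1 - rho**D) / (1 - rho)`. This tends to `le / (1 - rho)` as `D` grows.

```python
import math
import random

# Hypothetical furnace test: inside a closed unit sphere whose walls are
# Lambertian (albedo rho) and emit a uniform radiance le everywhere.


def sample_cosine_hemisphere(u1, u2):
    """Cosine-weighted direction around +z (pdf = cos(theta) / pi)."""
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))


def orthonormal_basis(n):
    """Tangent and bitangent for unit normal n (Duff et al. 2017 construction)."""
    nx, ny, nz = n
    sign = math.copysign(1.0, nz)
    a = -1.0 / (sign + nz)
    c = nx * ny * a
    return (1.0 + sign * nx * nx * a, sign * c, -sign * nx), (c, sign + ny * ny * a, -ny)


def local_to_world(d, t, b, n):
    """Map local d = (x, y, z) to x*t + y*b + z*n."""
    return tuple(d[0] * t[i] + d[1] * b[i] + d[2] * n[i] for i in range(3))


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def hit_unit_sphere_from_inside(p, d):
    """Distance along unit direction d from p (inside or on the sphere) to the wall."""
    b = dot(p, d)
    c = dot(p, p) - 1.0
    return -b + math.sqrt(max(0.0, b * b - c))


def trace_path(rng, rho, le, max_bounces):
    """Estimate the radiance arriving at the sphere's center along +z."""
    p, d = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    radiance, throughput = 0.0, 1.0
    for _ in range(max_bounces):
        t_hit = hit_unit_sphere_from_inside(p, d)
        q = tuple(p[i] + t_hit * d[i] for i in range(3))
        length = math.sqrt(dot(q, q))
        p = tuple(x / length for x in q)   # snap the hit point back onto the wall
        n = tuple(-x for x in p)           # inward-facing shading normal
        radiance += throughput * le        # emitted radiance at this hit
        # Next direction: cosine-weighted sample, moved to the hemisphere around n.
        t, b = orthonormal_basis(n)
        d = local_to_world(sample_cosine_hemisphere(rng.random(), rng.random()), t, b, n)
        # f_r * cos / pdf = (rho / pi) * cos / (cos / pi) = rho
        throughput *= rho
    return radiance


rng = random.Random(0)
rho, le, depth = 0.5, 1.0, 10
estimate = sum(trace_path(rng, rho, le, depth) for _ in range(100)) / 100
print(estimate, le * (1.0 - rho ** depth) / (1.0 - rho))  # estimate vs. closed form
```

Because the scene is uniform, the sampled directions do not change the result. That makes it a handy check that the sampling, the basis construction, and the cosine/$\pi$ cancellation are wired up correctly. In a non-uniform scene, the estimate varies per path. The fixed depth keeps the sketch short; a real path tracer would typically add Russian roulette to terminate paths without bias.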