2 hours ago, ChenMo said:

But I'm confused about whether albedo should be taken into the rendering equation. For example, if a ray hits a red area, its reflected color will be red too, but if I don't take albedo into the calculation, the resulting irradiance won't be red, so I lose the red bleeding when sampling the lightmap.

I did not notice yesterday that this was your problem.

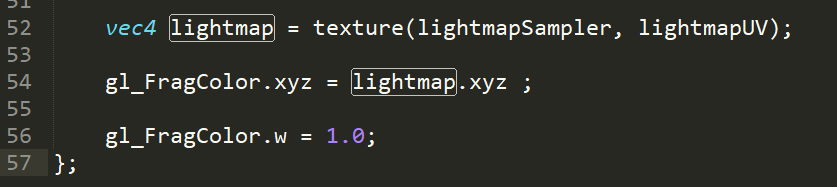

In the shader you do something like this for diffuse:

vec3 reflect = componentWiseMul (unlit albedo from material texture, irradiance from lightmap texture)

vec3 emit = light emitted from material, if you have this

vec3 fragmentColor = reflect + emit

Here componentWiseMul (a, b) returns vec3 (a.x*b.x, a.y*b.y, a.z*b.z).

Shading languages do this anyway when you multiply two vectors, but writing it out makes clear that a diffuse red surface can only reflect red light, and only if the received light contains red as well.
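The same composition as a minimal runnable sketch, written in Python only so it can be tried outside a shader. The names `component_wise_mul` and `shade_diffuse` are made up for illustration, colors are plain RGB tuples, and any 1/Pi normalization is assumed to be baked into the lightmap value already.

```python
def component_wise_mul(a, b):
    """Multiply two RGB triples channel by channel."""
    return (a[0] * b[0], a[1] * b[1], a[2] * b[2])


def shade_diffuse(albedo, irradiance, emitted=(0.0, 0.0, 0.0)):
    """Fragment color = albedo * light from the lightmap + emitted light."""
    reflected = component_wise_mul(albedo, irradiance)
    return tuple(r + e for r, e in zip(reflected, emitted))


red_albedo = (1.0, 0.0, 0.0)
white_light = (1.0, 1.0, 1.0)
green_light = (0.0, 1.0, 0.0)

# A red surface under white light reflects only the red part.
print(shade_diffuse(red_albedo, white_light))
# Under pure green light it has nothing to reflect and stays black.
print(shade_diffuse(red_albedo, green_light))
```

The second call is the interesting one: the albedo acts as a per-channel filter on whatever light the lightmap delivers.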

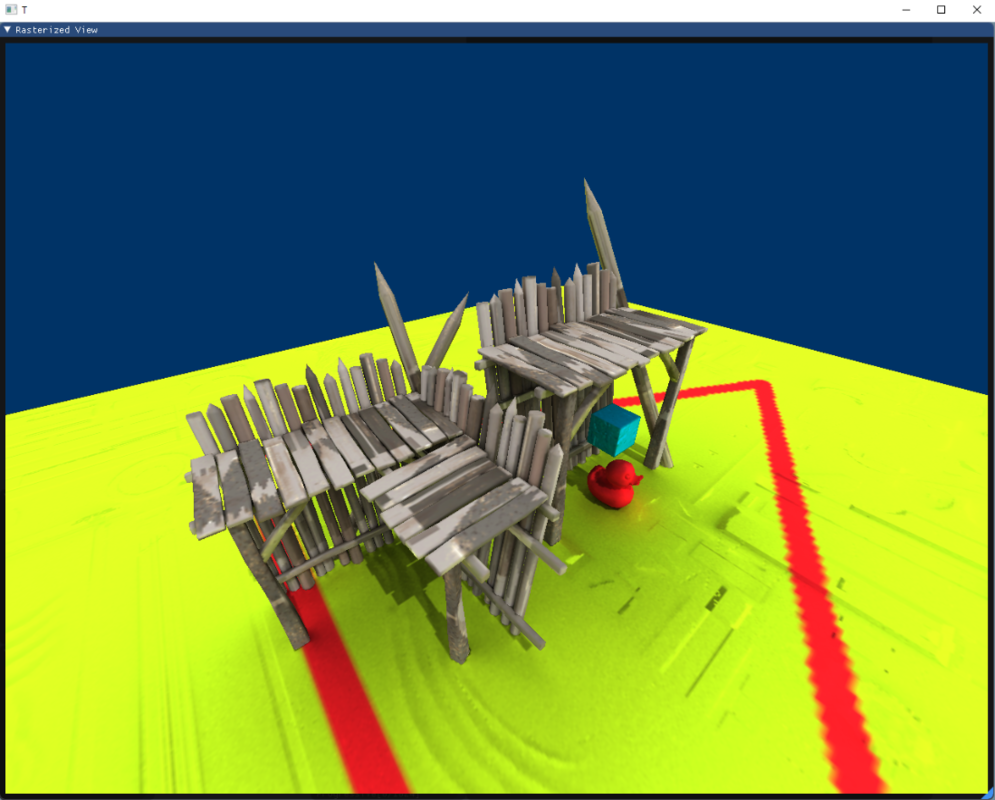

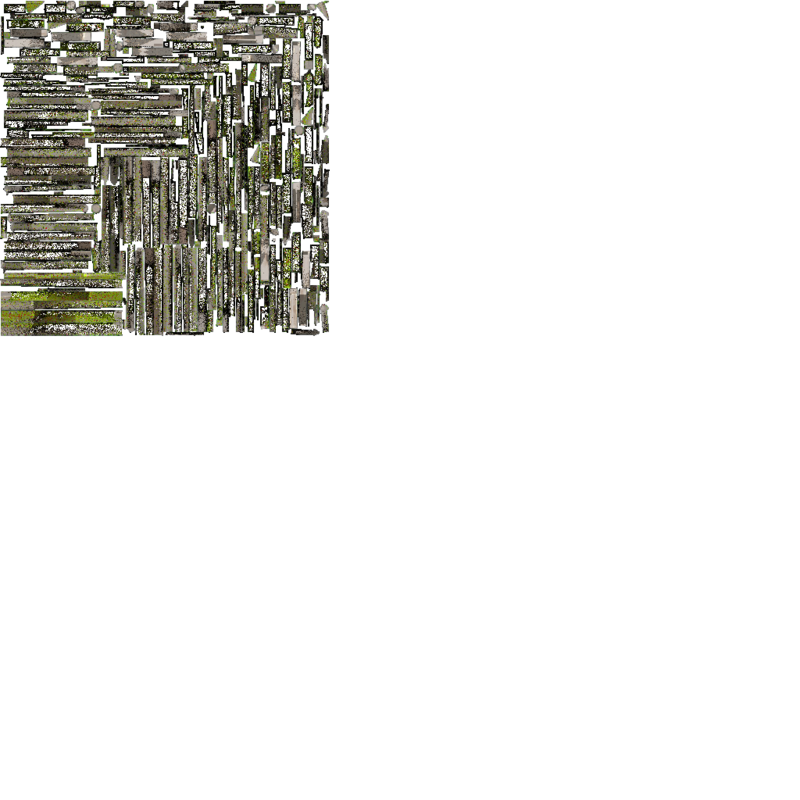

I wonder why you ask, because you obviously do this correctly in the path tracer. The idea is always to store only information that is independent of the surface data, so you stay flexible with shading, resolution, reusing the same texture on multiple surfaces, etc. So here you store only the incoming light at the surface (irradiance) in the lightmap and calculate the outgoing light on the fly.
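To show where the red bleeding comes from under this split, here is a rough sketch of the baking side in Python. It is an assumption about how such a baker could look, not the code from this thread: `gather_texel` and `outgoing_light` are hypothetical names, the rays are assumed to be cosine weighted so a plain average is enough, and the stored value is assumed to be already normalized by Pi. The albedo of the surfaces the rays hit is applied, but the albedo of the receiving texel never is.

```python
def mul(a, b):
    return tuple(x * y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def outgoing_light(albedo, stored_light, emitted=(0.0, 0.0, 0.0)):
    """Light leaving a diffuse surface: its albedo filters the light it received."""
    return add(mul(albedo, stored_light), emitted)


def gather_texel(hits):
    """Average the light arriving at one lightmap texel.

    hits: list of (albedo, stored_light, emitted) for the surfaces the rays hit.
    The receiver's own albedo is deliberately not part of this function.
    """
    total = (0.0, 0.0, 0.0)
    for albedo, stored_light, emitted in hits:
        total = add(total, outgoing_light(albedo, stored_light, emitted))
    return tuple(c / len(hits) for c in total)


black = (0.0, 0.0, 0.0)
red_wall = ((1.0, 0.0, 0.0), (1.0, 1.0, 1.0), black)  # red wall lit by white light
dark = (black, black, black)                          # ray that finds no light

texel = gather_texel([red_wall, dark])        # stored in the lightmap
floor_color = outgoing_light((1.0, 1.0, 1.0), texel)  # applied at shading time
print(texel, floor_color)
```

The stored texel is already reddish because the red wall's albedo went into the light it sent out, so a white floor sampling that texel picks up the bleeding.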

2 hours ago, orange451 said:

Is there any information on why the final light color is written after dividing by PI?

I struggled with this a decade ago and have forgotten the reason for my confusion, but usually you integrate incoming light over the unit hemisphere projected onto the unit disk in the plane perpendicular to the normal. The unit disk has an area of Pi, so the division is necessary at some point, because that area is irrelevant when shading a pixel and has to be normalized out.
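A quick numeric check of where the Pi comes from, as a Python sketch. Projecting the hemisphere onto the disk is the same as weighting each direction by cos(theta), so the integral of cos(theta) over the hemisphere should come out as the disk area. The function name and step count are arbitrary choices for illustration.

```python
import math


def cosine_weighted_hemisphere_integral(steps=1000):
    """Midpoint-rule estimate of the integral of cos(theta) over the hemisphere."""
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        # Solid angle of the ring at angle theta from the normal.
        ring = 2.0 * math.pi * math.sin(theta) * d_theta
        total += math.cos(theta) * ring
    return total


integral = cosine_weighted_hemisphere_integral()

# Uniform incoming light of 1 from every direction, white diffuse surface.
uniform_light = 1.0
irradiance = uniform_light * integral
reflected = irradiance / math.pi  # the division that removes the disk area

print(integral, reflected)
```

Without the division, a white surface under uniform light of 1 would come out roughly 3.14 times too bright; with it, the surface returns the same 1 it received.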

An example would be the small radiosity solver in this post: https://www.gamedev.net/projects/380-real-time-hybrid-rasterization-raytracing-engine/ Unfortunately the math there is optimized and not very clear. Also, ray tracing replaces the concept of sample area with ray density or weighting, so the math looks different there, but it may still help to see how surface and lighting data are separated.