Hi all,

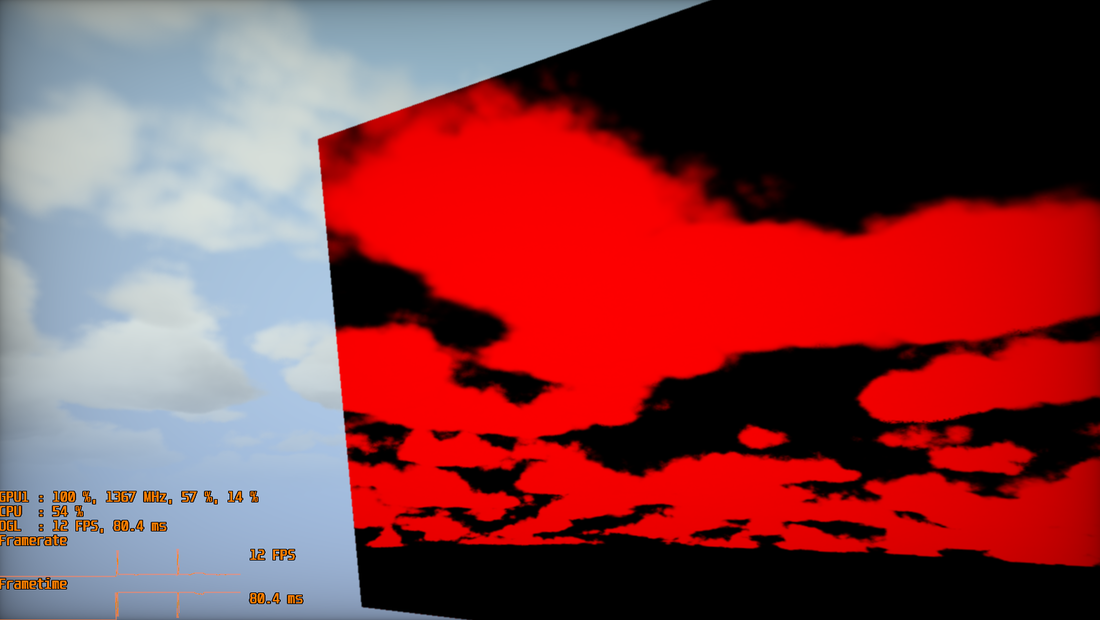

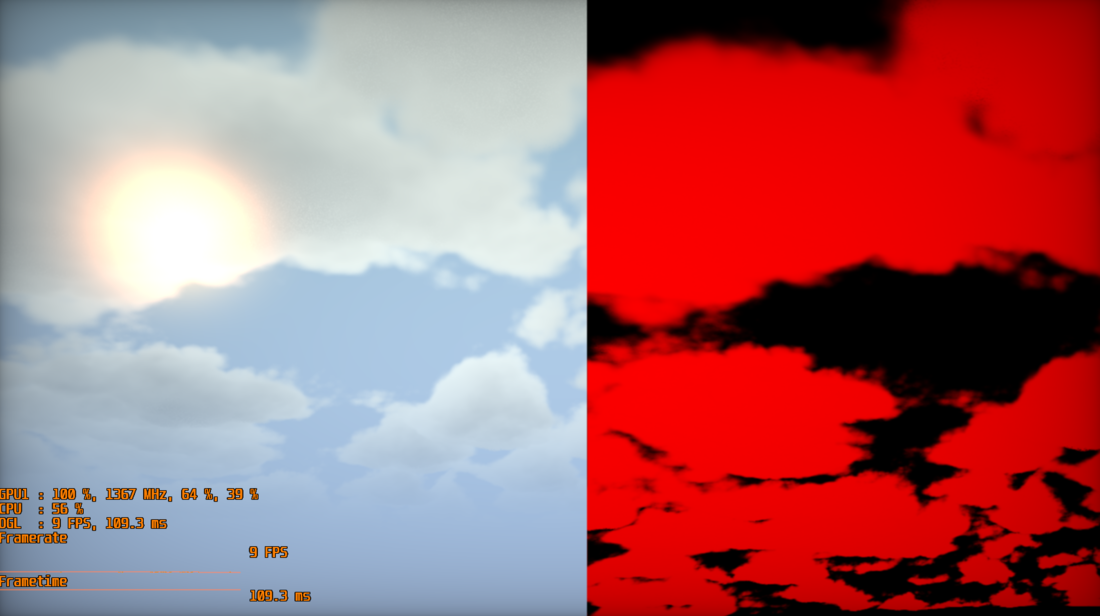

Recently I've been working (as a hobbyist) on volumetric cloud rendering (something we've discussed in the other topic). I've reached a visually satisfying result, but performance is quite poor: about 10-15 fps on a GTX 960 at 900p, and I'm trying to improve it. At the moment the only optimization is an early exit on low transmittance (high alpha). I'd also like to add an early exit on high transmittance, because when cloud coverage is low the performance drops a lot, since most rays never reach the existing early exit. This requires keeping the last frame in memory and checking, at the corresponding UV coordinates in that frame, whether the transmittance there was high.
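To make the idea concrete, here is a rough sketch of the check I have in mind. It is not working code from my project: `prevFrame`, `HIGH_TRANSMITTANCE` and `wasClearLastFrame` are placeholder names, and I'm assuming the previous frame stores alpha as 1 - transmittance and that GLSL 1.30+ is available for `texture()`.

```glsl
// Placeholder uniform: last frame's cloud buffer.
// Assumption: alpha = 1.0 - transmittance.
uniform sampler2D prevFrame;

// Assumed threshold; would need tuning.
const float HIGH_TRANSMITTANCE = 0.99;

// Returns true if the reprojected pixel was (almost) fully transparent
// in the previous frame, so the raymarch could be skipped or shortened.
bool wasClearLastFrame(vec2 prevUV) {
    // No history if the reprojected position falls outside the previous frame.
    if (any(lessThan(prevUV, vec2(0.0))) || any(greaterThan(prevUV, vec2(1.0)))) {
        return false;
    }
    float prevAlpha = texture(prevFrame, prevUV).a;
    return (1.0 - prevAlpha) >= HIGH_TRANSMITTANCE;
}
```

One thing I'm aware of: skipping purely on history would probably miss clouds that drift into a pixel that was clear before, so some periodic refresh would likely be needed.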

I'm having trouble finding the correct UV coordinates. Currently I'm doing it this way:

    // Pixel position in normalized device coordinates, placed on the near plane (z = -1)
    vec3 computeClipSpaceCoord(){
        vec2 ray_nds = 2.0*gl_FragCoord.xy/iResolution.xy - 1.0;
        return vec3(ray_nds, -1.0);
    }

    // Map NDC xy in [-1, 1] to texture coordinates in [0, 1]
    vec2 computeScreenPos(vec2 ndc){
        return (ndc*0.5 + 0.5);
    }

    // for picking previous frame color
    vec4 ray_clip = vec4(computeClipSpaceCoord(), 1.0);
    // unproject to world space with the current frame's inverse view-projection
    vec4 camToWorldPos = invViewProj*ray_clip;
    camToWorldPos /= camToWorldPos.w;
    // project that world position with the previous frame's view-projection
    vec4 pPrime = oldFrameVP*camToWorldPos;
    pPrime /= pPrime.w;
    vec2 prevFrameScreenPos = computeScreenPos(pPrime.xy);

I then use prevFrameScreenPos to sample the last frame's texture. This (obviously) doesn't work. I suspect the issue is that I set the z coordinate to -1.0 in computeClipSpaceCoord(), ignoring the depth of the fragment. But how can I determine the depth of a fragment in volume rendering, since everything is done by raymarching in the fragment shader?
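One idea I might try, and I'm not sure it's right, is to reproject a world-space point at some representative distance along the view ray instead of the near-plane point. The sketch below is untested: `cameraPos`, `reprojectAtDistance` and `dist` are names I made up, it assumes OpenGL-style NDC with a finite far plane at z = 1, and what `dist` should be (for example the distance where the ray enters the cloud layer) is exactly what I don't know.

```glsl
uniform mat4 invViewProj;  // inverse of the current frame's view-projection
uniform mat4 oldFrameVP;   // previous frame's view-projection
uniform vec3 cameraPos;    // assumed: current camera position in world space
uniform vec2 iResolution;  // viewport size in pixels

// Reprojects the point lying `dist` world units along this pixel's view ray
// into the previous frame. Returns UVs in [0, 1]; `valid` is false when the
// point was behind the old camera or outside the old frame.
vec2 reprojectAtDistance(float dist, out bool valid) {
    vec2 ndc = 2.0 * gl_FragCoord.xy / iResolution.xy - 1.0;

    // Unproject a far-plane point (z = 1) to recover the world-space ray direction.
    vec4 farPoint = invViewProj * vec4(ndc, 1.0, 1.0);
    farPoint /= farPoint.w;
    vec3 rayDir = normalize(farPoint.xyz - cameraPos);

    // Representative world-space position for this pixel.
    vec3 worldPos = cameraPos + rayDir * dist;

    // Project with the previous frame's matrices.
    vec4 prevClip = oldFrameVP * vec4(worldPos, 1.0);
    if (prevClip.w <= 0.0) {
        valid = false;
        return vec2(0.0);
    }
    vec2 uv = (prevClip.xy / prevClip.w) * 0.5 + 0.5;
    valid = all(greaterThanEqual(uv, vec2(0.0))) && all(lessThanEqual(uv, vec2(1.0)));
    return uv;
}
```

If the camera only rotates, the chosen distance shouldn't matter; it only affects the result once the camera translates, which is where I'd expect the near-plane version to give the wrong parallax.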

In any case, this seems to be the key to temporal reprojection. I wasn't able to find anything about it. Do you have any resources or advice on implementing this?

Thank you all for your help.