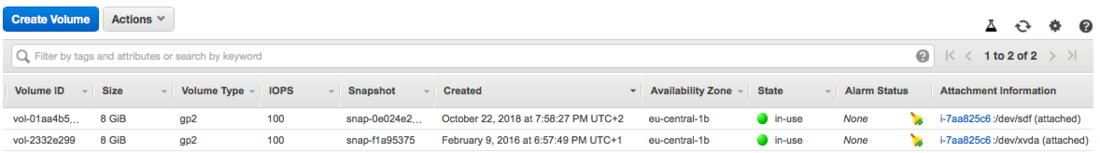

The information from lsblk doesn't seem to match the information in the console.

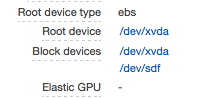

Specifically, the console says "root" is xvda, but your instance mounts xvdf1 on "/".

My guess is that the instance initially boots from xvda (the original image) but then mounts xvdf (the second volume) as the "/" file system. So you are, indeed, running on two volumes, but the first volume is only used during early boot, when the instance is first "turned on."
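If you want to double-check from inside the instance which device really backs "/", here is a minimal Python sketch. It assumes text in the usual `/proc/mounts` layout (source, mount point, type, options). The sample text and the `root_source` helper are made up for illustration; they are not taken from your screenshots.

```python
def root_source(mounts_text):
    """Return the source device mounted on "/" in /proc/mounts-style text."""
    source = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # fields[0] is the source device, fields[1] is the mount point
        if len(fields) >= 2 and fields[1] == "/":
            source = fields[0]  # a later mount on "/" shadows an earlier one
    return source


# Hypothetical sample, shaped like the situation described above.
SAMPLE_MOUNTS = """\
proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0
/dev/xvdf1 / ext4 rw,relatime 0 0
tmpfs /run tmpfs rw,nosuid,nodev 0 0
"""

print(root_source(SAMPLE_MOUNTS))
```

On the instance itself you would pass in `open("/proc/mounts").read()` instead of the sample and compare the result with the "root" device the console reports.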

I imagine you could get rid of the xvda volume by shutting down the instance, then reconfiguring it to use xvdf as the "root" and detaching the xvda volume. (This may end up renaming the volume known as xvdf to xvda from the point of view of the instance, btw; I'm not sure what EC2 does in that case.)

There really is a four-level mapping that you need to follow here:

1) EBS volumes contain "blocks" of data. Raw data, much like a raw hard disk, or a stick of RAM for that matter. EBS volumes have volume IDs, and perhaps user-given labels/names for ease of access, but they aren't "devices" by themselves.

2) EC2 instances attach specific EBS volumes (by volume ID) to specific local device names (like xvda or xvdf). For historical reasons, the configuration panel may refer to devices as "sd<y>" when the instance actually sees the name "xvd<y>."

3) Data on the raw block devices is structured by a partition table, which defines ranges of the raw device as "smaller raw devices." These are called xvda1 and xvdf1 on your instance, and in this case the partition table probably just says "map the entire raw volume as a single partition."

4) Within a partition, raw data is arranged in various ways based on partition type. A "swap" partition, for example, is raw storage that holds what was in RAM when that RAM is needed for something else. For file systems on Linux, you typically make an ext4 file system on the partition. File systems are then made available to the OS by "mounting" them at some path. Some file system needs to be mounted on the "/" path, but you can mount additional devices on other paths to put different parts of the system on different volumes. This is useful for growing particular parts of the file system, or for making sure that, say, usage in the "/var/log" directory can't consume all the storage and leave no space in "/home" or somesuch.
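To make levels 2 and 3 a bit more concrete, here is a small Python sketch of the naming relationships described above. It assumes Xen-style names of the form "xvd<y>" with an optional partition number. The helper names are hypothetical, and the actual device names depend on the kernel and instance type, so treat this as an illustration of the layering rather than a rule EC2 guarantees.

```python
import re

# Assumed naming pattern: "xvd<letters>" for a whole disk, with an optional
# trailing partition number, e.g. "xvdf" or "xvdf1".
_XVD = re.compile(r"(xvd[a-z]+)(\d+)?")


def split_partition(name):
    """Split "xvdf1" into ("xvdf", 1); a whole disk like "xvda" gives ("xvda", None)."""
    match = _XVD.fullmatch(name)
    if match is None:
        raise ValueError(f"not an xvd-style device name: {name!r}")
    disk, number = match.groups()
    return disk, (int(number) if number else None)


def console_style_name(disk):
    """Map "xvdf" to the "sdf" spelling the configuration panel may show."""
    return "sd" + disk[len("xvd"):]


# Walk from the mounted partition back towards the attached volume.
disk, partition = split_partition("xvdf1")
print(disk, partition, console_style_name(disk))
```

Reading it bottom-up: the file system on "/" lives in partition 1 of disk xvdf, and xvdf is the device the console may list as sdf, which in turn points at one particular EBS volume.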