Hi all,

After seeing some missing pixels along the edges of meshes that sit beside each other (connected), I first thought it was specific to those meshes.

But after testing the following, I still see this occurring:

- create a flat grid/plane, without textures

- render it in plain black against a white background (the buffer clear color)

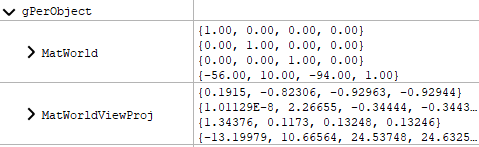

When I draw this 4x4 plane twice, e.g. at (0, 0) and (4, 0) in XZ, I still see those strange 'see-through' pixels along the shared edge (I'm aiming for properly connected planes).

After quite some researching and testing (disabling the skybox, changing the buffer clear color, disabling the depth buffer, etc.), I still keep getting this.

I've been reading up on 'T-junction' issues, but I don't think that's the case here (the verts all line up nicely).
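To double-check the 'verts line up' claim, here is a minimal sketch of how the shared edge could be tested for T-junctions. It assumes each plane is a regular grid with its corner at the draw position; the helper names (`grid_vertices`, `edge_vertices`, `has_t_junction`) are made up for illustration and are not from any engine.

```python
def grid_vertices(origin_x, origin_z, size, cells):
    """Return the (x, z) vertices of a flat grid whose corner sits at the origin offset."""
    step = size / cells
    return [(origin_x + i * step, origin_z + j * step)
            for j in range(cells + 1)
            for i in range(cells + 1)]


def edge_vertices(vertices, x):
    """Vertices lying exactly on the line X == x, sorted along Z."""
    return sorted(v for v in vertices if v[0] == x)


def has_t_junction(plane_a, plane_b, shared_x):
    """True if the two planes disagree about the vertices on their shared edge."""
    return edge_vertices(plane_a, shared_x) != edge_vertices(plane_b, shared_x)


# Two 4x4 planes at (0, 0) and (4, 0), both with 4 cells per side (assumed resolution).
plane_a = grid_vertices(0.0, 0.0, 4.0, 4)
plane_b = grid_vertices(4.0, 0.0, 4.0, 4)

# A hypothetical neighbour with a coarser grid, to show what a mismatch looks like.
coarse_b = grid_vertices(4.0, 0.0, 4.0, 2)

print(has_t_junction(plane_a, plane_b, 4.0))   # matching edge vertices
print(has_t_junction(plane_a, coarse_b, 4.0))  # neighbour skips some edge vertices
```

If the first check reports no mismatch, T-junctions can probably be ruled out and the cause is more likely somewhere in the transform or rasterization path.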

As a workaround for now, I've turned the black foundation planes (below the scene) into boxes with minimal height and with the bottom triangles removed. That way the 'holes' are no longer visible, because the boxes have sides. Here's a screenshot of what I'm getting.

I wonder if someone has thoughts on this: is this 'normal', do studios work around it, etc.?

There might be some rounding issues, but I wouldn't expect that with whole numbers like these (all ,0).
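On the rounding point, one thing that could be checked is whether both planes reach the rasterizer with bit-identical positions for the shared edge. The sketch below is only an illustration under assumptions: plain Python floats instead of GPU float32, a made-up `view_proj` matrix, and hypothetical helpers (`mat_vec`, `translate`, `to_clip`). It transforms each vertex to world space first (exact for whole-number offsets) and then applies the same view-projection matrix to both planes, so the shared edge goes through identical arithmetic.

```python
def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component column vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(4)) for r in range(4))


def translate(tx, ty, tz):
    """World matrix that only translates."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def to_clip(local, world, view_proj):
    """Local -> world -> clip space, using one shared view-projection matrix."""
    world_pos = mat_vec(world, local)     # whole-number offsets add exactly
    return mat_vec(view_proj, world_pos)  # same inputs, same operations


# Arbitrary, made-up view-projection matrix (not taken from any real camera).
view_proj = [[0.7503, 0.0, -0.3121, 0.1],
             [0.1337, 1.2071, 0.3214, -2.3],
             [0.2, -0.5, 0.9, 10.1],
             [0.2, -0.5, 0.9, 10.0]]

# Right edge of plane A (local x = 4) and left edge of plane B (local x = 0, moved by +4).
a = to_clip((4.0, 0.0, 1.0, 1.0), translate(0.0, 0.0, 0.0), view_proj)
b = to_clip((0.0, 0.0, 1.0, 1.0), translate(4.0, 0.0, 0.0), view_proj)

print(a == b)  # identical world positions give identical clip positions
```

If each plane is instead drawn with its own pre-multiplied world-view-projection matrix, the two edges go through different arithmetic and may differ in the last bits even though the world-space numbers are whole, which is one possible (unconfirmed) source of the see-through pixels.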