I'm trying to recognize some motions. This is a hobby project on an Arduino chip, so most major libraries are out, I have only a few dozen bytes of memory available, and machine learning algorithms aren't an option. The device has an accelerometer and a gyroscope. I'll be attaching it to a sport kite on a 40 meter / 120 ft line, but since the crowd here is more familiar with widescreen boxes, I'll use one for easier descriptions.

Sadly, my last linear algebra class was two decades ago and I haven't worked on this type of problem in years, so I need to ask.

I have access to the raw acceleration data, which tells me the orientation. When the device is in the normal position (widescreen, facing up) I get a fairly stable acceleration vector of approximately (1, 0, 0). If I twist it so it stands in portrait facing right, I get (0, 1, 0); twisted to portrait facing left, I get (0, -1, 0). There is some drift and some value in the z acceleration because humans are involved, but when motion stops I get a unit-length vector for 1 g.

I also have access to gyroscope data. When the device is in the normal position (widescreen, facing up) I get a fairly stable gyroscope value, and I can use the per-interval data. For reference: X -90 degrees means the left side is toward the human and the right side is away; X +90 means the right side is toward the human and the left side is away. Y -90 is the "normal" upright position, 0 means it has been set down and is lying on its back, and Y -180 means it has been flipped over so it is lying on its face. Z +90 is clockwise rotation and Z -90 is counterclockwise rotation.
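If the Z reading is an absolute angle that wraps around at ±180 degrees (an assumption; the wrap behavior isn't stated above), consecutive samples need a wrapped difference before they can be turned into a rotation rate. A minimal sketch with hypothetical function names:

```cpp
// Signed difference between two angle readings in degrees, wrapped into
// [-180, 180). When a reading jumps from +179 to -179, the true change is
// +2 degrees, not -358.
float angleDeltaDeg(float fromDeg, float toDeg) {
  float d = toDeg - fromDeg;
  while (d >= 180.0f) d -= 360.0f;
  while (d < -180.0f) d += 360.0f;
  return d;
}

// Rotation rate in degrees per second from two Z-angle samples taken
// dtSeconds apart. Positive means clockwise, following the Z +90 =
// clockwise convention described above.
float zRateDegPerSec(float prevZDeg, float currZDeg, float dtSeconds) {
  return angleDeltaDeg(prevZDeg, currZDeg) / dtSeconds;
}
```

If the per-interval data is already a delta rather than an absolute angle, the wrapping step can be skipped.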

The device internally uses both of them to try to self-stabilize, but I don't know those inner details.

Now to what I want to detect.

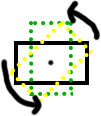

* Spinning, both clockwise and counterclockwise, and the rate
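One low-memory way to get spin direction and rate is to smooth the Z rotation rate with an exponential moving average and compare it against a threshold. This sketch assumes a Z rate in degrees per second with positive meaning clockwise (matching the Z +90 convention above); `kAlpha` and `kThreshold` are guesses to tune, and the names are hypothetical. The state is a single float.

```cpp
enum Spin { SPIN_NONE, SPIN_CW, SPIN_CCW };

// Spin detector state: one float (4 bytes on AVR).
struct SpinDetector {
  float smoothedRate;  // exponentially smoothed Z rate, degrees per second
};

// Feed one Z-rate sample (degrees per second, positive = clockwise).
// Returns the spin direction; the smoothed rate is left in d->smoothedRate.
Spin updateSpin(SpinDetector *d, float zRateDegPerSec) {
  const float kAlpha = 0.2f;       // assumed smoothing factor (0..1)
  const float kThreshold = 90.0f;  // assumed: slower than this is "not spinning"
  d->smoothedRate += kAlpha * (zRateDegPerSec - d->smoothedRate);
  if (d->smoothedRate >  kThreshold) return SPIN_CW;
  if (d->smoothedRate < -kThreshold) return SPIN_CCW;
  return SPIN_NONE;
}
```

The sign of `smoothedRate` gives the direction and its magnitude gives the rate; a smaller `kAlpha` rejects more jitter at the cost of a slower response.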

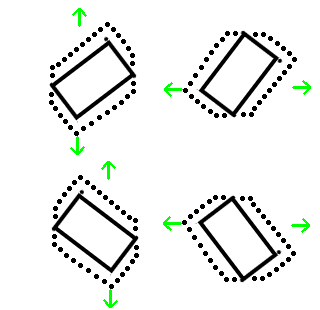

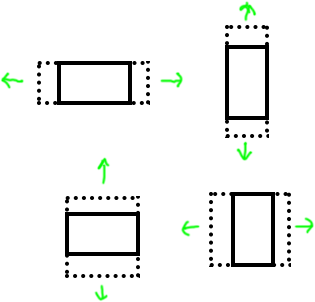

* Strong acceleration toward right, left, top, or bottom edge.

I think these are straightforward. The gyro angles remain basically stable, but the acceleration increases. In the widescreen left/right shake I get an acceleration that varies around (1, variable, 0), where the variable part runs in the ±0.5 range, more or less depending on how fast it is moved. In the portrait up/down shake I get (0, variable, 0), where the value starts at +1 or -1 depending on which way is up, then shifts by up to about 0.5 depending on the direction of motion. In all of these cases the rotations stay basically constant.

In the widescreen up/down shake I get (variable, 0, 0), and in the portrait left/right shake I get (variable, ±1, 0), again with the rotation angles basically constant.

Each of these can set the flag to right, left, top, or bottom.
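Separating gravity from motion is what lets these flags work in any orientation. A common approach (a swapped-in technique, not something the device is known to do internally) is to low-pass filter the raw reading to estimate gravity and subtract it. A minimal sketch, assuming readings in g and a fixed sample rate; `kAlpha` and the names are hypothetical:

```cpp
// Slowly tracking estimate of gravity in the device frame (12 bytes).
struct GravityFilter {
  float gx, gy, gz;
};

// Low-pass the raw accelerometer reading (in g) to estimate gravity, then
// subtract it so that lx, ly, lz hold only the acceleration caused by motion.
// Gravity is tracked in the device frame, so this works in any orientation:
// in the widescreen left/right shake and the portrait up/down shake it is
// ly that swings; in the other two shakes it is lx.
void updateGravity(GravityFilter *f, float ax, float ay, float az,
                   float *lx, float *ly, float *lz) {
  const float kAlpha = 0.05f;  // assumed; smaller = slower gravity tracking
  f->gx += kAlpha * (ax - f->gx);
  f->gy += kAlpha * (ay - f->gy);
  f->gz += kAlpha * (az - f->gz);
  *lx = ax - f->gx;
  *ly = ay - f->gy;
  *lz = az - f->gz;
}
```

Two caveats: an acceleration held for a long time slowly leaks into the gravity estimate, and fast rotation moves gravity between axes faster than the filter follows, so spins can show up as false linear acceleration.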

This one I can detect because the Z axis changes while the magnitude of the acceleration vector stays basically constant at 1.0.
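The magnitude test can be done without a square root by comparing squared values. A minimal sketch that labels one sample, relative to the previous one, as still, tilting, or shaking; the tolerances are assumed values to tune and the names are hypothetical:

```cpp
enum MotionKind { MOTION_STILL, MOTION_TILT, MOTION_SHAKE };

// Compare two consecutive accelerometer samples (in g). If |a| stays near
// 1 g while the direction moves (z changing, for example), the device is
// being tilted rather than accelerated.
MotionKind classifyMotion(float px, float py, float pz,
                          float ax, float ay, float az) {
  const float kMagTol = 0.15f;     // assumed tolerance on |a| - 1 g
  const float kDirChange = 0.05f;  // assumed minimum change per sample, in g
  float mag2 = ax * ax + ay * ay + az * az;
  // |a| in [1 - tol, 1 + tol]  <=>  |a|^2 in [(1 - tol)^2, (1 + tol)^2]
  float lo = (1.0f - kMagTol) * (1.0f - kMagTol);
  float hi = (1.0f + kMagTol) * (1.0f + kMagTol);
  if (mag2 < lo || mag2 > hi) return MOTION_SHAKE;
  float dx = ax - px, dy = ay - py, dz = az - pz;
  if (dx * dx + dy * dy + dz * dz > kDirChange * kDirChange) return MOTION_TILT;
  return MOTION_STILL;
}
```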

For this one I can pull out the rotation easily.

Now for the hard ones:

For these, my intuition tells me that at rest I should see about (0.7, 0.7, 0) with various sign combinations, plus acceleration variance on both axes, while the rotation angle stays basically constant.
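The 0.7 intuition matches the geometry: if the device is rolled by an angle θ about its z axis, the resting gravity reading in the device's x-y plane is (cos θ, sin θ, 0), and cos 45° = sin 45° = √2/2 ≈ 0.707. Under that assumption, and writing l = a - g for the acceleration with gravity removed, θ and the motion expressed relative to gravity (rather than to the device edges) are:

$$
\theta = \operatorname{atan2}(g_y,\ g_x), \qquad
a_{\text{up}} = l_x\cos\theta + l_y\sin\theta, \qquad
a_{\text{side}} = -l_x\sin\theta + l_y\cos\theta
$$

This reproduces the readings above: upright gives θ = 0°, portrait facing right θ = 90°, portrait facing left θ = -90°. Which sign of a_side counts as "right" depends on how the y axis is mounted, so that has to be checked on the hardware.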

However, I can't easily work out in my head which is which. I suspect I could come up with the answer by wrapping my brain around it for a few hours, but if someone knows it offhand and can save me the time, that would help.

I'm looking for an easy way to detect acceleration toward top edge, bottom edge, right edge, or left edge, regardless of orientation.
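In the device frame (where "top edge" means the device's own top edge), a gravity-removed acceleration can be binned into four 90° sectors with atan2, which treats the axis-aligned and diagonal cases the same way. A minimal sketch, assuming +x points to the top edge (gravity reads +x when upright) and +y to the right edge; the threshold and names are hypothetical:

```cpp
#include <math.h>

enum Edge { EDGE_NONE, EDGE_TOP, EDGE_RIGHT, EDGE_BOTTOM, EDGE_LEFT };

// Classify linear acceleration (gravity already removed, in g) into one of
// four 90-degree sectors around the device.
// Assumed axes: +x toward the top edge, +y toward the right edge.
Edge edgeFromAngle(float lx, float ly) {
  const float kThreshold = 0.3f;  // assumed minimum magnitude, in g
  if (lx * lx + ly * ly < kThreshold * kThreshold) return EDGE_NONE;
  // Angle measured from +x (top) toward +y (right), in degrees.
  float deg = atan2f(ly, lx) * (180.0f / 3.14159265f);
  if (deg >= -45.0f && deg < 45.0f)   return EDGE_TOP;
  if (deg >= 45.0f && deg < 135.0f)   return EDGE_RIGHT;
  if (deg >= -135.0f && deg < -45.0f) return EDGE_LEFT;
  return EDGE_BOTTOM;  // |deg| >= 135
}
```

With equal 45° boundaries this gives the same answer as picking the larger of |l_x| and |l_y|; the angle form makes it easy to narrow the sectors or leave a dead zone between them.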

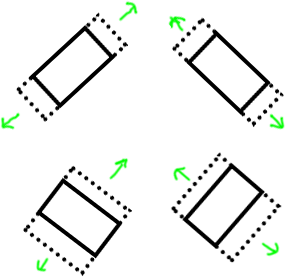

It is this one that has me stumped:

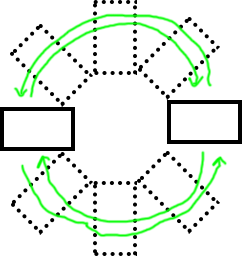

I want to be able to tell when large sweeping arcs are made. These are different from in-place rotation, which is spinning only. I'd like these to show up as right-edge or left-edge, but they aren't detected well at all because the forces vary continuously.
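One possible heuristic (an assumption, not a tested method): a sweeping arc should show a sustained rotation rate and a sustained sideways pull at the same time, while an in-place spin shows rotation with little sideways pull. Averaging both over several samples smooths out the continuously varying forces. A minimal sketch with hypothetical names and thresholds; `sideAccel` is taken to be the gravity-removed acceleration along the device's left/right axis:

```cpp
#include <math.h>

enum ArcResult { ARC_NONE, ARC_LEFT, ARC_RIGHT };

// Leaky averages of the sideways linear acceleration and the Z rotation
// rate: 8 bytes of state.
struct ArcDetector {
  float side;   // smoothed sideways acceleration, g (+ = assumed right edge)
  float zRate;  // smoothed Z rate, degrees per second
};

// Feed one sample per interval. An arc is reported only when BOTH the
// smoothed rotation and the smoothed sideways pull are large; an in-place
// spin (rotation with little sideways pull) returns ARC_NONE here.
ArcResult updateArc(ArcDetector *d, float sideAccel, float zRateDegPerSec) {
  const float kAlpha = 0.1f;     // assumed smoothing factor
  const float kMinSide = 0.2f;   // assumed minimum sustained pull, g
  const float kMinRate = 30.0f;  // assumed minimum turn rate, deg/s
  d->side += kAlpha * (sideAccel - d->side);
  d->zRate += kAlpha * (zRateDegPerSec - d->zRate);
  if (fabsf(d->zRate) < kMinRate || fabsf(d->side) < kMinSide) return ARC_NONE;
  return d->side > 0.0f ? ARC_RIGHT : ARC_LEFT;
}
```

Which edge the sideways pull lands on during a kite turn depends on how the device is mounted, so the mapping from sign to left/right needs to be checked against real flight data.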

My math history tells me it has something to do with atan2 of the X and Y accelerations to get the direction, and then, with some hand-waving involving the current Z direction, I can tell which way it is moving.
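The atan2 idea can be made concrete by rotating the gravity-removed acceleration by the device's roll angle, so the result is relative to gravity instead of to the device edges. A minimal sketch, assuming `gx`, `gy` come from some gravity estimate (for example a low-pass filter) and that roll about z is the only rotation that matters; the names are hypothetical:

```cpp
#include <math.h>

// Acceleration split into components relative to gravity.
struct WorldAccel {
  float up;    // along the gravity reading (toward the sky), in g
  float side;  // perpendicular to gravity in the device x-y plane, in g
};

// gx, gy: gravity estimate in the device x-y plane.
// lx, ly: linear acceleration with gravity removed, in g.
// theta = atan2(gy, gx) is the roll about z; rotating by -theta expresses
// the motion relative to gravity rather than to the device edges.
WorldAccel toGravityFrame(float gx, float gy, float lx, float ly) {
  float theta = atan2f(gy, gx);
  float c = cosf(theta), s = sinf(theta);
  WorldAccel w;
  w.up = lx * c + ly * s;
  w.side = -lx * s + ly * c;
  return w;
}
```

Since cos θ = g_x/|g| and sin θ = g_y/|g| with |g| = sqrt(g_x² + g_y²), the `atan2f`/`cosf`/`sinf` calls can be replaced by one square root and two divisions, which is cheaper on an 8-bit AVR (guard against |g| near zero when the device lies flat). This ignores pitch (the z component), which is where the extra hand-waving with the Z direction would come in.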

Any guidance here?

I'll probably be hammering away at the math for a few days, since the hobby project only gets a few hours on nights and weekends. Any insights that shorten that time are greatly appreciated.