Hi!

I'm trying to use this radial shader on spheres, but without success. The problem is related to screen coordinates. The shader only works if the sphere is in the center of the screen, as this picture shows:

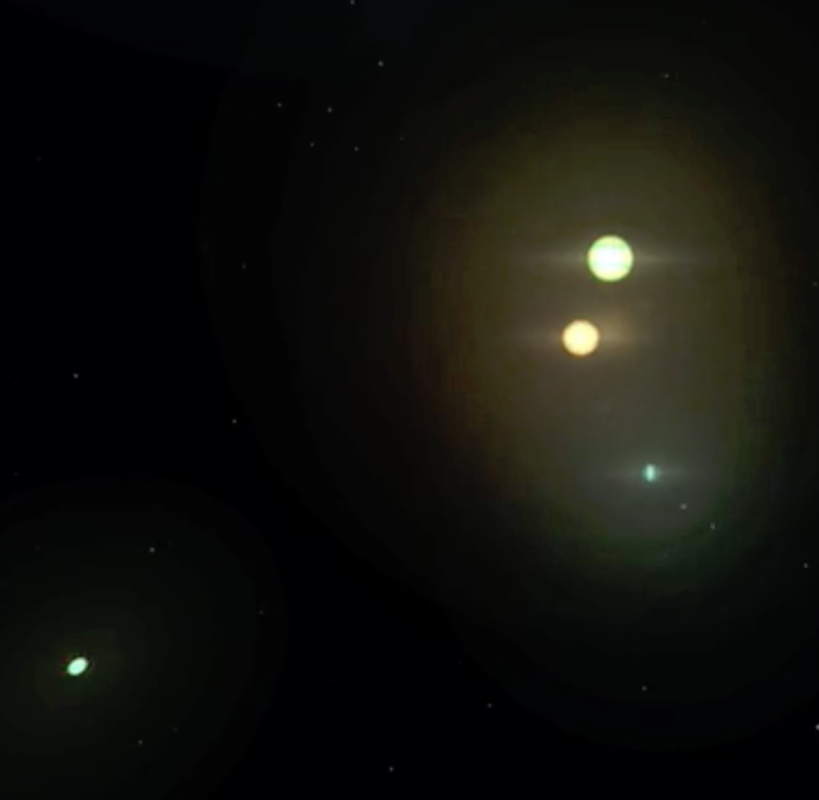

If it is not, this is what happens:

I discovered that if I convert the position of the sphere to screen coordinates with cam.worldToScreen(), the shader works fine, as in this code:

    fbo.begin();
    ofClear(0);
    cam.begin();
    shader.begin();
    sphere.setRadius(10);
    sphere.setPosition(v.x, v.y, v.z);
    sphere.draw();
    shader.end();
    cam.end();
    fbo.end();

    depthFbo.begin();
    ofClear(0);
    fbo.getDepthTexture().draw(0,0);
    ofSetColor(255);
    depthFbo.end();

    radialBuffer.begin();
    ofClear(0);
    radial.begin();
    f = cam.worldToScreen(v);
    radial.setUniform3f("ligthPos", f.x, f.y, f.z);
    radial.setUniformTexture("depth", depthFbo.getTexture(), 1);
    fbo.draw(0,0);
    radial.end();
    radialBuffer.end();

    radialBuffer.draw(0,0);

And this is the shader:

    #version 150

    in vec2 varyingtexcoord;

    uniform sampler2DRect tex0;
    uniform sampler2DRect depth;
    uniform vec3 ligthPos;

    float exposure = 0.19;
    float decay = 0.9;
    float density = 2.0;
    float weight = 1.0;
    int samples = 25;

    out vec4 fragColor;

    const int MAX_SAMPLES = 100;

    void main()
    {
        // float sampleDepth = texture(depth, varyingtexcoord).r;
        //
        // vec4 H = vec4 (varyingtexcoord.x *2 -1, (1- varyingtexcoord.y)*2 -1, sampleDepth, 1);
        //
        // vec4 = mult(H, gl_ViewProjectionMatrix);

        vec2 texCoord = varyingtexcoord;
        vec2 deltaTextCoord = texCoord - ligthPos.xy;
        deltaTextCoord *= 1.0 / float(samples) * density;

        vec4 color = texture(tex0, texCoord);
        float illuminationDecay = .6;

        for(int i=0; i < MAX_SAMPLES; i++)
        {
            if(i == samples){
                break;
            }
            texCoord -= deltaTextCoord;
            vec4 sample = texture(tex0, texCoord);
            sample *= illuminationDecay * weight;
            color += sample;
            illuminationDecay *= decay;
        }

        fragColor = color * exposure;
    }

The problem is that I want to apply this effect to multiple spheres, and I don't know how to do it, because I pass the position of the sphere as a uniform variable to the radial post-processing shader.

What I really want, though, is to find a way to apply this or other shaders to the meshes without having to deal with this problem every time. What if the shader does not have a uniform that gives the position of the object? How do I make a shader work in screen coordinates? I need some light here, because I feel that I'm missing something.

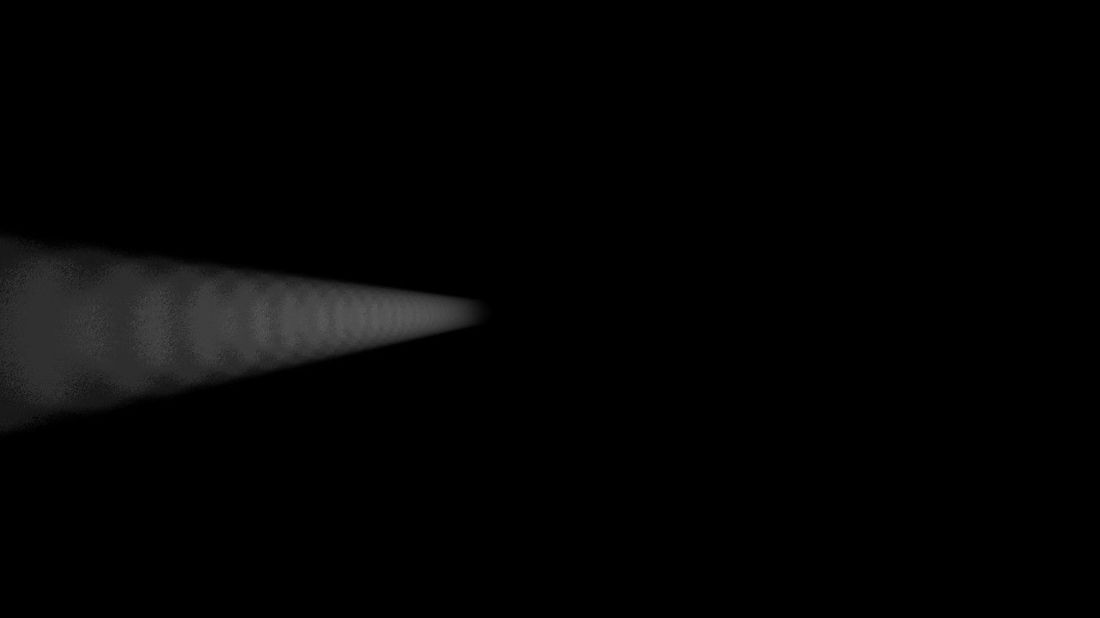

This is an illustrative picture of what I want to do:

But I want post-processing shaders that have information about the objects, so that the effect is computed at the center of each object and not always at the center of the screen. Please, any suggestion will be appreciated.