Hello, I am trying to implement SSAO using DirectX 11, but instead of ambient occlusion I get a white screen with a few black dots on the model:

I am using normal-oriented hemispheres based on this tutorial: https://learnopengl.com/Advanced-Lighting/SSAO

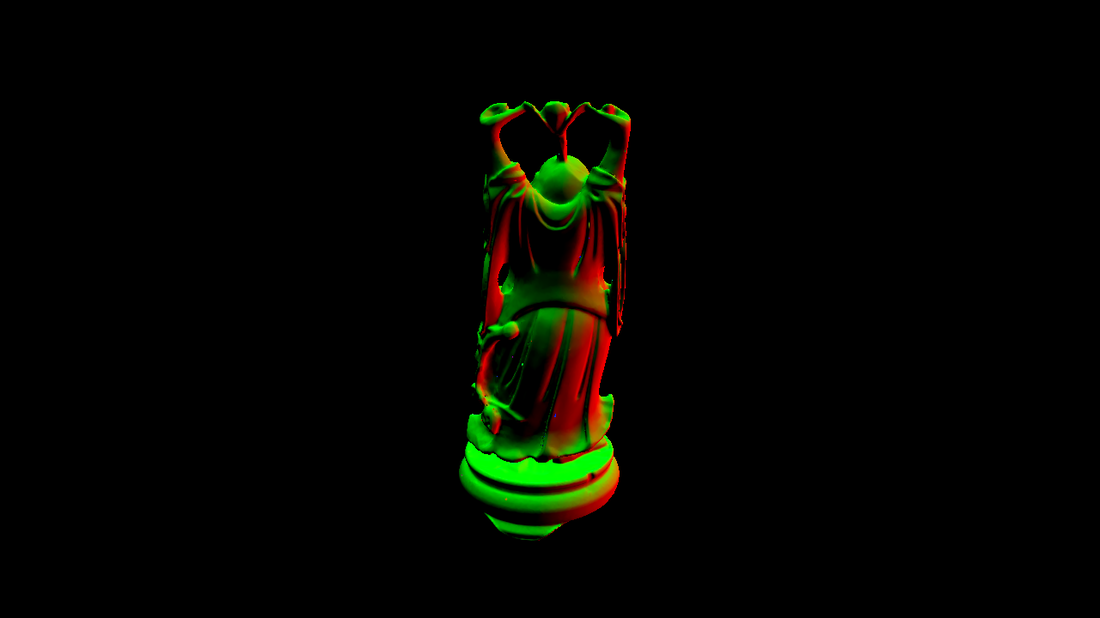

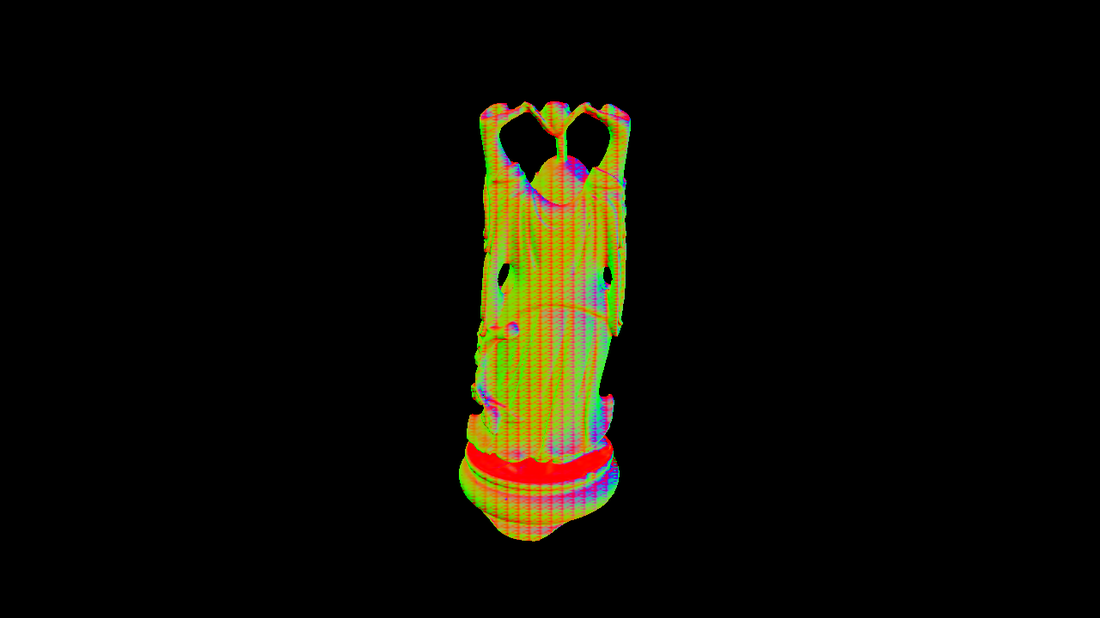

My G-buffer (albedo skipped), plus the tangent and bitangent buffers:

Position:

Normal:

Tangent:

Bitangent:
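For reference, the normal-oriented hemisphere from the tutorial rotates each tangent-space kernel sample into view space with a TBN matrix. The shader below does not read these tangent and bitangent buffers; it rebuilds the frame per pixel from the noise vector. The C++ sketch mirrors that step on the CPU. `Vec3`, `Mat3`, `mulMatVec`, `mulVecMat` and `buildTBN` are hypothetical stand-ins for `float3`/`float3x3` and HLSL `mul`, not DirectXMath types. It relies on the fact that an HLSL initializer such as `float3x3 TBN = {tangent, bitangent, normal}` fills rows, whereas GLSL's `mat3(tangent, bitangent, normal)` fills columns, so the tutorial's `TBN * sample` corresponds to `mul(sample, TBN)` in HLSL.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Minimal 3-component vector (stand-in for HLSL float3).
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return v * (1.0f / len);
}

// Rows filled in order, like the HLSL initializer float3x3 TBN = {T, B, N}.
using Mat3 = std::array<Vec3, 3>;

// HLSL mul(M, v): v as a column vector -> (dot(row0, v), dot(row1, v), dot(row2, v)).
Vec3 mulMatVec(const Mat3& m, Vec3 v) { return {dot(m[0], v), dot(m[1], v), dot(m[2], v)}; }

// HLSL mul(v, M): v as a row vector -> v.x*row0 + v.y*row1 + v.z*row2,
// which is what GLSL's mat3(T, B, N) * v computes.
Vec3 mulVecMat(Vec3 v, const Mat3& m) { return m[0] * v.x + m[1] * v.y + m[2] * v.z; }

// Gram-Schmidt frame around the normal, same construction as the shader.
Mat3 buildTBN(Vec3 normal, Vec3 randomVector) {
    Vec3 n = normalize(normal);
    Vec3 t = normalize(randomVector - n * dot(randomVector, n));
    Vec3 b = cross(n, t);
    return {t, b, n};
}
```

With `mulVecMat`, a kernel sample with `z >= 0` stays on the normal's side of the surface; `mulMatVec` applies the inverse rotation instead (it maps the normal itself to `(0, 0, 1)`).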

myPixelShader.ps:

```hlsl
////////////////////////////////////////////////////////////////////////////////
// Filename: ssaoShader.ps
////////////////////////////////////////////////////////////////////////////////
Texture2D textures[3]; // position, normal, noise
SamplerState SampleType;

//////////////
// TYPEDEFS //
//////////////
cbuffer KernelBuffer
{
    // Each element of a float3 array occupies a full 16-byte register.
    float3 g_kernelValue[64];
};

struct PixelInputType
{
    float4 positionSV : SV_POSITION;
    float2 tex : TEXCOORD0;
    float4x4 projection : TEXCOORD1;
};

// Without `static`, HLSL globals are uniforms stored in $Globals;
// D3D11 does not apply these initializers automatically.
const float2 noiseScale = float2(1280.0f / 4.0f, 720.0f / 4.0f);
const float radius = 0.5f;
const float bias = 0.025f;

////////////////////////////////////////////////////////////////////////////////
// Pixel Shader
////////////////////////////////////////////////////////////////////////////////
float4 ColorPixelShader(PixelInputType input) : SV_TARGET
{
    float3 position = textures[0].Sample(SampleType, input.tex).xyz;
    float3 normal = normalize(textures[1].Sample(SampleType, input.tex).rgb);
    // Tiling the noise texture relies on SampleType using WRAP addressing.
    float3 randomVector = normalize(float3(textures[2].Sample(SampleType, input.tex * 100.0f).xy, 0.0f));
    //float3 randomVector = normalize(textures[2].Sample(SampleType, input.tex * noiseScale).xyz);

    // Gram-Schmidt: build a tangent frame around the normal
    float3 tangent = normalize(randomVector - normal * dot(randomVector, normal));
    float3 bitangent = cross(normal, tangent);
    // The initializer fills rows: TBN[0] = tangent, TBN[1] = bitangent, TBN[2] = normal
    float3x3 TBN = {tangent, bitangent, normal};

    float3 sample = float3(0.0f, 0.0f, 0.0f);
    float4 offset = float4(0.0f, 0.0f, 0.0f, 0.0f);
    float occlusion = 0.0f;

    for (int i = 0; i < 64; i++)
    {
        // mul(TBN, v) returns (dot(tangent, v), dot(bitangent, v), dot(normal, v))
        sample = mul(TBN, g_kernelValue[i]);
        sample = position + sample * radius;

        // Project the sample to clip space, then map NDC to [0, 1]
        // (NDC y points up, D3D texture v points down)
        offset = float4(sample, 1.0f);
        offset = mul(input.projection, offset);
        offset.xyz /= offset.w;
        offset.xyz = offset.xyz * 0.5f + 0.5f;

        // Occlusion test as in the OpenGL tutorial (right-handed view space, z < 0 in front)
        float sampleDepth = textures[0].Sample(SampleType, offset.xy).z;
        occlusion += (sampleDepth >= sample.z + bias ? 1.0 : 0.0);
    }

    occlusion = 1.0f - (occlusion / 64.0f);

    //float4 color = float4(position.x, position.y, position.z, 1.0f);
    //return color;
    return float4(occlusion, occlusion, occlusion, 1.0f);
}
```
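The loop above projects each view-space sample back to G-buffer texture coordinates. The C++ sketch below reproduces that path on the CPU under stated assumptions: the position buffer holds view-space positions (the tutorial works in view space, not world space), the projection was built like `XMMatrixPerspectiveFovLH` (left-handed, row-vector convention), and `perspectiveFovLH`, `mulRowVec`, `viewToUV` and `isOccludedLH` are hypothetical helpers rather than DirectXMath functions. It shows two D3D-specific differences from the OpenGL tutorial: NDC y points up while texture v points down, so y is flipped when converting to texture coordinates, and in left-handed view space +z points away from the camera, so the depth comparison flips direction.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Float4 { float x, y, z, w; };
struct Float2 { float u, v; };

// Row-major 4x4 matrix used with row vectors (DirectXMath convention).
using Mat4 = std::array<std::array<float, 4>, 4>;

// Same layout as the matrix documented for XMMatrixPerspectiveFovLH
// (left-handed, depth mapped to [0, 1]).
Mat4 perspectiveFovLH(float fovY, float aspect, float nearZ, float farZ) {
    assert(nearZ > 0.0f && farZ > nearZ && aspect > 0.0f);
    float h = 1.0f / std::tan(fovY * 0.5f);
    float w = h / aspect;
    float q = farZ / (farZ - nearZ);
    return {{{w, 0, 0, 0}, {0, h, 0, 0}, {0, 0, q, 1}, {0, 0, -q * nearZ, 0}}};
}

// Row vector times matrix (HLSL mul(v, M) when M holds this same layout).
Float4 mulRowVec(Float4 v, const Mat4& m) {
    const float in[4] = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += in[r] * m[r][c];
    return {out[0], out[1], out[2], out[3]};
}

// View-space position -> G-buffer texture coordinates.
Float2 viewToUV(Float4 viewPos, const Mat4& proj) {
    Float4 clip = mulRowVec(viewPos, proj);
    assert(clip.w > 0.0f); // the point must be in front of the camera
    float ndcX = clip.x / clip.w;
    float ndcY = clip.y / clip.w;
    // NDC y points up, D3D texture v points down: flip y.
    return {ndcX * 0.5f + 0.5f, -ndcY * 0.5f + 0.5f};
}

// Left-handed view space: +z points away from the camera, so the stored
// surface occludes the sample when it is closer (smaller z) than the sample.
// This is the tutorial's `sampleDepth >= sample.z + bias` with z negated.
bool isOccludedLH(float sceneZ, float sampleZ, float bias) {
    return sceneZ <= sampleZ - bias;
}
```

On the HLSL side, the order of `mul` has to match how the matrix reaches the shader: if the vertex shader uses `mul(position, matrix)` (the usual pattern after uploading with `XMMatrixTranspose`), the same row-vector order would apply to `offset` in this loop.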

I suspect that either my projection matrix is wrong (I am passing the same global projection matrix that I use in every other shader) or my kernel generation is wrong:

myKernelGeneration.cpp:

```cpp
// Create kernel for SSAO
std::uniform_real_distribution<float> randomFloats(0.0f, 1.0f);
std::default_random_engine generator;
XMFLOAT3 tmpSample;

for (int i = 0; i < SSAO_KERNEL_SIZE; i++)
{
    // Generate random vector3 ([-1, 1], [-1, 1], [0, 1])
    tmpSample.x = randomFloats(generator) * 2.0f - 1.0f;
    tmpSample.y = randomFloats(generator) * 2.0f - 1.0f;
    tmpSample.z = randomFloats(generator);

    // Normalize vector3
    XMVECTOR tmpVector = XMVector3Normalize(XMLoadFloat3(&tmpSample));
    XMStoreFloat3(&tmpSample, tmpVector);

    // Multiply all coordinates of the vector3 by a random value
    //float randomMultiply = randomFloats(generator);
    //tmpSample.x *= randomMultiply;
    //tmpSample.y *= randomMultiply;
    //tmpSample.z *= randomMultiply;

    // Scale samples so they cluster closer to the origin of the hemisphere
    float scale = float(i) / 64.0f;
    scale = lerp(0.1f, 1.0f, scale * scale);
    tmpSample.x *= scale;
    tmpSample.y *= scale;
    tmpSample.z *= scale;

    // Pass value to array
    m_ssaoKernel[i] = tmpSample;
}
```
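One layout detail worth checking on the CPU side: HLSL packing rules place each element of a constant-buffer array in its own 16-byte register, so `float3 g_kernelValue[64]` expects 1024 bytes with a 16-byte stride. If `m_ssaoKernel` is a plain array of `XMFLOAT3` (12-byte stride) copied directly into `KernelBuffer`, the shader would read misaligned values. The sketch below is plain C++ with hypothetical names (`KernelSample`, `KernelBufferCPU`, `generateKernel`); it pads each sample to four floats and follows the tutorial's recipe, including the random length step that is commented out above.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>

constexpr std::size_t kKernelSize = 64; // must match g_kernelValue[64] in the shader

// One cbuffer register per element: the unused w pads float3 to 16 bytes.
struct KernelSample { float x, y, z, w; };
static_assert(sizeof(KernelSample) == 16, "each element must occupy one 16-byte register");

struct KernelBufferCPU { std::array<KernelSample, kKernelSize> samples; };
static_assert(sizeof(KernelBufferCPU) == 16 * kKernelSize, "cbuffer layout mismatch");

// Hemisphere kernel: random direction with z >= 0, random length in [0, 1),
// then an accelerating scale that pulls early samples toward the origin.
KernelBufferCPU generateKernel(unsigned seed) {
    std::mt19937 generator(seed);
    std::uniform_real_distribution<float> randomFloats(0.0f, 1.0f);
    KernelBufferCPU buffer{};
    for (std::size_t i = 0; i < kKernelSize; ++i) {
        float x = randomFloats(generator) * 2.0f - 1.0f;
        float y = randomFloats(generator) * 2.0f - 1.0f;
        float z = randomFloats(generator);
        float len = std::sqrt(x * x + y * y + z * z);
        assert(len > 0.0f);
        // normalize and apply the random length in one factor
        float mag = randomFloats(generator) / len;
        float t = static_cast<float>(i) / static_cast<float>(kKernelSize);
        float scale = 0.1f + (1.0f - 0.1f) * (t * t); // lerp(0.1, 1.0, t * t)
        float f = mag * scale;
        buffer.samples[i] = {x * f, y * f, z * f, 0.0f};
    }
    return buffer;
}
```

The resulting struct can be copied as-is into a constant buffer created with `ByteWidth = sizeof(KernelBufferCPU)`, for example with `ID3D11DeviceContext::UpdateSubresource` or `Map`/`Unmap`.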
