What GI is usable right now and scalable to open worlds? It should cope with fast camera movement and with indoor/outdoor scenes, within a budget of 3-4 ms. Ubisoft uses Precomputed Radiance Transfer, Unreal Engine uses Signed Distance Fields, and Crytek uses Light Propagation Volumes (?). What was used in the latest open-world games? Screen-space approximations? PRT? What does Naughty Dog use?

What GI is usable right now?

Err, the answer is, inevitably, a complex roll-your-own thing. The three questions I can think of are:

How fast is the camera? Is it flying like a jet, or just driving like a car?

How much indoor/outdoor is there? Are there only a few interiors that are generally close to the outdoors, or are there ultra-complex interiors digging down into caves?

How much do you need in the way of reflections? Please don't say a lot; those are hard. Now on to solutions:

This is a very good overview of a low-cost, static, diffuse-only, multi-bounce solution with some content restrictions: it's only for big, relatively mid-complexity environments and can't handle dramatically fast lighting changes. There are also some entirely unnecessary steps, like raytracing every probe every frame just to handle doors/windows and in the hope that it can handle dynamic scenes (artists will easily break this): https://morgan3d.github.io/articles/2019-04-01-ddgi/
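For reference, the core lookup in a probe-grid scheme like the one linked above is blending the eight probes around a shading point. This is a minimal sketch assuming a regular grid with its origin at (0, 0, 0) and one RGB irradiance value per probe (`sample_probe_grid` and the grid layout are hypothetical). The linked article also stores directional data per probe and weights each probe by visibility and surface orientation, which this plain trilinear blend leaves out.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
ProbeGrid = List[List[List[Vec3]]]  # probes[x][y][z] -> RGB irradiance (hypothetical layout)


def sample_probe_grid(probes: ProbeGrid, spacing: float, p: Vec3) -> Vec3:
    """Trilinearly blend the 8 probes surrounding world position p (no visibility weighting)."""
    dims = (len(probes), len(probes[0]), len(probes[0][0]))
    base, frac = [], []
    for coord, n in zip(p, dims):
        g = min(max(coord / spacing, 0.0), n - 1.0)  # clamp into the grid
        i = min(int(g), n - 2)                       # lower corner of the cell (needs n >= 2)
        base.append(i)
        frac.append(g - i)
    result = [0.0, 0.0, 0.0]
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Trilinear weight of this corner probe.
                w = ((frac[0] if dx else 1.0 - frac[0])
                     * (frac[1] if dy else 1.0 - frac[1])
                     * (frac[2] if dz else 1.0 - frac[2]))
                probe = probes[base[0] + dx][base[1] + dy][base[2] + dz]
                for c in range(3):
                    result[c] += w * probe[c]
    return (result[0], result[1], result[2])
```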

You can handle doors/windows in other ways: keep two versions of the light probes near doors/windows, one for open and one for closed; or raytrace only the probes near doors/windows, against simple geometric primitives only, and only when those doors/windows open or close; and so on.
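A minimal sketch of the "two probe versions" option, assuming each affected probe has a baked `closed` and `opened` irradiance value and that the game exposes how open the door/window is as a 0..1 value (both hypothetical). Blending linearly while the door animates avoids a visible pop; a hard switch when the door state changes is the even simpler variant.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def blend_door_probe(closed: RGB, opened: RGB, openness: float) -> RGB:
    """Blend the two baked versions of one probe by door openness.

    openness: 0.0 = fully closed, 1.0 = fully open; values outside are clamped.
    """
    t = min(max(openness, 0.0), 1.0)
    return ((1.0 - t) * closed[0] + t * opened[0],
            (1.0 - t) * closed[1] + t * opened[1],
            (1.0 - t) * closed[2] + t * opened[2])
```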

The above is a somewhat improved and better-documented version of this, but for completeness, and to show that you absolutely don't need that raytracing part: https://t.co/7fii07TJzl

and video: https://www.gdcvault.com/play/1023273/Global-Illumination-in-Tom-Clancy

Relatedly, the Call of Duty team did something similar for a level in Infinite Warfare, but with cubemaps! This consumes more memory, and possibly more compute depending on how often you update the lighting, but you do get specular lighting from it!

Now, for the life of me I can't find the paper, but the idea was relatively simple: place cubemap light probes as normal, store what each cubemap sees in textures as a g-buffer, relight those g-buffers with plain diffuse Lambertian lighting in round-robin fashion (N cubemaps per frame), then take that output as a lit cubemap and use their clever filtering to prefilter it: https://research.activision.com/publications/archives/fast-filtering-of-reflection-probes
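A minimal sketch of the round-robin scheduling plus the per-texel Lambertian relight, assuming each probe face's g-buffer stores albedo and normal and there is a single directional light (class and function names are hypothetical; shadowing and the prefiltering step are omitted). With `probe_count` probes and `per_frame` relit each frame, a full refresh takes ceil(probe_count / per_frame) frames, so 64 probes at 4 per frame means 16 frames of latency for a lighting change.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class RoundRobinRelighter:
    """Chooses which cubemap probes to relight this frame, cycling through all of them."""

    def __init__(self, probe_count: int, per_frame: int) -> None:
        assert probe_count >= 1
        self.probe_count = probe_count
        self.per_frame = max(1, min(per_frame, probe_count))
        self.cursor = 0  # first probe of the next batch

    def next_batch(self) -> List[int]:
        batch = [(self.cursor + i) % self.probe_count for i in range(self.per_frame)]
        self.cursor = (self.cursor + self.per_frame) % self.probe_count
        return batch


def relight_texel(albedo: Vec3, normal: Vec3, light_dir: Vec3, light_color: Vec3) -> Vec3:
    """Diffuse Lambertian relight of one probe g-buffer texel (no shadowing).

    light_dir points from the surface towards the light; the 1/pi factor is assumed
    to be folded into light_color.
    """
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return (albedo[0] * light_color[0] * n_dot_l,
            albedo[1] * light_color[1] * n_dot_l,
            albedo[2] * light_color[2] * n_dot_l)
```

Each frame you would call `next_batch()` once, relight every texel of those probes' g-buffers, then prefilter just those cubemaps.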

Obviously, again, this only supports slow relighting, and the bigger/more complex your environment, the costlier and worse it gets. But it's easily combinable with the above papers, including the flat surfel-list/g-buffer strategy from those two. The Call of Duty version had a static grid of light probes with essentially ambient occlusion baked in, to correct for lighter/darker parts of the scene that the sparser cubemaps didn't reach; you could use the light-grid ideas from the papers above to replace them with smaller diffuse light probes that do the same thing but look better.

The basic thing to remember about all of these, including fine real-time-scale details, is this seminal paper, the one everyone will copy this generation because, damn, it's neat: https://users.aalto.fi/~silvena4/Publications/SIGGRAPH_2015_Remedy_Notes.pdf

It isn't scalable to open worlds and has to be precomputed (though it is somewhat dynamic). Still, all of the above and more owe a lot to it, so it's a great read.

Ok, that was exhausting. The only other idea is the future! Well, a fast version of the future. Distance-field occlusion is awesome for computing visibility, and the fields can be changed... sooomewhat in real time (like the above, not very fast, but fast enough for slow changes): http://advances.realtimerendering.com/s2015/DynamicOcclusionWithSignedDistanceFields.pdf
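To make "distance fields are awesome for visibility" concrete: sphere tracing a distance field gives both a hit test and a cheap soft-occlusion estimate. This sketch is generic, not the method from the linked Epic talk: an analytic sphere (`sphere_sdf`, hypothetical) stands in for baked mesh distance fields, and the `k * d / t` penumbra term is a widely used heuristic rather than anything taken from the slides.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
SDF = Callable[[Vec3], float]


def sphere_sdf(center: Vec3, radius: float) -> SDF:
    """Signed distance to a sphere (negative inside); stand-in for a baked distance field."""
    def sdf(p: Vec3) -> float:
        return math.dist(p, center) - radius
    return sdf


def sdf_visibility(sdf: SDF, origin: Vec3, direction: Vec3,
                   max_dist: float = 50.0, k: float = 8.0, steps: int = 64) -> float:
    """Sphere-trace along a unit-length direction and return visibility in [0, 1].

    The closer the ray passes to geometry relative to how far it has travelled,
    the lower the result (k controls penumbra sharpness).
    """
    t = 0.05  # small offset to escape the starting surface
    vis = 1.0
    for _ in range(steps):
        if t >= max_dist:
            break
        p = (origin[0] + t * direction[0],
             origin[1] + t * direction[1],
             origin[2] + t * direction[2])
        d = sdf(p)
        if d < 1e-4:
            return 0.0  # ray hit geometry: fully occluded
        vis = min(vis, k * d / t)
        t += d  # the distance field guarantees this step is empty space
    return max(0.0, min(vis, 1.0))
```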

One can combine this with... whatever else there is for the actual lighting. Recalculate a skybox environment light every once in a while and use this for occlusion. Or do virtual point lights à la: http://www.jp.square-enix.com/tech/library/pdf/Virtual%20Spherical%20Gaussian%20Lights%20for%20Real-time%20Glossy%20Indirect%20Illumination%20(PG2015).pdf

For a single light bounce, and maybe combined with a grid of light probes that changes somewhat (relit a bit based on time of day or whatever), like the above, for secondary bounces? The heightfield lighting in Epic's paper was never really more than an experiment; heightfield cone tracing or similar could easily produce better distant GI, including distant reflections, with some more work, e.g. just apply the principles of screen-space ray/cone tracing to the heightfield (same principle): https://www.tobias-franke.eu/publications/hermanns14ssct/hermanns14ssct_poster.pdf
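A minimal sketch of "screen-space tracing applied to a heightfield": march a ray in fixed steps and report the first sample that falls below the terrain, just as a screen-space tracer compares against the depth buffer. The grid layout, nearest-cell lookup and fixed step size are simplifying assumptions (the function name is hypothetical); a practical version would use bilinear heights and a min/max mip hierarchy to skip empty space, and cone tracing would additionally widen the footprint with distance.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def march_heightfield(heights: List[List[float]], cell: float, origin: Vec3,
                      direction: Vec3, step: float = 0.5,
                      max_dist: float = 1000.0) -> Optional[Vec3]:
    """Fixed-step ray march against a heightfield (y is up).

    heights[ix][iz] is the terrain height for world x in [ix*cell, (ix+1)*cell) and
    z likewise. Returns the first sample point at or below the terrain, or None.
    """
    nx, nz = len(heights), len(heights[0])
    max_h = max(max(row) for row in heights)
    t = 0.0
    while t < max_dist:
        x = origin[0] + t * direction[0]
        y = origin[1] + t * direction[1]
        z = origin[2] + t * direction[2]
        if direction[1] >= 0.0 and y > max_h:
            return None  # rising above all terrain: can never hit
        ix, iz = int(x // cell), int(z // cell)
        if not (0 <= ix < nx and 0 <= iz < nz):
            return None  # left the heightfield
        if y <= heights[ix][iz]:
            return (x, y, z)  # like "ray depth behind the depth buffer" in screen space
        t += step
    return None
```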

I had a quick read through this when the GDC 2019 papers appeared online: https://www.gdcvault.com/play/1026469/Scalable-Real-Time-Global-Illumination

They seem to have taken a pragmatic approach to dynamic GI and have to deal with large levels (64km x 64km). There's also a blog post about the same on the developer's web page:

https://enlisted.net/en/news/show/25-gdc-talk-scalable-real-time-global-illumination-for-large-scenes-en/#!/

Some food for thought!

T

@Frantic PonE, thanks. I know all this stuff. Here is a good overview too

@Tessellator, I read it a week ago. Slow camera only, no "far away" cutscenes, and trouble with geometry smaller than a voxel cell. Well, finally, I see that Tatarchuk's PRT and its variations by Ubisoft and Treyarch are what actually work.

My own game engine uses voxel-octree-based GI. If I'm not mistaken, some other available engines support it too (Lumberyard and CryEngine). Each implementation I have seen differs a bit, though (including mine).

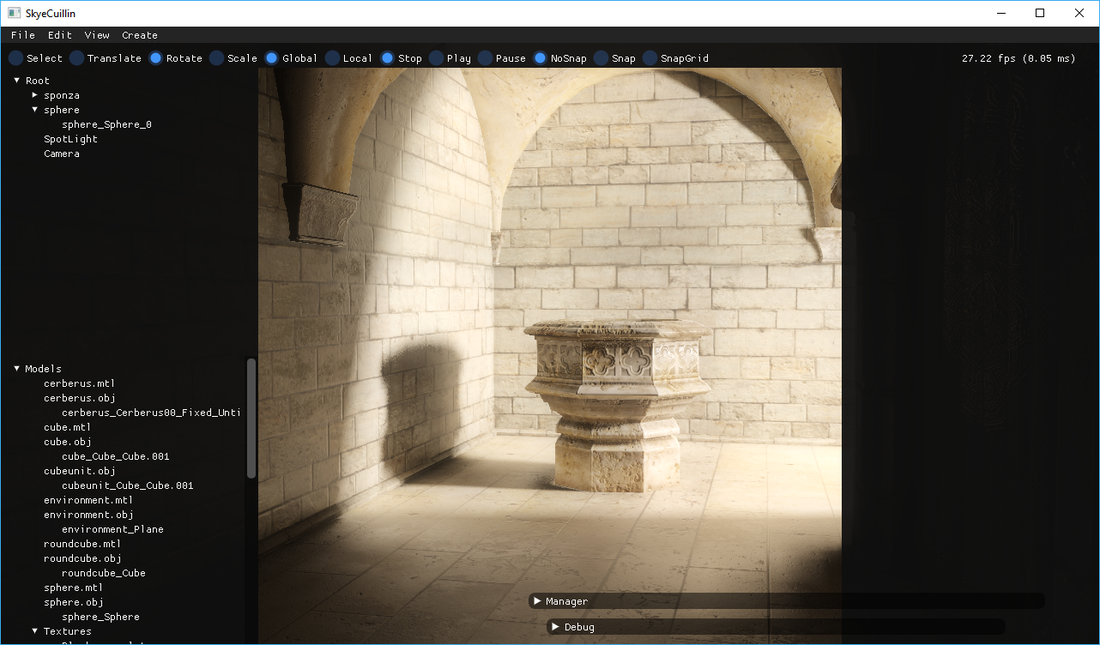

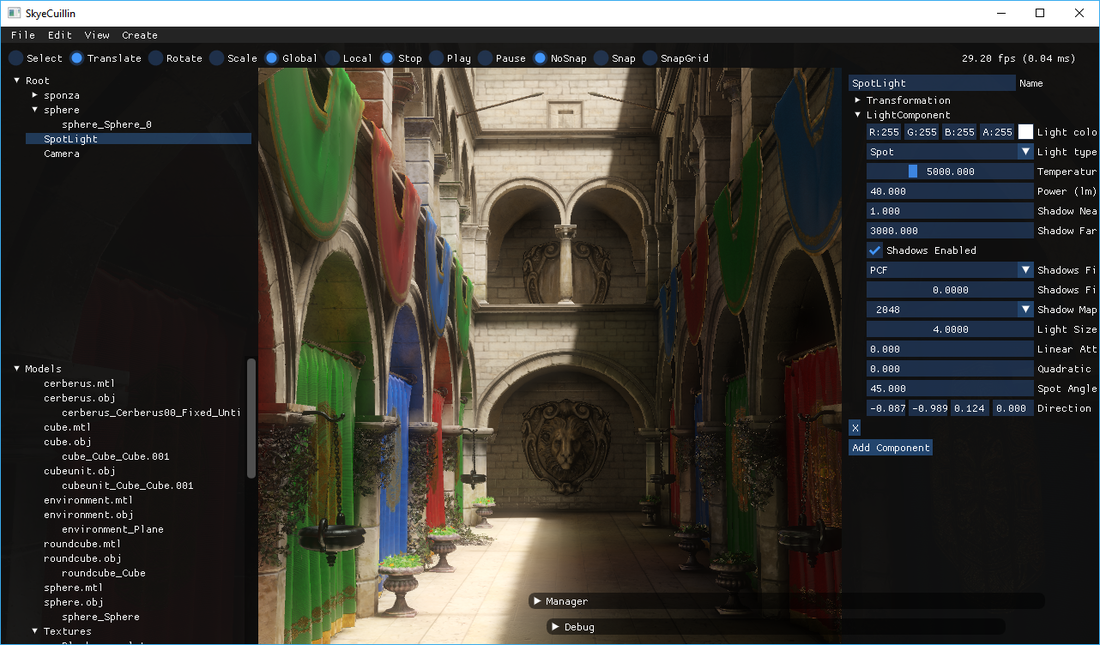

The solution is somewhat *scalable* and allows for fully dynamic objects and fully dynamic lights. Small shameless self-promotion (results):

Now, a few notes on voxel-based global illumination:

- While it allows for static and dynamic geometry, you still have to voxelize it... and generate the interior nodes of the voxel octree. This gives huge advantages (cone tracing speed, no noise compared to path tracing, among others), but it has some problems (you can only have details as small as your voxels).

- Handling large-scale scenes is not easy (there are some approaches, like 'cascaded voxels', or simply voxelizing only some area around the camera), so... possible, but with some pitfalls

- While sparse voxel octrees sound nice (and could possibly scale even to HUGE scenes), a 3D texture with mipmaps (which is effectively an octree!) outperforms them by a lot... the only drawback is higher memory usage

- Using it for sharp reflections or caustics is not feasible: even at high resolutions, sharp reflections will look bad (especially on spheres and smooth surfaces), and they are also heavier on performance than GI. On the other hand, it is awesome for smooth (blurry) reflections

- Without temporal filtering, dynamic objects flicker; see the following 2 videos:

Re-voxelizing objects causes flickering because of the limited voxel resolution.

This can be solved with temporal filtering, although it can start ghosting on faster-moving objects.
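The post doesn't say whether the filter runs per voxel or per screen pixel, so this is a minimal sketch assuming a simple per-value exponential moving average. The optional neighborhood clamp is borrowed from TAA, not from the post; it is one common way to reduce the ghosting mentioned above. Function names are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def temporal_accumulate(history: RGB, current: RGB, alpha: float = 0.1) -> RGB:
    """Exponential moving average: move the history a fraction `alpha` toward this frame.

    Smaller alpha hides more re-voxelization flicker but ghosts more on fast-moving objects.
    """
    return (history[0] + alpha * (current[0] - history[0]),
            history[1] + alpha * (current[1] - history[1]),
            history[2] + alpha * (current[2] - history[2]))


def clamp_history(history: RGB, nb_min: RGB, nb_max: RGB) -> RGB:
    """Clamp history into the min/max of the current frame's local neighborhood
    (TAA-style), so stale values left behind by a moved object are rejected faster."""
    return (min(max(history[0], nb_min[0]), nb_max[0]),
            min(max(history[1], nb_min[1]), nb_max[1]),
            min(max(history[2], nb_min[2]), nb_max[2]))
```

Per frame this would be `history = temporal_accumulate(clamp_history(history, lo, hi), current)`, where `lo`/`hi` come from the current frame's neighbors.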

Additional notes on performance:

- The resolution of your voxel data matters: increasing it doesn't just increase memory usage, it also increases the cost of traversal

- Using GI with MSAA can be overkill (I used it in those 2 images)... you may consider calculating GI in a half-resolution buffer and doing bilateral upsampling
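A minimal sketch of depth-aware (joint bilateral) upsampling from a half-resolution GI buffer, assuming one GI channel, a half-res depth buffer rendered alongside it, and plain nested lists for images (all hypothetical; a real version runs in a shader). Each full-res pixel blends its four nearest low-res texels with bilinear weights scaled by a Gaussian on the depth difference, so indirect light doesn't leak across silhouettes. `depth_sigma` depends on your depth units and needs tuning.

```python
import math
from typing import List

Grid = List[List[float]]


def bilateral_upsample(low_gi: Grid, low_depth: Grid, full_depth: Grid,
                       depth_sigma: float = 0.1) -> Grid:
    """Upsample a half-resolution GI buffer (one channel) to full resolution."""
    height, width = len(full_depth), len(full_depth[0])
    low_h, low_w = len(low_gi), len(low_gi[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Map the full-res pixel centre into low-res texel coordinates.
            fy, fx = (y + 0.5) / 2.0 - 0.5, (x + 0.5) / 2.0 - 0.5
            y0, x0 = math.floor(fy), math.floor(fx)
            ty, tx = fy - y0, fx - x0
            total, total_w = 0.0, 0.0
            best, best_dz = 0.0, math.inf
            for dy in (0, 1):
                for dx in (0, 1):
                    sy = min(max(y0 + dy, 0), low_h - 1)  # clamp at image borders
                    sx = min(max(x0 + dx, 0), low_w - 1)
                    dz = abs(full_depth[y][x] - low_depth[sy][sx])
                    wgt = ((ty if dy else 1.0 - ty) * (tx if dx else 1.0 - tx)
                           * math.exp(-(dz * dz) / (2.0 * depth_sigma ** 2)))
                    total += wgt * low_gi[sy][sx]
                    total_w += wgt
                    if dz < best_dz:
                        best, best_dz = low_gi[sy][sx], dz
            # If every neighbor lies on a different surface, use the closest-depth texel.
            out[y][x] = total / total_w if total_w > 1e-6 else best
    return out
```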

As for implementations, there are multiple papers you can find (the original one is https://research.nvidia.com/sites/default/files/pubs/2011-09_Interactive-Indirect-Illumination/GIVoxels-pg2011-authors.pdf), and a nice description was also written by @turanszkij (here: https://wickedengine.net/2017/08/30/voxel-based-global-illumination/)

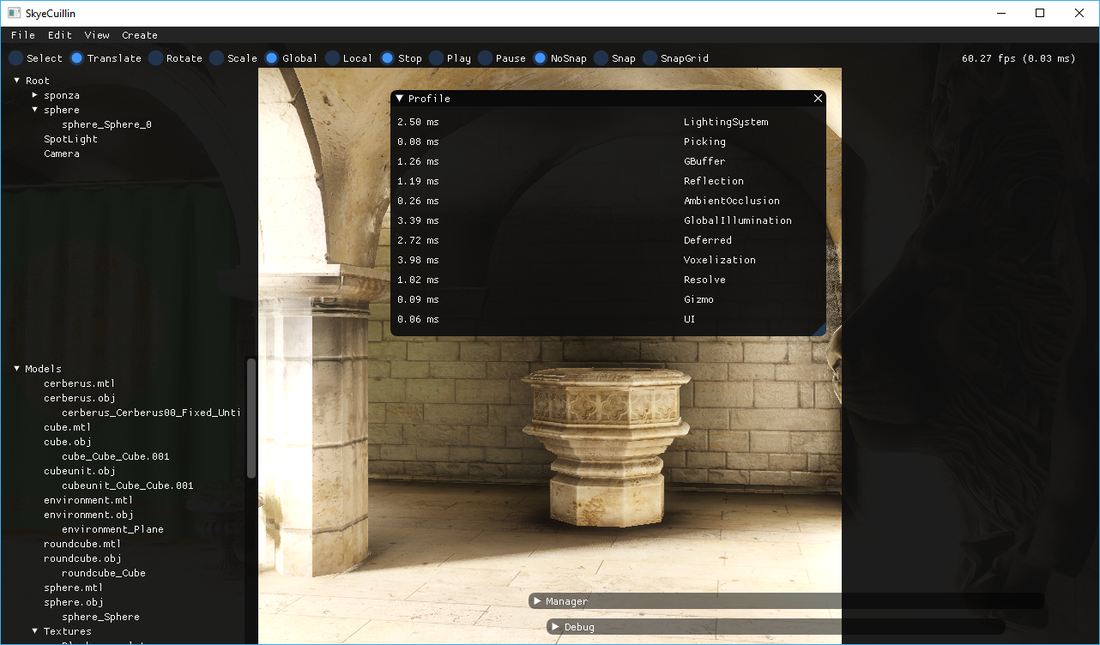

Time for real performance numbers - so here is an example with timing information:

So, to explain the numbers you are concerned about:

- Voxelization: done every frame for the whole scene (in the editor I don't flag static voxels; at runtime you can)

- GlobalIllumination: the voxel cone tracing step that calculates GI (multiple cones traced per pixel)

- Reflection: the voxel cone tracing step that calculates reflections (one cone per pixel)

- AmbientOcclusion: the voxel cone tracing step that calculates AO (not necessary, I was just experimenting with it; multiple cones per pixel)
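All three cone tracing passes share the same core loop, and it also shows why a mipmapped 3D texture works as an octree: as the cone widens with distance, you read from coarser mip levels. This is a minimal single-cone sketch under stated assumptions: a cubic power-of-two grid indexed `grid[x][y][z]`, scalar radiance premultiplied by opacity, nearest-voxel lookups at the floor of the mip level (a GPU version would use hardware trilinear filtering with a fractional LOD), and front-to-back alpha compositing. `build_mips` and `cone_trace` are hypothetical names, not the poster's engine code.

```python
import math
from typing import List, Tuple

Voxel = Tuple[float, float]     # (radiance premultiplied by opacity, opacity)
Grid = List[List[List[Voxel]]]  # grid[x][y][z], cubic, power-of-two size
Vec3 = Tuple[float, float, float]


def _parent(src: Grid, x: int, y: int, z: int) -> Voxel:
    kids = [src[2 * x + i][2 * y + j][2 * z + k] for i in (0, 1) for j in (0, 1) for k in (0, 1)]
    return (sum(v[0] for v in kids) / 8.0, sum(v[1] for v in kids) / 8.0)


def build_mips(level0: Grid) -> List[Grid]:
    """Average 2x2x2 children into each parent: a mipmapped 3D texture, i.e. a dense octree."""
    mips = [level0]
    while len(mips[-1]) > 1:
        src, n = mips[-1], len(mips[-1]) // 2
        mips.append([[[_parent(src, x, y, z) for z in range(n)] for y in range(n)] for x in range(n)])
    return mips


def cone_trace(mips: List[Grid], voxel_size: float, origin: Vec3, direction: Vec3,
               half_angle: float, max_dist: float) -> Tuple[float, float]:
    """Accumulate (radiance, occlusion) front to back along one cone."""
    radiance, occlusion = 0.0, 0.0
    t = voxel_size  # start one voxel out to avoid sampling the surface itself
    while t < max_dist and occlusion < 0.99:
        diameter = max(voxel_size, 2.0 * t * math.tan(half_angle))
        level = min(int(math.log2(diameter / voxel_size)), len(mips) - 1)
        grid, size = mips[level], voxel_size * (2 ** level)
        idx = [int((o + t * d) // size) for o, d in zip(origin, direction)]
        if not all(0 <= i < len(grid) for i in idx):
            break  # left the voxel volume
        rad, alpha = grid[idx[0]][idx[1]][idx[2]]
        radiance += (1.0 - occlusion) * rad
        occlusion += (1.0 - occlusion) * alpha
        t += diameter * 0.5  # step proportional to the cone width
    return radiance, occlusion
```

For the GI pass you would sum several wide cones spread over the hemisphere; the reflection pass traces one narrower cone along the reflected view direction.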

This one used 256 x 256 x 256 voxels and Crytek's Sponza atrium model (the whole model was inside the voxelization box). So, if you dynamically voxelize and compute GI every frame, you can get under 7.5 ms (the GPU used was an AMD Radeon RX 590).
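For a sense of scale at this resolution: 256^3 is 16,777,216 voxels. The post doesn't state the voxel format, so this small helper assumes a single RGBA8 volume (4 bytes per voxel) plus a full mip chain. At that format the base level is 64 MiB and the full chain about 73 MiB, and doubling the resolution to 512^3 multiplies both by 8. Real implementations often store more per voxel (for example separate albedo, normal and emission volumes), so treat these as lower bounds.

```python
def voxel_memory_bytes(resolution: int, bytes_per_voxel: int, with_mips: bool = True) -> int:
    """Bytes for a cubic voxel volume, optionally including every mip level down to 1^3."""
    total, n = 0, resolution
    while n >= 1:
        total += n ** 3 * bytes_per_voxel
        if not with_mips:
            break
        n //= 2  # each mip level halves every axis, i.e. 1/8 of the voxels
    return total


base = voxel_memory_bytes(256, 4, with_mips=False)  # assumed RGBA8
chain = voxel_memory_bytes(256, 4)
print(f"base: {base / 2**20:.1f} MiB, with mips: {chain / 2**20:.1f} MiB")
```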

There is a virtual point light method that Naughty Dog used in The Last of Us (I think). It works something like shadow maps, but you save the color buffer from the light's perspective along with the shadow map, and each pixel acts as a small point light.
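What's described here is the idea behind Reflective Shadow Maps (Dachsbacher and Stamminger, 2005); the post itself doesn't confirm that Naughty Dog used exactly this. Each RSM texel stores a world position x_p, a normal n_p and reflected flux Φ_p, and the one-bounce irradiance at a point x with normal n is the sum over texels of Φ_p · max(0, n_p·(x − x_p)) · max(0, n·(x_p − x)) / |x − x_p|^4. A minimal sketch with hypothetical type and function names, and no visibility test between VPL and receiver (as in the basic technique):

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VPL:
    position: Vec3  # world position of the RSM texel
    normal: Vec3    # unit surface normal at that texel
    flux: Vec3      # reflected flux (RGB), roughly light color * albedo * texel footprint


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def gather_vpls(vpls: List[VPL], x: Vec3, n: Vec3, eps: float = 1e-4) -> Vec3:
    """One-bounce indirect irradiance at point x with normal n, RSM-style."""
    e = [0.0, 0.0, 0.0]
    for v in vpls:
        d = _sub(x, v.position)  # from the VPL to the receiver
        dist2 = _dot(d, d)
        if dist2 < eps:
            continue  # skip near-coincident texels to avoid the 1/r^4 singularity
        w = max(0.0, _dot(v.normal, d)) * max(0.0, -_dot(n, d)) / (dist2 * dist2)
        for c in range(3):
            e[c] += v.flux[c] * w
    return (e[0], e[1], e[2])
```

In practice you would gather a few hundred RSM samples around the receiver's projected position in the light's view rather than looping over every texel.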