Hi,

I am currently writing my own GIF decoder and have run into an issue: when a file has only a global color table, the output image has incorrect colors.

Here is my LZW decompression algorithm:

```csharp
private Image DecodeInternalAlternative(GifImage gif)
{
    var frame = gif.Frames[70]; // frame index is hard-coded here
    var data = frame.CompressedData;
    var minCodeSize = frame.LzwMinimumCodeSize;

    uint mask = 0x01;             // current bit within data[pos]
    int inputLength = data.Count; // bytes not yet fully consumed
    var pos = 0;

    // Reads `size` bits, least significant bit first, as GIF packs its codes.
    int readCode(int size)
    {
        int code = 0x0;
        for (var i = 0; i < size; i++)
        {
            var val = data[pos];
            int bit = (val & mask) != 0 ? 1 : 0;
            mask <<= 1;
            if (mask == 0x100)
            {
                // Finished this byte; move on to the next one.
                mask = 0x01;
                pos++;
                inputLength--;
            }
            code |= (bit << i);
        }
        return code;
    }

    var indexStream = new List<int>();
    var clearCode = 1 << minCodeSize;
    var eoiCode = clearCode + 1;
    var codeSize = minCodeSize + 1;
    var dict = new List<List<int>>();

    // Resets the code table: one entry per color index, then the clear and EOI slots.
    void Clear()
    {
        dict.Clear();
        codeSize = minCodeSize + 1;
        for (int i = 0; i < clearCode; i++)
        {
            dict.Add(new List<int>() { i });
        }
        dict.Add(new List<int>()); // clear code
        dict.Add(null);            // end-of-information code
    }

    Clear(); // a stream is not required to start with a clear code

    int code = clearCode; // treat the start of the stream like a clear code
    int last = 0;
    while (inputLength > 0)
    {
        last = code;
        code = readCode(codeSize);
        if (code == clearCode)
        {
            Clear();
            continue;
        }
        if (code == eoiCode)
        {
            break;
        }
        // No new entry right after a clear code, and none once the table holds 4096 entries.
        if (last != clearCode && dict.Count < 4096)
        {
            var lst = new List<int>(dict[last]);
            if (code < dict.Count)
                lst.Add(dict[code][0]); // known code: previous string + first index of current
            else
                lst.Add(dict[last][0]); // code not yet in table: previous string + its own first index
            dict.Add(lst);
        }
        indexStream.AddRange(dict[code]);
        if (dict.Count == (1 << codeSize) && codeSize < 12)
        {
            // Codes are at most 12 bits wide, so the code size stops growing at 12.
            codeSize++;
        }
    }

    var width = frame.Descriptor.width;
    var height = frame.Descriptor.height;
    var colorTable = frame.ColorTable;

    // Map each color index to an RGB triple (3 bytes per pixel).
    var pixels = new byte[width * height * 3];
    int offset = 0;
    for (int i = 0; i < width * height; i++)
    {
        var colors = colorTable[indexStream[i]];
        pixels[offset] = colors.R;
        pixels[offset + 1] = colors.G;
        pixels[offset + 2] = colors.B;
        offset += 3;
    }

    ...
}
```

For GIFs that have local color tables, everything seems to decode fine, but if a GIF has only a global color table or its frames have X and Y offsets, the first frame is correct and the other frames are not.
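
For reference, the GIF89a specification applies a local color table only to the image it belongs to, when the Local Color Table Flag in that image descriptor is set; otherwise the global color table from the logical screen descriptor is used. Below is a minimal sketch of that selection plus the index-to-RGB step, written as C# 9+ top-level statements. `ActiveColorTable`, `IndicesToRgb` and the flat R,G,B `byte[]` table representation are hypothetical and not part of the decoder above. The spec leaves the "no table at all" case to the decoder (for example a default palette); this sketch simply throws.

```csharp
#nullable enable
using System;

// Picks the palette for one frame. Tables are flat R,G,B byte arrays (hypothetical
// representation); null means the corresponding color table flag is not set.
static byte[] ActiveColorTable(byte[]? localTable, byte[]? globalTable)
{
    if (localTable != null) return localTable;   // local table applies to this image only
    if (globalTable != null) return globalTable; // otherwise fall back to the global table
    // Left to the decoder by the spec; this sketch just reports it.
    throw new InvalidOperationException("No local or global color table.");
}

// Expands color indices into 3 bytes per pixel using the given table.
static byte[] IndicesToRgb(int[] indices, byte[] table)
{
    var rgb = new byte[indices.Length * 3];
    for (int i = 0; i < indices.Length; i++)
    {
        int entry = indices[i] * 3; // start of this index's R,G,B triple
        rgb[i * 3] = table[entry];
        rgb[i * 3 + 1] = table[entry + 1];
        rgb[i * 3 + 2] = table[entry + 2];
    }
    return rgb;
}

// A frame without a local table, using a two-color global table (0 = black, 1 = white).
var demoGlobal = new byte[] { 0, 0, 0, 255, 255, 255 };
var demoRgb = IndicesToRgb(new[] { 1, 0 }, ActiveColorTable(null, demoGlobal));
Console.WriteLine(string.Join(",", demoRgb)); // 255,255,255,0,0,0
```

The selection is done per frame, so two frames of the same file can resolve to different tables.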

Here are some examples:

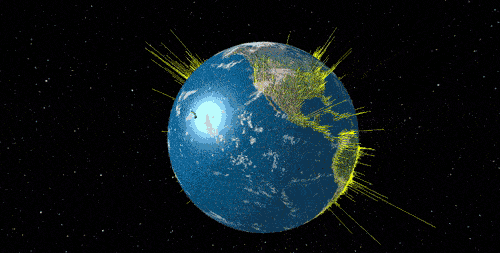

First, a GIF with local color tables and NO offsets (all frames are the same size).

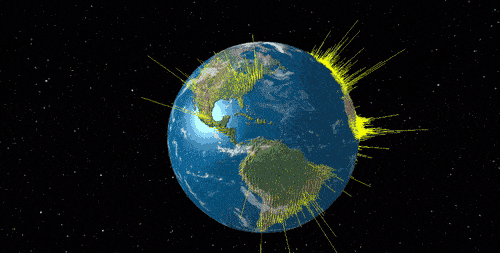

And here is a GIF file that has only a global color table and frames of different sizes.

Since the first frame is built correctly, I think this issue might be caused by the horizontal and vertical offsets (which can differ for each frame), but in that case I cannot understand why it actually happens.

After decoding, I should have valid color table indices in the decoded array, and I should not have to care about offsets.
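
For reference on what the offsets mean: in GIF89a each image descriptor stores Image Left Position, Image Top Position, Image Width and Image Height, in pixels relative to the logical screen, so a frame's decoded data covers only its own rectangle, not the whole screen. Below is a minimal sketch, as C# 9+ top-level statements, of copying one frame's decoded values into a logical-screen-sized canvas at its (left, top) position. `BlitFrame` and its parameters are hypothetical names; it assumes a non-interlaced frame and ignores transparency and disposal methods. Because frames may use different color tables, the same placement logic would usually be applied to RGB(A) pixels rather than raw indices.

```csharp
using System;

// Copies a frame's decoded values (frameWidth x frameHeight, row-major) into a
// canvas covering the whole logical screen, with the frame's top-left corner at
// (left, top). Pixels falling outside the screen are clipped.
// Assumes a non-interlaced frame; ignores transparency and disposal.
static void BlitFrame(int[] canvas, int screenWidth, int screenHeight,
                      int[] frame, int left, int top, int frameWidth, int frameHeight)
{
    for (int y = 0; y < frameHeight && top + y < screenHeight; y++)
    {
        for (int x = 0; x < frameWidth && left + x < screenWidth; x++)
        {
            // Frame pixel (x, y) lands at screen pixel (left + x, top + y).
            canvas[(top + y) * screenWidth + (left + x)] = frame[y * frameWidth + x];
        }
    }
}

// A 4x3 logical screen with a 2x2 frame placed at (1, 1).
var screen = new int[4 * 3];
BlitFrame(screen, 4, 3, new[] { 1, 2, 3, 4 }, 1, 1, 2, 2);
Console.WriteLine(string.Join(",", screen)); // 0,0,0,0,0,1,2,0,0,3,4,0
```

Everything outside the frame's rectangle keeps whatever the canvas already held, which is where the previous frames come from in an animation.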

I should also mention that the LZW decoding seems to work fine; at least it always produces an array of the expected size.
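
An output of the expected length is a useful check, but it does not by itself show that the indices are correct. One way to test the LZW stage in isolation is to compare against a small reference decoder on a hand-built code stream. The sketch below, written as C# 9+ top-level statements, is a standalone GIF-style LZW decoder: variable-width codes packed least significant bit first, clear and end-of-information codes, the "code not yet in the table" case, and a 12-bit cap. `LzwDecode` is a hypothetical name, and the two input bytes were packed by hand from the codes clear, 1, 6, 2, end-of-information with a minimum code size of 2.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Decodes GIF LZW image data (sub-block payloads concatenated, length bytes removed)
// into color indices. Codes are variable-width and packed least significant bit first.
static List<int> LzwDecode(byte[] data, int minCodeSize)
{
    int clearCode = 1 << minCodeSize;
    int eoiCode = clearCode + 1;
    const int MaxTableSize = 4096; // codes are at most 12 bits wide

    var table = new List<int[]>();
    int codeSize = 0;
    void Reset()
    {
        table.Clear();
        for (int i = 0; i < clearCode; i++) table.Add(new[] { i }); // one entry per color index
        table.Add(Array.Empty<int>()); // slot for the clear code
        table.Add(Array.Empty<int>()); // slot for the end-of-information code
        codeSize = minCodeSize + 1;
    }
    Reset(); // a stream is not required to begin with a clear code

    var output = new List<int>();
    int[]? prev = null; // string for the previous code; null right after a reset
    int bitPos = 0;
    while (bitPos + codeSize <= data.Length * 8)
    {
        int code = 0;
        for (int i = 0; i < codeSize; i++, bitPos++)
            code |= ((data[bitPos >> 3] >> (bitPos & 7)) & 1) << i;

        if (code == clearCode) { Reset(); prev = null; continue; }
        if (code == eoiCode) break;

        int[] entry;
        if (code < table.Count)
            entry = table[code];
        else if (prev != null && code == table.Count)
        {
            // Code not in the table yet: it must be prev followed by prev[0].
            entry = new int[prev.Length + 1];
            prev.CopyTo(entry, 0);
            entry[prev.Length] = prev[0];
        }
        else
            throw new InvalidOperationException($"Invalid LZW code {code}");

        output.AddRange(entry);
        if (prev != null && table.Count < MaxTableSize)
        {
            // New table entry: previous string followed by the first index of this one.
            var added = new int[prev.Length + 1];
            prev.CopyTo(added, 0);
            added[prev.Length] = entry[0];
            table.Add(added);
            if (table.Count == (1 << codeSize) && codeSize < 12) codeSize++;
        }
        prev = entry;
    }
    return output;
}

// Codes clear(4), 1, 6, 2, end-of-information(5), packed by hand into two bytes.
var decoded = LzwDecode(new byte[] { 0x8C, 0x55 }, 2);
Console.WriteLine(string.Join(",", decoded)); // 1,1,1,2
```

Feeding the same `CompressedData` bytes of a failing frame to both decoders and comparing the index arrays element by element would show whether the difference is in decompression or later.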

I have also attached my full test GIF files here.

So, could someone point me in the right direction and explain why I see this behavior in one case but not in the other?