12 hours ago, Dawoodoz said:

* Ubuntu often comes with software-emulated GPU drivers that are many times slower for 2D overlays. Installing drivers is usually a month's work of restarting the X server and manually modifying and recompiling the Linux kernel with a level 4 Nvidia support technician. Even our company's senior Linux admin called it a real headache. Now imagine a 12-year-old gamer given the same task, with one hour of patience before rebooting into Windows.

I used to work at Canonical (the makers of Ubuntu) on 2D overlay software (the Unity desktop and the Mir display server). We had a full-time expert who worked with all the GPU vendors to make installing the proprietary drivers a smooth, hassle-free experience, and the vendors would fall over themselves to help. Linux is a huge market for GPU vendors, what with commercial render farms, AI applications, and bitcoin mining, unlike Windows, where the markup on consumer-grade hardware is negligible. Using vendor-supplied graphics drivers on Ubuntu has been painless and easy for at least a decade now, and it has only gotten easier.

Ubuntu didn't install the proprietary drivers out of the box for licensing reasons.

12 hours ago, Dawoodoz said:

* In safety critical systems, the customer will usually specify "CPU only" because of safety concerns.

I now work for a popular real-time embedded OS company on safety-critical systems. Customers are falling all over themselves trying to get GPU-based software to run on the virtual machines (!) they install in safety-critical systems. Of course, the truly hardcore safety systems don't have any kind of graphical display (think: the brakes in your car or the stabilizer trim controller on your 737 MAX), and their kernels ban even floating-point operations. The rest (lane departure detection systems, cockpit displays, CAT scanners) all use GPUs and SSE/NEON SIMD extensions like there's no tomorrow.

Honestly, I think it's scary what they (and the IEC 61508/ISO 26262 auditors) consider safe. Stay off the roads and move to a cave, folks; it's all gonna collapse some day.

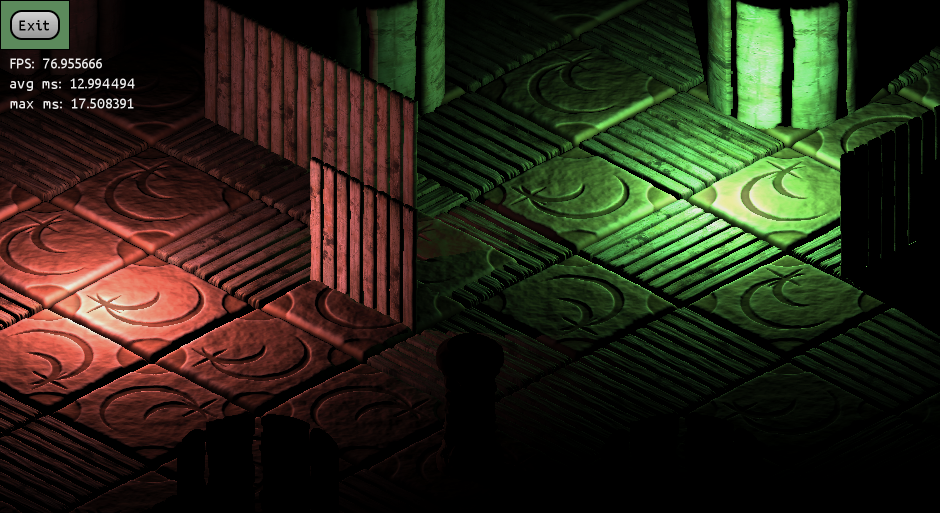

In the meantime, I encourage you to pursue a CPU-only rendering engine. I knew someone who worked for a company that did just that and, 10 years ago, could beat GPU-based software in many specialized cases. It turned out there wasn't enough margin in it, and the company pivoted to a side project in animation software that was actually making money. I think there's probably a niche market for what you're trying to do.