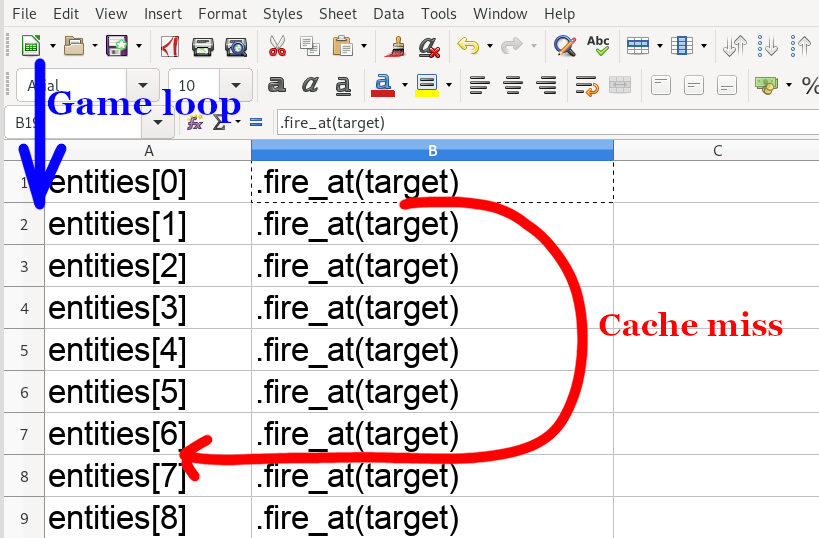

1. What does fire_at() do?

Does it access the target object extensively?

If yes, can you reduce the accessed data to something small? I would imagine firing at an actor can be reduced to firing at a scene component style object whose size can be reduced to just a handful of bytes.
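To make the "handful of bytes" idea concrete, here is a minimal sketch. `TargetSnapshot`, `distance_squared` and `in_range` are hypothetical names, and I'm assuming `fire_at()` mostly needs a position plus a handle back to the full actor, which may not match your code.

```cpp
#include <cstdint>

// Hypothetical compact view of a target: only the fields a range check needs.
struct TargetSnapshot {
    float x, y, z;      // world position
    std::uint32_t id;   // handle back to the full actor, resolved only on a hit
};

// Assumes 4-byte floats; four snapshots then fit in a typical 64-byte cache line.
static_assert(sizeof(TargetSnapshot) == 16, "snapshot should stay small");

// Squared distance avoids a square root in the hot loop.
inline float distance_squared(const TargetSnapshot& a, const TargetSnapshot& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// True if the target lies within the shooter's range.
inline bool in_range(const TargetSnapshot& shooter,
                     const TargetSnapshot& target,
                     float range) {
    return distance_squared(shooter, target) <= range * range;
}
```

If a tightly packed array of these is all the hot loop touches, the full actor only gets pulled into cache when a shot actually lands.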

2. What is the "target"?

If the target is a remote actor and there's only a handful of targets per firing object, then can you split off an above-mentioned scene component style chunk and copy it locally to the actor that is firing? (note that this is kinda moot without answering point 3)
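A rough sketch of what "copy it locally" could look like, assuming a firing object tracks at most four targets. `Turret`, `CachedTarget` and `kMaxTargets` are made up for illustration, and something outside this snippet would have to refresh the copies at a sync point.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical small copy of the remote actor's relevant data.
struct CachedTarget {
    float x, y, z;
    std::uint32_t id;
};

struct Turret {
    static constexpr std::size_t kMaxTargets = 4;  // assumed "handful" of targets

    float x = 0.0f, y = 0.0f, z = 0.0f;
    std::array<CachedTarget, kMaxTargets> targets{};  // stored inline, next to the turret's own data
    std::size_t target_count = 0;

    // Stores a local copy of a target; returns false if the cache is full.
    bool cache_target(const CachedTarget& t) {
        if (target_count == kMaxTargets) return false;
        targets[target_count++] = t;
        return true;
    }

    // Returns the nearest cached target, or nullptr if none are cached.
    const CachedTarget* nearest() const {
        const CachedTarget* best = nullptr;
        float best_d2 = 0.0f;
        for (std::size_t i = 0; i < target_count; ++i) {
            const CachedTarget& c = targets[i];
            const float dx = c.x - x, dy = c.y - y, dz = c.z - z;
            const float d2 = dx * dx + dy * dy + dz * dz;
            if (best == nullptr || d2 < best_d2) {
                best = &c;
                best_d2 = d2;
            }
        }
        return best;
    }
};
```

The trade-off is staleness: the local copies are only as fresh as your last sync, which is usually fine for targeting.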

3. Are you dealing with actual scale or trying to optimize the wrong thing?

If yes to either, can you time-slice your logic?

Case in point: Starcraft (or any RTS game, for that matter, though Starcraft is a really nice example because it is extremely competitive and therefore has to run well on weak, potato-battery-class hardware) supports armies of up to 200 units (I'm rounding here) per team. In a minimal 1-on-1 game you can therefore have up to 200 zerglings or whatnot per team, all of which need to find their paths relatively intelligently on a map that may be fairly large. Things get much worse when you have 3, 4 or 8 teams.

Now, pathfinding is an inherently cache-unfriendly problem, which is why you cannot brute-force your way through this. You cannot take 200 zerglings and find paths for all of them in one timeslice (tick).

Therefore, any modern RTS game likely employs any number of tricks from path caching to multi-tier pathfinding to simplify this problem. But there is a simpler way: spread out your work load.

You can do this in two ways: laterally (on different threads) or temporally (timeslicing).

Does every object in your game fire at one or more enemies every tick? Probably (hopefully) not.

Here's where you could cheat! Unless you can substantially improve cache coherence (as suggested in points 1 and 2) or come up with a clever, completely different way to lay out your data, just ignore the cache issue and solve the problem architecturally.

Option A: create a task system and assume there's at least one other thread that can take 30-50% of the load off the main thread. This is actually fairly easy if you synchronize your data at regular intervals and work on thread-local data sets.
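A very small sketch of option A, using `std::async` as a stand-in for a real task system. `Shot`, `resolve_range` and `resolve_all` are hypothetical, and the input is assumed to be a read-only snapshot where `target_of[i]` is the target index of shooter `i` (or -1 for none).

```cpp
#include <cstddef>
#include <future>
#include <vector>

struct Shot {
    std::size_t shooter;
    std::size_t target;
};

// Resolves shots for shooters in [begin, end) into a thread-local result list.
// Only reads the shared snapshot, so no locking is needed.
std::vector<Shot> resolve_range(const std::vector<int>& target_of,
                                std::size_t begin, std::size_t end) {
    std::vector<Shot> local;
    for (std::size_t i = begin; i < end; ++i) {
        if (target_of[i] >= 0) {
            local.push_back({i, static_cast<std::size_t>(target_of[i])});
        }
    }
    return local;
}

// Splits the work in half: the worker thread takes the upper half,
// the calling thread takes the lower half, and results merge at the sync point.
std::vector<Shot> resolve_all(const std::vector<int>& target_of) {
    const std::size_t mid = target_of.size() / 2;
    auto worker = std::async(std::launch::async, [&target_of, mid] {
        return resolve_range(target_of, mid, target_of.size());
    });
    std::vector<Shot> shots = resolve_range(target_of, 0, mid);
    std::vector<Shot> rest = worker.get();  // sync point: wait for the worker
    shots.insert(shots.end(), rest.begin(), rest.end());
    return shots;
}
```

A real engine would reuse a thread pool rather than spawn a task per tick, but the shape is the same: read-only shared input, thread-local output, one merge.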

Or, better yet, option B: place your firing squad in a priority queue. Assume that every tick you have up to N milliseconds to spend on logic and work through that queue as you do right now. When you run out of time, simply finish the tick and keep processing the queue during the next tick. Unless you fill up a gigantic queue (we're talking millions of objects firing at any number of things) that brings a modern CPU core to its knees for more than a full second, all you'll see are very slight potential delays before a shot is fired here or there. Chances are that in a real world scenario, parts of your unit logic "lag" a bit but that remains unnoticeable to the player since, hopefully, they're busy, you know, playing.
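Here is roughly how option B could look, as a sketch rather than a drop-in solution. `FireRequest`, `FireQueue` and `process_tick` are hypothetical names, the `fire_at` callback stands in for whatever your real `fire_at()` does, and the budget is whatever slice of the tick you decide to give to this logic.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <vector>

struct FireRequest {
    int priority;              // higher value fires first
    std::uint32_t shooter_id;
    std::uint32_t target_id;
};

// Orders the queue so the highest-priority request is on top.
struct LowerPriority {
    bool operator()(const FireRequest& a, const FireRequest& b) const {
        return a.priority < b.priority;
    }
};

using FireQueue =
    std::priority_queue<FireRequest, std::vector<FireRequest>, LowerPriority>;

// Processes requests until the queue is empty or the time budget is used up.
// Whatever is left simply stays queued for the next tick.
// Returns the number of requests handled during this call.
template <typename FireFn>
std::size_t process_tick(FireQueue& queue,
                         std::chrono::microseconds budget,
                         FireFn fire_at) {
    using clock = std::chrono::steady_clock;
    const auto deadline = clock::now() + budget;
    std::size_t processed = 0;
    while (!queue.empty() && clock::now() < deadline) {
        const FireRequest request = queue.top();
        queue.pop();
        fire_at(request);
        ++processed;
    }
    return processed;
}
```

Calling `clock::now()` per request is not free, so if each shot is cheap you may want to check the deadline only every few dozen requests. You would probably also bump the priority of requests that have been waiting for a while, so nothing starves.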