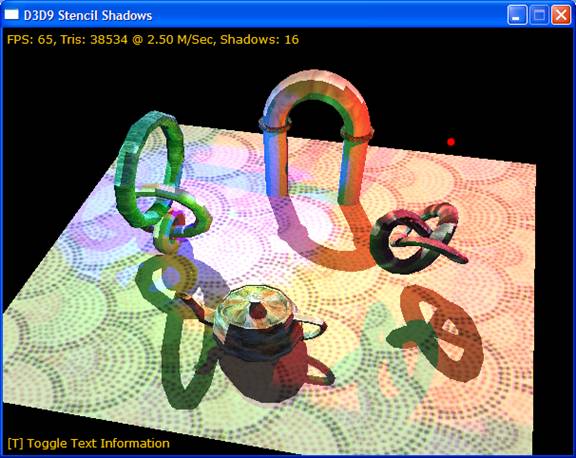

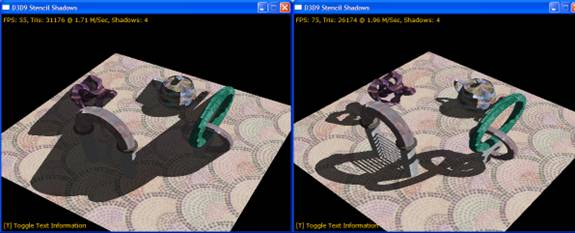

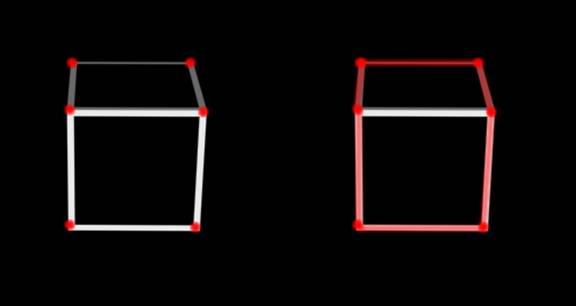

The image above is a screenshot from the sample program. It shows 4 shadow-casting objects and 4 dynamic lights (plus 1 ambient), running at approximately 65 fps on a Pentium-4 3.06 GHz / Radeon-9800 Pro.

Notice how the shadows from different lights/objects interact and overlap each other.

Overview

Algorithm: Depth-pass stencil shadow volume rendering

Target Audience: Intermediate to advanced graphics programmers

Experience/Skill: Experience with Direct3D essential

Target Platform: Microsoft Direct3D 9.0 / Visual C++ 7

Introduction

Welcome to this article on shadow rendering in Direct3D 9.0; shadow rendering is not a particularly new effect - real time applications have been implementing various forms of shadows for many years now. However, it is only in the last couple of years that it has been possible (at reasonable speeds) on the majority of commercially available hardware. You can now expect to implement good quality shadows as a standard feature, and not just a fancy special effect.

Shadows are one of the most important light effects - far more so than fancy reflectance and BRDF models. The reason for this is that our brain makes great use of shadows to aid our spatial awareness. For example, if an object is hovering very slightly above a flat plane the addition of a shadow will aid greatly in identifying this. Also, as well as being useful for realism, they do look pretty cool - and you will see many of the next generation games creating incredible atmospheres using shadows and lights (think Doom-3, Half-Life 2 and Deus-Ex: The Invisible War).

The purpose of this article is to provide a solid example of "Depth-pass shadow volume rendering with multiple light sources". You'll see, as this article progresses, that rendering with one light source is very easy by comparison to rendering with 4 dynamic lights. You'll find lots and lots of examples online that cover the implementation of one light (there has been a good example in the last 3 versions of the DirectX SDK), but I aim to take it one step further.

The other important aim of this example is to primarily cover the implementation, and not get too bogged down in graphics theory. Theory will be discussed as and when it's needed, but for a deeper discussion you may wish to read one or more of the references.

The results of this article will be a working example with several shadow casting objects and up to 5 light sources dynamically moving each frame. Using the code in this article/download you should be able to implement the technique in your own applications. Whilst the article is aimed at Direct3D 9.0 hardware you could, with not much work, get it working under Direct3D 8.0.

Introduction to shadow volumes

What is a shadow volume? This is obviously a crucial question; luckily the answer is remarkably simple. The volume part refers to a "piece" of 3D space that is somehow identified as being different from the rest, much like you have "area" marking different sections of 2D space (e.g. a box drawn on a piece of paper). The shadow part refers to whether a certain point is inside or outside of a shadow (by the very nature of light, a point is either in or out of shadow - there is no in between). Combine these two facts and you have a shadow volume defined as a piece of 3D space identified as being a shadow.

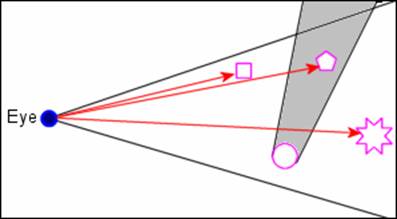

See the following diagram:

On the left of the diagram above, we see the shadow volumes. On the right we see just the final result. In the case of the LHS, any geometry that intersects (or is totally inside) the darkened areas will be shadowed.

When we use this in a real-time scenario we can almost think of it as a very simple ray tracer. For every pixel that we draw to the screen we check whether it is in a shadowed area by comparing it to a shadow volume. The ray tracing takes the form of a line from the camera to the pixel, which is tested for entering and exiting a shadow volume. Technically speaking it isn't using conventional ray-tracing algorithms, but I find it a useful analogy to help explain the idea to people.

This next diagram shows how the ray-tracing aspect works. Take the blue circle to be where the camera is; the black lines coming from it indicate the edges of the view frustum (we only see what is between these two lines). The magenta shapes indicate potential geometry to be rendered (we'll only consider this in 2D for now, 3D gets too difficult for diagrams). The magenta circle is casting a grey shadow across the scene - this is the shadow volume. In simplistic terms, when we render a pixel to the screen it can be traced back as a ray to a tiny part of an object in the scene - and this is where my ray tracing analogy comes from. If we draw a line (3 in this case) from the camera to each object we can tell if it intersects the grey area (shadow). More importantly we can determine how many "sides" it intersects. If it intersects one side of the volume it has entered shadow; if it intersects two sides it has entered and then left the shadow. These cases correspond to in shadow and out of shadow respectively. In the diagram, the line to the square crosses 0 sides of the volume, and is not in shadow; the line to the pentagon crosses 1 side of the volume so it's in shadow. The line to the final object, a star, crosses 2 sides of the shadow (enters the volume and leaves it) so it is deemed as out of the shadow.
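The side-counting idea can be sketched in a few lines of plain C++. This is only a per-ray model of what the stencil buffer ends up doing, not code from the sample program; the `VolumeFace` type and both function names are made up for illustration. It assumes each ray is described by the shadow volume sides it crosses, and that only sides nearer to the camera than the visible surface are counted.

```cpp
#include <vector>

// One crossing of the view ray with a side of a shadow volume (hypothetical type).
struct VolumeFace {
    float depth;        // distance from the camera along the ray
    bool  frontFacing;  // true: the ray enters the volume, false: it leaves
};

// Depth-pass counting: only sides in front of the visible surface are counted.
// Entering a volume adds one, leaving it subtracts one.
int depthPassCount(const std::vector<VolumeFace>& faces, float surfaceDepth) {
    int count = 0;
    for (const VolumeFace& f : faces) {
        if (f.depth < surfaceDepth) {
            count += f.frontFacing ? 1 : -1;
        }
    }
    return count;
}

// A non-zero count means the ray is still inside at least one volume
// when it reaches the surface, so the surface is in shadow.
bool inShadow(const std::vector<VolumeFace>& faces, float surfaceDepth) {
    return depthPassCount(faces, surfaceDepth) != 0;
}
```

With a volume whose sides sit at depths 5 and 10, a surface at depth 7 is reported as shadowed (one side crossed, like the pentagon) and a surface at depth 12 as lit (two sides crossed, like the star).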

Shadow volume rendering is quite a fashionable algorithm currently; many of the graphically intensive games due to be released towards the end of 2003 will be using this technique. However, if you monitor the more advanced graphics programmers and the work they publish you will find that they have already moved on. Various clever techniques are becoming increasingly popular that give better and better results.

There are quite a few limitations to shadow volumes. In many respects they are the medium level of shadow rendering - they aren't the fastest (planar shadows generally are) and they don't look the best (soft shadowing/projective shadows generally look better). However, for the speed and features you get, they are probably the most practical to implement currently.

An overview of the main limitations:

1. Hardware Stencil Buffering

As you'll see later on, the application requires the use of a stencil buffer. The majority of hardware since the GeForce / Radeon generation will have reasonable support, but you can still find a few obscure chipsets without it. More importantly, you need as many bits for the stencil buffer as you can get; you may be able to work with a 1-bit stencil, but ideally you want either a 4 or an 8 bit stencil buffer. Support for 8-bit stencils has only been common in the more recent hardware.

2. Bandwidth Intensive

The algorithm will chew up as much graphics card bandwidth as you can throw at it, especially when it comes to rendering with multiple light sources. Fill rate in particular is very heavily used, with a possible n+1 overdraw (where n is the number of lights). There are a few tricks you can use to reduce the trouble this causes.

3. No Soft Shadowing

The mathematical nature of a shadow volume dictates that there are no intermediate values - it's a Boolean operation - pixels are either in shadow or they aren't. Therefore you can often see distinct aliased lines around shadows. Apart from relying on anti-aliasing, the main way to get soft shadowing is to use multiple additional lights and get a "jittered" sample for the shadowed region; this can be computationally expensive.

4. Complicated for Multiple Light Sources

The majority of real-time scenes have several lights (4-5) enabled at any one point in time. Whilst with this technique there is no limit to the number of lights (even if the device caps indicate a fixed number), the more lights that are enabled the slower the system goes. The two factors to watch are geometric complexity (and number of meshes) and light count; the best systems will use an algorithm to select only the most important lights, and only the affected geometry.

5. Problems When the Camera Intersects the Shadow Volume

There are quite serious issues with camera movement when using depth-pass shadow volume rendering. When the camera is positioned inside a shadow volume you will get noticeable artefacts appearing on-screen. There aren't any good solutions to this problem apart from switching to another, similar, rendering algorithm: Depth-Fail. A combination of depth-fail rendering, shadow volume capping and projection tweaks allows for a robust shadow rendering procedure. The only trade-off is that it is more complicated to implement, and marginally slower. This article focuses on depth-pass rendering; for depth-fail examples/information you'll have to check out the references and/or look around online.
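To see why the camera position matters, here is a rough per-ray model of the two counting schemes in plain C++. It is an illustration under simplifying assumptions, not the sample's code: the `Crossing` type and function names are hypothetical, the volume is assumed to be closed (capped), and sides behind the camera are assumed never to reach the rasteriser. Signed integers stand in for the stencil value.

```cpp
#include <vector>

// A shadow volume side crossed by the view ray (hypothetical type).
struct Crossing {
    float depth;        // distance from the camera along the ray
    bool  frontFacing;  // true: entering the volume, false: leaving it
};

// Depth-pass: count sides nearer than the visible surface (front +1, back -1).
int countDepthPass(const std::vector<Crossing>& sides, float surfaceDepth) {
    int count = 0;
    for (const Crossing& c : sides) {
        if (c.depth < surfaceDepth) count += c.frontFacing ? 1 : -1;
    }
    return count;
}

// Depth-fail: count sides at or behind the visible surface (back +1, front -1).
int countDepthFail(const std::vector<Crossing>& sides, float surfaceDepth) {
    int count = 0;
    for (const Crossing& c : sides) {
        if (c.depth >= surfaceDepth) count += c.frontFacing ? -1 : 1;
    }
    return count;
}
```

Suppose the camera sits inside a volume, so the only side it can see is the back side at depth 10. For a surface at depth 7 (really in shadow) the depth-pass count is 0, which reads as lit, while the depth-fail count is 1. For a surface at depth 12 (really lit) the depth-pass count is -1, which reads as shadowed once it is non-zero in the stencil buffer, while the depth-fail count is 0.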

As hinted at earlier, several other techniques exist to render shadows in a 3D scene. Covering them here in any detail would take far too long - have a look at the references section if you want some more detail.

The easiest shadow technique is known as "planar shadows" where the casting geometry is flattened onto a plane using some clever matrix maths. The result is a very simple shadow, only particularly good for drop shadows. It has been extensively used in racing games, as the road can often be thought of as flat.

A more advanced technique is to use shadow-maps, and project them onto the scene as a texture stage. This gets quite complicated and can rely on extremely large render targets (1024x1024 and above) for good results. When combined with several clever shading and blending tricks it is possible to get very impressive results with this method.

Back to shadow volumes: in order for the algorithm to give acceptable results there are several requirements. Firstly, the hardware must support stencil buffers (preferably with 4 or 8 bits of precision); this isn't too hard to find on modern hardware. The second requirement is a little less obvious but important nonetheless: the geometry casting shadows must be of a medium to high tessellation. At low tessellations the shadow volume generation doesn't always give correct results (look at the teapot mesh at low detail in this article's sample program), and the errors are usually very obvious to the user. However, at high tessellations it can be quite demanding on the processing power. The third requirement is that you don't use any clever tessellation methods - in particular high-order primitives and displacement mapping. Because these are calculated on the GPU it is very difficult to calculate the correct silhouette to use; methods do exist, but they are prohibitively slow.

How to create a shadow volume

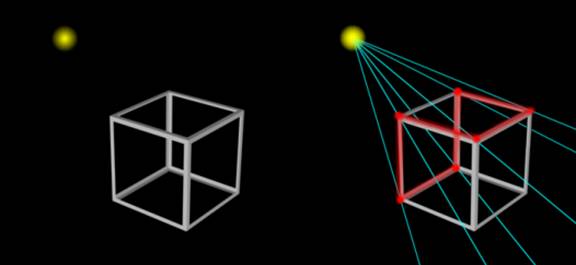

The algorithm behind creating a shadow volume is actually extremely simple; its cost comes from the potentially large amount of data it has to process while generating the volume. Take the following image:

The left-hand image shows the original source mesh, along with the position of the light; this is all the information we have available to us. In the right-hand image we see the same mesh, but with a shadow volume extruded. Basically, we pick the edges highlighted in red, and extrude them some distance beyond the mesh.

The key point to appreciate is that when you view the scene from the light's point of view (looking at the mesh) you should see no shadow. When you view the mesh this way it should be fairly easy to tell where the edge of the mesh is - the silhouette of the object. Take the following illustration:

Notice in the above illustration that the highlighted edges correspond with distinct edges and vertices on the mesh. All we need from our algorithm is a way to identify these edges and we'll be in business.

If we think about the basic geometry maths - vectors and matrices - we will often come up with the Dot Product of two vectors. In this particular case it is the key equation for identifying the silhouette edges. If we take the normal of the triangle that the edge belongs to (process the edge twice if it belongs to two triangles) and 'dot' it with the vector from the vertex to the light, we get a value proportional to the cosine of the angle between them (exactly the cosine if both vectors are normalised). If this value is less than or equal to 0.0 then the triangle is facing away from the light, and its edges become candidates for the silhouette. An edge shared by two such triangles is interior and can be discarded; the edges that remain form the silhouette.

Once we've collected a list of silhouette edges, which can also be thought of as the start or "top" of the shadow volume, we need to extrude them. It is this process that actually gives us the geometry we can use to calculate where shadows lie in our scene. This is done by taking the vertex at each end of the edge and subtracting a multiple of the vertex-to-light vector. This has the effect of extruding it into the distance - preferably a long way into the distance.

As an overview:

1. For every triangle in the mesh calculate its normal

2. Calculate the dot-product of this normal with the vertex-to-light vector

3. IF the dot-product is negative or zero we add the edges to a list

4. Where possible remove duplicate edges and/or "interior" edges

5. When we have a list of silhouette edges we extrude them some distance.

6. We store these triangles in a vertex buffer.

As an important note, this is just a programming algorithm - it can be implemented in almost any programming language, for almost any graphics platform / API.
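The six steps above can be sketched in portable C++ as follows. This is a minimal illustration, not the sample program's code: the `Vec3` type, the helper functions and the names `findSilhouetteEdges` and `extrudeEdges` are all made up for the example. It assumes an indexed triangle list whose normals, computed as cross(v1 - v0, v2 - v0), point outwards, and it does not check the winding of the output triangles, which a real renderer needs for the culling-based stencil passes.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

using Edge = std::pair<unsigned, unsigned>;  // two vertex indices

// Step 4: an edge seen twice is shared by two triangles facing away from
// the light, so it is interior and both copies are dropped.
void addOrCancelEdge(std::vector<Edge>& edges, unsigned a, unsigned b) {
    for (std::size_t i = 0; i < edges.size(); ++i) {
        if ((edges[i].first == a && edges[i].second == b) ||
            (edges[i].first == b && edges[i].second == a)) {
            edges[i] = edges.back();
            edges.pop_back();
            return;
        }
    }
    edges.push_back({a, b});
}

// Steps 1-4: collect the edges of every triangle facing away from the light.
std::vector<Edge> findSilhouetteEdges(const std::vector<Vec3>& verts,
                                      const std::vector<unsigned>& indices,
                                      Vec3 lightPos) {
    std::vector<Edge> edges;
    for (std::size_t i = 0; i + 2 < indices.size(); i += 3) {
        unsigned i0 = indices[i], i1 = indices[i + 1], i2 = indices[i + 2];
        Vec3 normal = cross(sub(verts[i1], verts[i0]), sub(verts[i2], verts[i0]));
        Vec3 toLight = sub(lightPos, verts[i0]);
        if (dot(normal, toLight) <= 0.0f) {
            addOrCancelEdge(edges, i0, i1);
            addOrCancelEdge(edges, i1, i2);
            addOrCancelEdge(edges, i2, i0);
        }
    }
    return edges;
}

// Steps 5-6: turn each edge into a quad (two triangles) by subtracting a
// multiple of the vertex-to-light vector from each end of the edge.
std::vector<Vec3> extrudeEdges(const std::vector<Vec3>& verts,
                               const std::vector<Edge>& edges,
                               Vec3 lightPos, float multiple) {
    assert(multiple > 0.0f);
    std::vector<Vec3> out;
    out.reserve(edges.size() * 6);
    for (const Edge& e : edges) {
        Vec3 v0 = verts[e.first], v1 = verts[e.second];
        Vec3 l0 = sub(lightPos, v0), l1 = sub(lightPos, v1);
        Vec3 f0 = {v0.x - l0.x * multiple, v0.y - l0.y * multiple, v0.z - l0.z * multiple};
        Vec3 f1 = {v1.x - l1.x * multiple, v1.y - l1.y * multiple, v1.z - l1.z * multiple};
        out.push_back(v0); out.push_back(v1); out.push_back(f0);
        out.push_back(v1); out.push_back(f1); out.push_back(f0);
    }
    return out;
}
```

The returned vertex list is what would be copied into a vertex buffer in step 6. The linear search in `addOrCancelEdge` keeps the sketch short; a large mesh would want a faster lookup.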

There are various optimizations that can be implemented in this algorithm, some more complicated than others.

The most obvious optimization to make is that of caching regularly used data. In the code I wrote for this article, the mesh data never actually changes - so there is no point in identifying all the edges (locking buffers, processing all vertices/triangles etc...) each frame / update. Instead, it makes sense to create a list of edges that is updated only if/when the mesh data is altered. We can then run through this array on each frame, rather than mess about with Direct3D vertex buffers and waste time calculating another 2000 normals.

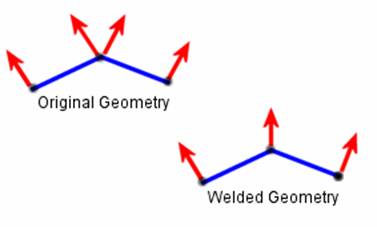

A slightly modified version of caching the data is to reduce the amount of data cached. During testing I changed the algorithm to weld edges where the two normals were within 30° of each other. Given that an awful lot of meshes have quite highly tessellated yet smooth surfaces you may not need to include every single edge. My testing found that I could knock 20-30% of the edges off my list with this technique - with minimal effect on the shadow volume generated.
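The angle test behind this kind of welding can be sketched as below. This is a guess at the shape of such a check rather than the code used in the sample; `Vec3` and `normalsWithinAngle` are hypothetical names. It compares the cosine of the angle between the two face normals against the cosine of the threshold, which avoids calling an inverse trigonometric function for every edge.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Returns true when the angle between two face normals is no larger than
// maxAngleDegrees. The normals do not need to be unit length.
bool normalsWithinAngle(Vec3 a, Vec3 b, float maxAngleDegrees) {
    const float kPi = 3.14159265f;
    float lenA = std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z);
    float lenB = std::sqrt(b.x * b.x + b.y * b.y + b.z * b.z);
    if (lenA == 0.0f || lenB == 0.0f) {
        return false;  // degenerate triangle: keep the edge to be safe
    }
    float cosAngle = (a.x * b.x + a.y * b.y + a.z * b.z) / (lenA * lenB);
    return cosAngle >= std::cos(maxAngleDegrees * kPi / 180.0f);
}
```

An edge whose two neighbouring triangles pass `normalsWithinAngle(n1, n2, 30.0f)` would be a candidate for leaving out of the cached edge list.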

The final optimization I wish to discuss is that of vertex shaders. If you are using a programmable architecture (I chose not to for this sample) then you could develop a vertex shader to extrude shadows on the GPU. There are a few inefficiencies in doing this (multiple extrusions required); however, the speed of GPUs almost eliminates these side effects. Discussing vertex shader based extrusion is a bit too lengthy for this article, so you'll have to look at the references and/or search for some different articles.

The rendering process

Now we need to cover the more interesting aspects of shadow rendering - the actual rendering process! I'm going to dive straight in with this - you may wish to look through the DirectX-SDK shadow volume sample to get familiar with 1-light shadowing, although this isn't required.

It is crucial to this algorithm that you understand that the shadow rendering CANNOT be done all in one go. By that I mean that it is a built up process of rendering the correct geometry in the correct way - stage by stage. Due to this fact, it may well be worth investing some time in pre-computing regularly used data. As you'll see a bit later on, the geometry may well be transformed and rendered several times each frame - therefore any complex animation system might have a much heavier impact on the frame rate. The solution would be to animate the mesh once at the start of the frame, and then render from this static mesh.

The following is an overview of how the algorithm will work:

1. Clear colour, depth and stencil buffers

2. Disable all forms of lighting

3. Disable texturing and all shaders

4. Disable writing to the colour buffer and stencil buffer

5. Render the ENTIRE scene

6. Enable ambient light only

7. Enable all texturing and shading

8. Configure the colour buffer for additive rendering

9. Disable Z-writing (Z-testing remains on)

10. Render the ENTIRE scene

11. Disable ambient lighting

12. For each light in the scene

a. Clear the stencil buffer

b. Disable colour buffer rendering

c. Disable texturing and lighting

d. Configure the stencil buffer for first pass

e. Render shadow geometry with CCW culling

f. Configure the stencil buffer for second pass

g. Render shadow geometry with CW culling

h. Disable all lights EXCEPT the current light

i. Enable texturing and shading

j. Configure colour buffer for additive rendering

k. Render all geometry influenced by the current light

[Frame Ends]

The above may look complicated, and in truth it is - however as soon as you understand the general idea of a single pass it's only a case of doing the same thing over-and-over again.

The above steps have been divided into 3 main sections; this is deliberate as it helps to split apart the different sections.

Lines 2 - 5 are often referred to as the "Z-Fill Pass", that is, we fill the Z-buffer with a correct representation of the scene. Notice that for the rest of the algorithm we just test against this but never actually write to it again. This is one of a few bandwidth-saving tricks.

Lines 6 - 10 are for ambient lighting; obviously these can be skipped if you don't want to use ambient lighting for the current frame/world. The reason this light source is separated from the others is that ambient lights can't cast shadows - so we don't need to complicate issues by including shadow volumes/stencil operations.

Lines 11 - 12 (inc. sub-parts of 12) are the real meat of the algorithm - this is where you will spend the majority of your execution time. I will discuss this in more detail shortly, but for now just think of it like this: we render each pass to be the contribution by the selected light, but certain areas will be masked off as shadowed (no contribution from the selected light).

Z-Fill Rendering Pass

The following code is taken from the sample program, and represents the Z-Fill stage of the rendering process.

//colour buffer OFF

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, TRUE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ZERO );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ZERO );

//lighting OFF

pDev->SetRenderState( D3DRS_LIGHTING, FALSE );

//depth buffer ON (write+test)

pDev->SetRenderState( D3DRS_ZENABLE, TRUE );

pDev->SetRenderState( D3DRS_ZWRITEENABLE, TRUE );

//stencil buffer OFF

pDev->SetRenderState( D3DRS_STENCILENABLE, FALSE );

//FIRST PASS: render scene to the depth buffer

for ( int i = 0; i < 8; i++ ) {

mshCaster[i]->Render( pDev );

}

It's actually a very trivial piece of code, and just relies on a good knowledge of the available render state configurations. The render state changes are basically there to stop the scene rendering affecting anything other than the depth buffer.

The reason for doing this stage is twofold - firstly, we need the Z-data when it comes to rendering the shadow volumes, and secondly it greatly reduces overdraw later on. A crude approach to this technique would be to re-fill the depth buffer for each light-pass, but that would be rather pointless.

Overdraw is a big issue in current real-time environments where complex shaders and texture stages are routinely used; most modern hardware won't process the texturing/pixel-shading stage if the pixel fails the Z-test (that is, it is behind other pixels). Because all passes in this algorithm can Z-test against a properly filled depth buffer we can hope that the GPU will reject a reasonable percentage of pixels without wasting time on any complex texturing effects.

Ambient Lighting Pass

As far as rendering steps that actually affect the final result, this is the simplest.

//colour buffer ON

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, TRUE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ONE );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ONE );

//ambient lighting ON

pDev->SetRenderState( D3DRS_LIGHTING, TRUE );

pDev->SetRenderState( D3DRS_AMBIENT, AMB_LIGHT );

pDev->LightEnable( 0, FALSE );

pDev->LightEnable( 1, FALSE );

pDev->LightEnable( 2, FALSE );

pDev->LightEnable( 3, FALSE );

//SECOND PASS: render scene to the colour buffer

for ( int i = 0; i < 8; i++ ) {

mshCaster[i]->Render( pDev );

}

Ambient light is an interesting issue in that it can be thought of as a light source - yet it doesn't follow the same rules (or syntax) as the majority of lights that you'll be using. Also, due to its nature it doesn't cast any shadows. Therefore it needs to be separated from the rest of the lighting passes.

By the time we get to this stage of the rendering process the colour buffer and stencil buffer should be empty (black and '0' respectively), yet the depth buffer should have a perfect image of our scene. When we render our entire scene for the second time we should only need to render the pixels that are actually visible (that is, there should be no, or minimal, overdraw). The pixels rendered should be very fast - with no other lighting the calculations are only held back by any texture combinations being used. Some of the more elaborate shading effects could be skipped for this stage - bump mapping for example has little effect when using only an ambient light.

Each Light Rendering Pass

Introduction

int iSVolIdx = 0;

for ( int i = 0; i < iLightCount; i ++ ) {

//if enabled && casting shadows

if ( lList[i]->bEnabled && lList[i]->bCastsShadows ) {

//clear stencil buffer

pDev->Clear( 0, NULL, D3DCLEAR_STENCIL, D3DCOLOR_XRGB(0,0,0), 1.0f, 0 );

//turn OFF colour buffer

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, TRUE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ZERO );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ONE );

//disable lighting (not needed for stencil writes!)

pDev->SetRenderState( D3DRS_LIGHTING, FALSE );

//turn ON stencil buffer

pDev->SetRenderState( D3DRS_STENCILENABLE, TRUE );

pDev->SetRenderState( D3DRS_STENCILFUNC, D3DCMP_ALWAYS );

//render shadow volume

//OPTIMISED: use support for 2-sided stencil

if ( b2SidedStencils ) {

//USE THE LATEST 1-PASS METHOD

//configure the necessary render states

pDev->SetRenderState( D3DRS_STENCILPASS, D3DSTENCILOP_INCR );

pDev->SetRenderState( D3DRS_CULLMODE, D3DCULL_NONE );

pDev->SetRenderState( D3DRS_TWOSIDEDSTENCILMODE, TRUE );

//render the geometry once to the stencil buffer

for ( int j = iSVolIdx; j < ( iSVolIdx + 4 ); j++ ) {

pVols[j]->Render( pDev );

}

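//reset any necessary states

pDev->SetRenderState( D3DRS_TWOSIDEDSTENCILMODE, FALSE );

//restore normal culling for the scene pass (as the 2-pass branch does)

pDev->SetRenderState( D3DRS_CULLMODE, D3DCULL_CCW );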

} else {

//USE THE TRADITIONAL 2-PASS METHOD

//set stencil to increment

pDev->SetRenderState( D3DRS_STENCILPASS, D3DSTENCILOP_INCR );

//render front faces

pDev->SetRenderState( D3DRS_CULLMODE, D3DCULL_CCW );

for ( int j = iSVolIdx; j < ( iSVolIdx + 4 ); j++ ) {

pVols[j]->Render( pDev );

}

//set stencil to decrement

pDev->SetRenderState( D3DRS_STENCILPASS, D3DSTENCILOP_DECR );

//render back faces

pDev->SetRenderState( D3DRS_CULLMODE, D3DCULL_CW );

for ( int j = iSVolIdx; j < ( iSVolIdx + 4 ); j++ ) {

pVols[j]->Render( pDev );

}

pDev->SetRenderState( D3DRS_CULLMODE, D3DCULL_CCW );

}

//Increment shadow volume index, slightly hack-ish but it'll do.

//basically, there is no formula for the idx into pVols[] so we

//just have to keep on counting...

iSVolIdx += 4;

//alter stencil buffer

pDev->SetRenderState( D3DRS_STENCILFUNC, D3DCMP_GREATER );

pDev->SetRenderState( D3DRS_STENCILPASS, D3DSTENCILOP_KEEP );

//turn on CURRENT light, turn off all others

pDev->SetRenderState( D3DRS_LIGHTING, TRUE );

pDev->SetRenderState( D3DRS_AMBIENT, D3DCOLOR_XRGB(0,0,0) );

pDev->LightEnable( 0, i == 0 ? TRUE : FALSE );

pDev->LightEnable( 1, i == 1 ? TRUE : FALSE );

pDev->LightEnable( 2, i == 2 ? TRUE : FALSE );

pDev->LightEnable( 3, i == 3 ? TRUE : FALSE );

//turn ON colour buffer

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, TRUE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ONE );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ONE );

//render scene

for ( int k = 0; k < 8; k++ ) {

mshCaster[k]->Render( pDev );

}

//reset any necessary render states

pDev->SetRenderState( D3DRS_STENCILENABLE, FALSE );

} //if(enabled&&caster)

} //for(each light)

The code above looks far more complicated than it actually is. Unfortunately, because it was cut and pasted from the sample program, it refers to a few variables and objects that won't make any sense out of context. For this reason I strongly suggest that you download the sample program.

The core of the above code is a simple For( ) loop, one that goes through each light source in the "world"; it is this For( ) loop you may want to alter if you implement some of the optimizations discussed later on. Inside the For( ) loop is a simple If( ) block; this block contains all the code to render one pass, but it will only do this if the light is turned on and if it is set to cast shadows (a feature of the lights in the sample code is that you can turn off their shadow-casting ability).

Once we've reached the code for actually rendering the pass with shadows, it is simply a case of following the list of instructions outlined at the very start of this section. The only really important thing to note is that I've included the code for a 2-sided stencil operation; this is a new feature allowed by Direct3D9 (provided driver support exists) and effectively allows the elimination of a transform/render for the shadow volume(s).

The above code is optimised for render states, that is, I've removed any unnecessary state changes, and moved some standard calls to the initialisation section of the sample. If we look at the depth/stencil configuration as found in the initialise code:

pDev->SetRenderState( D3DRS_STENCILREF, 0x1 );

pDev->SetRenderState( D3DRS_STENCILMASK, 0xffffffff );

pDev->SetRenderState( D3DRS_STENCILWRITEMASK, 0xffffffff );

pDev->SetRenderState( D3DRS_CCW_STENCILFUNC, D3DCMP_ALWAYS );

pDev->SetRenderState( D3DRS_CCW_STENCILZFAIL, D3DSTENCILOP_KEEP );

pDev->SetRenderState( D3DRS_CCW_STENCILFAIL, D3DSTENCILOP_KEEP );

pDev->SetRenderState( D3DRS_CCW_STENCILPASS, D3DSTENCILOP_DECR );

b2SidedStencils = ( ( caps.StencilCaps & D3DSTENCILCAPS_TWOSIDED ) != 0 );

It is the _STENCILZFAIL line that is the key to realising why this technique is called depth-pass. It may seem odd that I'm talking about a "ZFAIL" render state with respect to a depth-pass name; however, if you think about it, the normal D3DRS_STENCILPASS state could be called D3DRS_STENCILZPASS. The bottom line is that in the above code we alter the stencil buffer on a _STENCILPASS but do nothing on a _STENCILZFAIL; a depth-fail algorithm would do the opposite.

Crucial Optimization Tips

I'm going to use this section to briefly round up a few optimization notes that you really should take the time to implement if you are going to make serious use of this technique.

I've found in my testing that the real key to good performance with this technique is to manage your resources well. I know this holds true for most 3D applications, but in this case we will be using the same/similar geometry over and over again on each frame. The number of active lights will often multiply any reduction of processing we can get.

I mentioned earlier in the article that caching animated meshes at the start of the frame (where possible) might be beneficial. The idea of caching geometry doesn't just have to apply here - it can be applied to both the construction of shadow volumes and the persistence of shadow volumes across frames (if the light doesn't move, why update the shadow volume?). If frame rate is particularly poor then a movement delta can be employed; if you calculate the speed at which the light and/or geometry is moving then you may choose to skip the re-calculation of shadow volumes (and wait until a noticeable change in geometry/lighting has occurred). The sample code has this as an option - update the shadow volumes only every n frames - and it works quite well. With the delay set to "2" (update every 3rd frame) you can gain 40fps without much of a loss in quality.
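A frame-skipping option of this kind can be as small as a counter. The class below is a hypothetical sketch, not the sample's implementation; the name `ShadowUpdateScheduler` and the meaning of its `framesToSkip` parameter (the number of frames left untouched between two updates) are assumptions made for the example.

```cpp
#include <cassert>

// Decides, once per frame, whether the shadow volumes should be rebuilt.
class ShadowUpdateScheduler {
public:
    explicit ShadowUpdateScheduler(int framesToSkip)
        : skip_(framesToSkip), remaining_(0) {
        assert(framesToSkip >= 0);
    }

    // Call once per frame. Returns true on the frames where the volumes
    // should be regenerated, and false on the frames in between.
    bool shouldUpdate() {
        if (remaining_ == 0) {
            remaining_ = skip_;
            return true;
        }
        --remaining_;
        return false;
    }

private:
    int skip_;       // frames to skip after each update
    int remaining_;  // frames still to skip before the next update
};
```

With `framesToSkip` set to 2 the volumes are rebuilt on the first frame and then on every third frame after it. A movement-delta check could sit alongside this and force an update whenever the light or the geometry has moved noticeably.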

The other really crucial optimization - one that far outweighs any other - is to render only the geometry that you must. This is an old adage really, and applies across the computer graphics spectrum; however with shadow rendering it requires a slightly different look at what geometry is rendered. Key points to note are:

1. Geometry off screen can cast shadows on-screen, such that view-frustum culling should be used carefully if you use it to select which shadow volumes are to be rendered.

2. A light can only cast shadows as far as it can illuminate. Thus there is no point generating a shadow volume for an object 200m away from a point light with a range of 100m.

3. Each light requires a whole rendering pass, so we only want to enable lights that will have a visible effect. This is actually very easy: comparing a sphere (point light) against the view-frustum planes is trivial, and we know that directional lights and ambient lights will almost always have some influence.
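Points 2 and 3 both come down to cheap sphere tests, sketched below in plain C++. The types and function names are made up for the example, and the plane convention is an assumption: each frustum plane has a unit-length normal pointing into the frustum, so a point p is inside when n.p + d >= 0. The frustum test is conservative and may keep a few spheres that sit just outside a corner.

```cpp
#include <array>

struct Vec3 { float x, y, z; };

// Plane in the form n.p + d = 0, with n unit length and pointing inwards.
struct Plane { Vec3 n; float d; };

// Point 3: does a point light's sphere of influence touch the view frustum?
bool sphereTouchesFrustum(const std::array<Plane, 6>& planes,
                          Vec3 centre, float radius) {
    for (const Plane& p : planes) {
        float dist = p.n.x * centre.x + p.n.y * centre.y + p.n.z * centre.z + p.d;
        if (dist < -radius) {
            return false;  // entirely on the outside of this plane
        }
    }
    return true;
}

// Point 2: can the light illuminate the object's bounding sphere at all?
// If not, there is no need to build a shadow volume for that object.
bool lightReaches(Vec3 lightPos, float lightRange,
                  Vec3 objectCentre, float objectRadius) {
    float dx = objectCentre.x - lightPos.x;
    float dy = objectCentre.y - lightPos.y;
    float dz = objectCentre.z - lightPos.z;
    float reach = lightRange + objectRadius;
    return dx * dx + dy * dy + dz * dz <= reach * reach;
}
```

For the case in point 2, an object with a 10m bounding sphere placed 200m from a point light with a range of 100m fails `lightReaches`, so its shadow volume is never generated.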

The final optimization tip to note can be quite powerful - with a few clever changes to the code you can eliminate the need for separate Z-Fill and Ambient lighting passes. The net result is that you save the transform time for an entire render of the scene. The following code shows how you do this:

if ( bAmbientLight ) {

//colour buffer ON

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, FALSE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ONE );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ONE );

//ambient lighting ON

pDev->SetRenderState( D3DRS_LIGHTING, TRUE );

pDev->SetRenderState( D3DRS_AMBIENT, AMB_LIGHT );

pDev->LightEnable( 0, FALSE );

pDev->LightEnable( 1, FALSE );

pDev->LightEnable( 2, FALSE );

pDev->LightEnable( 3, FALSE );

} else {

//colour buffer OFF

pDev->SetRenderState( D3DRS_ALPHABLENDENABLE, TRUE );

pDev->SetRenderState( D3DRS_SRCBLEND, D3DBLEND_ZERO );

pDev->SetRenderState( D3DRS_DESTBLEND, D3DBLEND_ZERO );

//lighting OFF

pDev->SetRenderState( D3DRS_LIGHTING, FALSE );

} //if(bAmbientLight)

//depth buffer ON (write+test)

pDev->SetRenderState( D3DRS_ZENABLE, TRUE );

pDev->SetRenderState( D3DRS_ZWRITEENABLE, TRUE );

//stencil buffer OFF

pDev->SetRenderState( D3DRS_STENCILENABLE, FALSE );

//FIRST PASS: render scene to the depth buffer

for ( int i = 0; i < 8; i++ ) {

mshCaster[i]->Render( pDev );

}

pDev->SetRenderState( D3DRS_ZWRITEENABLE, FALSE );

Combined with this tip, I also want to suggest looking at Direct3D state blocks for render states. Note that it's a fairly repetitive algorithm we use each frame, such that it may make good sense to use state blocks instead of the multiple SetRenderState( ) calls.

Considerations when using this technique

I've decided to separate this section from optimizations, as this is more of a conceptual view of the algorithm. The following things could possibly be optimised or have their efficiency improved but it's unlikely.

The most major consideration, and one that I've brushed over a couple of times now, is that the algorithm requires "n+1" passes - both with rasterization and transformation. In a well lit environment it is possible to have n+1 overdraw on the majority of pixels - meaning that your wonderful pixel shader or texture stage setup could hurt you many times more than it did when you didn't have shadows.

The lights in this sample program use additive blending - that is, if you put lots of lights in a scene you are likely to end up with a very bright final image. As a consequence you may wish to reduce the brightness of some lights, or even look at a different combiner (modulate instead of additive for example).
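The saturation effect is easy to see with a little arithmetic. The sketch below is a simplified model rather than code from the sample: it treats one colour channel as a float in the range 0 to 1, assumes the frame buffer clamps at 1.0 (as a standard 8-bit render target does), and uses the hypothetical names BlendAdditive, AccumulateLights and lightScale.

```cpp
#include <algorithm>
#include <cassert>

// Additive blend as performed with SRCBLEND = DESTBLEND = D3DBLEND_ONE.
// ASSUMPTION: the render target clamps each channel to 1.0.
float BlendAdditive( float dest, float src )
{
    return std::min( dest + src, 1.0f );
}

// Start from the ambient pass and add one contribution per light.
// lightScale is a hypothetical per-light brightness factor (1.0 = unchanged).
float AccumulateLights( float ambient, float perLight, int numLights, float lightScale )
{
    assert( numLights >= 0 );
    float result = ambient;
    for ( int i = 0; i < numLights; ++i ) {
        result = BlendAdditive( result, perLight * lightScale );
    }
    return result;
}
```

With an ambient term of 0.2 and four lights each contributing 0.5, AccumulateLights( 0.2f, 0.5f, 4, 1.0f ) clamps to full white, whereas scaling each light by 0.25 leaves the channel at roughly 0.7.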

Source code for this article

You can download the source code for this article by clicking on this link: here.

The source code should be fairly straightforward to follow. It was written with Visual C++ .Net (2002), but should compile fine with Visual C++ 6 and/or other compilers. The code is commented throughout, and there is a set of #defines at the top of each source module allowing you to customise various properties.

When you have the source code running, you can press "T" twice to get a list of the controls for the sample.

References

The following selection of references is by no means a definitive list, more of a list of those that I found useful when I did my research and wrote this article...

Books

Real-Time Rendering Tricks and Techniques in DirectX (ISBN: 1-931841-27-6), Kelly Dempski

I found this book to be a good overview of all algorithms at an applied level. Chapter 27 covers simple planar shadows, chapter 28 covers shadow volumes (as in this article) and chapter 29 covers shadow maps. An interesting thing to note is that the author's coverage of shadow volumes includes the use of vertex shader extrusion.

Real-Time Rendering, Second Edition (ISBN: 1-56881-182-9), Tomas Akenine-Möller and Eric Haines

This is one of those books that all serious graphics programmers seem to have, and whilst it has little applied content (and no samples), it is a great overview and all-round discussion of real-time computer graphics. Chapter 6, part 12 has a good overview of real-time shadow rendering research. Makes for good background reading.

Game Programming Gems (ISBN: 1-58450-049-2), Edited by Mark DeLoura

An excellent all-round book. Chapter 5.7 by Yossarian King covers planar shadows ("Ground-Plane Shadows"), and chapter 5.8 by Gabor Nagy covers projective shadows ("Real-Time Shadows on Complex Objects").

Game Programming Gems 2 (ISBN: 1-58450-054-9), Edited by Mark DeLoura

The sequel to the previously listed book is also very good and has another couple of articles on shadowing. Chapter 4.10, "Self Shadowing Characters", by Alex Vlachos, David Gosselin and Jason L. Mitchell (ATI Research) isn't the best article around, but might be interesting to some people. Chapter 5.6, "Practical Priority Buffer Shadows", by Sim Dietrich (Nvidia) introduces a more optimal way of rendering projective shadows (although the only hardware I know of that supports this is Nvidia's).

Game Programming Gems 3 (ISBN: 1-58450-233-9), Edited by Dante Treglia

The second sequel in the ever-popular series, this time featuring only one article on shadowing. Chapter 4.6, "Computing Optimized Shadow Volumes for Complex Data Sets", by Alex Vlachos and Drew Card (ATI Research) explains an algorithm for picking the correct triangles to render for projective shadow rendering - despite the name, it's not amazingly useful when using the technique explained in this article.

Websites

"The Theory of Stencil Shadow Volumes" by Hun Yen Kwoon

http://www.gamedev.net/columns/hardcore/shadowvolume/

"Cg Shadow Volumes" by Razvan Surdulescu

http://www.gamedev.net/columns/hardcore/cgshadow/

Nvidia's Robust Shadow Volumes paper (includes link to Carmack's Reverse)

http://developer.nvidia.com/object/robust_shadow_volumes.html

"Stencil Shadow Volumes with Shadow Extrusion using ASM or HLSL"

http://www.booyah.com/article04-dx9.html

"Z Pass to Z Fail - Capping shadow volumes" - An interesting topic if you wish to extend the code in this article

http://www.gamedev.net/community/forums/topic.asp?topic_id=179947

About the author

Jack Hoxley is currently studying for a BSc in Computer Science at the University of Nottingham, England, and has been interested in computer graphics for a long time now. Jack also runs (time permitting) www.DirectX4VB.com - a collection of over 100 tutorials regarding all aspects of Microsoft's DirectX API. He can be contacted via email: Jack.Hoxley@DirectX4VB.com or jjh02u@cs.nott.ac.uk.

---

1 People often make a big thing about so-called "soft shadows", where the edges of a shadow appear to blend smoothly between shadowed and lit. My statement that there is no in-between therefore sounds a little odd. The reasoning is actually very simple: soft shadows are a feature of (comparatively) very complicated global illumination systems - ones that factor in the reflection and transmission of light as energy (ray tracing and radiosity are good examples). If you calculate light as being able to reflect off surrounding surfaces, or to be emitted from area lights, you will get these blurred edges. At the time of writing this article, real-time dynamic global illumination is difficult if not impossible.