Hi all,

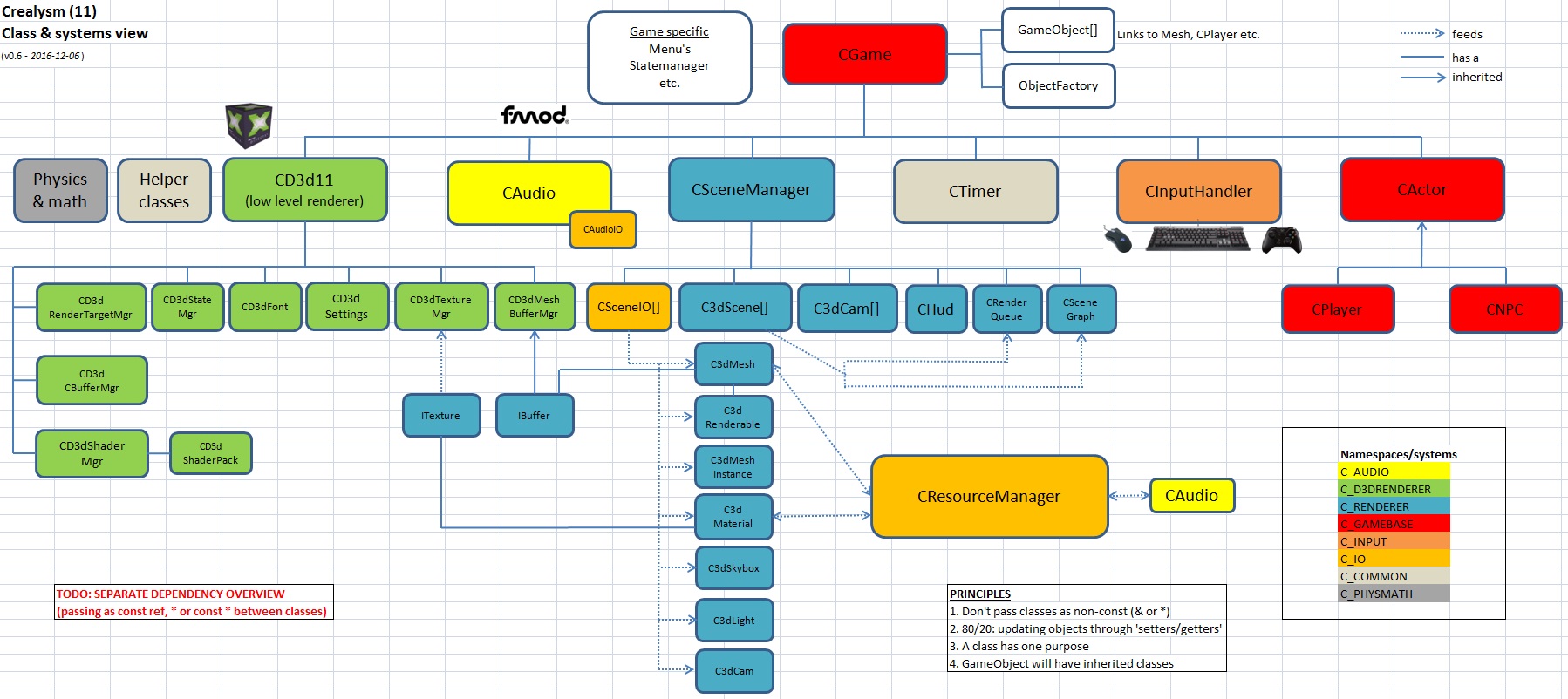

I'm struggling a bit with a design-related issue in my new D3D11 engine.

Here's the situation:

- I have a low level renderer (d3d11)

- meshes are stored in a mesh class

- there's an IBuffer class (interface), which basically links the API-independent mesh class to the D3D11 low-level renderer

(IBuffer contains the D3D11 buffer, used for vertices or indices)

So far I've thought of two options for bringing this together:

1. Give the mesh class two IBuffers as members, one for the vertex buffer and one for the index buffer

2. Let the low-level renderer have a meshbuffer manager, which stores all the IBuffers.

In this case, the manager returns an ID/handle for each buffer, which is stored in the mesh object (mVtxBufferId and mIndexBufferId)

Both solutions come with advantages and disadvantages.

I'm leaning towards option 1, but there I run into a practical problem:

- the IBuffer class has a templated 'Create' function, which takes a const pointer to the data (can be either vertices or indices, so uints or some struct)

(because Create is a template, I cannot create the buffer through the constructor)

- the Create function also takes the D3D11 device pointer (needed for creation)
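To make the shape of that 'Create' function concrete, here is a minimal sketch. It is not my actual code: `Device` is a hypothetical stand-in for the D3D11 device, the `count` parameter is an assumption (the element count has to come from somewhere), and the "buffer" is just a byte vector, so the sketch compiles without the D3D11 headers. The template only works out the element size and forwards to a non-template function taking a `const void*`.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical stand-in for ID3D11Device, so the sketch builds anywhere.
struct Device {};

// Simplified stand-in for the IBuffer class described above.
class IBuffer {
public:
    // Templated entry point: accepts vertices, indices or any other POD type.
    // 'count' is the number of elements, not the number of bytes.
    template <typename T>
    bool Create(Device* device, const T* data, std::size_t count) {
        if (device == nullptr || data == nullptr || count == 0) {
            return false;
        }
        return CreateRaw(device, data, count * sizeof(T), sizeof(T));
    }

    std::size_t ByteSize() const { return mBytes.size(); }
    std::size_t Stride() const { return mStride; }

private:
    // Non-template worker. In the real renderer this is where the call to
    // ID3D11Device::CreateBuffer would go; here the data is only copied.
    bool CreateRaw(Device* /*device*/, const void* data,
                   std::size_t byteSize, std::size_t stride) {
        mBytes.resize(byteSize);
        std::memcpy(mBytes.data(), data, byteSize);
        mStride = stride;
        return true;
    }

    std::vector<unsigned char> mBytes;  // placeholder for the GPU buffer
    std::size_t mStride = 0;            // size of one element in bytes
};
```

With this shape, only the thin template wrapper knows the element type; everything that touches the device sits behind the `const void*` overload.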

I could solve this by one of these options:

1. Make the IBuffers in the Mesh public, so I can call the Create function from outside the mesh class

(keeping the mesh API independent, otherwise I would need to make a CreateBuffers function in the API independent mesh class, taking a D3D11 device ptr)

2. Accept that an API-independent class (the mesh) needs a D3D11 device ptr to create its buffers. I really don't like this option btw.

I'm curious how you would solve this and/or if you could give me some 'pointers' on how to continue.

Btw, option 2 from the first list (the buffer manager) works for sure; I've already implemented it. It doesn't feel clean, though, because I cannot create the buffer through the constructor (because of the template function). It also has the disadvantage that a mesh has to know the IDs of its buffers in the manager. That means that when you 'kill' a buffer in the manager, you cannot reshuffle the std::vector, otherwise the buffer IDs stored in the mesh objects won't match up anymore. I already figured out that I can use a 'freelist' for this, so that the 'gaps' left in the vector by released IBuffers get filled up again.
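Roughly, the freelist idea could look like the sketch below. This is a simplified illustration under assumptions, not my real manager: `Buffer` is a dummy stand-in for IBuffer, and the names `BufferManager`, `Add`, `Release`, `Get` and `IsAlive` are made up for the example. Released slots are never erased from the vector; their index is pushed onto a freelist and handed out again on the next `Add`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Dummy stand-in for IBuffer; 'payload' replaces the real D3D11 buffer.
struct Buffer {
    int payload = 0;
};

using BufferId = std::uint32_t;

class BufferManager {
public:
    // Stores the buffer and returns an ID that stays valid until Release().
    BufferId Add(Buffer buffer) {
        BufferId id;
        if (!mFreeList.empty()) {
            // Reuse a gap left behind by an earlier Release().
            id = mFreeList.back();
            mFreeList.pop_back();
        } else {
            // No gaps available: grow the vector by one slot.
            id = static_cast<BufferId>(mSlots.size());
            mSlots.emplace_back();
        }
        mSlots[id].buffer = std::move(buffer);
        mSlots[id].alive = true;
        return id;
    }

    // Frees the slot without moving any other element, so all other IDs
    // held by meshes keep pointing at the right buffer.
    void Release(BufferId id) {
        assert(IsAlive(id));
        mSlots[id].buffer = Buffer();
        mSlots[id].alive = false;
        mFreeList.push_back(id);
    }

    bool IsAlive(BufferId id) const {
        return id < mSlots.size() && mSlots[id].alive;
    }

    Buffer& Get(BufferId id) {
        assert(IsAlive(id));
        return mSlots[id].buffer;
    }

    std::size_t SlotCount() const { return mSlots.size(); }

private:
    struct Slot {
        Buffer buffer;
        bool alive = false;
    };

    std::vector<Slot> mSlots;         // never reshuffled, only reused
    std::vector<BufferId> mFreeList;  // indices of released slots
};
```

One thing this sketch does not handle: once a slot is reused, a mesh that still holds the old ID silently gets the new buffer. A common remedy is to store a generation counter next to the index in the handle, but I haven't tried that yet.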

Last but not least, I could also consider some sort of 'factory' pattern, where IBuffers are returned. This would be in line with option 2 above.
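As a rough sketch of what I mean by a factory (all names here are hypothetical, and `FakeDevice` / `FakeBuffer` / `FakeBufferFactory` only stand in for the D3D11 side so the example compiles on its own): the mesh only ever sees the abstract `IBufferFactory` and `IBuffer`, while the concrete factory is the one holding the device pointer.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// API-independent buffer interface (here reduced to a single query).
class IBuffer {
public:
    virtual ~IBuffer() = default;
    virtual std::size_t ByteSize() const = 0;
};

// API-independent factory interface; the mesh only depends on this.
class IBufferFactory {
public:
    virtual ~IBufferFactory() = default;
    virtual std::unique_ptr<IBuffer> CreateBuffer(const void* data,
                                                  std::size_t byteSize) = 0;
};

// --- Backend side: stand-ins for the D3D11 implementation ---------------
struct FakeDevice {};  // placeholder for the D3D11 device

class FakeBuffer : public IBuffer {
public:
    explicit FakeBuffer(std::size_t byteSize) : mByteSize(byteSize) {}
    std::size_t ByteSize() const override { return mByteSize; }
private:
    std::size_t mByteSize;
};

class FakeBufferFactory : public IBufferFactory {
public:
    explicit FakeBufferFactory(FakeDevice* device) : mDevice(device) {}

    std::unique_ptr<IBuffer> CreateBuffer(const void* /*data*/,
                                          std::size_t byteSize) override {
        // A real backend would use mDevice to create the GPU buffer here.
        return std::unique_ptr<IBuffer>(new FakeBuffer(byteSize));
    }
private:
    FakeDevice* mDevice;  // the device never leaks into the mesh class
};

// --- API-independent mesh -----------------------------------------------
class Mesh {
public:
    Mesh(IBufferFactory& factory,
         const std::vector<float>& vertices,
         const std::vector<std::uint32_t>& indices)
        : mVtxBuffer(factory.CreateBuffer(vertices.data(),
                                          vertices.size() * sizeof(float))),
          mIndexBuffer(factory.CreateBuffer(indices.data(),
                                            indices.size() * sizeof(std::uint32_t))) {}

    const IBuffer& VertexBuffer() const { return *mVtxBuffer; }
    const IBuffer& IndexBuffer() const { return *mIndexBuffer; }

private:
    std::unique_ptr<IBuffer> mVtxBuffer;
    std::unique_ptr<IBuffer> mIndexBuffer;
};
```

This way the buffers can be created in the mesh constructor, without the mesh needing a D3D11 device ptr or public IBuffer members. Whether the factory returns owning pointers as shown, or IDs into a manager, is a separate choice.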

Any input is appreciated.