[font=arial]In this update I'll talk about the new interface for creating World instances, the World.Builder![/font]

[font=arial]dustArtemis[/font]

dustArtemis[font=arial] is a fork of [/font]Artemis Entity System[font=arial], which is a BSD-licenced small Java framework for setting up Entities,…

[font=arial]In this update I'll talk about the latest release of dustArtemis, which changes component handling a bit.[/font]

[font=arial]dustArtemis[/font]

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Compon…

[font=arial]In this update I'll talk about the latest features of dustArtemis: int-based entities and component pooling.[/font]

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Components a…

[font=arial]In this update I'll talk about the latest feature of dustArtemis: fixed-length bit sets for component tracking![/font]

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Component…

In this update: Entity pooling and refactoring EntityObservers.

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Components and Systems.[/font]

[font=arial]Entity pooling[/font]

Well, in an E…

[font=arial]Small update: moved the repo to GitHub, plus config files.[/font]

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Components and Systems.[/font]

[font=arial]GitHub[/font]

[font=ar…

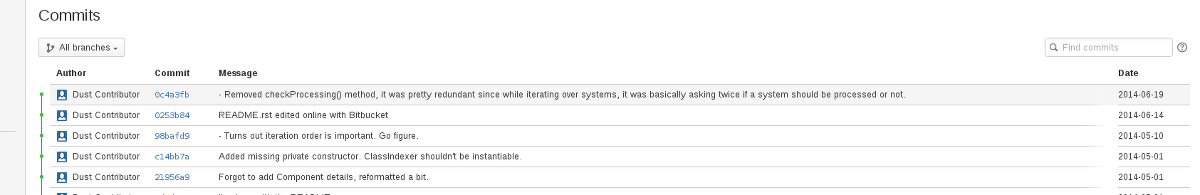

[font=arial]It's been a while since I updated this journal, though not because I didn't have anything to write about. So, a recap of the updates it is.[/font]

[font=arial]dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting u…

[font=verdana]Do you know on which results page dustArtemis appears if you search for "artemis framework" on Google? On absolutely none of them! So we're going to celebrate by talking about the ComponentManager class.[/font]

[font=verdana]dustArtemis is a fork of Arte…

Hi! In this entry I'm going to describe the ComponentManager class and the changes I made to it in dustArtemis, including a few bug fixes.

dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Components…

Hi! In this entry I'm going to review the latest changes in dustArtemis and share some thoughts on a potentially big performance issue.

dustArtemis is a fork of Artemis Entity System, which is a BSD-licenced small Java framework for setting up Entities, Components and System…

Hi! This is the first entry of a journal dedicated to dustArtemis, my fork of the Artemis Entity System framework, which I've been using for the pseudo-engine project I started this year.

dustArtemis is a …
