After what seems like an eternity (but in reality was about one year), we now have quite a bit of information about the HoloLens. Perhaps even more interesting is that we have a pretty good picture of what development for the device will look like. If you haven't seen it already, you can start looki…

It's always good practice to think about what you have done over the past year, and of course to think about what is coming in the next year. I have found this to be a good way to gain perspective on my various projects and activities, so I will indulge in some thinking out loud here.

2015

This …

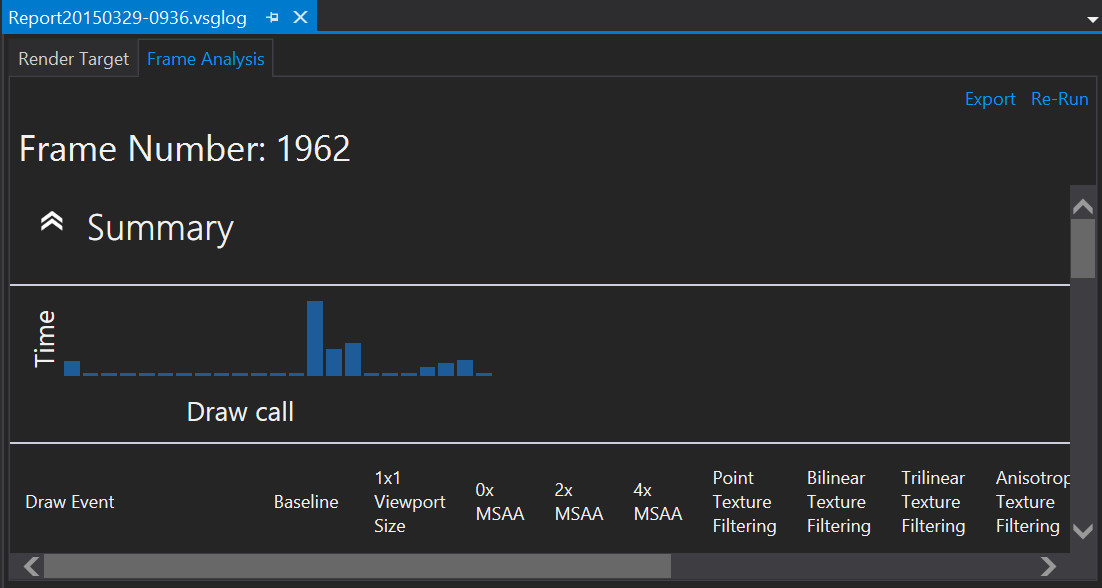

Way back when D3D9 was first released, graphics debugging and performance were a complete black art. It was really, really difficult to know what was going on, and when the graphical output wasn't quite what it was supposed to be, you really had to put on your detective hat and begin to deduce w…

I have a small confession to make: I have very rarely used STL algorithms, even though I know I should. There are lots of really great reasons why I should, and there are top C++ developers out there who tell you to use them whenever they apply, but I just don't feel 100% comfortable with them yet. With …

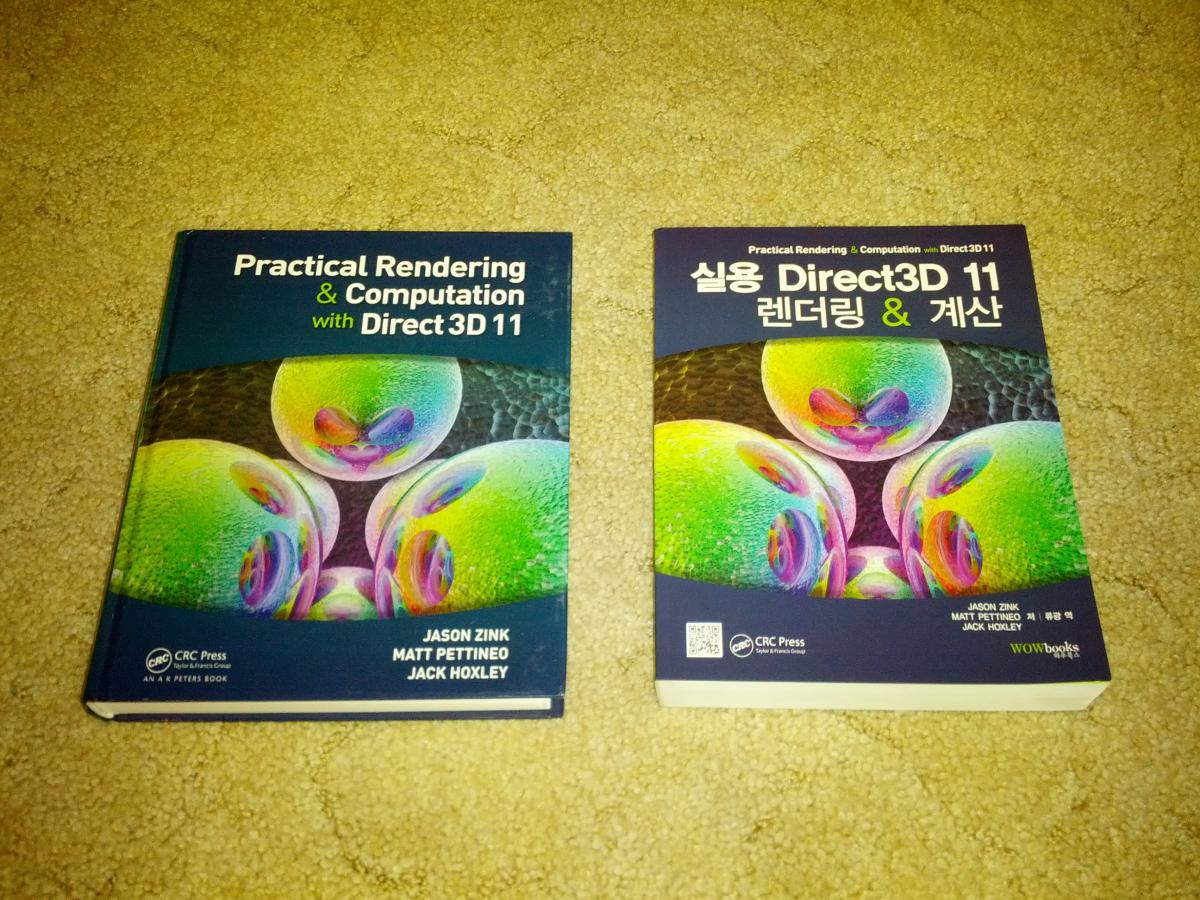

In general, I have always been interested in computer graphics. There are lots of different problems to solve, and if you like math/geometry then there really aren't many better ways to exercise your brain than working in this area. Once you know how to take geometry and project it onto an image, …

Last time around, I described the current data layout of my primary scene graph class - the Entity3D. After some thought and further research into what direction I want to take the engine, I decided to make a few changes. Let's start with the printout of the current layout - after my recent changes:[…

Just a short entry for a quick plug. In case you haven't heard of it yet, there is a very developer-focused event coming up this week from Microsoft. You can find all the details on the event page here: http://www.aka.ms/connect. There is some great stuff in store, so you won't be sorry to tune …

CppCon 2014

In case you haven't heard about it, all of the sessions from CppCon 2014 are being released on the CppCon YouTube Channel. This is a fantastic way for you to catch up on the latest and greatest things that people are doing with C++ (especially modern C++), and the price is about as good as …

Managing Dependencies

Hieroglyph 3 has two primary dependencies - Lua and DirectXTK. Lua is used to provide simple scripting support, while DirectXTK is used for loading textures. Both of these libraries are included in the Hieroglyph 3 repository in source form. This allows for easy building wit…

Building Hieroglyph 3

Hieroglyph 3 has always had an 'SDK' folder where the engine static library is built in its various configuration and platform incarnations, and the include (*.h, *.inl) files are copied to an include folder. This lets a user of the engine have an easy way to build the engi…

Over the years, I have relied on the trusty old Milkshape3D file format for getting my 3D meshes into my engine. When I first started out in 3D programming, I didn't have a lot of cash to pick up one of the heavy-duty modeling tools, so I shelled out the $20 for Milkshape and used that for most of…

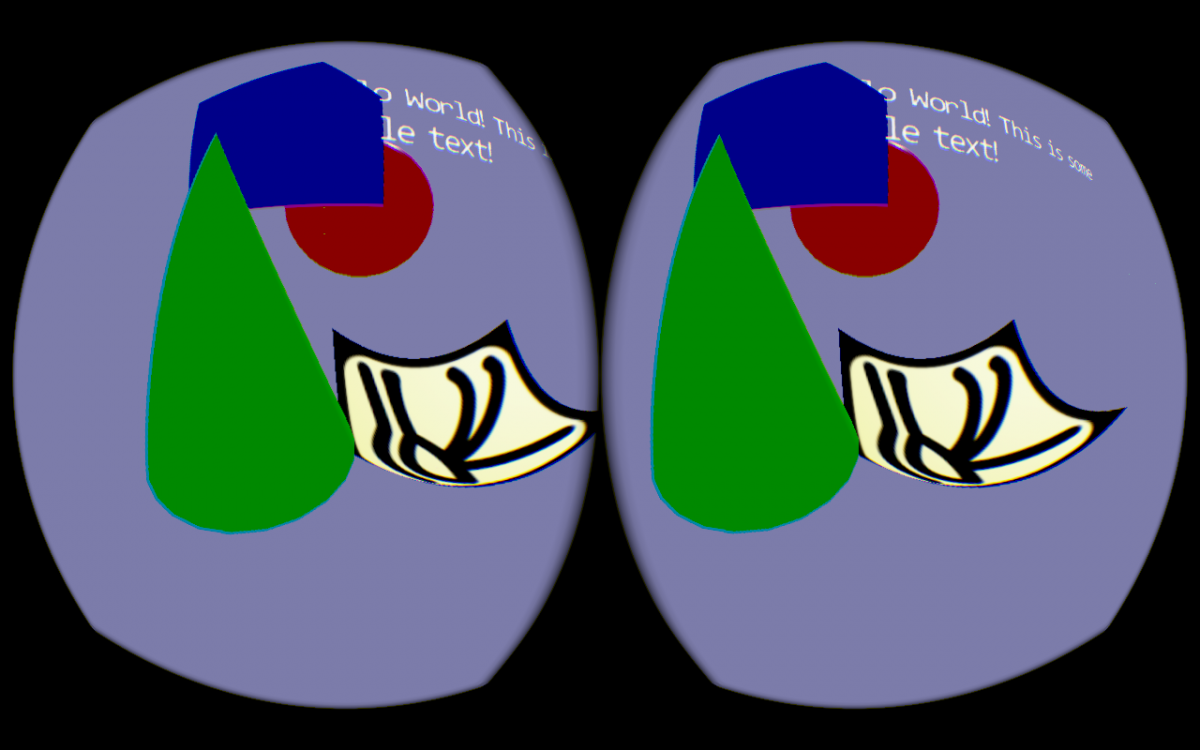

I have recently been adding support to Hieroglyph 3 for the Oculus Rift. This post is going to discuss the process a little bit, and how the design of the Hieroglyph 3 engine ended up providing a hassle-free option for adding Rift interaction to an application. Here's the first screenshot of the…

I recently picked up a copy of the book "Developing Microsoft Media Foundation Applications" by Anton Polinger. I have been interested in adding some video capture and playback to my rendering framework, and finally got a chance to get started on the book.

What I immediately found inter…

Introduction

At the BUILD 2014 conference, Max McMullen provided an overview of some of the changes coming in Direct3D 12. In case you missed it, take a look at it here. In general, I really enjoy checking out the API changes that are made with each iteration of D3D, so I wanted to take a short br…

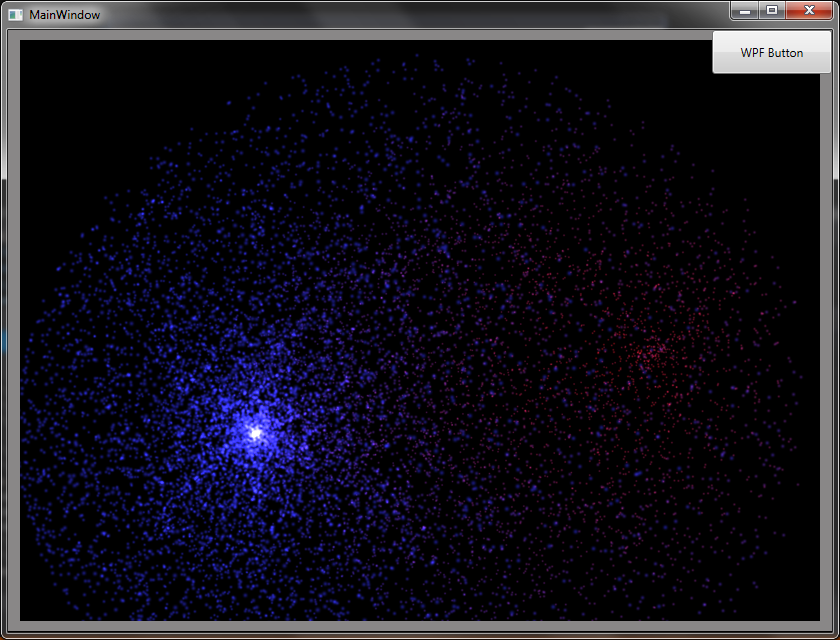

As I mentioned in my last entry, I am in the process of evaluating a number of different UI frameworks into which Direct3D 11 can be integrated. This is mostly for editor-style applications, and also an excuse for me to learn some other frameworks. The last few commits to the Hieroglyph 3 co…

Introduction

Lately I have found myself looking for an easy (or easier) way to get some native D3D11 code to play nicely with a user interface framework. Way back when I first started out writing Hieroglyph, I had the basic idea that I could just make my own user interface in the engine. I think m…

As the title implies, I have been thinking quite a bit more about object lifetime management models and how they will be used in Hieroglyph 4. In the past (i.e. Hieroglyph 3), I more or less used heap-allocated objects by default, and generally didn't use stack allocation all that much.…

I found out today that I have indeed been re-awarded as a Visual C++ MVP this year :) That makes five years running, and I'm really happy that I will have the chance to continue on. There are literally tons of different concepts in modern C++ that I want to start writing about again, so we will ha…

Going Native - The Conference

This post has two purposes, both related to its title. First and foremost, today through Friday the 'Going Native 2013' conference is happening. If you aren't familiar with it, this is a C++ conference held by Microsoft in Redmond and it is devoted to all things C++. …

I am sure you have heard by now about the new BUILD 2013 conference and all of the goodies that were presented there. Of special interest to the graphics programmer are the new features defined in the Direct3D 11.2 specification level. I watched the "What's New in Direct3D 11.2" presentation, whi…

I just received my copy of GPU Pro 4 today, which was a nice surprise. I had contributed a chapter on Kinect Programming with Direct3D 11, and it is really nice to see it in print. And of course, there are also lots of other interesting articles that I have been digging through as well.

In general,…

Last time I discussed how I am currently using pipeline state monitoring to minimize the number of API calls that are submitted to the Direct3D runtime/driver. Some of you were wondering if this is a worthwhile thing to try out, and were interested in some empirical test results to see what the di…

My last couple of commits to Hieroglyph 3 addressed a performance issue that most likely all graphics programmers who have made it beyond the basics have grappled with: Pipeline State Monitoring. This is the system used to ensure that your engine only submits the API calls that are really necessa…
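
To give a rough idea of what state monitoring can look like, here is a minimal sketch. It is not the Hieroglyph 3 implementation: `FakeDevice`, `ResourceId`, and `TextureStateMonitor` are hypothetical names invented for illustration, and the fake device simply counts calls instead of talking to Direct3D. The monitor remembers what was last submitted for each slot and forwards only the smallest contiguous range of slots that actually changed.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for a GPU resource handle (not a Direct3D type).
using ResourceId = std::uint32_t;

// Hypothetical stand-in for the real API; it only counts the calls it receives.
struct FakeDevice {
    int callsSubmitted = 0;
    void SetTextures(std::size_t /*startSlot*/, std::size_t /*count*/,
                     const ResourceId* /*ids*/) {
        ++callsSubmitted;
    }
};

// Tracks the desired and the last-submitted binding for N texture slots.
template <std::size_t N>
class TextureStateMonitor {
public:
    // Record what the application wants bound; nothing is submitted yet.
    void Set(std::size_t slot, ResourceId id) {
        assert(slot < N);
        desired_[slot] = id;
    }

    // Submit the smallest contiguous range of slots that differ from what
    // the device already has. Returns the number of slots sent (0 if none).
    std::size_t Apply(FakeDevice& device) {
        std::size_t first = N;
        std::size_t last = 0;
        for (std::size_t i = 0; i < N; ++i) {
            if (desired_[i] != current_[i]) {
                if (first == N) first = i;
                last = i;
            }
        }
        if (first == N) return 0;  // nothing changed, so no API call at all

        const std::size_t count = last - first + 1;
        device.SetTextures(first, count, &desired_[first]);
        for (std::size_t i = first; i <= last; ++i) current_[i] = desired_[i];
        return count;
    }

private:
    std::array<ResourceId, N> desired_{};  // what the application asked for
    std::array<ResourceId, N> current_{};  // what was last sent to the device
};
```

With this sketch, setting slots 2 and 4 and calling `Apply` produces a single call covering slots 2 through 4, and calling `Apply` again with the same state produces no call. Whether the bookkeeping pays for itself depends on how expensive the redundant API calls are, which has to be measured.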

The most recent commit to the Hieroglyph 3 repository has reduced my usage of the simple event system in the engine. I think the reasons behind this can apply to others, so I wanted to make a post here about it and try to share my experiences with the community.

A long time ago, I added an event ma…

As I alluded to last time around, one of the new features that I have been working on lately is Glyphlets. These little guys are basically an abstraction of an application, and only provide the bare minimum functionality that an app requires. The simplicity of the interface is demonstrated by the…

My goodness, how time flies... The past year has been a flurry of activity, with many major aspects of my life being tumbled around and changed up. I successfully moved back from Germany in the March timeframe, and was living in a temporary situation with my in-laws while we found a permanent hou…

Lately I have been preparing Hieroglyph 3 for the switch to VS2012, and also expanding it to multiple platforms (i.e. Win7 desktop, Win8 desktop, Windows Store Apps, etc...). These are important tasks, but aren't very exciting at all... So as a side project, I implemented the first compon…

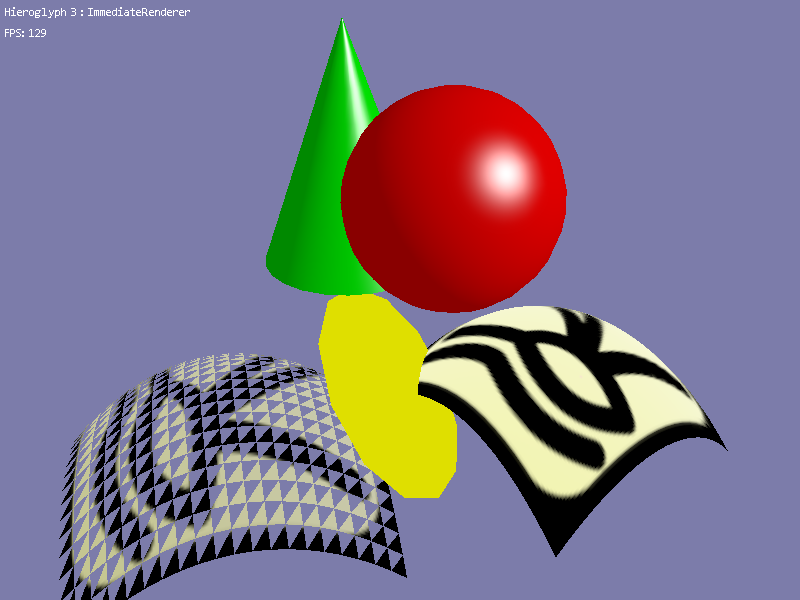

Last time, I described a few of the new 'immediate' style rendering classes that I have recently added to Hieroglyph 3. Since then, I have refactored some of the common code used in these classes into a handy set of template classes. I have found them very useful already, so I thought I would des…

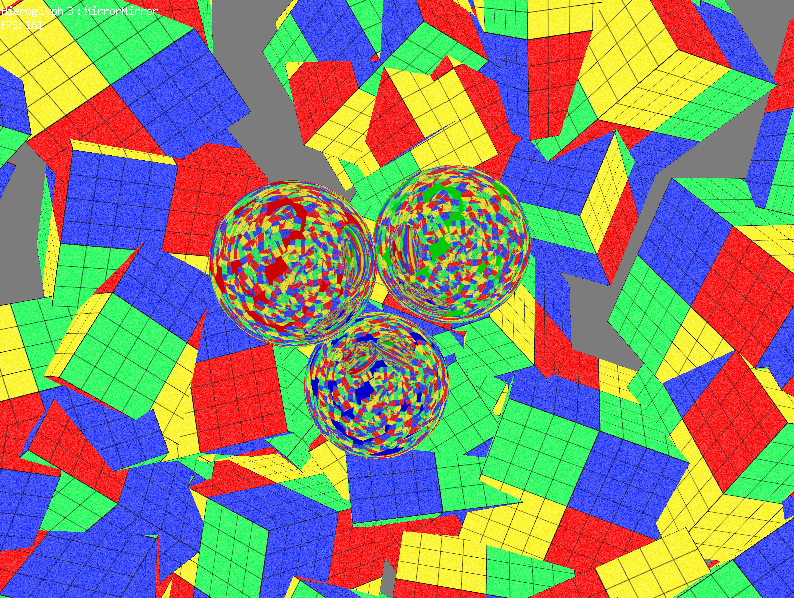

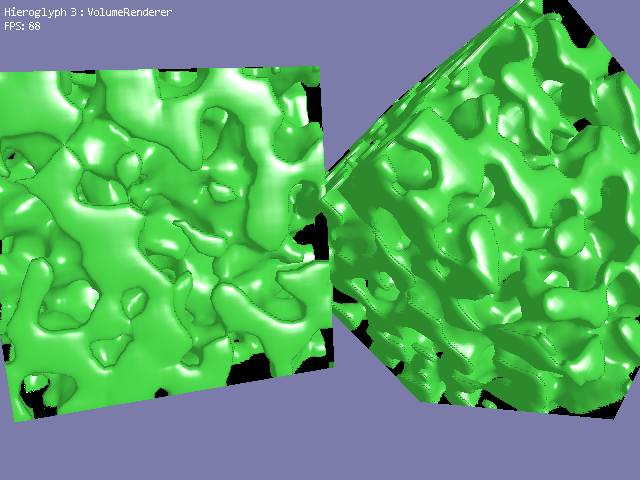

Over the past few commits to the Hieroglyph 3 repository, I have been building up some generalized geometry classes in an effort to make working with customized pipeline input forms easier. This has resulted in a few classes that specialize the PipelineExecutorDX11 class, which is responsible for…
