My skeleton's design gave me an easy way to move a point "with" a given bone. Each bone has its own Axes, which is always aligned with the bone. In the skeleton it is called "EndAxes", because it is the set of axes around which all child bones get rotated.

The Axes is literally that, an Axes class. I wrote it with some features useful specifically for this type of work. The two functions used here are called getGlobalPointFromLocalPoint and getLocalPointFromGlobalPoint.

They're fairly simple functions that look like this:

    // Local -> global: scale each axis by the matching local coordinate and sum.
    Vec3f Axes::getGlobalPointFromLocalPoint(Vec3f point)
    {
        Vec3f res(0.0f, 0.0f, 0.0f);
        res = x_axis*point.x + y_axis*point.y + z_axis*point.z;
        return res;
    }

    // Global -> local: project the point onto each axis with a dot product.
    Vec3f Axes::getLocalPointFromGlobalPoint(Vec3f point)
    {
        Vec3f res(0.0f, 0.0f, 0.0f);
        res.x = point.dot(x_axis);
        res.y = point.dot(y_axis);
        res.z = point.dot(z_axis);
        return res;
    }

Getting a global point from a local point is easy: take each of the local point's coordinates, multiply it by the corresponding axis of the Axes, and add the results up.

Doing the reverse is a matter of projecting the global point onto each of the axes with a dot product. This assumes the three axes are unit length and perpendicular to each other.

Note that x_axis, y_axis and z_axis are all of type Vec3f, a self-written vector class that behaves as you'd expect.

My Axes class also doesn't store an origin. It assumes that if you pass in a point, it has already been translated as though the Axes are at the origin. This allows for the class to remain fairly generic, and since translation is just a matter of adding two vectors, it's hardly a difficulty.

So, my idea was to load a model and associate each point in the model with a bone. I store the point's coordinates relative to that bone's Axes, using getLocalPointFromGlobalPoint(..). Then, after the bone has changed, I simply call getGlobalPointFromLocalPoint(..) with the stored relative coordinates.

This works out fine, and the math isn't too costly either: just multiplications and additions.
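To make the store-then-restore idea concrete, here is a minimal sketch. The Vec3f and Axes definitions are stand-ins that only mirror the functions shown earlier, and the Bone struct with its origin field, as well as the bindVertex and skinVertex helpers, are hypothetical names invented for this example. Since the Axes class stores no origin, the translation is done by hand before and after each call.

```cpp
// Stand-in for the self-written vector class (assumed layout).
struct Vec3f {
    float x, y, z;
    Vec3f(float x_ = 0.0f, float y_ = 0.0f, float z_ = 0.0f) : x(x_), y(y_), z(z_) {}
    Vec3f operator+(const Vec3f& o) const { return Vec3f(x + o.x, y + o.y, z + o.z); }
    Vec3f operator-(const Vec3f& o) const { return Vec3f(x - o.x, y - o.y, z - o.z); }
    Vec3f operator*(float s) const { return Vec3f(x * s, y * s, z * s); }
    float dot(const Vec3f& o) const { return x * o.x + y * o.y + z * o.z; }
};

// Stand-in for the Axes class; the axes are assumed to be orthonormal.
struct Axes {
    Vec3f x_axis{1.0f, 0.0f, 0.0f};
    Vec3f y_axis{0.0f, 1.0f, 0.0f};
    Vec3f z_axis{0.0f, 0.0f, 1.0f};

    Vec3f getGlobalPointFromLocalPoint(const Vec3f& p) const {
        return x_axis * p.x + y_axis * p.y + z_axis * p.z;
    }
    Vec3f getLocalPointFromGlobalPoint(const Vec3f& p) const {
        return Vec3f(p.dot(x_axis), p.dot(y_axis), p.dot(z_axis));
    }
};

// Hypothetical bone: the origin field is an assumption of this sketch,
// because the Axes class itself does not store one.
struct Bone {
    Axes  endAxes;
    Vec3f origin;
};

// At load time: translate so the Axes sit at the origin, then go local.
Vec3f bindVertex(const Bone& bone, const Vec3f& globalVertex) {
    return bone.endAxes.getLocalPointFromGlobalPoint(globalVertex - bone.origin);
}

// After the bone has changed: go back to global, then translate back.
Vec3f skinVertex(const Bone& bone, const Vec3f& localVertex) {
    return bone.endAxes.getGlobalPointFromLocalPoint(localVertex) + bone.origin;
}
```

In use, bindVertex would be called once per vertex when the model is loaded, and skinVertex every time the bone's axes or origin change.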

[size="4"]The Vertex-Bone automatic attachment problem

The main problem is deciding which bones to associate with which vertex. I posted on the forums here, and I got linked to an interesting article: http://blog.wolfire....usion-skinning/

The described method fills the model with voxels and then, for each vertex, attaches the bones that are closest to the vertex along a path through the filled-in voxels. The method works fine, and could be used as a general purpose attachment method. It successfully overcomes the problem that certain vertices are close to bones which they aren't actually supposed to be attached to.

Unfortunately, it also requires what seems to me like a lot of extra work: an algorithm to correctly fill the model with voxels. According to the article, the method also took about a minute to run on the CPU (though the author does mention that it should be much faster on the GPU).

Discarding the idea of a completely general purpose method, I decided to incorporate some extra knowledge about the skeleton structure I had.

The first simple step was to build a connectivity table. For each bone, I defined which bones are connected to it, and which one is connected at which end. For example, the R_FOREARM bone is connected to the R_HAND bone and the R_ARM bone, but the R_ARM bone is connected to the start of the R_FOREARM bone, while the R_HAND bone is connected to its end. Note that while this method now requires extra information, that information isn't hard to enter, and it could be generated automatically from an existing bone structure. This means there is some hope that this could be applied as a general purpose algorithm.
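As an illustration of what such a table could look like, here is a small sketch. Only the three bone names come from the example above. The BoneId enum, the BoneLinks struct and buildConnectivityTable are hypothetical, and the reverse entries (the forearm sitting at the end of the arm and at the start of the hand) are my assumption about how the chain is oriented.

```cpp
#include <vector>

// Hypothetical bone IDs; a real skeleton would list every bone here.
enum BoneId { R_ARM, R_FOREARM, R_HAND, BONE_COUNT };

// The bones connected to one bone, split by the end they are connected at.
struct BoneLinks {
    std::vector<BoneId> atStart;  // bones connected at the start of this bone
    std::vector<BoneId> atEnd;    // bones connected at the end of this bone
};

// Builds the table by hand, indexed by BoneId.
std::vector<BoneLinks> buildConnectivityTable() {
    std::vector<BoneLinks> table(BONE_COUNT);

    // From the text: R_ARM is at the start of R_FOREARM, R_HAND at its end.
    table[R_FOREARM].atStart.push_back(R_ARM);
    table[R_FOREARM].atEnd.push_back(R_HAND);

    // Assumed reverse entries for the neighbouring bones.
    table[R_ARM].atEnd.push_back(R_FOREARM);
    table[R_HAND].atStart.push_back(R_FOREARM);

    return table;
}
```

Looking up table[R_FOREARM].atEnd then gives the bones a vertex near the wrist end of the forearm may also be attached to.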

The actual vertex-bone attachment runs in two loops. The first loop simply picks the bone closest to the vertex and calls it the "parent" bone. It's because of this that the arms of the skeleton start off outstretched. Again, a minor requirement, but certainly not a difficult one. The pseudo code is fairly simple:

    For each Vertex in the Model
        For each Bone in the Skeleton
            If CurrentBone is closer than CurrentMinDistance
                ParentBone = CurrentBone; CurrentMinDistance = distance to CurrentBone

Note: I'm not going to post the actual code because the indexing makes it a little hard to follow, but the code should be available on my site for download soon.
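This is not the actual code mentioned above, but a minimal sketch of the first loop might look like the following. The Bone struct (a bone as a line segment from start to end), distanceToBone and findParentBones are hypothetical names, and measuring "closest" as the distance to the nearest point on the segment is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3f { float x, y, z; };

// Hypothetical bone representation: a segment from start to end.
struct Bone { Vec3f start, end; };

static Vec3f sub(const Vec3f& a, const Vec3f& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(const Vec3f& a, const Vec3f& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Distance from point p to the nearest point on the bone's segment.
float distanceToBone(const Vec3f& p, const Bone& b) {
    Vec3f d = sub(b.end, b.start);
    float len2 = dot(d, d);
    // Parameter of the projection along the bone, clamped to the segment.
    float t = (len2 > 0.0f) ? dot(sub(p, b.start), d) / len2 : 0.0f;
    t = std::max(0.0f, std::min(1.0f, t));
    Vec3f closest = {b.start.x + d.x * t, b.start.y + d.y * t, b.start.z + d.z * t};
    Vec3f diff = sub(p, closest);
    return std::sqrt(dot(diff, diff));
}

// For every vertex, returns the index of its closest ("parent") bone.
std::vector<std::size_t> findParentBones(const std::vector<Vec3f>& vertices,
                                         const std::vector<Bone>& bones) {
    std::vector<std::size_t> parents(vertices.size(), 0);
    for (std::size_t v = 0; v < vertices.size(); ++v) {
        float minDistance = std::numeric_limits<float>::max();
        for (std::size_t b = 0; b < bones.size(); ++b) {
            float d = distanceToBone(vertices[v], bones[b]);
            if (d < minDistance) {
                minDistance = d;
                parents[v] = b;
            }
        }
    }
    return parents;
}
```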

However, only attaching the vertex to one bone doesn't provide for good animation. Each vertex needs to be attached to multiple bones, and the attachments need to be weighted, for good looking results.

This is why after figuring out the parent bone, I use the bone connectivity information stored in the table to find out which other bones the vertex may be attached to. The pseudo code looks like this:

For each Vertex in the Model

{

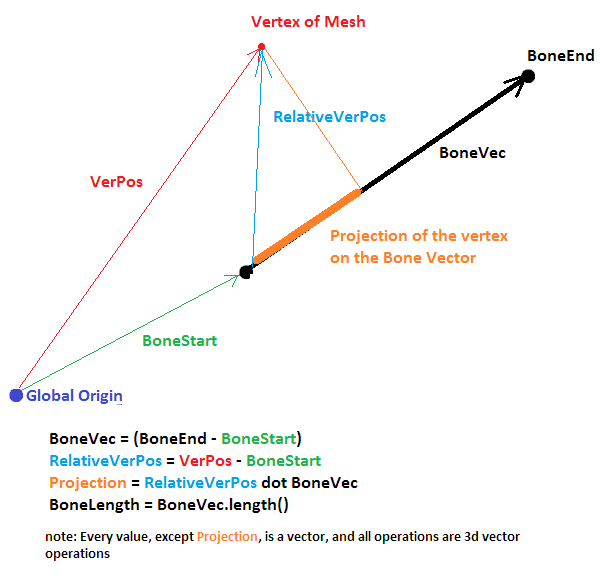

Get the Projected length of the CurrentVertex onto the ParentBone's vector (the vector from the Bone's start to the Bone's end)

If (Projected length / ParentBone's length is within a ratio [L,U])

{

The vertex is ONLY attached to the parent bone, so we add the ParentBone to the list of attached bones, with weight of 1

}

else

{

Add The ParentBone to the Vertex's Bone attachment list, with weight 1/distance to center of ParentBone

Determine which end of the ParentBone is closer to the Vertex

With that information, obtain a list of other bones this vertex is attached to from the ConnectivityTable

For Every Connected Bone

{

Add the Bone to the Vertex's Bone attachment list,with eight 1/distance to center of Bone

}

Normalize all the weights (so that they add up to 1, but keeping their ratios)

}

}

Obtaining the projection of the Vertex on the Bone vector is a crucial part, and since the code didn't explain it too well, here's a diagram:

The bounds for the ratio [L, U] consist of two numbers, normally within the range 0 to 1. Inside those bounds a vertex gets a "hard" attachment to the parent bone only. The bounds can also differ from bone to bone: for example, I wanted almost all the vertices of the head to be "hard" attached to the head bone, so the bounds for the Head were [0, 1].

The other important thing to note is that I load my character model in parts. I do this because it makes it easy to interchange parts, for example to swap in a different pair of pants.
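The second pass for a single vertex can be sketched as follows. Everything here is a hypothetical illustration rather than my actual code: the Bone layout with its lower and upper bounds, the Attachment struct, and the way the connected bones are passed in as two index lists are all assumptions. The small epsilon that guards against dividing by zero is also an addition of this sketch.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3f { float x, y, z; };

// Hypothetical bone: a segment plus its per-bone ratio bounds [L, U].
struct Bone { Vec3f start, end; float lower, upper; };

// One entry of a vertex's bone attachment list.
struct Attachment { std::size_t bone; float weight; };

static Vec3f sub(const Vec3f& a, const Vec3f& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(const Vec3f& a, const Vec3f& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Projected length divided by bone length: 0 at the start, 1 at the end.
float projectionRatio(const Vec3f& p, const Bone& b) {
    Vec3f d = sub(b.end, b.start);
    return dot(sub(p, b.start), d) / dot(d, d);
}

// Weight of 1 / distance to the center of the bone.
float inverseDistanceToCenter(const Vec3f& p, const Bone& b) {
    Vec3f c = {(b.start.x + b.end.x) * 0.5f, (b.start.y + b.end.y) * 0.5f,
               (b.start.z + b.end.z) * 0.5f};
    Vec3f diff = sub(p, c);
    return 1.0f / std::max(std::sqrt(dot(diff, diff)), 1e-6f);
}

// startLinks / endLinks: indices of the bones connected at each end of the parent.
std::vector<Attachment> attachVertex(const Vec3f& p, const std::vector<Bone>& bones,
                                     std::size_t parent,
                                     const std::vector<std::size_t>& startLinks,
                                     const std::vector<std::size_t>& endLinks) {
    const Bone& parentBone = bones[parent];
    float ratio = projectionRatio(p, parentBone);

    // Inside [L, U]: "hard" attachment to the parent bone only.
    if (ratio >= parentBone.lower && ratio <= parentBone.upper)
        return std::vector<Attachment>(1, Attachment{parent, 1.0f});

    std::vector<Attachment> result;
    result.push_back(Attachment{parent, inverseDistanceToCenter(p, parentBone)});

    // A ratio below 0.5 means the start of the parent bone is the closer end.
    const std::vector<std::size_t>& links = (ratio < 0.5f) ? startLinks : endLinks;
    for (std::size_t b : links)
        result.push_back(Attachment{b, inverseDistanceToCenter(p, bones[b])});

    // Normalize so the weights add up to 1 while keeping their ratios.
    float total = 0.0f;
    for (const Attachment& a : result) total += a.weight;
    for (Attachment& a : result) a.weight /= total;
    return result;
}
```

The deformed position of a vertex would then be the weighted sum of the positions obtained from each attached bone.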

[size="4"]Some Results:

The above algorithm guarantees that if two vertices are at the same position at design time, then they will also move together in any animation. This guarantees that even if the model is loaded in different parts, it will still look like a single model.

The algorithm is also very quick: for a model of ~3000 vertices it ran in ~70 milliseconds, meaning I had no real need to store the vertex-bone attachment information, because generating it was cheap. The algorithm runs in linear time, because the size of the bone list is a constant, and the only variable is the number of vertices in the model.

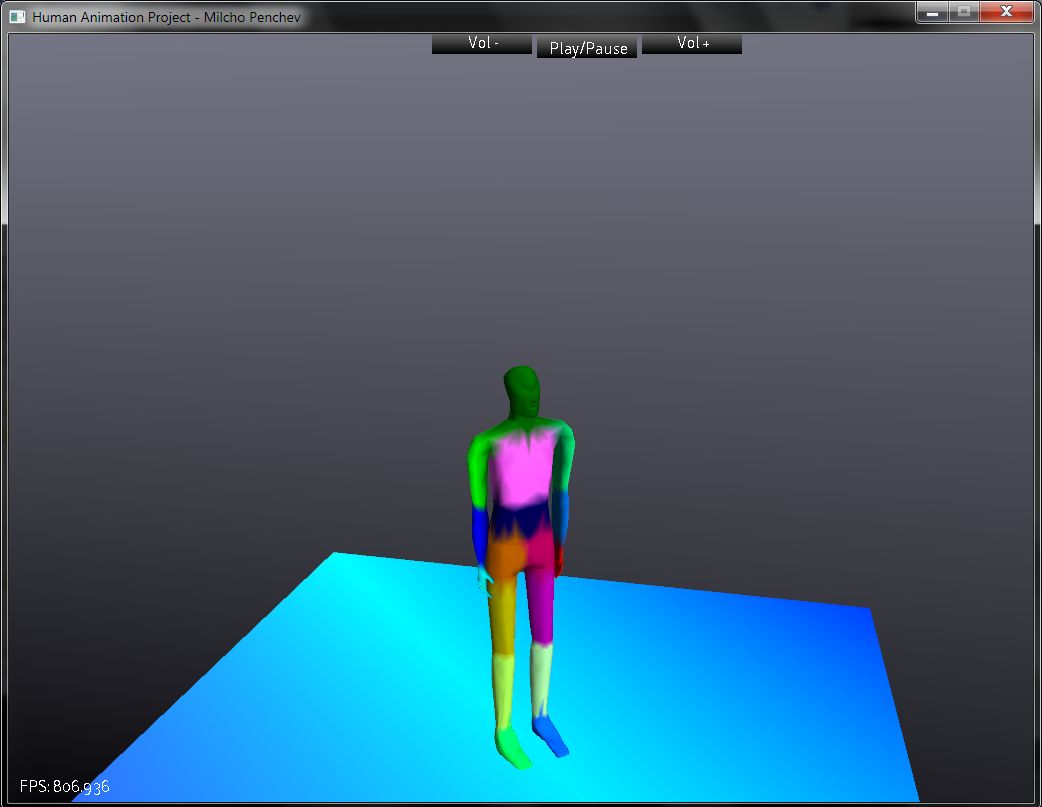

Here's a screenshot of the model with each vertex colored per parent bone:

And here's a video of a two animations in progress:

[media]

[/media]

That covers my goal of animating the model. My .OBJ loader doesn't load textures yet, but that's completely unrelated to model animation, and is not a top priority right now.

I've already started working on terrain generation, and I'll post some quick results soon!